Scene Flow Estimation Method Based on Local Rigidity Assumption in RGBD Video

A scene flow technology for video, applied in computing, computer components, image analysis and related fields. It addresses scene flow estimation errors in local regions and inaccurate scene flow estimation over the global region, and improves estimation accuracy.
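The title's local rigidity assumption suggests that points within a small region move together as one rigid body. The source does not say how this assumption is enforced. The following is a minimal sketch under that assumption. It takes corresponding 3D points `p` (frame 1) and `q` (frame 2) from one local region, fits a least-squares rotation and translation with the Kabsch algorithm, and replaces the per-point motion with the motion implied by that rigid fit. It uses NumPy, and the function names are hypothetical.

```python
import numpy as np

def fit_rigid(p, q):
    """Least-squares rotation R and translation t with q ~= p @ R.T + t
    (Kabsch algorithm). p and q are (N, 3) arrays of corresponding points."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    pc, qc = p.mean(axis=0), q.mean(axis=0)
    h = (p - pc).T @ (q - qc)               # 3x3 cross-covariance of centred points
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # -1 would indicate a reflection
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = qc - r @ pc
    return r, t

def rigid_scene_flow(p, q):
    """Hypothetical local-rigidity step: replace the per-point flow q - p of one
    region with the flow implied by the region's best-fit rigid motion."""
    r, t = fit_rigid(p, q)
    p = np.asarray(p, dtype=float)
    return p @ r.T + t - p
```

In the method's setting, a "region" could be one depth layer or segment. That pairing is an assumption: the rigid fit smooths noisy per-pixel flow inside the region while still letting different regions move independently.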


Embodiment

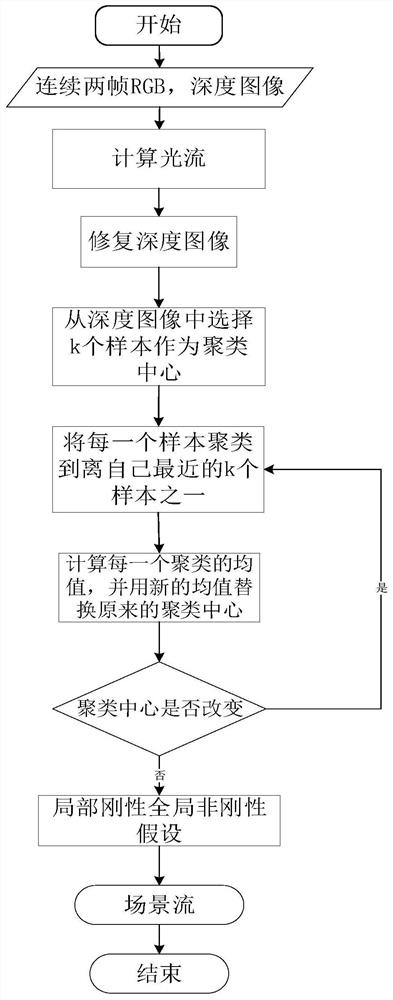

[0109] The implementation of the scene flow estimation method based on the local rigidity assumption in RGBD video is illustrated by a worked example on a set of simulated data.

[0110] (1) First, execute step 1: collect two consecutive frames of RGB and depth images, then calculate the optical flow from the two consecutive RGB frames. Figure 4 shows the two consecutive frames of original RGB and depth images. Figure 5 shows the optical flow maps from RGB image 1 to RGB image 2 and from RGB image 2 to RGB image 1, both calculated from the two RGB frames;

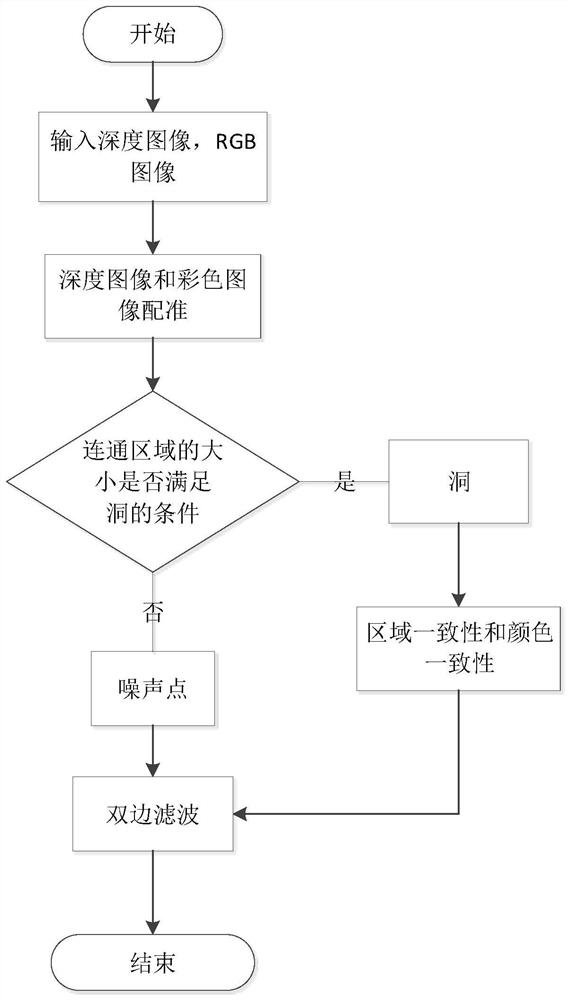
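Step 1 computes optical flow in both directions. The source does not say how the two flow fields are used. One common use, assumed here, is a forward-backward consistency check, after which the 2D flow can be lifted to 3D scene flow with the two depth maps. The sketch below assumes a pinhole camera with hypothetical intrinsics `(fx, fy, cx, cy)`, flow stored as `flow[v][u] = (du, dv)`, and zero depth marking a missing measurement. None of these conventions come from the source.

```python
def back_project(u, v, z, fx, fy, cx, cy):
    """Lift pixel (u, v) with depth z to a 3D point in camera coordinates
    (assumed pinhole model)."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)

def flow_is_consistent(fwd, bwd, u, v, tol=1.0):
    """Forward-backward check: following fwd from (u, v), then bwd from the
    nearest landing pixel, should return close to (u, v)."""
    du, dv = fwd[v][u]
    u2, v2 = round(u + du), round(v + dv)
    h, w = len(bwd), len(bwd[0])
    if not (0 <= u2 < w and 0 <= v2 < h):
        return False  # flow leaves the image
    bu, bv = bwd[v2][u2]
    err = ((du + bu) ** 2 + (dv + bv) ** 2) ** 0.5
    return err <= tol

def scene_flow_at(u, v, fwd, depth1, depth2, intr):
    """3D displacement of the point seen at (u, v) in frame 1, or None when
    the flow leaves the image or either depth is missing."""
    fx, fy, cx, cy = intr
    du, dv = fwd[v][u]
    u2, v2 = round(u + du), round(v + dv)
    h, w = len(depth2), len(depth2[0])
    if not (0 <= u2 < w and 0 <= v2 < h):
        return None
    z1, z2 = depth1[v][u], depth2[v2][u2]
    if z1 <= 0 or z2 <= 0:  # zero depth marks a missing measurement
        return None
    p1 = back_project(u, v, z1, fx, fy, cx, cy)
    p2 = back_project(u + du, v + dv, z2, fx, fy, cx, cy)
    return tuple(b - a for a, b in zip(p1, p2))
```

For example, with a uniform one-pixel rightward flow, constant depth 2.0 and `intr = (1.0, 1.0, 1.0, 1.0)`, `scene_flow_at(0, 0, ...)` gives a displacement of 2.0 along x and none along y or z.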

[0111] (2) Execute step 2 to obtain the repaired depth images. Figure 6 shows the two frames of depth images repaired according to the corresponding RGB image information.

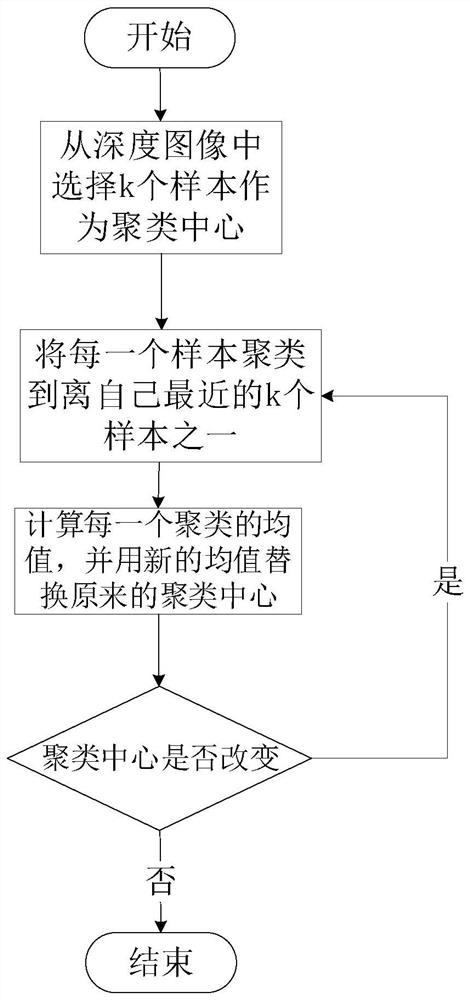
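The source states only that each depth image is repaired using the corresponding RGB information. One possible approach, assumed here rather than taken from the patent, is a joint-bilateral-style hole fill. Each missing depth pixel (depth 0) takes a weighted average of its measured neighbors, where the weights fall off with spatial distance and with RGB color difference. The function name and the parameters `radius`, `sigma_s` and `sigma_c` are hypothetical.

```python
import math

def fill_depth_holes(depth, rgb, radius=2, sigma_s=1.5, sigma_c=20.0):
    """Fill zero-valued depth pixels with a color-weighted average of measured
    neighbors in a (2*radius+1)^2 window. Measured pixels are left unchanged;
    holes with no measured neighbor stay 0."""
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for y in range(h):
        for x in range(w):
            if depth[y][x] > 0:
                continue  # keep measured depth untouched
            num = den = 0.0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    z = depth[yy][xx]
                    if z <= 0:
                        continue  # only measured neighbors contribute
                    ds2 = (yy - y) ** 2 + (xx - x) ** 2
                    dc2 = sum((a - b) ** 2 for a, b in zip(rgb[y][x], rgb[yy][xx]))
                    wgt = math.exp(-ds2 / (2 * sigma_s ** 2) - dc2 / (2 * sigma_c ** 2))
                    num += wgt * z
                    den += wgt
            if den > 0:
                out[y][x] = num / den
    return out
```

Weighting by color lets a hole borrow depth mainly from neighbors on the same surface, so fills tend not to blur across object boundaries that are visible in the RGB image.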

[0112] (3) Execute step 3 to obtain the layering information of the depth image, and the segmentation result of the depth imag...
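Step 3 splits the depth image into layers, but the text is truncated before the segmentation method is described. As an illustrative stand-in only, the sketch below groups valid depth values into `k` layers with one-dimensional k-means. `layer_depth`, `k` and `iters` are hypothetical names, and the patent may use a different segmentation.

```python
def layer_depth(depth, k=2, iters=20):
    """Assign each valid depth pixel to one of k depth layers via 1-D k-means.
    Returns a label map (-1 where depth is missing) and the sorted layer centers."""
    vals = sorted(z for row in depth for z in row if z > 0)
    if not vals:
        return [[-1] * len(row) for row in depth], []
    # Initialize centers at evenly spaced positions in the sorted valid depths.
    centers = [vals[int(i * (len(vals) - 1) / max(k - 1, 1))] for i in range(k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for z in vals:
            j = min(range(k), key=lambda c: abs(z - centers[c]))
            groups[j].append(z)
        # Empty groups keep their previous center.
        new = [sum(g) / len(g) if g else centers[i] for i, g in enumerate(groups)]
        if new == centers:
            break  # assignments have converged
        centers = new
    centers = sorted(centers)  # layer 0 is the nearest
    labels = [[-1 if z <= 0 else min(range(k), key=lambda c: abs(z - centers[c]))
               for z in row] for row in depth]
    return labels, centers
```

For instance, a map with depths near 1.0 and near 4.1 splits into a near layer (label 0) and a far layer (label 1). Missing pixels keep the label -1.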
