Image labeling method based on convolutional neural network and binary coding features

The method combines a convolutional neural network with binary coding and is applied in the field of visual images. It addresses the problem that a single label cannot fully describe an image, and it achieves low cost, high speed, and high efficiency.

Embodiment Construction

[0034] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments, so that those skilled in the art can better understand the present invention and implement it, but the examples given are not intended to limit the present invention.

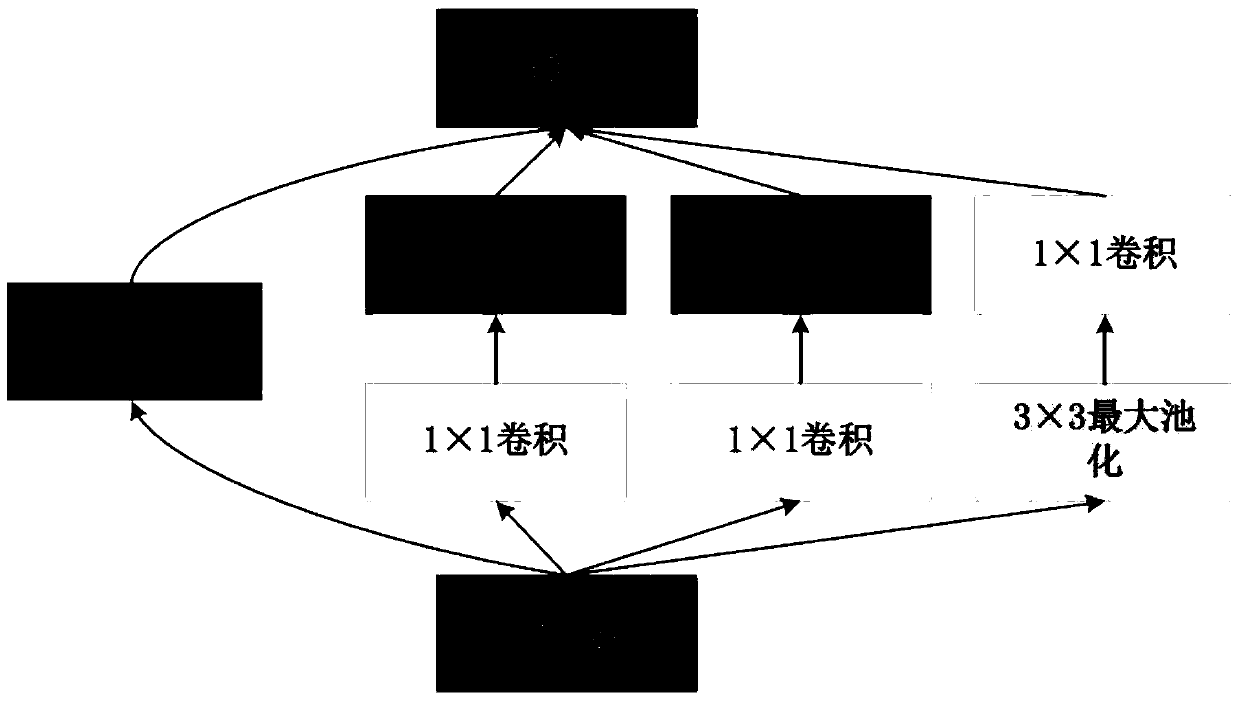

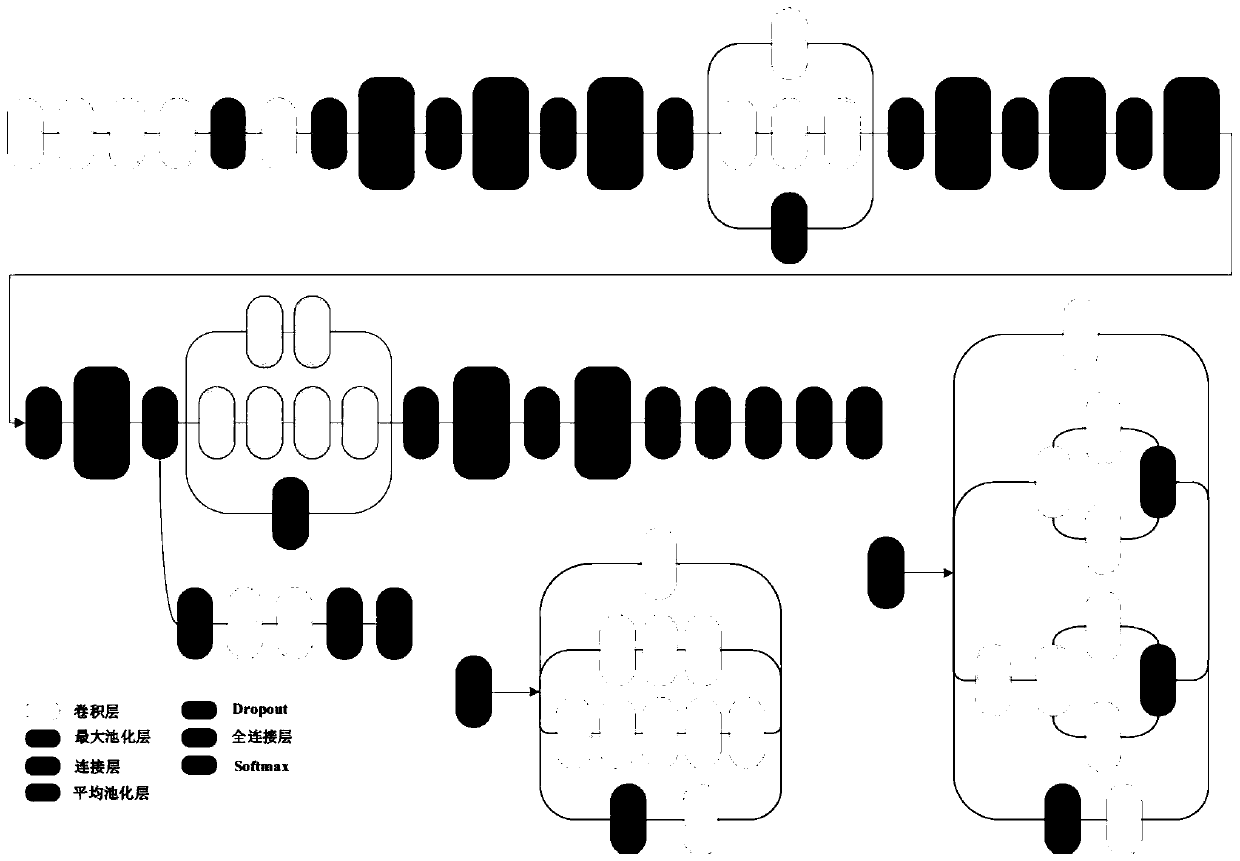

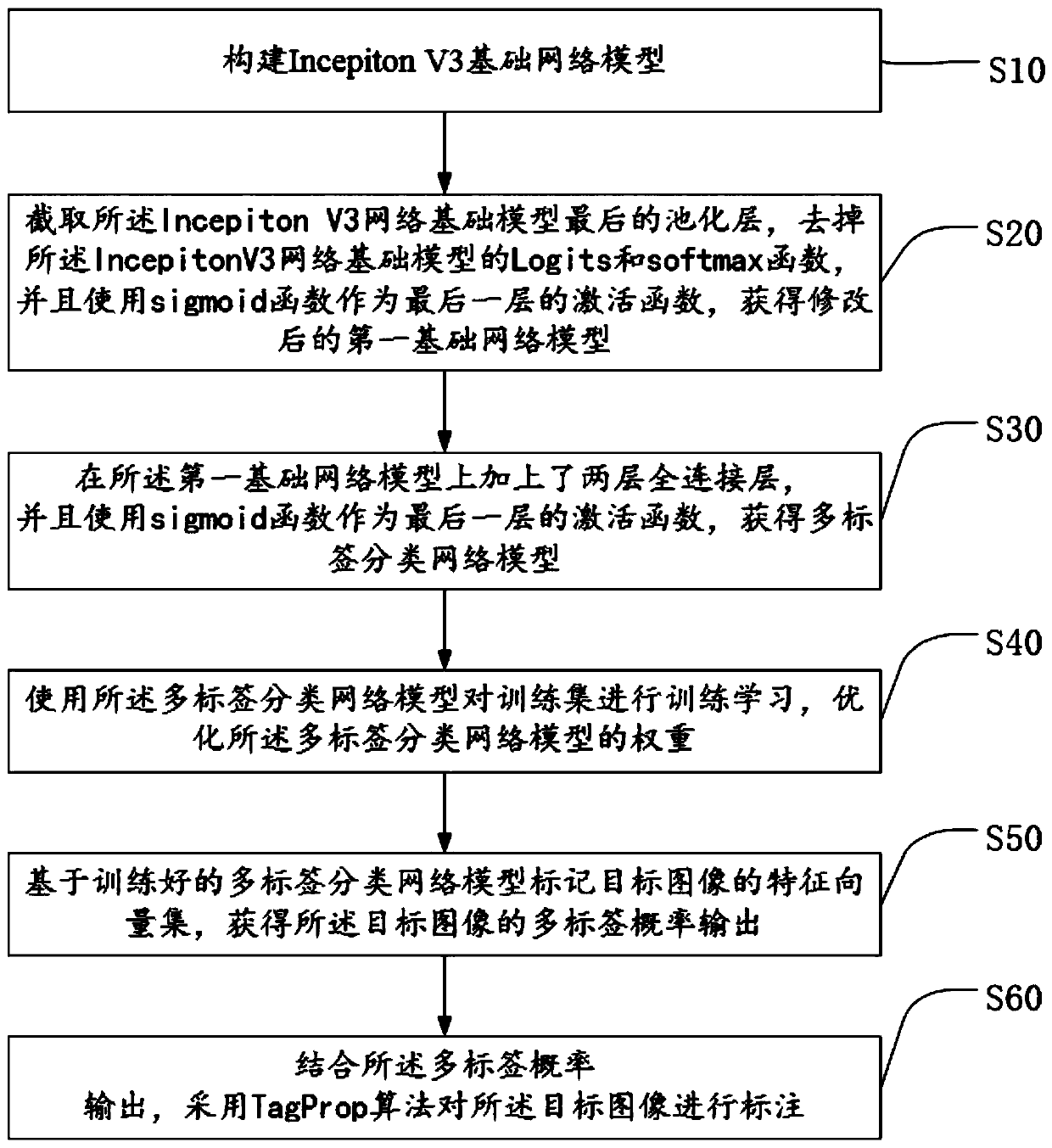

[0035] The present invention uses Inception V3 as the base network structure of the model. The Inception network achieves very good classification performance while keeping the amount of computation and the number of parameters under control. Rather than blindly increasing the number of layers, it introduces the Inception Module; Figure 1 is a schematic diagram of the Inception network model. This modular design reduces the number of parameters of the network and shrinks the design space of the network while increasing the thickness of the network. The Inception network model also introduces the i...
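The parameter saving described above can be illustrated with a small calculation. The sketch below is not taken from the patent: it assumes a generic four-branch Inception-style module (a 1x1 branch, a 1x1 followed by 3x3 branch, a 1x1 followed by 5x5 branch, and a pooling branch with a 1x1 projection), and all channel widths are hypothetical values chosen for illustration. It counts convolution weights only (no biases) and compares the module against a naive variant in which the 3x3 and 5x5 convolutions act directly on the input.

```python
def conv_weights(kernel: int, c_in: int, c_out: int) -> int:
    """Number of weights in a kernel x kernel convolution, ignoring biases."""
    return kernel * kernel * c_in * c_out


# Hypothetical channel widths for one Inception-style module (assumed values).
C_IN = 192          # input channels
B1_OUT = 64         # 1x1 branch output
B3_REDUCE = 96      # 1x1 reduction before the 3x3 convolution
B3_OUT = 128        # 3x3 branch output
B5_REDUCE = 16      # 1x1 reduction before the 5x5 convolution
B5_OUT = 32         # 5x5 branch output
POOL_PROJ = 32      # 1x1 projection after the pooling branch

# Module with 1x1 reductions in front of the larger kernels.
inception_total = (
    conv_weights(1, C_IN, B1_OUT)
    + conv_weights(1, C_IN, B3_REDUCE) + conv_weights(3, B3_REDUCE, B3_OUT)
    + conv_weights(1, C_IN, B5_REDUCE) + conv_weights(5, B5_REDUCE, B5_OUT)
    + conv_weights(1, C_IN, POOL_PROJ)
)

# Naive variant: 3x3 and 5x5 applied directly to the input, pooling passed
# through unprojected (so it contributes no weights).
naive_total = (
    conv_weights(1, C_IN, B1_OUT)
    + conv_weights(3, C_IN, B3_OUT)
    + conv_weights(5, C_IN, B5_OUT)
)

if __name__ == "__main__":
    print("with 1x1 reductions:", inception_total)
    print("naive branches:     ", naive_total)
    print("ratio:              ", round(inception_total / naive_total, 3))
```

Under these assumed widths, the module with 1x1 reductions needs fewer than half the weights of the naive variant. The two variants do not produce the same number of output channels, so the comparison is only a rough indication of why the modular design keeps the parameter count low.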
