Deep learning network application distribution self-assembly instruction processor core, processor, circuit and processing method

The disclosure relates to deep learning network and application distribution technology, applied to a deep learning network application distributed self-assembly instruction processor core. It addresses the lack of system adaptability, the lack of flexibility, and the lack of existing solutions, with the aim of achieving architecture adaptability, reducing the use of storage capacity and logic resources, and increasing the number of operations.

Examples

Embodiment 1

[0048] According to an aspect of one or more embodiments of the present disclosure, a deep learning network application distributed self-assembly instruction processor core is provided.

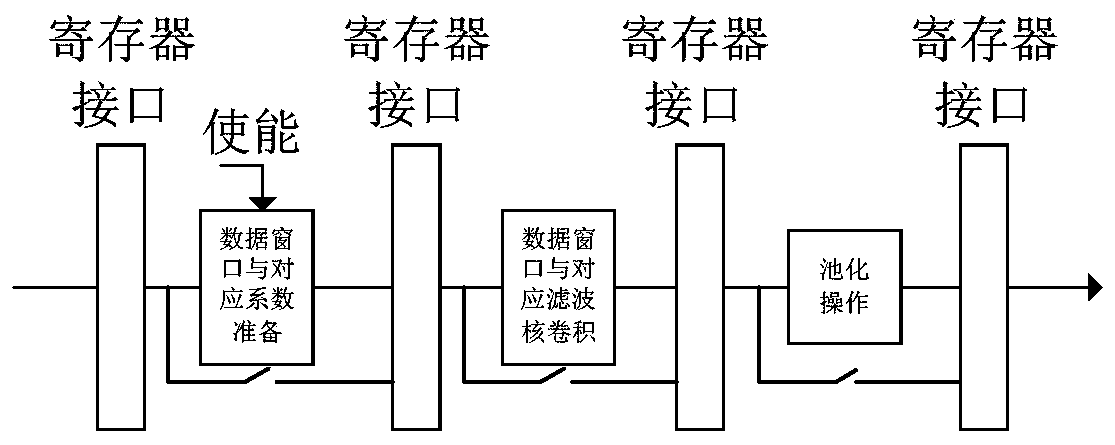

[0049] As shown in Figure 1, a deep learning network application distributed self-assembly instruction processor core includes:

[0050] Four register interface modules, with a preparation module, a convolution operation module, and a pooling operation module arranged in sequence between the register interface modules;

[0051] Each register interface module is configured to connect to a register;

[0052] The preparation module is configured to prepare data windows and their corresponding coefficients;

[0053] The convolution operation module is configured to convolve a data window with its corresponding filter kernel, and its convolution kernel parameters are configurable;

[0054] The pooling operation module is configured to perform pooling operations.
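The data flow through the three operation modules can be sketched in software as follows. This is a minimal Python model, not the disclosed hardware: the function names, the stride of 1, the absence of padding, and the choice of max pooling are all assumptions made for illustration, and the register interface modules are represented only by the values handed from one function to the next.

```python
from typing import List

Matrix = List[List[float]]


def prepare_windows(image: Matrix, k: int) -> List[List[Matrix]]:
    """Preparation stage: slice every k x k data window (assumed stride 1, no padding)."""
    rows, cols = len(image), len(image[0])
    return [
        [[row[c:c + k] for row in image[r:r + k]] for c in range(cols - k + 1)]
        for r in range(rows - k + 1)
    ]


def convolve(windows: List[List[Matrix]], kernel: Matrix) -> Matrix:
    """Convolution stage: multiply-accumulate each window with the kernel.

    The kernel is not flipped, as is usual in deep learning frameworks.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    return [
        [
            sum(w[i][j] * kernel[i][j] for i in range(k_rows) for j in range(k_cols))
            for w in window_row
        ]
        for window_row in windows
    ]


def max_pool(fmap: Matrix, size: int) -> Matrix:
    """Pooling stage: non-overlapping size x size max pooling (assumed pooling type)."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]


def run_core(image: Matrix, kernel: Matrix, pool_size: int) -> Matrix:
    """Chain the three stages in the order the core arranges them."""
    windows = prepare_windows(image, len(kernel))
    feature_map = convolve(windows, kernel)
    return max_pool(feature_map, pool_size)


# Hypothetical 4 x 4 input and a 3 x 3 kernel of ones.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
ones = [[1, 1, 1] for _ in range(3)]
result = run_core(image, ones, 2)
```

Passing the kernel as an argument mirrors the statement that the convolution kernel parameters are configurable; a different kernel size changes the window size prepared by the first stage.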

[...

Embodiment 2

[0059] According to an aspect of one or more embodiments of the present disclosure, a deep learning network application distributed self-assembly instruction processor is provided.

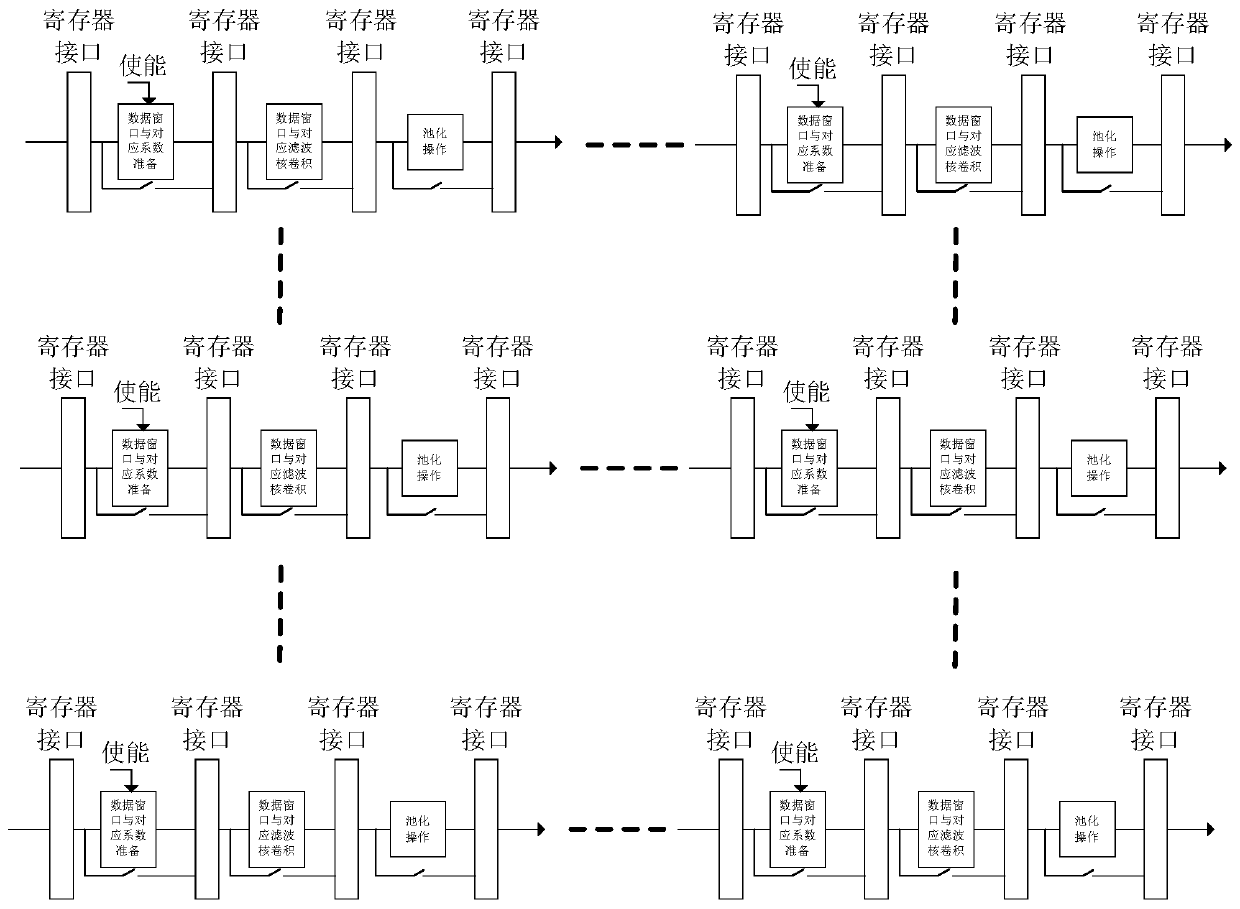

[0060] As shown in Figures 2-3, a deep learning network application distributed self-assembly instruction processor includes several processor cores and an instruction statistics distribution module;

[0061] The instruction statistics distribution module is configured to count the instructions of the deep convolutional network and distribute the instruction stream;

[0062] The instruction statistics distribution module is connected to each processor core through an instruction stack module. The instruction stack module is configured to receive and store the instruction stream distributed by the instruction statistics distribution module and, according to the stored instruction stream, to control the processor cores for multi-instruction-stream accelerated operation ...
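The counting and distribution roles described here can be illustrated with a small Python sketch. It assumes details the text does not give: instruction flows are modeled as tuples of hypothetical operation names, the distribution policy is round-robin, and each instruction stack is a simple queue. None of these choices is stated in the disclosure.

```python
from collections import Counter, deque
from typing import Deque, List, Tuple

Flow = Tuple[str, ...]


def count_instructions(flows: List[Flow]) -> Counter:
    """Tally how often each operation appears across all instruction flows."""
    return Counter(op for flow in flows for op in flow)


def distribute(flows: List[Flow], num_cores: int) -> List[Deque[Flow]]:
    """Assign flows to per-core instruction stacks (assumed round-robin policy)."""
    stacks: List[Deque[Flow]] = [deque() for _ in range(num_cores)]
    for index, flow in enumerate(flows):
        stacks[index % num_cores].append(flow)
    return stacks


# Hypothetical instruction flows; the operation names are illustrative only.
flows = [
    ("prepare", "conv", "pool"),
    ("prepare", "conv"),
    ("prepare", "conv", "pool"),
]
stats = count_instructions(flows)
stacks = distribute(flows, num_cores=2)
```

Each stack then holds the flows its core would execute in order; a real distribution policy would presumably use the instruction counts, which this sketch computes but does not act on.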

Embodiment 3

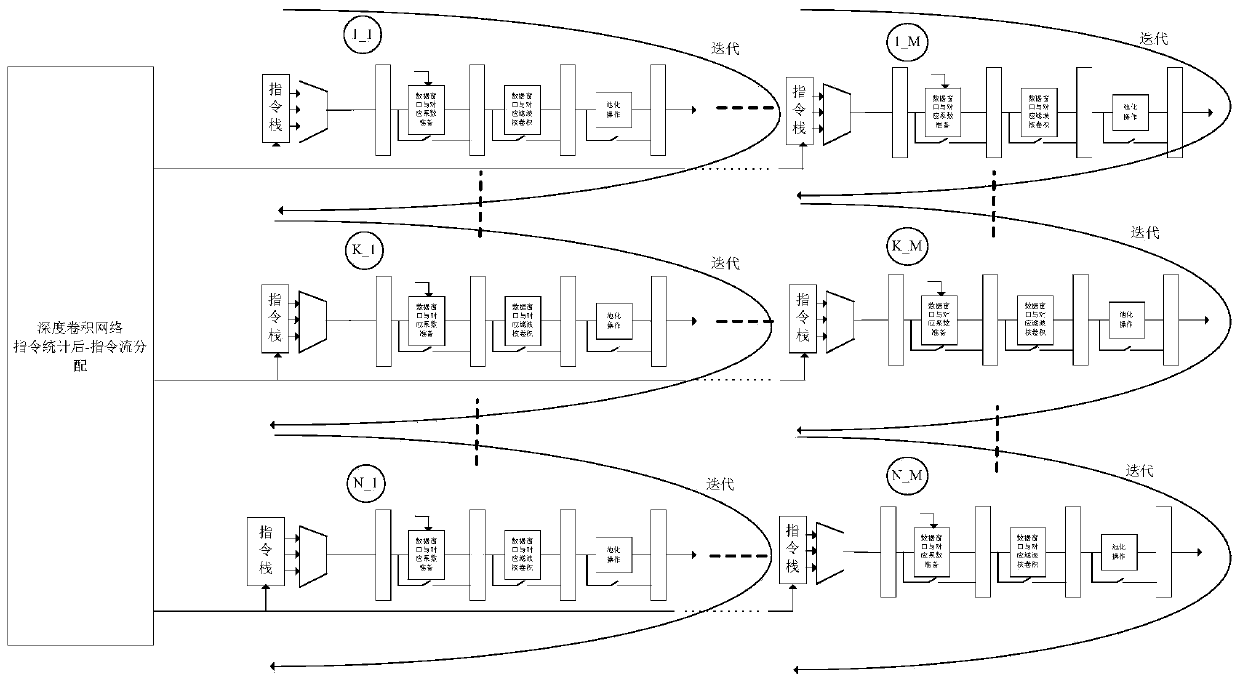

[0067] Building on the deep learning network application distributed self-assembly instruction processor disclosed in Embodiment 2, the instruction flow "preparing data windows and corresponding coefficients + single convolution + pooling" is executed. As shown in Figure 4, the register interface modules, preparation module, convolution operation module, and pooling operation module in each processor core form a deep convolutional neural network architecture, shown in gray in Figure 4.
