Radar-Image Cross-modal Retrieval Method Based on Deep Hash Algorithm

Combining a hash algorithm with image technology and applied in the field of radar-image cross-modal retrieval, the method addresses problems such as incomplete and blurred images and achieves a small data storage footprint and fast retrieval speed.
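The summary attributes the small storage footprint and fast retrieval to hashing. The minimal sketch below shows why, under stated assumptions: each modality is mapped to a K-bit hash code with entries in {-1, +1}, and retrieval ranks candidates by Hamming distance. The source does not specify the code length or the retrieval procedure, and the function names (`pack_code`, `hamming`, `rank_by_hamming`) are hypothetical. A K-bit code packs into K/8 bytes, and the Hamming distance between two codes is the popcount of their XOR, a single cheap bitwise operation per comparison.

```python
"""Sketch: ranking a database by Hamming distance between packed binary hash codes.

Assumption (not from the source): codes have entries in {-1, +1}.
"""
from typing import List, Sequence


def pack_code(bits: Sequence[int]) -> int:
    """Pack a code with entries in {-1, +1} into an int (bit k set where entry k is +1)."""
    value = 0
    for k, b in enumerate(bits):
        if b not in (-1, 1):
            raise ValueError("hash code entries must be -1 or +1")
        if b == 1:
            value |= 1 << k
    return value


def hamming(a: int, b: int) -> int:
    """Number of differing bits: popcount of the XOR of two packed codes."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query: int, database: List[int]) -> List[int]:
    """Return database indices sorted by Hamming distance to the query (ties keep original order)."""
    return sorted(range(len(database)), key=lambda i: hamming(query, database[i]))
```

For example, an image query code could be ranked against a database of point-cloud codes with `rank_by_hamming(pack_code(image_code), [pack_code(c) for c in cloud_codes])`, where `image_code` and `cloud_codes` stand in for the hash network's outputs.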



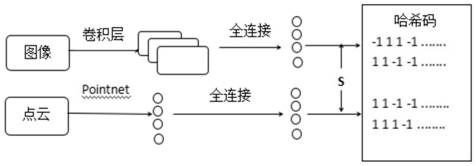

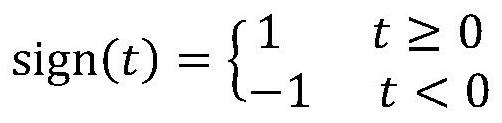

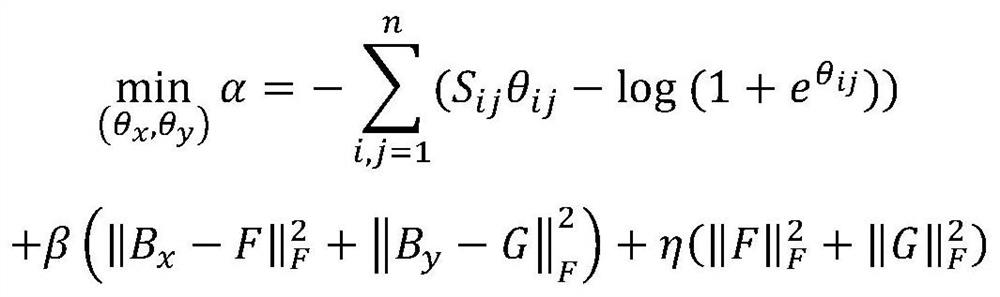

[0067] 3) Train the deep hash network (see Figure 1). Input the point cloud files and images preprocessed in step 2) into the deep hash network and, at the same time, construct a similarity matrix S that correlates the data across the two modalities, so as to obtain the image deep-learning subnetwork parameters $\theta_x$ and the point cloud deep-learning subnetwork parameters $\theta_y$. The specific method is as follows. In the PointNet network, the input is the set of all points in one frame, represented as an N×3 matrix, where N is the number of points (N = 3000) and the 3 columns correspond to the three Cartesian coordinates. The point cloud files in the training set are first aligned by multiplying them by a transformation matrix learned by a T-Net (an alignment network that is part of the point cloud deep subnetwork), which makes the PointNet network invariant to specific spatial transformations. After extracting the f...
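The paragraph says S correlates the two modalities but does not state how S is built or how it enters training. The sketch below uses one common formulation as an assumption, not as the patent's confirmed method: $S_{ij} = 1$ when image $i$ and point cloud $j$ share at least one label (else 0), and the pairwise negative log-likelihood from Deep Cross-Modal Hashing (Jiang and Li, 2017), with $\Theta_{ij} = \tfrac{1}{2} F_i^\top G_j$ and per-pair loss $-(S_{ij}\Theta_{ij} - \log(1 + e^{\Theta_{ij}}))$. Here `F` and `G` stand in for the outputs of the image and point cloud subnetworks (parameterized by $\theta_x$ and $\theta_y$); the networks themselves are not modeled, and the label sets and function names are hypothetical.

```python
"""Sketch: cross-modal similarity matrix S and a DCMH-style pairwise loss.

Assumptions (not from the source): samples carry label sets, S[i][j] = 1 when
image i and point cloud j share a label, and the loss is the negative
log-likelihood term of Deep Cross-Modal Hashing.
"""
import math
from typing import List, Sequence, Set


def build_similarity(image_labels: Sequence[Set[str]],
                     cloud_labels: Sequence[Set[str]]) -> List[List[int]]:
    """S[i][j] = 1 if image i and point cloud j share at least one label, else 0."""
    return [[1 if img & pc else 0 for pc in cloud_labels] for img in image_labels]


def pairwise_nll(F: Sequence[Sequence[float]], G: Sequence[Sequence[float]],
                 S: Sequence[Sequence[int]]) -> float:
    """Negative log-likelihood of S given image features F and point-cloud features G (one row per sample)."""
    total = 0.0
    for i, f in enumerate(F):
        for j, g in enumerate(G):
            theta = 0.5 * sum(a * b for a, b in zip(f, g))  # Theta_ij = 0.5 * <F_i, G_j>
            # log(1 + e^theta), computed in a numerically stable way
            softplus = max(theta, 0.0) + math.log1p(math.exp(-abs(theta)))
            total += -(S[i][j] * theta - softplus)
    return total
```

Minimizing this loss pushes the features of similar pairs ($S_{ij} = 1$) toward a large inner product and those of dissimilar pairs toward a small one, which is how S ties the two subnetworks together in this formulation.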

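The alignment step multiplies each N×3 point cloud by a 3×3 matrix predicted by the T-Net. The sketch below covers only that multiplication; the T-Net that predicts the matrix is not modeled, and a rotation about the z axis is supplied in its place purely for illustration. The helper names `align` and `rotation_z` are hypothetical, and points are treated as row vectors, so the operation is `points @ transform`.

```python
"""Sketch: aligning an N x 3 point cloud with a 3 x 3 transform, as a T-Net does.

The T-Net itself is not modeled; the 3 x 3 matrix is supplied directly.
"""
import math
from typing import List, Sequence

Point = List[float]


def align(points: Sequence[Sequence[float]],
          transform: Sequence[Sequence[float]]) -> List[Point]:
    """Return points @ transform for an N x 3 cloud and a 3 x 3 matrix."""
    if len(transform) != 3 or any(len(row) != 3 for row in transform):
        raise ValueError("transform must be 3 x 3")
    out = []
    for p in points:
        if len(p) != 3:
            raise ValueError("each point must have 3 Cartesian coordinates")
        out.append([sum(p[k] * transform[k][c] for k in range(3)) for c in range(3)])
    return out


def rotation_z(angle: float) -> List[Point]:
    """Counterclockwise rotation about the z axis, written for row-vector points (p @ R)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]]
```

For a 3000-point frame, `align(cloud, rotation_z(math.pi / 2))` returns another 3000×3 cloud. In PointNet the matrix would instead come from the T-Net and be learned jointly with the rest of the point cloud subnetwork.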