AR model training method and device, electronic device and storage medium

An AR model training method, applied in the field of image processing, that addresses the problems of a long acquisition period, AR models that are not updated over time, and high labor cost, with the effect of ensuring accuracy.


Examples

Embodiment 1

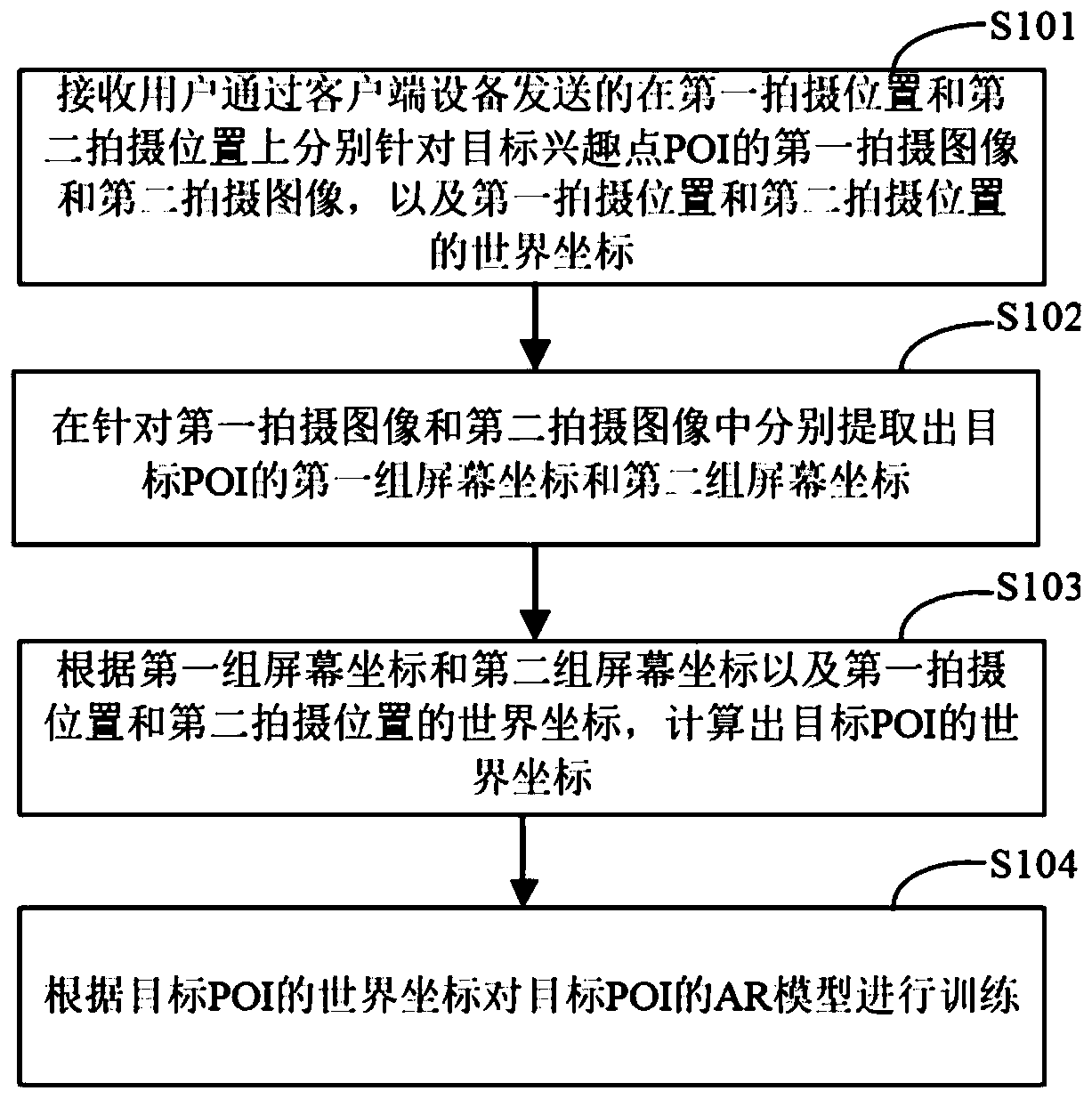

[0057] Figure 1 is a schematic flowchart of the AR model training method provided in Embodiment 1 of the present application. The method can be executed by an AR model training device or a background service device, either of which can be implemented in software and/or hardware and can be integrated into any intelligent device with a network communication function. As shown in Figure 1, the AR model training method may include the following steps:

[0058] S101. Receive a first captured image and a second captured image of a target point of interest (POI), taken at a first shooting position and a second shooting position respectively and sent by the user through a client device, together with the world coordinates of the first shooting position and the second shooting position.

[0059] In a specific embodiment of the present application, the background service device may receive the first captured image and ...
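The data received in step S101 can be pictured as a small request object. The following is a minimal sketch, not the format used by the application: the names `Capture`, `PoiCaptureRequest`, `poi_id`, and `validate_request` are hypothetical, world coordinates are assumed to be an `(x, y, z)` tuple, and the check that the two shooting positions differ is an assumption added for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: world coordinates are a 3-tuple (x, y, z); the source does not fix a format.
WorldCoord = Tuple[float, float, float]


@dataclass(frozen=True)
class Capture:
    """One captured image of the target POI plus where it was taken."""
    image: bytes                 # encoded image data (hypothetical representation)
    world_position: WorldCoord   # world coordinates of the shooting position


@dataclass(frozen=True)
class PoiCaptureRequest:
    """What the client device sends to the background service in S101."""
    poi_id: str      # hypothetical identifier for the target POI
    first: Capture   # first captured image and first shooting position
    second: Capture  # second captured image and second shooting position


def validate_request(req: PoiCaptureRequest) -> List[str]:
    """Return a list of problems; an empty list means the request looks usable."""
    problems: List[str] = []
    if not req.poi_id:
        problems.append("missing POI id")
    for name, cap in (("first", req.first), ("second", req.second)):
        if not cap.image:
            problems.append(f"{name} image is empty")
    # Assumption: the two shooting positions are expected to be distinct.
    if req.first.world_position == req.second.world_position:
        problems.append("shooting positions must differ")
    return problems
```

A background service could call `validate_request` before passing the two captures on to training, and reject the upload if the returned list is non-empty.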

Embodiment 2

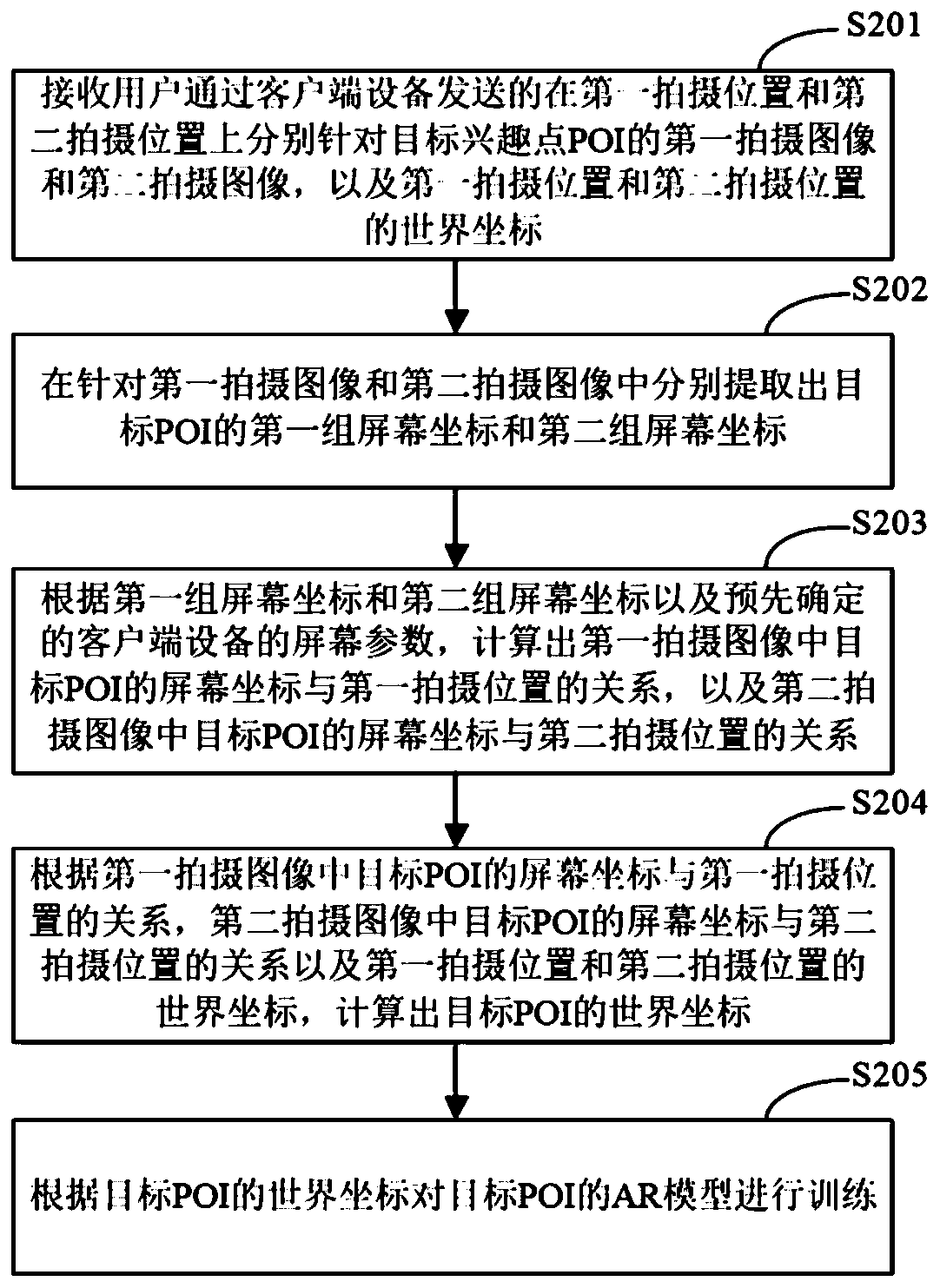

[0068] Figure 2 is a schematic flowchart of the AR model training method provided in Embodiment 2 of the present application. As shown in Figure 2, the AR model training method may include the following steps:

[0069] S201. Receive a first captured image and a second captured image of a target point of interest (POI), taken at a first shooting position and a second shooting position respectively and sent by the user through a client device, together with the world coordinates of the first shooting position and the second shooting position.

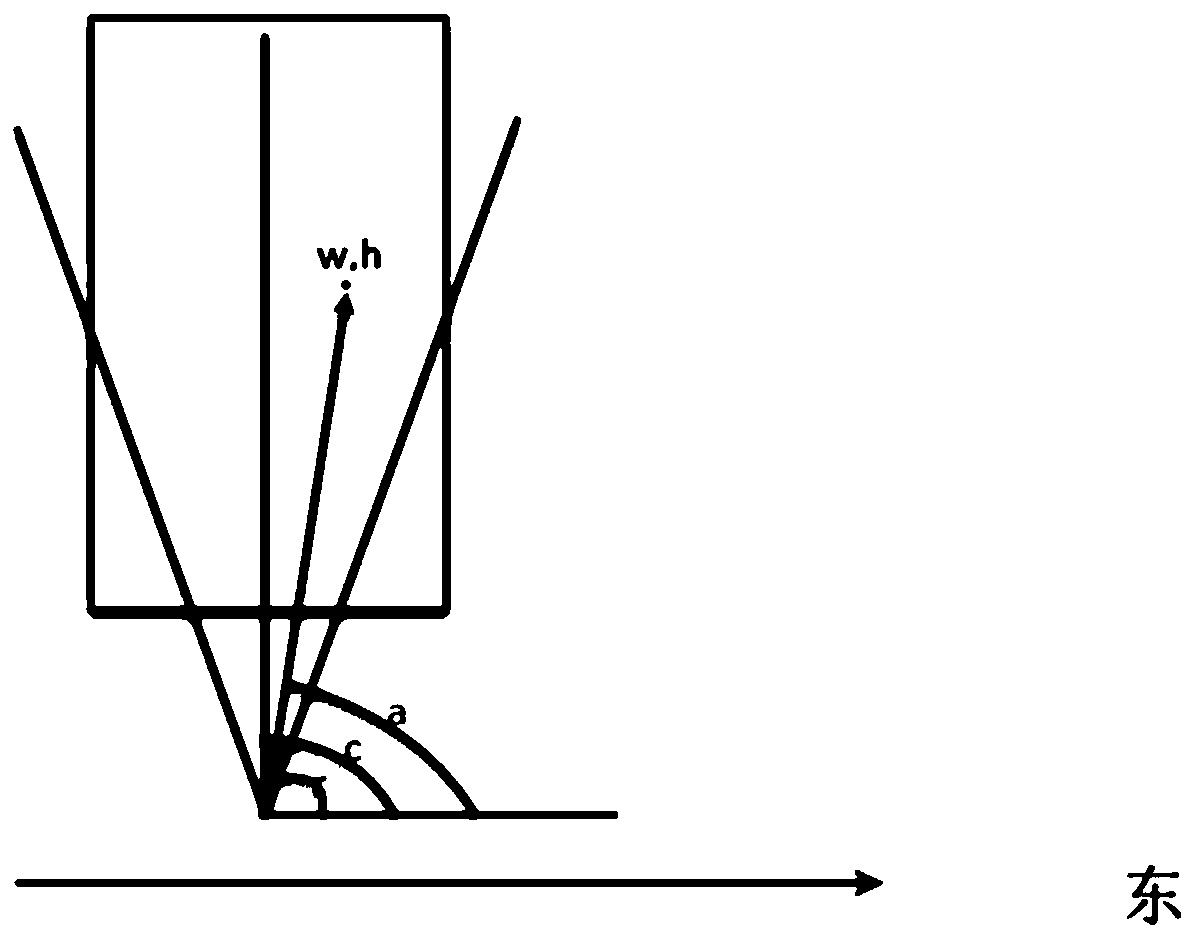

[0070] In a specific embodiment of the present application, the background service device may receive the first captured image and the second captured image of the target POI, taken at the first shooting position and the second shooting position respectively and sent by the user through the client device, together with the world coordinates of the first shooting position and the second shooting position. Specifically, the client device may first a...

Embodiment 3

[0083] Figure 5 is a schematic flowchart of the AR model training method provided in Embodiment 3 of the present application. As shown in Figure 5, the AR model training method may include the following steps:

[0084] S501. Obtain the location area where the user is located.

[0085] In a specific embodiment of the present application, the client device may acquire the location area where the user is located. For example, the location area may be a circular area centered on the user's location with a preset length as its radius; the location area may also be an area of another regular shape, which is not limited here.
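The circular location area can be illustrated with a short sketch. It rests on several assumptions: positions are planar coordinates in a common unit such as meters, the preset length is treated as the circle's radius, and the names `CircularArea` and `location_area_for_user` are hypothetical rather than taken from the application.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CircularArea:
    """A circular location area in planar coordinates (assumed representation)."""
    center_x: float
    center_y: float
    radius: float

    def contains(self, x: float, y: float) -> bool:
        """True if the point (x, y) lies inside or on the boundary of the circle."""
        return math.hypot(x - self.center_x, y - self.center_y) <= self.radius


def location_area_for_user(user_x: float, user_y: float, preset_length: float) -> CircularArea:
    """Build the area centered on the user's location.

    Assumption: the preset length is used as the radius of the circle.
    """
    if preset_length <= 0:
        raise ValueError("preset_length must be positive")
    return CircularArea(user_x, user_y, preset_length)
```

With a preset length of 50, `location_area_for_user(0, 0, 50).contains(30, 40)` is true because the point sits exactly on the boundary. An area of another regular shape would only need a different `contains` test.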

[0086] S502. If it is detected that the user has entered the recognizable range according to the location area where the user is located, image acquisition is performed on the target POI at the first shooting position and the second shooting position in the location area, and the first shooting image and the first shooting ima...
