Real-time pose estimation method and positioning and grasping system for a three-dimensional target object

Pose estimation technology for target objects, applied in computing, computer components, image data processing and related fields; it addresses problems such as low efficiency and low accuracy.

Examples

Embodiment 1

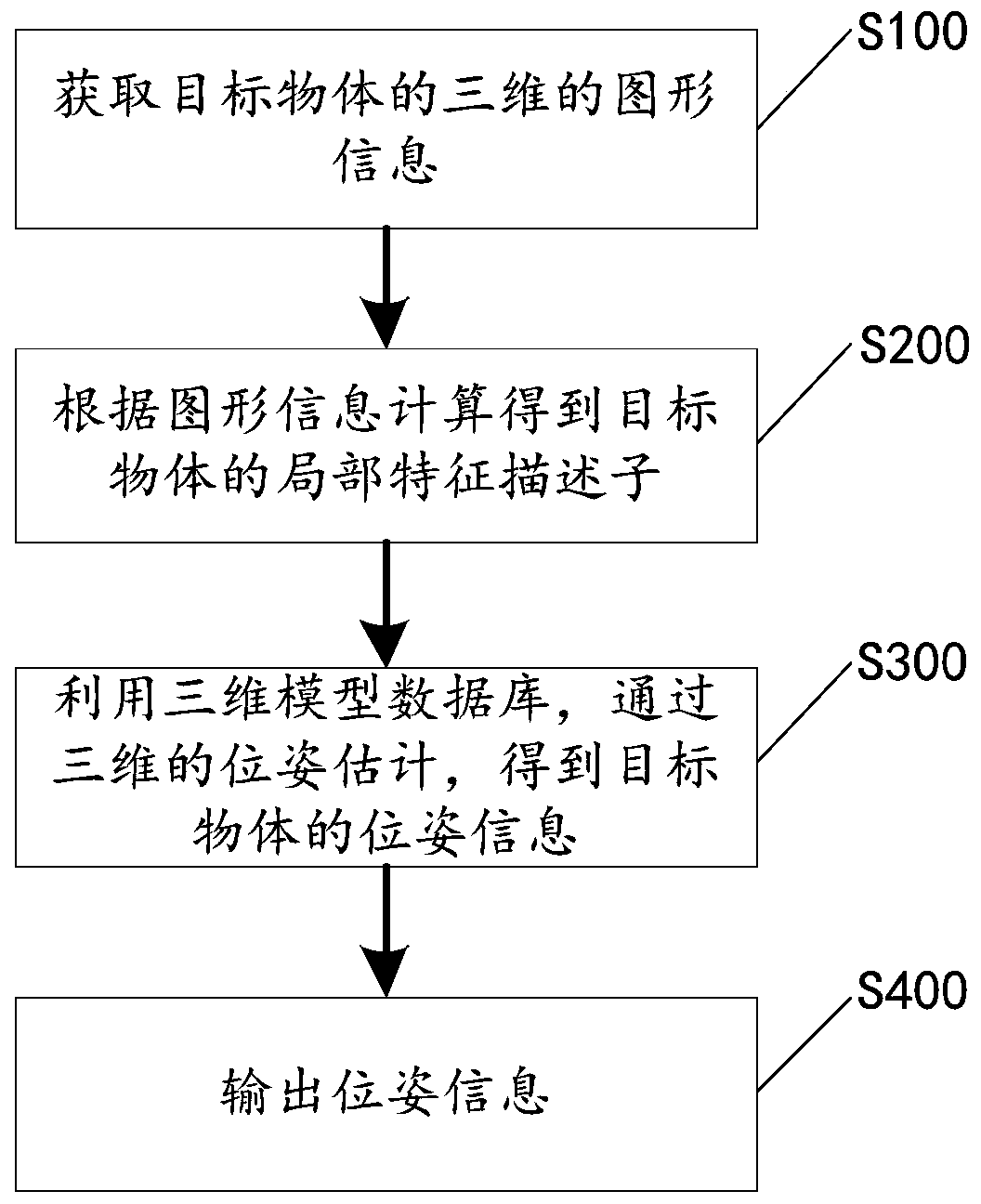

[0048] Referring to Figure 1, the present application discloses a method for estimating the real-time pose of a three-dimensional target object, comprising steps S100 to S400, each of which is described below.

[0049] Step S100: acquire three-dimensional graphic information of the target object.

[0050] It should be noted that the target object may be a product on an industrial assembly line, a mechanical part in a bin, a tool on an operating table, etc., and is not specifically limited. The three-dimensional graphic information of such target objects can be obtained with visual sensing instruments such as cameras and laser scanners, and this information may cover only part of the shape of the target object's surface.
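The text leaves the sensor output format open. Assuming, for illustration only, that a camera delivers a depth image in metres together with pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the three-dimensional graphic information can be formed as a point cloud by back-projecting each valid pixel. The function name `depth_to_point_cloud` is hypothetical and not taken from the application; a laser scanner that already outputs 3D points would skip this step.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image into an N x 3 point cloud (camera frame).

    Assumption (not from the application): a pinhole camera, where
    depth[v, u] is the distance along the optical axis and fx, fy, cx, cy
    are intrinsics in pixels. Pixels with depth <= 0 are treated as missing
    measurements and dropped.
    """
    depth = np.asarray(depth, dtype=np.float64)
    h, w = depth.shape
    # u indexes columns, v indexes rows; both arrays have shape (h, w).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=1)
```

Points are returned in row-major pixel order, so a partial view of the object (as the text notes, only part of the surface may be visible) simply yields fewer rows.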

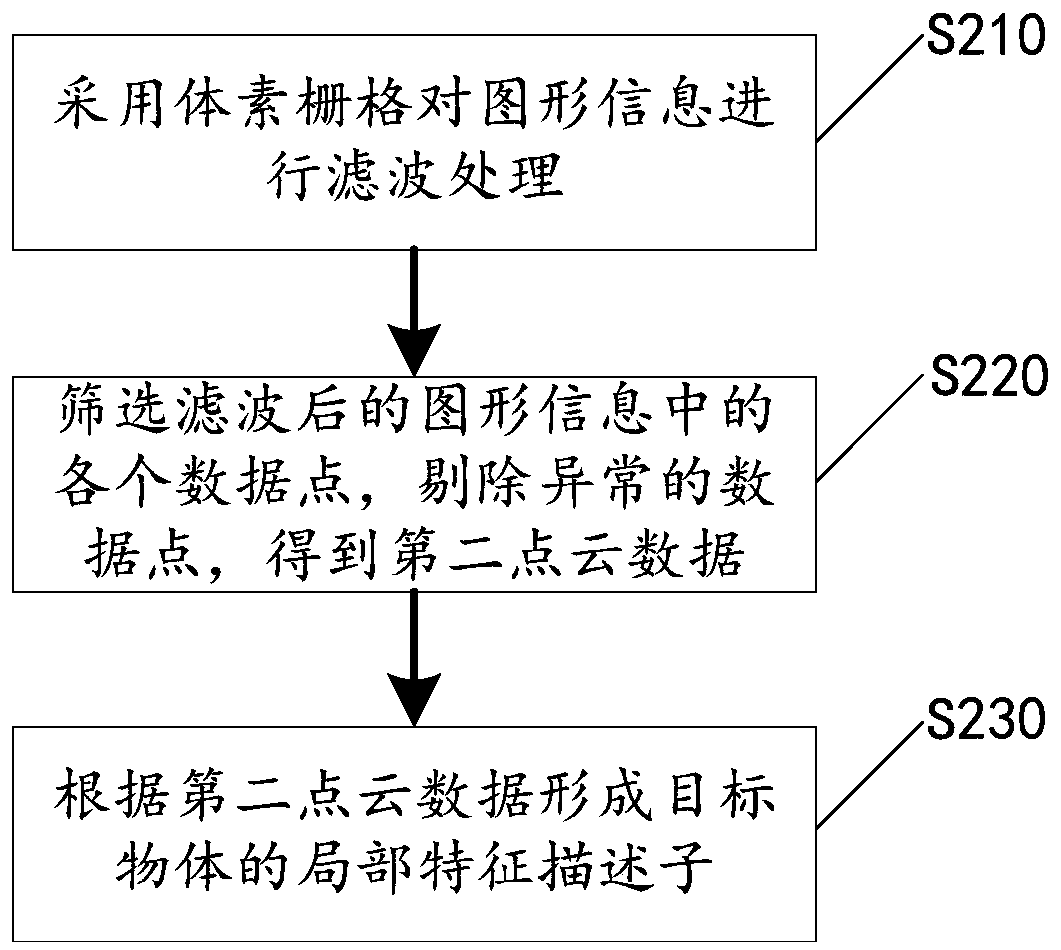

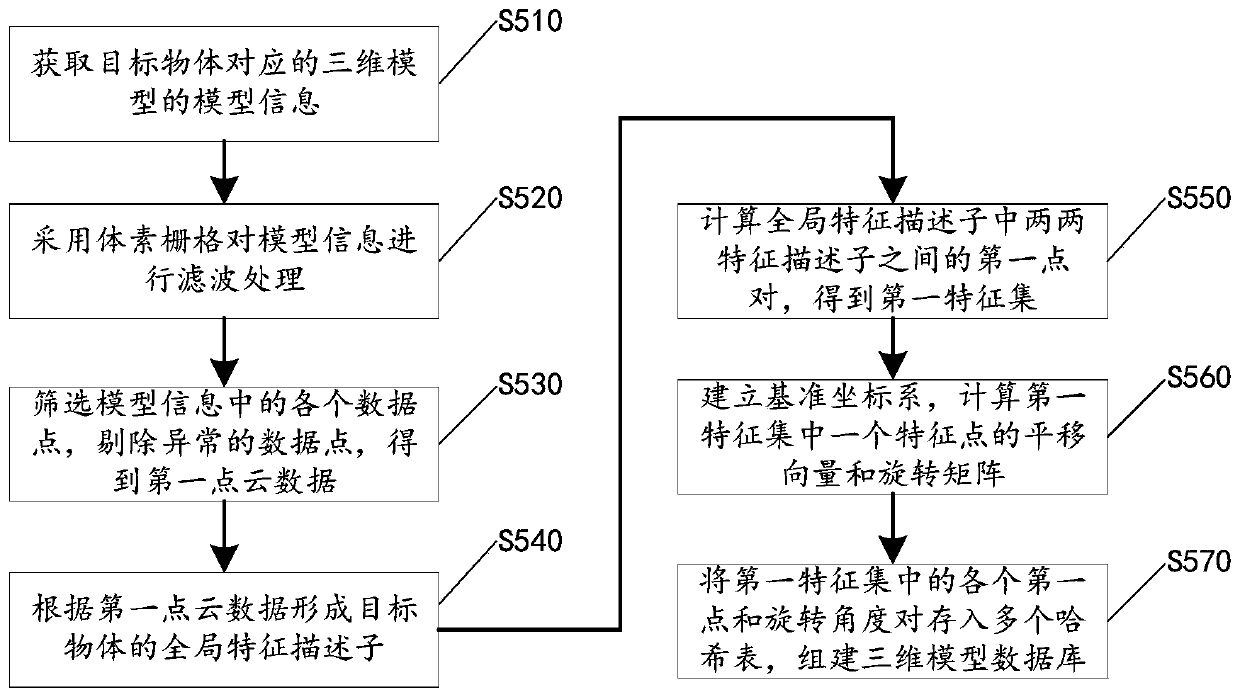

[0051] Step S200: calculate the local feature descriptor of the target object from the graphic information. In a specific example, referring to Figure 2, step S200 may inclu...
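The application's own descriptor is cut off in this excerpt. As one well-known example of a local descriptor used for 3D pose estimation, and not necessarily the one claimed here, the point pair feature of Drost et al. (CVPR 2010) encodes two oriented surface points by one distance and three angles. The sketch assumes each point carries a non-zero normal vector; `point_pair_feature` is a hypothetical name.

```python
import numpy as np

def _angle(a, b):
    """Angle in radians between two non-zero vectors, clipped for numerical safety."""
    cos = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

def point_pair_feature(p1, n1, p2, n2):
    """Point pair feature F = (||d||, angle(n1, d), angle(n2, d), angle(n1, n2)).

    p1, p2 are 3D points and n1, n2 their surface normals; d = p2 - p1.
    This illustrates a generic local descriptor, not the application's own.
    """
    p1, n1, p2, n2 = (np.asarray(x, dtype=float) for x in (p1, n1, p2, n2))
    d = p2 - p1
    dist = float(np.linalg.norm(d))
    if dist == 0.0:
        raise ValueError("p1 and p2 must be distinct points")
    return (dist, _angle(n1, d), _angle(n2, d), _angle(n1, n2))
```

Because the four values depend only on the relative geometry of the two oriented points, they do not change when the object is rotated or translated, which is what makes such features usable for matching a stored model against a sensed scene.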

Embodiment 2

[0155] Referring to Figure 11, the present application discloses a positioning and grasping system for a target object. The system mainly comprises a sensor 11, a processor 12 and a controller 13, each of which is described below.

[0156] The sensor 11 collects images of the target object to form its three-dimensional graphic information. The sensor 11 may be any visual sensor capable of image acquisition, such as a camera or a laser scanner. As in Embodiment 1, the target object may be a product on an industrial assembly line, a mechanical part in a bin, a tool on an operating table, etc., and is not specifically limited.

[0157] The processor 12 is connected to the sensor 11 and obtains the pose information of the target object relative to the sensor 11 using the real-time pose estimation method disclosed in Embodiment 1.
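The pose produced by the processor 12 is expressed relative to the sensor 11. If a downstream manipulator needs it in its own base frame, which is an assumption here and not stated in this excerpt, the two poses can be chained as 4x4 homogeneous transforms. The sensor-to-base transform `T_base_sensor` is assumed to come from a prior hand-eye calibration, and both function names are hypothetical.

```python
import numpy as np

def make_pose(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation R and a translation t."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float)
    T[:3, 3] = np.asarray(t, dtype=float)
    return T

def object_pose_in_base(T_base_sensor, T_sensor_object):
    """Express the object pose in the robot base frame.

    T_base_sensor: sensor pose in the base frame (assumed, e.g. from hand-eye calibration).
    T_sensor_object: object pose relative to the sensor (the processor's output).
    """
    return T_base_sensor @ T_sensor_object
```

For example, a camera mounted 1 m above the base and looking straight down (rotation `diag(1, -1, -1)`) that sees an object 0.5 m along its optical axis places that object 0.5 m above the base.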

[0158] The controller 13 is connected to the...
