Interactive blind guiding system and method based on improved Yolov2 target detection and voice recognition

A blind-guiding system based on target detection technology, applied in the fields of speech recognition, character and pattern recognition, and speech analysis. It addresses problems such as low safety, lack of intelligent interactivity, and heavy dependence on network conditions, with the effect of improving image detection speed, providing a good scene description function, and improving travel safety.


Embodiment Construction

[0057] The present invention will be further described in detail through specific embodiments below, but the embodiments of the present invention are not limited thereto.

[0058] To describe the present invention more clearly: the research and implementation of the interactive blind guiding system use the deep learning and neural network training methods and design principles cited in the related papers. The theoretical basis and source code for every symbol that appears can be found in those sources, so they are not repeated here.

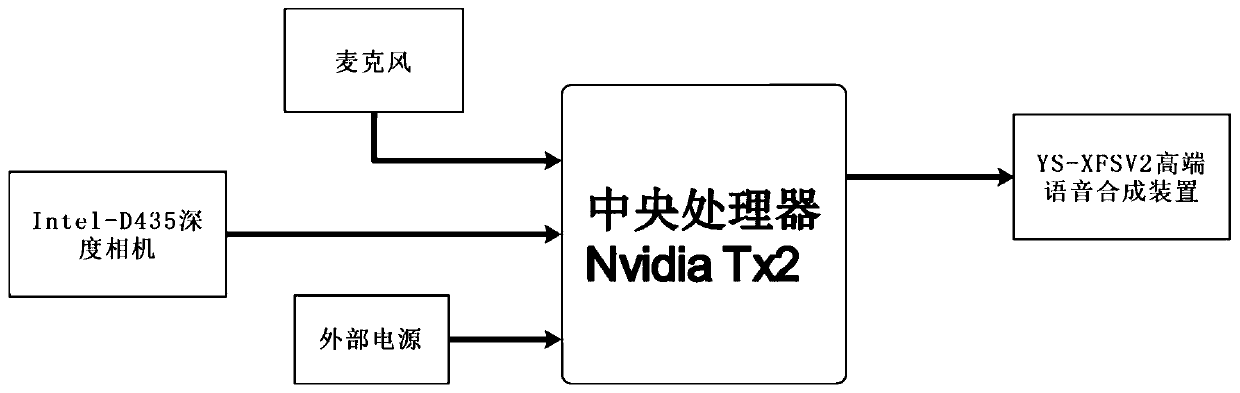

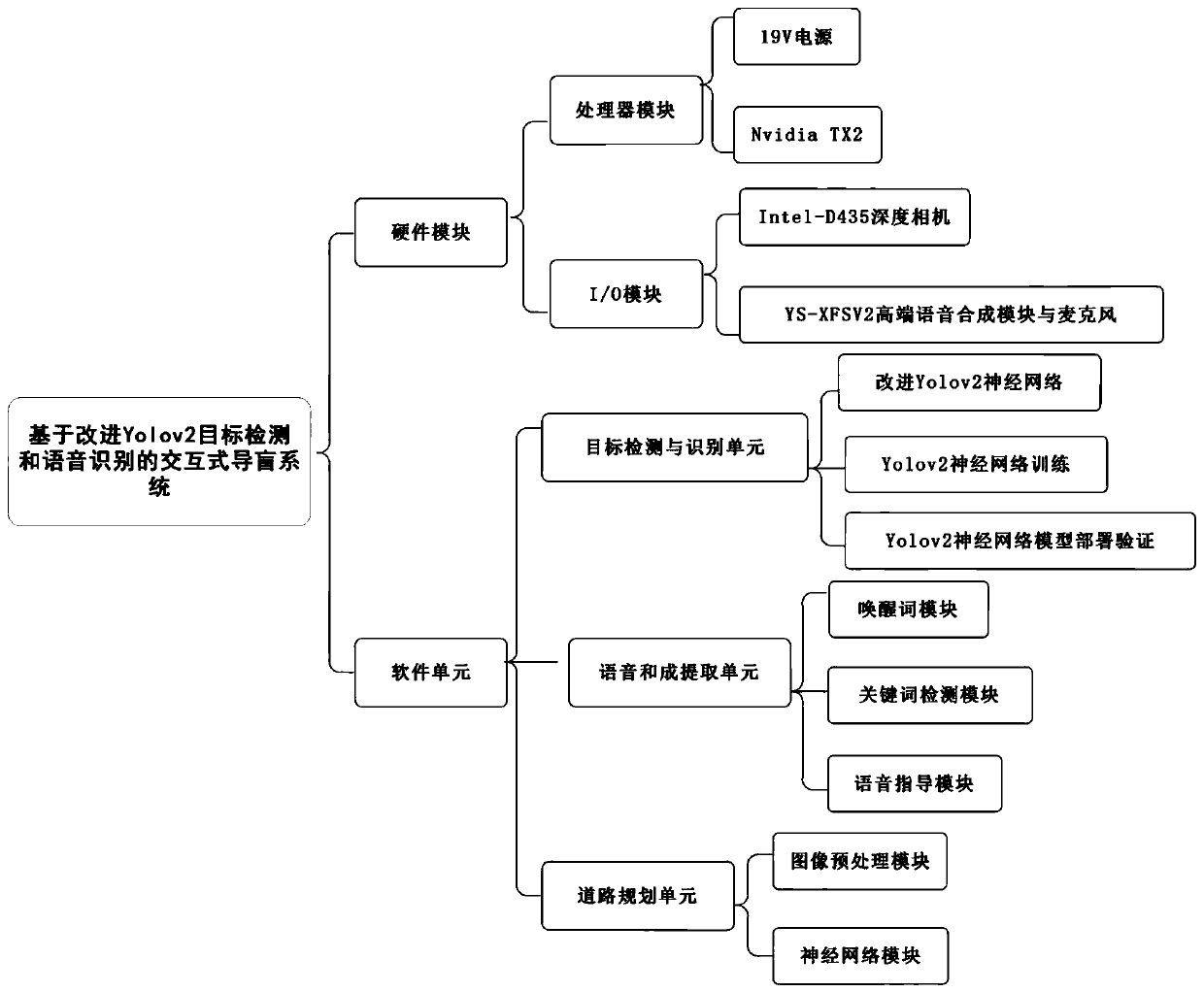

[0059] As shown in Figures 1 and 2, an interactive blind guiding system based on improved Yolov2 target detection and speech recognition includes a central processing unit and, connected to it, a depth camera, a high-end speech synthesis device, a microphone, and a power supply, among which:

[0060] Central processing unit: used for system control, data processing and signal transmission to ensure the stable operation of the whole syste...
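The component layout described in [0059] can be sketched as a small data model. This is an illustrative sketch only: the names `Peripheral`, `CentralProcessingUnit`, and `build_system` are hypothetical and do not come from the patent, which states only that the four devices are connected to the central processing unit.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Peripheral:
    """A device attached to the central processing unit (hypothetical type)."""
    name: str


@dataclass
class CentralProcessingUnit:
    """Hub of the system; holds the devices connected to it (hypothetical type)."""
    peripherals: dict = field(default_factory=dict)

    def connect(self, device: Peripheral) -> None:
        # Register a device under its name.
        self.peripherals[device.name] = device

    def connected(self) -> list:
        # Names of all connected devices, in alphabetical order.
        return sorted(self.peripherals)


def build_system() -> CentralProcessingUnit:
    """Wire up the four devices that [0059] lists as connected to the CPU."""
    cpu = CentralProcessingUnit()
    for name in (
        "depth camera",
        "speech synthesis device",
        "microphone",
        "power supply",
    ):
        cpu.connect(Peripheral(name))
    return cpu


if __name__ == "__main__":
    print(build_system().connected())
```

The star topology (every device attached directly to the central processing unit) mirrors the wording of [0059]; how the devices exchange data is not specified in the text shown here, so the sketch does not model it.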
