Eye movement tracking calibration method based on head-mounted eye movement module

A head-mounted eye-tracking technology, applied in mechanical mode conversion, character and pattern recognition, acquisition/recognition of eyes, etc. It addresses the problems of high demands on the user and a cumbersome calibration process, and achieves low user requirements and a fast, accurate calibration process, which is conducive to use and promotion.

Detailed Description of the Embodiments

[0022] The present application will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

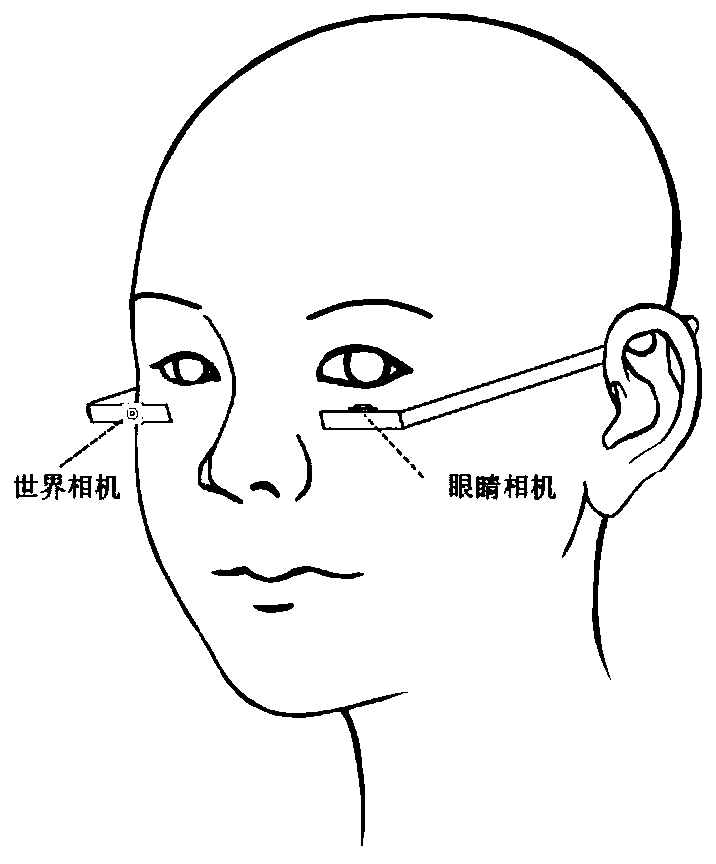

[0023] As shown in Figure 1, the user wears an eye movement module while watching the video displayed on the screen. The eye movement module includes two cameras: an eye camera that captures the user's eyes and a world camera that captures the display screen.

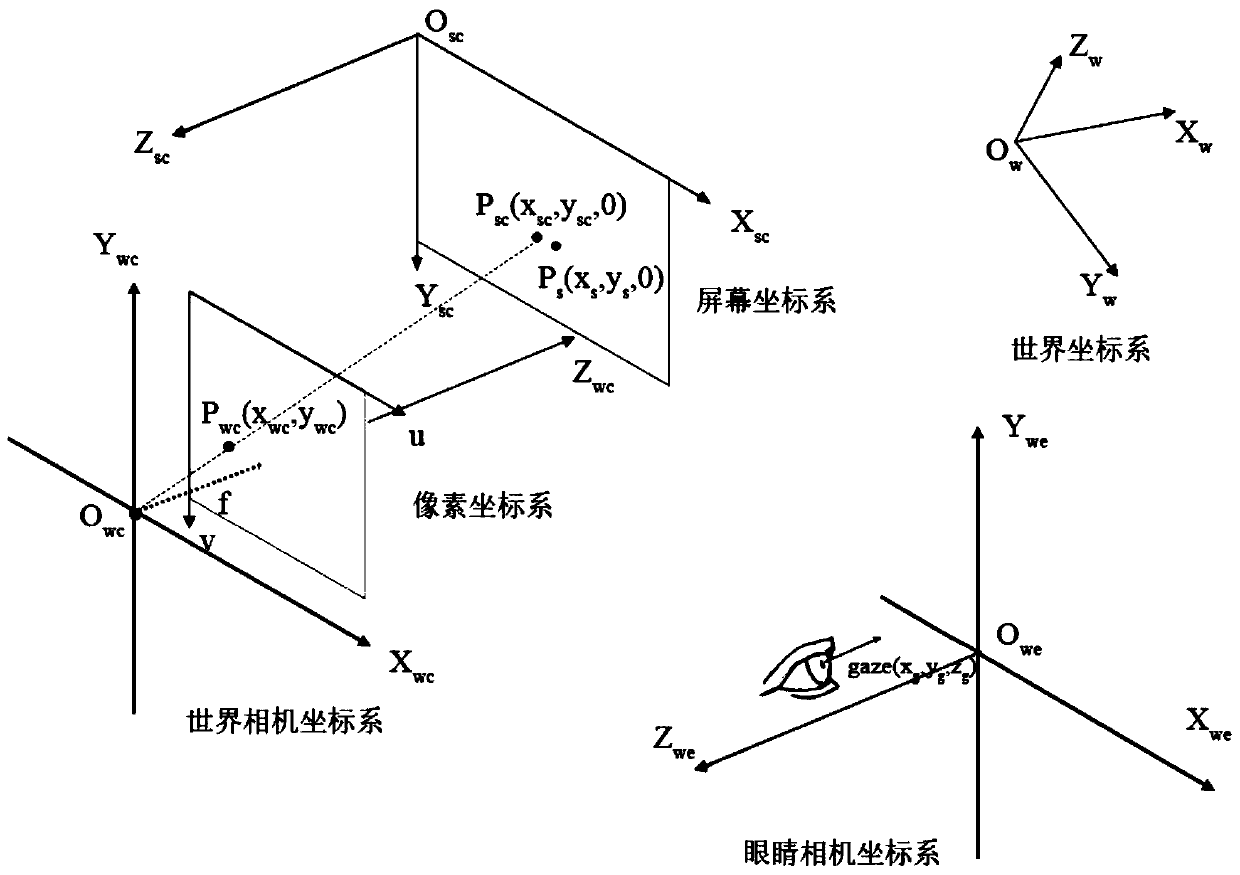

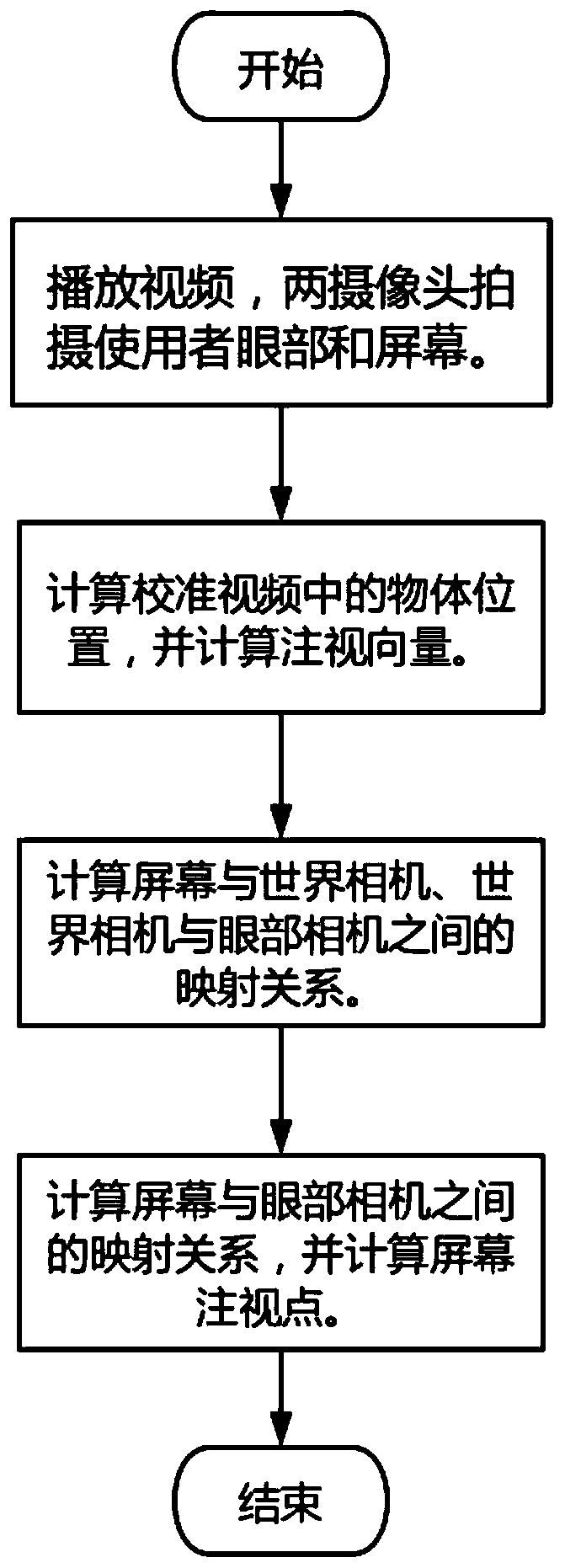

[0024] With reference to Figures 1 to 3, the eye-tracking calibration method based on the head-mounted eye movement module comprises the following steps:

[0025] An eye-tracking calibration method based on a head-mounted eye movement module, characterized in that it comprises the following steps:

[0026] (1) The user wears the above-mentioned eye movement module;

[0027] (2) A calibration video of 50 to 300 frames containing a moving object is played on the screen. The user watches the calibration video, and the two cameras capture the user's eyes and...
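As a rough illustration of step (2), the sketch below generates one on-screen target position per frame for a calibration video. Only the 50 to 300 frame range comes from the text above. The elliptical path, the 1920x1080 resolution, the margin, and the name `target_path` are assumptions made for this example, not details of the described method.

```python
import math

# Frame-count range stated in step (2).
MIN_FRAMES, MAX_FRAMES = 50, 300


def target_path(n_frames, width=1920, height=1080, margin=0.1):
    """Return one (x, y) pixel position per frame for a moving target.

    Assumption: the target travels once around an ellipse centred on the
    screen. The source does not specify the trajectory or the resolution.
    """
    if not MIN_FRAMES <= n_frames <= MAX_FRAMES:
        raise ValueError(
            f"n_frames must be between {MIN_FRAMES} and {MAX_FRAMES}"
        )
    cx, cy = width / 2, height / 2
    # Shrink the radii so the target stays away from the screen edges.
    rx, ry = cx * (1 - margin), cy * (1 - margin)
    path = []
    for i in range(n_frames):
        angle = 2 * math.pi * i / n_frames
        path.append((cx + rx * math.cos(angle), cy + ry * math.sin(angle)))
    return path
```

Calling `target_path(100)` returns 100 positions that could drive the moving object when rendering the video; a frame count outside the stated range raises `ValueError`.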
