Method and device of facial expression generation with data fusion and head-mounted display

A facial expression generation technology based on data fusion, applied to fields such as 3D image data details, input/output processes for data processing, and image data processing. It addresses problems such as limited facial expressions, limited muscle movement, and facial expressions that are out of sync between the user and the simulated user.

Embodiment Construction

[0023] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

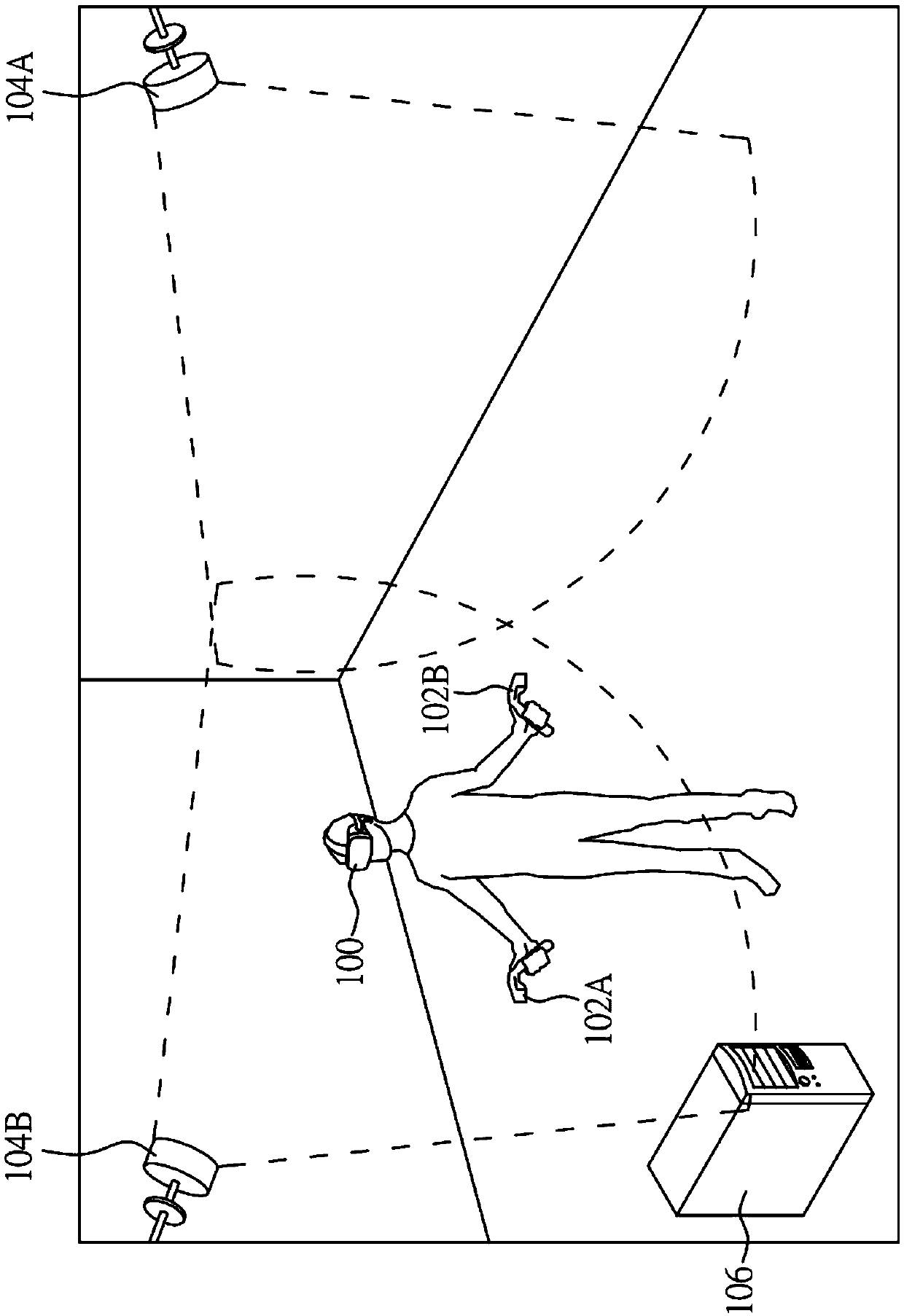

[0024] Please refer to Figure 1, which is a schematic diagram of a virtual reality system according to an embodiment of the present invention. Note that the present invention is not limited to virtual reality systems; it can also be used in augmented reality, mixed reality, and extended reality systems. The positional tracking mechanism in virtual reality systems (such as HTC VIVE) allows users to move freely and explore the virtual reality environment. Specifically, the virtual reality system includes a head-mounted display (HMD) 100, controllers 102A-102B, lighthouses 104A-104B, and a computing device 106 (such as a personal computer). The lighthouses 104A-104B emit infrared rays, and the controllers 102A-102B generate control signals for the computing device 106, so that the user ca...
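As an illustration only, the components named in paragraph [0024] can be modeled as a small data structure. This is a minimal sketch, not the patent's implementation: the class names, the `send`/`receive`/`emit` methods, and the `"trigger"` action string are all hypothetical and were chosen here for readability. Only the reference numerals (100, 102A-102B, 104A-104B, 106) and the roles of each component come from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ComputingDevice:
    """Computing device 106 (e.g. a personal computer)."""
    # Hypothetical log of control signals received from the controllers.
    received: List[str] = field(default_factory=list)

    def receive(self, signal: str) -> None:
        self.received.append(signal)


@dataclass
class Controller:
    """Controller 102A or 102B; generates control signals for the computing device."""
    label: str

    def send(self, device: ComputingDevice, action: str) -> None:
        # The signal format "<label>:<action>" is an assumption for this sketch.
        device.receive(f"{self.label}:{action}")


@dataclass
class Lighthouse:
    """Lighthouse 104A or 104B; emits infrared rays."""
    label: str

    def emit(self) -> str:
        return "infrared"


@dataclass
class VRSystem:
    """Groups the components listed in paragraph [0024]."""
    hmd_label: str
    controllers: List[Controller]
    lighthouses: List[Lighthouse]
    device: ComputingDevice


def build_system() -> VRSystem:
    # Labels mirror the reference numerals used in the description.
    return VRSystem(
        hmd_label="100",
        controllers=[Controller("102A"), Controller("102B")],
        lighthouses=[Lighthouse("104A"), Lighthouse("104B")],
        device=ComputingDevice(),
    )


system = build_system()
system.controllers[0].send(system.device, "trigger")
```

The sketch only captures the direction of data flow stated in the paragraph (controllers send control signals to the computing device; lighthouses emit infrared); it says nothing about how tracking or facial expression generation is actually performed.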
