Compression and acceleration method of deep convolutional neural networks for target detection

A deep convolutional neural network technology in the fields of deep learning and artificial intelligence. It addresses problems such as the limited applicability of convolutional neural networks and reduces the amount of computation.


Detailed Description of the Embodiments

[0036] To make the objectives, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to specific embodiments and the accompanying drawings.

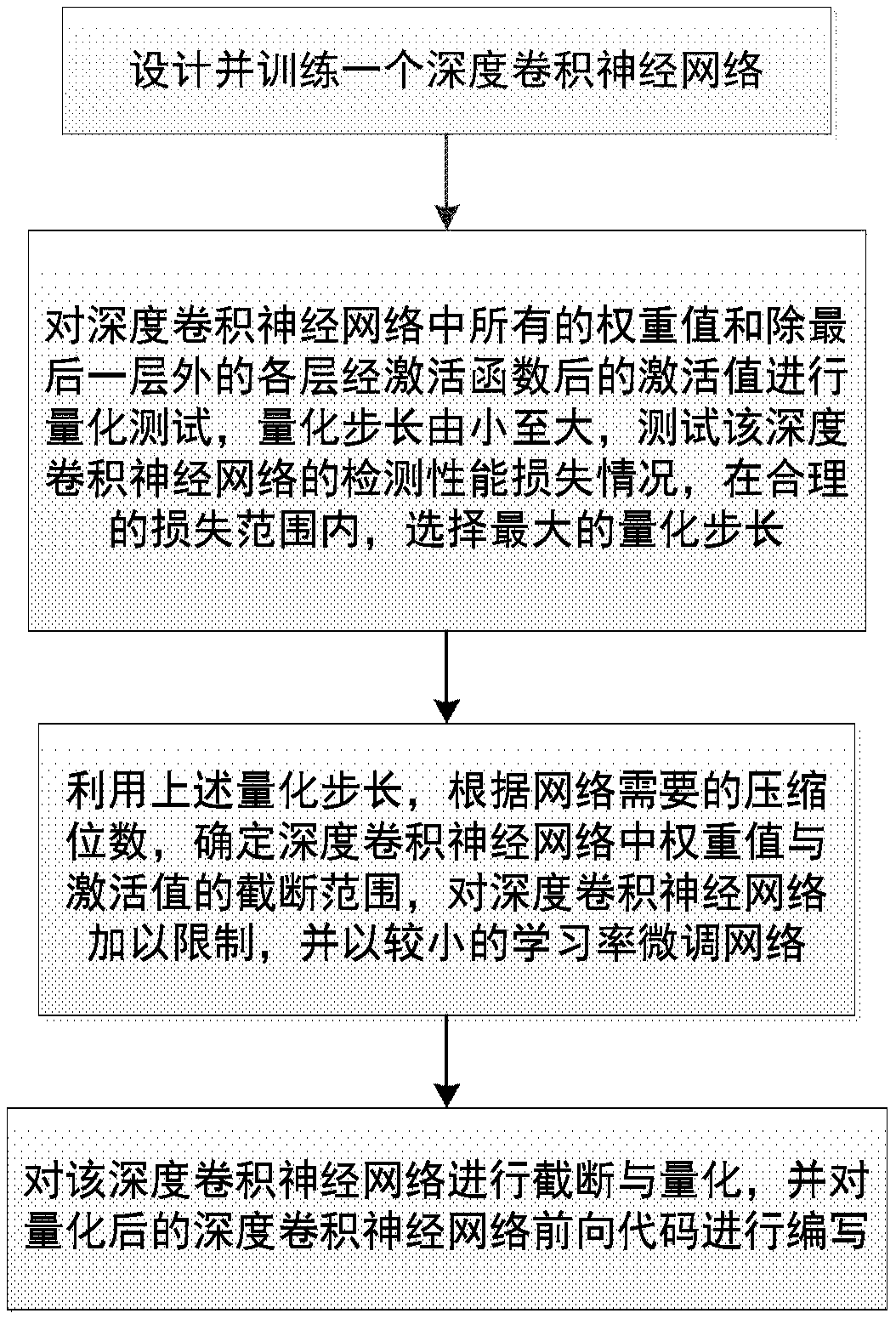

[0037] As shown in Figure 1, the present invention comprises the following four steps:

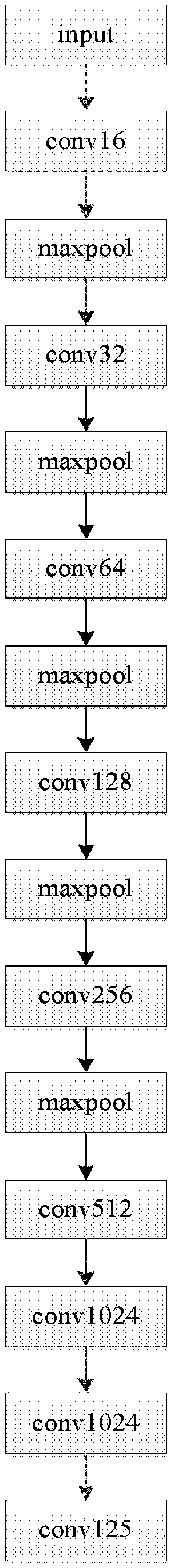

[0038] Step 1: Construct and train a deep convolutional neural network for target detection;

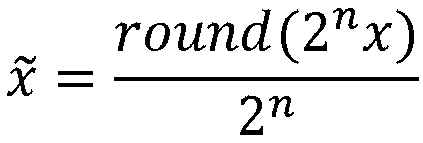

[0039] Step 2: Quantize all weight values in the deep convolutional neural network, together with the post-activation values of every layer except the last. Increase the quantization step size from small to large, test the detection performance loss of the deep convolutional neural network at each step size, and select the largest quantization step size whose loss stays within the set range;
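The step-size search in Step 2 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: `quantize` assumes plain uniform rounding to multiples of the step size, and `select_max_step`, `proxy_loss`, the toy weights, and the candidate step list are hypothetical names and data introduced here. In the patent, the loss is the drop in detection performance measured by re-testing the quantized network. The toy `proxy_loss` (worst-case rounding error on a few weights) only stands in for that test.

```python
from typing import Callable, Iterable, List, Optional, Sequence


def quantize(values: Sequence[float], step: float) -> List[float]:
    """Uniform quantization (assumed): snap each value to the nearest multiple
    of `step`. Python's round() breaks exact ties toward the even integer."""
    return [step * round(v / step) for v in values]


def select_max_step(
    steps: Iterable[float],
    loss_fn: Callable[[float], float],
    max_loss: float,
) -> Optional[float]:
    """Test candidate step sizes from small to large and return the largest
    one whose loss stays within `max_loss` (None if no candidate qualifies)."""
    best = None
    for step in sorted(steps):
        if loss_fn(step) <= max_loss:
            best = step
    return best


# Hypothetical toy data: a handful of weights standing in for a whole network.
weights = [0.12, -0.37, 0.05, 0.81, -0.64]


def proxy_loss(step: float) -> float:
    """Stand-in for the detection-performance loss: worst-case rounding error."""
    return max(abs(w - q) for w, q in zip(weights, quantize(weights, step)))


if __name__ == "__main__":
    candidates = [0.01, 0.02, 0.05, 0.1, 0.2, 0.5]
    print(select_max_step(candidates, proxy_loss, max_loss=0.04))  # 0.05
```

The patent only says the step size is swept from small to large, so the sketch keeps the largest passing step over the whole sweep instead of stopping at the first failure. If the loss is known to grow monotonically with step size, breaking early would return the same answer with fewer tests.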

[0040] Step 3: Using the maximum quantization step size determined above and the number of compression bits required by the network, determine the truncation rang...
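Step 3 is cut off in this excerpt, so the patent's exact rule for the truncation range is not given here. The sketch below is purely illustrative. It assumes the common convention that a b-bit quantizer with step size Δ stores integer codes and clips any value whose code falls outside the representable range. The names `code_limits`, `truncation_range`, and `quantize_to_codes` are hypothetical, and so is the split into symmetric signed codes (weights) and unsigned codes (non-negative activations). None of these are the patent's definitions.

```python
from typing import List, Sequence, Tuple


def code_limits(bits: int, signed: bool = True) -> Tuple[int, int]:
    """Integer code range for a `bits`-wide quantizer (ASSUMED convention).

    Signed: symmetric [-(2**(bits-1) - 1), 2**(bits-1) - 1], e.g. for weights.
    Unsigned: [0, 2**bits - 1], e.g. for non-negative activations after ReLU.
    """
    if signed:
        m = 2 ** (bits - 1) - 1
        return -m, m
    return 0, 2 ** bits - 1


def truncation_range(step: float, bits: int, signed: bool = True) -> Tuple[float, float]:
    """Real-valued interval representable with the given step size and bit width."""
    lo, hi = code_limits(bits, signed)
    return lo * step, hi * step


def quantize_to_codes(values: Sequence[float], step: float, bits: int,
                      signed: bool = True) -> List[int]:
    """Round each value to the nearest multiple of `step`, then clip (truncate)
    the resulting integer code to the range allowed by `bits`."""
    lo, hi = code_limits(bits, signed)
    return [min(hi, max(lo, round(v / step))) for v in values]


if __name__ == "__main__":
    step = 0.05  # hypothetical: the maximum step size selected in Step 2
    weights = [0.12, -0.37, 0.05, 0.81, -0.64]
    print(truncation_range(step, bits=4))       # approximately (-0.35, 0.35)
    print(quantize_to_codes(weights, step, 4))  # [2, -7, 1, 7, -7]
```

With 4 bits and a step of 0.05, the signed codes span -7 to 7. Weights such as 0.81 and -0.64 therefore saturate at roughly ±0.35. This illustrates the trade-off between the chosen step size and the available bit width.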
