Navigation method based on urban real scene

A navigation method based on urban real scenes, applied in the field of real-scene navigation. Real-scene images are acquired in line with people's visual habits, which addresses the problems of large model data volume and poor transmission performance. The method achieves improved visual effects and good accuracy, and omits the 3D modeling process.

Examples

Embodiment 1

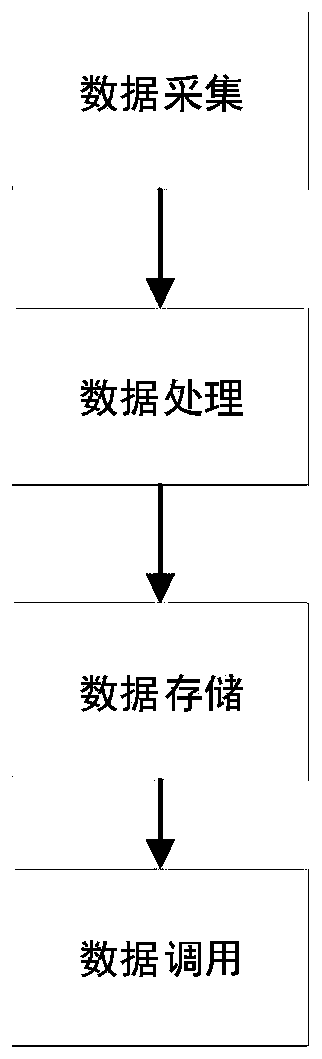

[0115] As shown in Figure 1, a navigation method based on urban real scenes comprises the following steps:

[0116] Data acquisition: real-scene image data are captured by image acquisition equipment, and GNSS data are collected by GPS positioning equipment;

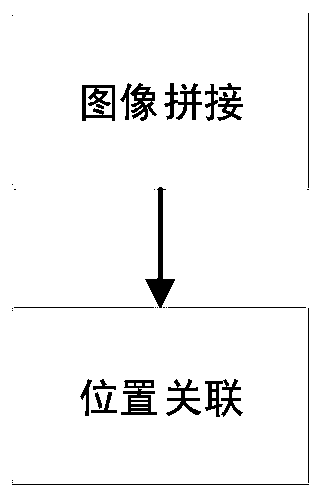

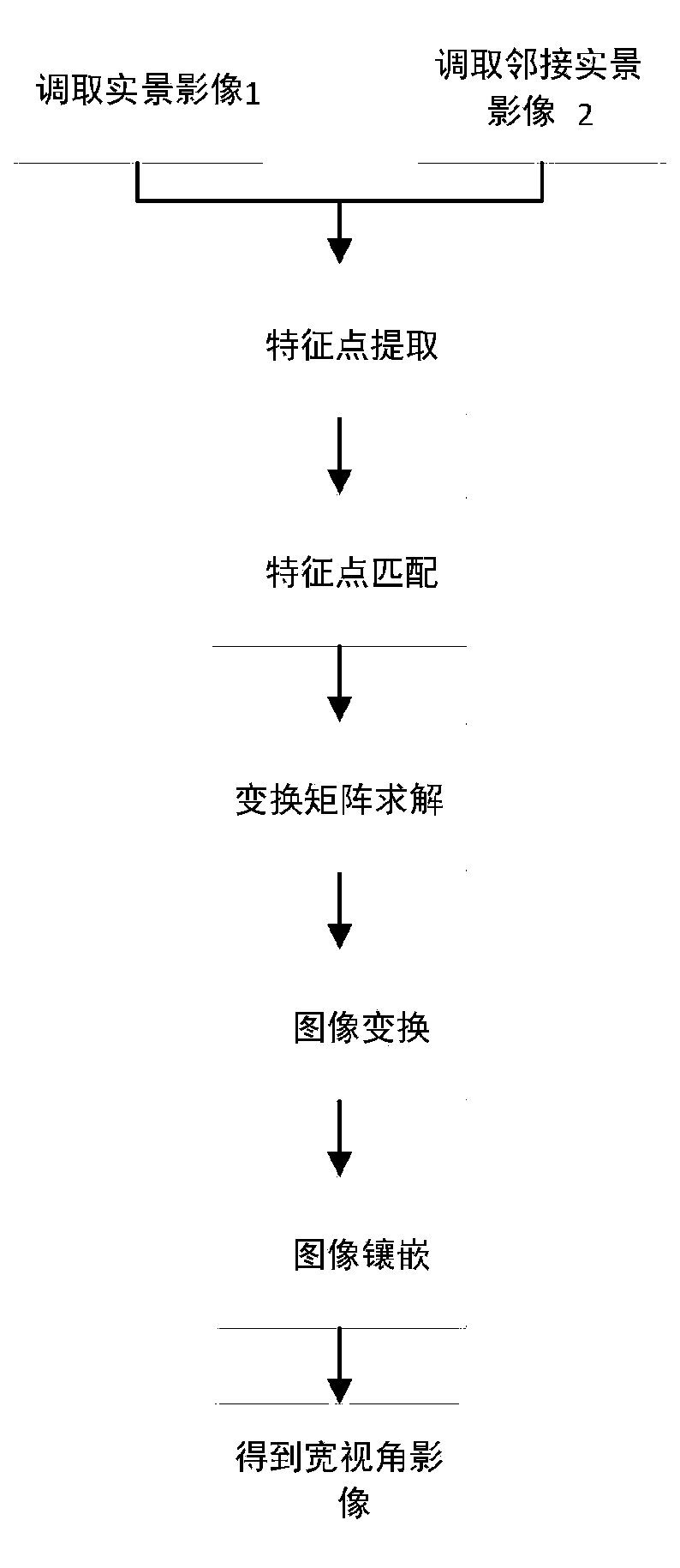

[0117] Data processing: retrieve two adjacent images from the collected real-scene images; extract feature points from the two images and perform initial feature-point matching to obtain an initial matching point set; establish constraints from the spatial geometric relationship between feature points, filter the matching points according to these constraints to obtain a new matching point set, and calculate the transformation matrix; using the obtained transformation matrix, apply a perspective transformation to the second image; mosaic the two images using the weighted average method to obtain a wide-viewing-angle image; read the time stamps in the real scene image ...
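
The perspective transformation and weighted-average mosaicking steps above can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation: the function names `apply_homography` and `blend_rows` are hypothetical, the linear weight ramp across the overlap is an assumption (the text names only "the weighted average method"), and feature extraction, matching, filtering, and estimation of the transformation matrix are not shown.

```python
from typing import List, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def apply_homography(h: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through a 3x3 perspective transformation matrix."""
    xs = h[0][0] * x + h[0][1] * y + h[0][2]
    ys = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this transform")
    # Divide by the homogeneous coordinate to return to pixel coordinates.
    return xs / w, ys / w


def blend_rows(left: List[float], right: List[float], overlap: int) -> List[float]:
    """Mosaic two image rows whose last/first `overlap` pixels coincide.

    Inside the overlap the output is a weighted average. The weight of the
    right image rises linearly while the weight of the left image falls
    (assumed ramp; the source does not specify the weighting function).
    """
    if not 0 < overlap <= min(len(left), len(right)):
        raise ValueError("overlap must be between 1 and the shorter row length")
    out = list(left[:-overlap])           # pixels seen only by the left image
    start = len(left) - overlap
    for i in range(overlap):
        alpha = (i + 1) / (overlap + 1)   # weight of the right image
        out.append((1 - alpha) * left[start + i] + alpha * right[i])
    out.extend(right[overlap:])           # pixels seen only by the right image
    return out
```

In a full pipeline, every pixel of the second image would first be resampled through the transformation matrix, and `blend_rows` would then be applied row by row over the region where the two images overlap. The gradual ramp avoids a visible seam at the boundary between the two images.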
