MPI-based neural network architecture search parallelization method and device

The invention relates to neural network and layered-structure technology, applied in the field of neural network search parallelization. It addresses the problems that local IO processing cannot be greatly accelerated and that GPU computing power and video memory are limited, and it achieves simple, easy expansion and improved efficiency.


Examples

Embodiment 1

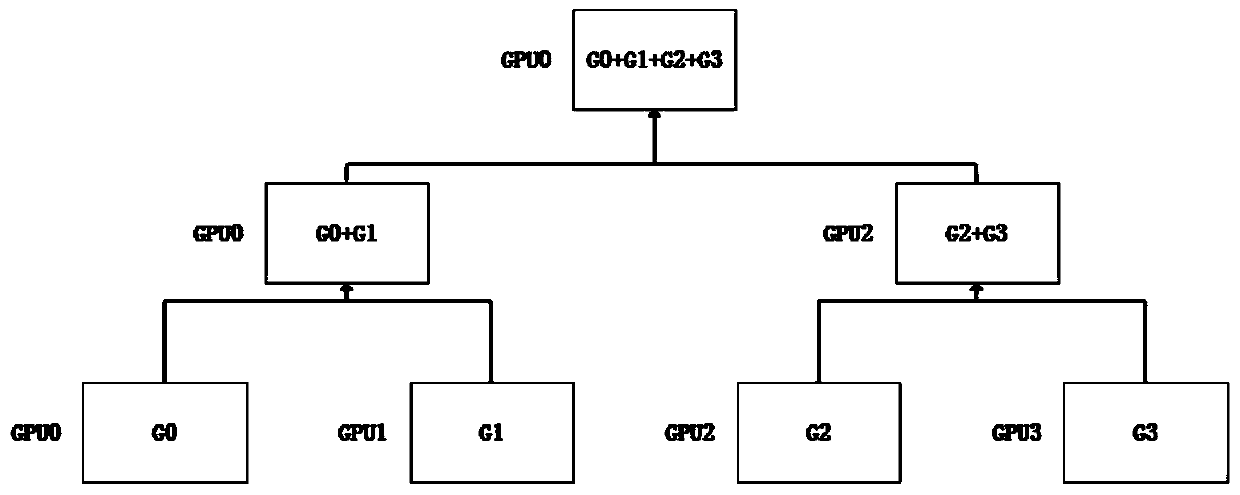

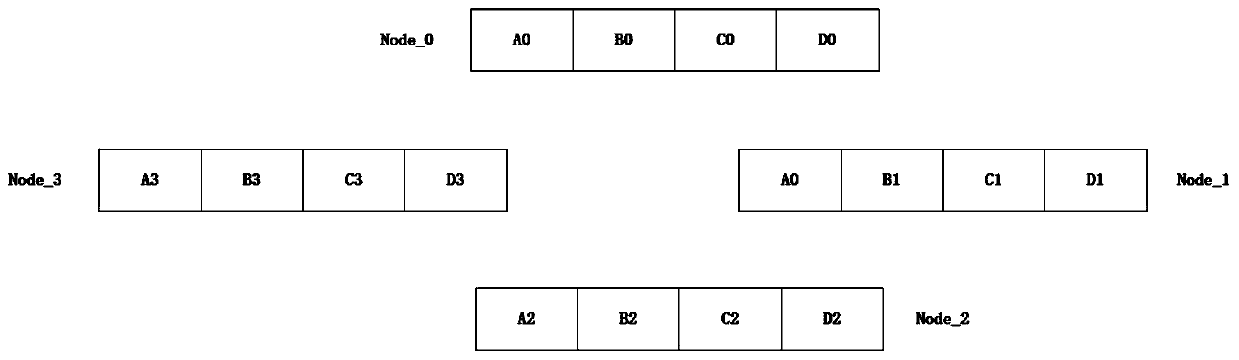

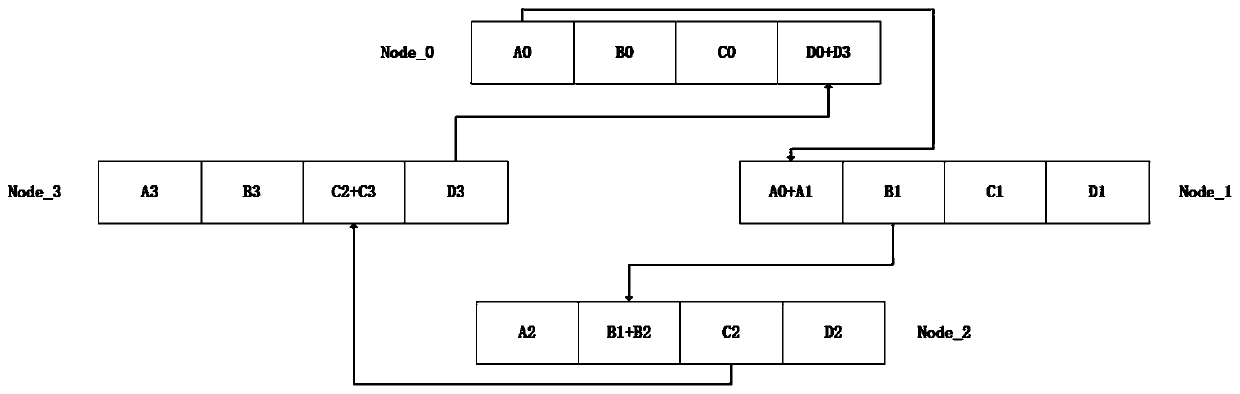

[0044] This embodiment of the present invention provides an MPI-based neural network architecture search parallelization method. As shown in Figure 16, the method includes the following steps. S101: start a plurality of MPI processes according to the number of GPUs in the current multi-machine environment and arrange them in order, wherein the multi-machine environment includes a plurality of machine nodes, each node includes multiple GPUs and multiple MPI task processes, and each MPI task process performs neural network architecture search training according to the input parameters. S102: each started MPI process reads data from a specified position in the training set according to its own serial number and performs gradient calculation. S103: the multiple GPUs of each node perform gradient reduction according to the hierarchical structure and aggregate the results to the first GPU among the multiple GPUs. S104: the fi...
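Steps S101 to S103 can be illustrated with a small single-process simulation. This is only a sketch under stated assumptions, not the patented implementation: the helper names (`rank_to_node_gpu`, `shard_for_rank`, `tree_reduce_to_first`) are hypothetical, the contiguous data layout is assumed, and a binary tree stands in for the "hierarchical structure", which the text does not specify. Real MPI and GPU communication is replaced by plain Python lists, and S104 is not sketched because its description is truncated in the source.

```python
from typing import List, Tuple


def rank_to_node_gpu(rank: int, gpus_per_node: int) -> Tuple[int, int]:
    """Map an ordered process rank to (node index, local GPU index) (S101, assumed mapping)."""
    return rank // gpus_per_node, rank % gpus_per_node


def shard_for_rank(dataset_size: int, world_size: int, rank: int) -> range:
    """Sample indices a rank reads from the training set (S102, assumed contiguous layout)."""
    per_rank = dataset_size // world_size
    start = rank * per_rank
    # The last rank also takes the remainder so that no sample is dropped.
    stop = dataset_size if rank == world_size - 1 else start + per_rank
    return range(start, stop)


def tree_reduce_to_first(grads: List[List[float]]) -> List[float]:
    """Sum per-GPU gradients pairwise in a binary tree; the total ends up on local GPU 0 (S103)."""
    bufs = [list(g) for g in grads]  # copy so the callers' buffers are not modified
    stride = 1
    while stride < len(bufs):
        # At each tree level, GPU i accumulates the buffer held by GPU i + stride.
        for i in range(0, len(bufs) - stride, 2 * stride):
            bufs[i] = [a + b for a, b in zip(bufs[i], bufs[i + stride])]
        stride *= 2
    return bufs[0]


if __name__ == "__main__":
    gpus_per_node, nodes = 4, 2
    world_size = gpus_per_node * nodes
    for rank in range(world_size):
        node, gpu = rank_to_node_gpu(rank, gpus_per_node)
        shard = shard_for_rank(100, world_size, rank)
        print(f"rank {rank}: node {node}, gpu {gpu}, samples {shard.start}..{shard.stop - 1}")
    # Four toy gradients on one node, reduced onto the first GPU.
    print(tree_reduce_to_first([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]))
```

With a tree, the number of sequential accumulation rounds on a node grows with the logarithm of the GPU count rather than linearly, which is one plausible reason to reduce hierarchically before any traffic leaves the node.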

Embodiment 2

[0090] According to an embodiment of the present invention, an MPI-based neural network architecture search parallelization device is provided. As shown in Figure 17, the device includes a memory 10, a processor 12, and a computer program stored in the memory 10 and executable on the processor 12. When the computer program is executed by the processor 12, the steps of the MPI-based neural network architecture search parallelization method described in Embodiment 1 above are implemented.

Embodiment 3

[0092] According to an embodiment of the present invention, a computer-readable storage medium is provided. The computer-readable storage medium stores a program for implementing information transmission, and when the program is executed by a processor, the steps of the MPI-based neural network architecture search parallelization method described in Embodiment 1 above are implemented.

[0093] From the above description of the implementation manners, those skilled in the art can clearly understand that the present application can be implemented by software together with the necessary general-purpose hardware, and of course it can also be implemented by hardware alone. Based on this understanding, the essence of the technical solution of this application, or the part that contributes to the related technologies, can be embodied in the form of a software product, and the computer software product can be stored in a computer-readable storage medium, such as a computer floppy disk, Read-on...

