Encoder-decoder framework pre-training method for neural machine translation

A machine translation and pre-training technology, applied in neural learning methods, natural language translation, biological neural network models, etc. It addresses problems such as the inability to initialize models, which limits the benefits of pre-trained models.

Embodiment Construction

[0037] The present invention will be further elaborated below in conjunction with the accompanying drawings of the description.

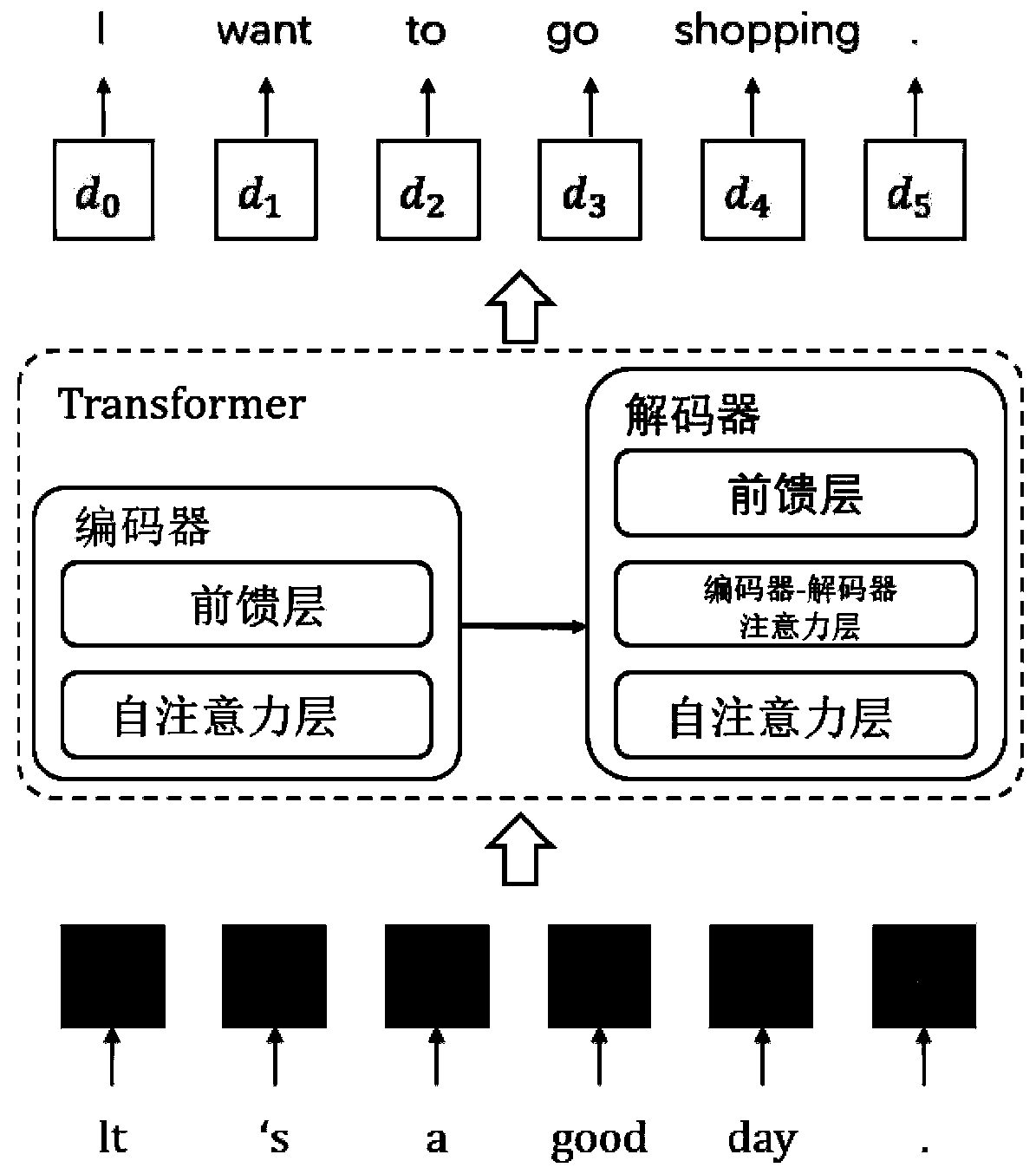

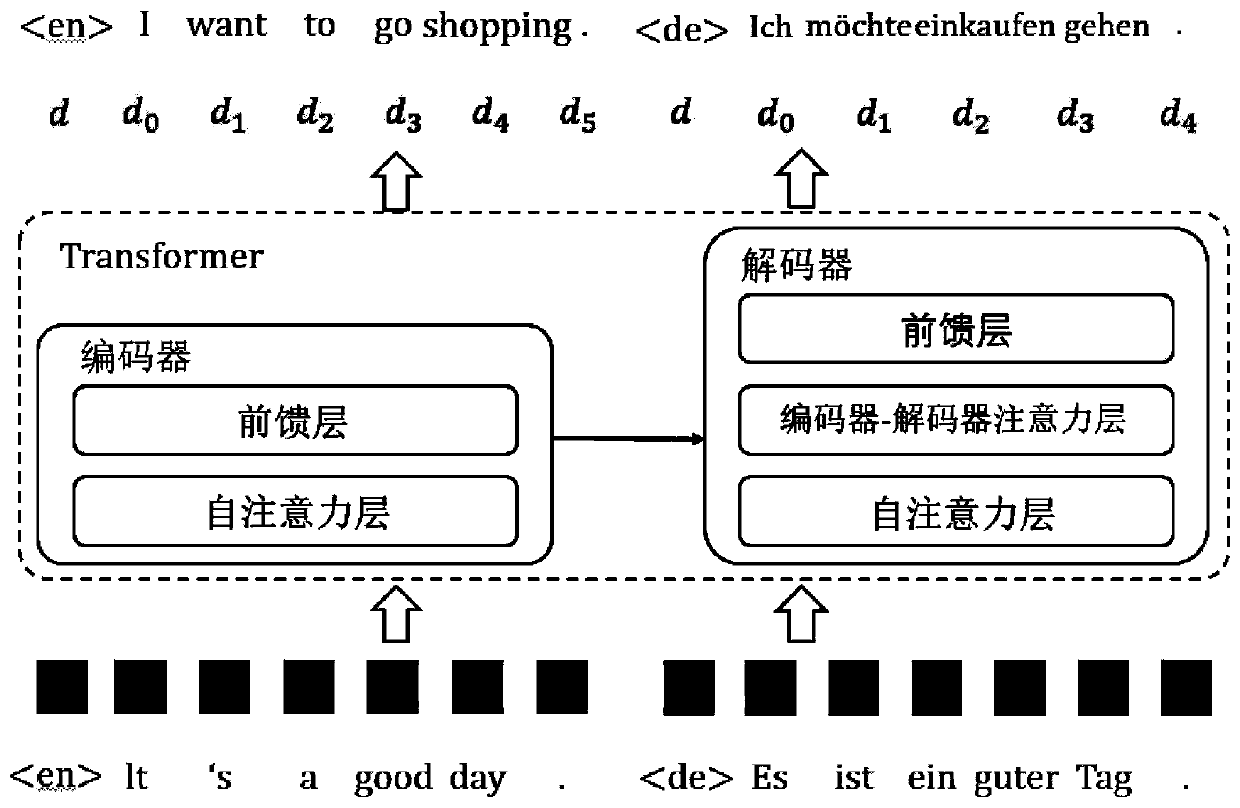

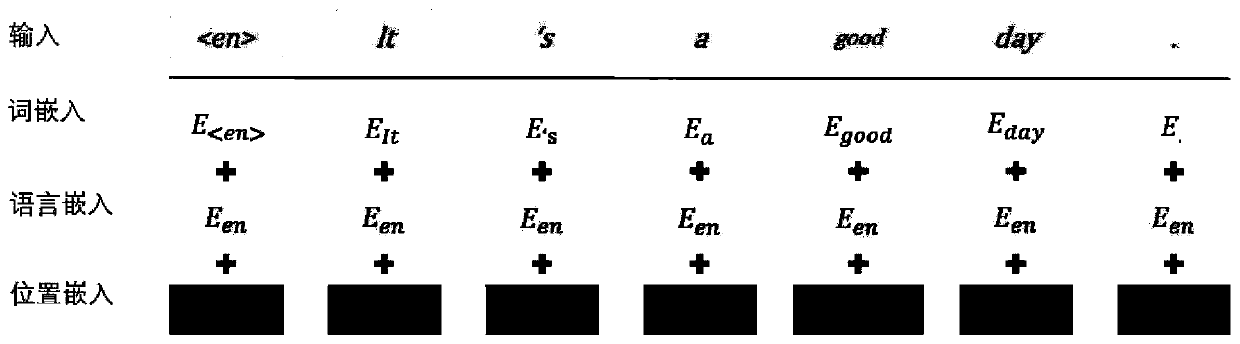

[0038] In the field of natural language processing, models based on the encoder-decoder framework are generally used for conditional generation tasks such as machine translation, text generation, and intelligent dialogue. Pre-trained models, however, require massive amounts of data, which means they can only be trained on unlabeled monolingual data. The approach draws on document-level machine translation, where encoding the context of a sentence helps translate that sentence because adjacent sentences generally share some semantic information. A neural machine translation model uses the encoder to extract information from the source-language input, and the decoder uses that extracted information to generate target-language text with the same meaning. Therefore, the present invention can use the preceding context of a sentence as...
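Paragraph [0038] is truncated, so the exact pre-training task is not stated here. The sketch below shows one plausible reading, and it is an assumption rather than the claimed method. From a monolingual document, each sentence is paired with the sentence or sentences that precede it. The encoder reads the preceding context and the decoder learns to generate the current sentence. The helper name `build_context_pairs` and its `window` parameter are hypothetical.

```python
from typing import List, Tuple


def build_context_pairs(sentences: List[str], window: int = 1) -> List[Tuple[str, str]]:
    """Pair each sentence with the `window` sentences that precede it.

    Hypothetical helper (an assumption, not the patented method): the
    encoder would read the preceding context (source side) and the
    decoder would be trained to generate the current sentence (target
    side). Both sides come from the same monolingual document.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    pairs = []
    # Start at index `window` so every target has a full context window.
    for i in range(window, len(sentences)):
        # Join the preceding sentences into a single encoder input.
        context = " ".join(sentences[i - window:i])
        pairs.append((context, sentences[i]))
    return pairs


doc = [
    "The cat sat on the mat.",
    "It was warm there.",
    "Then it fell asleep.",
]
for src, tgt in build_context_pairs(doc):
    print(f"encoder: {src!r} -> decoder: {tgt!r}")
```

This sketch skips sentences that lack a full context window, such as the first sentence of each document. An alternative design would pad these sentences with an empty context. Because the pairs need no labels, any large monolingual corpus that keeps document boundaries could supply them.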
