Video pedestrian re-identification method and system based on self-learning local feature representation

A pedestrian re-identification technique based on local features, applied in the field of pedestrian re-identification. It addresses the problems of inaccurately aligned representations and the resulting inability to determine accurately and clearly whether two pedestrians are the same person, and it achieves enhanced feature fusion and more clearly contrasting features.


Examples

Embodiment 1

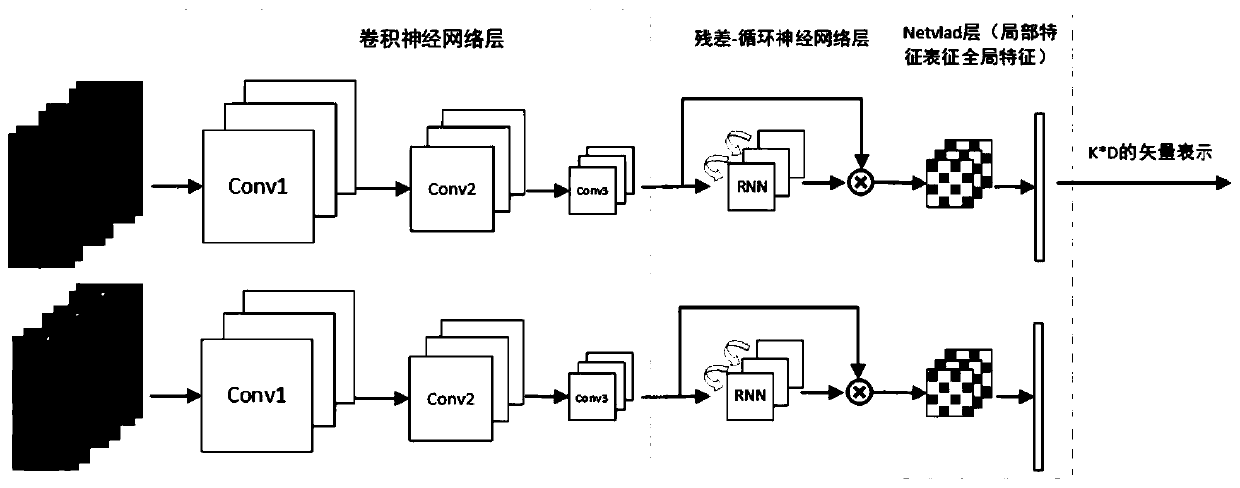

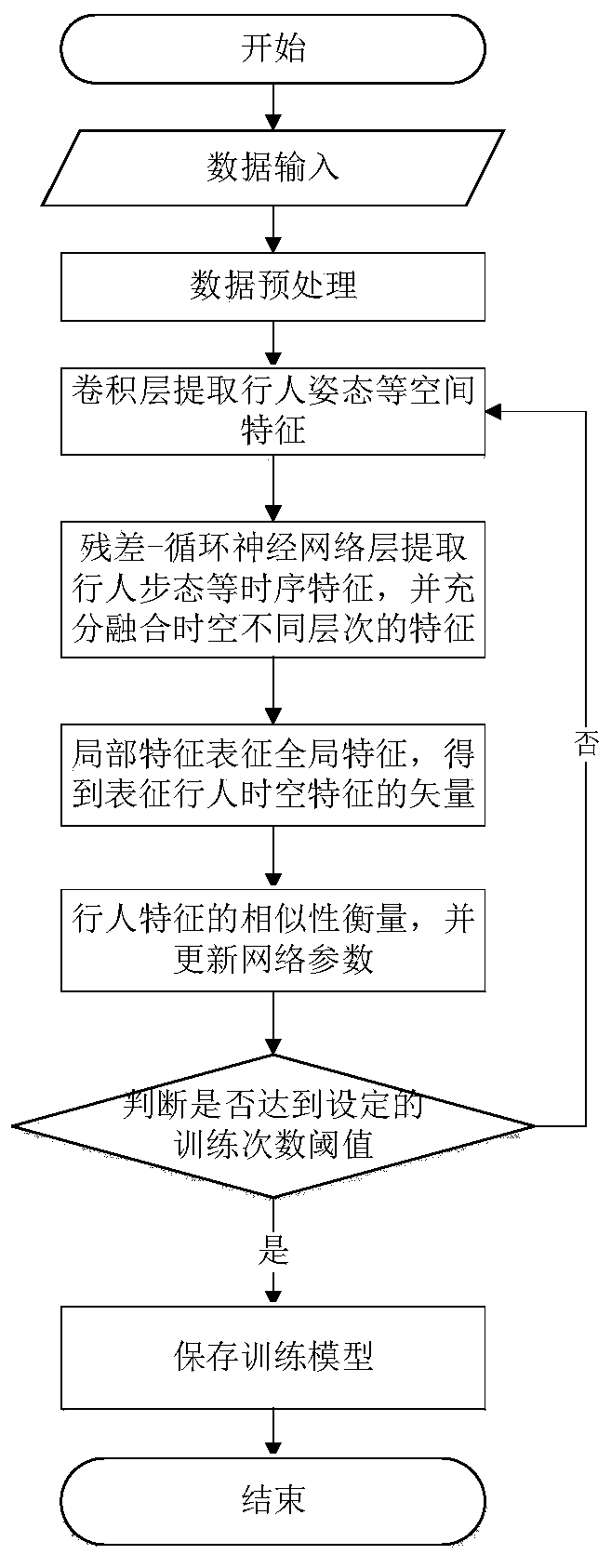

[0033] In one or more embodiments, a video pedestrian re-identification method based on self-learning deep local feature representation is disclosed. Built on a two-input Siamese network, the method extracts low-level spatial features of the pedestrian with a convolutional neural network and then extracts temporal features with a residual-recurrent neural network. The local features learned during training (i.e., the cluster centers) are used to represent the pedestrian's temporal and spatial features, yielding a global feature representation of the pedestrian in the video. The similarity of the pedestrian features is then measured to judge whether the pedestrians in the two videos are the same person.
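The excerpt does not say how the cluster centers are used to build the global representation. As a minimal sketch of one plausible reading, the code below soft-assigns each frame feature to every center and accumulates the weighted residuals (a VLAD-style aggregation, swapped in here as an assumption). The names `soft_assign`, `aggregate`, and the sharpness parameter `alpha` are hypothetical and do not come from the patent. The point it illustrates is that the output length depends only on the number of centers, so sequences of different lengths map to vectors whose slots line up center by center.

```python
import math


def soft_assign(feature, centers, alpha=1.0):
    """Softmax over negative squared distances from one feature to each center.

    `alpha` (hypothetical) controls how sharply a feature favors its nearest center.
    """
    logits = [
        -alpha * sum((f - c) ** 2 for f, c in zip(feature, center))
        for center in centers
    ]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(frame_features, centers, alpha=1.0):
    """Accumulate assignment-weighted residuals per center, then L2-normalize.

    Assumption: this VLAD-style scheme stands in for the patent's unspecified
    representation step. Output length is len(centers) * feature dimension.
    """
    dim = len(centers[0])
    residuals = [[0.0] * dim for _ in centers]
    for feat in frame_features:
        weights = soft_assign(feat, centers, alpha)
        for k, (w, center) in enumerate(zip(weights, centers)):
            for d in range(dim):
                residuals[k][d] += w * (feat[d] - center[d])
    flat = [v for row in residuals for v in row]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0  # avoid division by zero
    return [v / norm for v in flat]
```

Calling `aggregate` on a two-frame sequence and on a three-frame sequence with the same two 2-D centers returns a 4-element vector in both cases, which is what makes the two videos directly comparable.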

[0034] Referring to Figure 2, in this embodiment the video pedestrian re-identification method based on self-learning deep local feature representation includes the following steps:

[0035] Obtain two pieces of video information co...

Embodiment 2

[0066] In one or more embodiments, a video pedestrian re-identification system based on self-learning local feature representation is disclosed, including:

[0067] A device for acquiring, for each of two set time periods, video information containing continuously changing images of the pedestrian to be identified;

[0068] A device for processing the two acquired pieces of video information separately with a Siamese (twin) network structure to obtain aligned vectors representing the temporal and spatial features of the pedestrians;

[0069] A device for judging, by comparing the obtained vectors, whether the pedestrians in the two pieces of continuous image information are the same person, thereby realizing pedestrian re-identification.
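The comparison step can be illustrated with a small sketch. The excerpt does not name the distance metric or the decision rule, so the Euclidean distance and the `threshold` value below are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math


def euclidean_distance(a, b):
    """Euclidean distance between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def same_person(a, b, threshold=0.5):
    """Decide 'same person' when the distance falls below a threshold.

    Assumption: both the metric and the threshold are illustrative; in
    practice a threshold would be tuned on validation data.
    """
    return euclidean_distance(a, b) < threshold
```

Because the vectors from both branches are aligned and of equal length, a single element-wise distance is enough to compare the two videos.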

[0070] Here, the Siamese (twin) network structure consists of two networks with identical structure and shared parameters, and each of the networks includes:

[0071] A three-layer convolutional neural network for extracting spati...
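Parameter sharing between the two branches, as described in [0070], can be sketched by passing both inputs through one and the same encoder object. This is only a toy illustration: the linear `SharedEncoder` and the mean pooling over frames are hypothetical stand-ins, not the convolutional and recurrent layers of the patent.

```python
class SharedEncoder:
    """Toy linear encoder standing in for one branch of the Siamese network."""

    def __init__(self, weights):
        # One row of weights per output dimension (hypothetical parameters).
        self.weights = weights

    def encode(self, frame):
        """Map one frame feature vector to an output vector."""
        return [sum(w * x for w, x in zip(row, frame)) for row in self.weights]


def siamese_forward(encoder, seq_a, seq_b):
    """Run both sequences through the same encoder, so parameters are shared.

    Assumption: mean pooling over frames replaces the patent's temporal model.
    """

    def mean_pool(seq):
        encoded = [encoder.encode(frame) for frame in seq]
        count = len(encoded)
        return [sum(column) / count for column in zip(*encoded)]

    return mean_pool(seq_a), mean_pool(seq_b)
```

Since a single set of weights serves both inputs, identical sequences always produce identical vectors, and any update to the weights affects both branches equally.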

Embodiment 3

[0076] In one or more embodiments, a terminal device is disclosed that includes a server. The server includes a memory, a processor, and a computer program stored in the memory and executable on the processor. When the processor executes the program, it implements the video pedestrian re-identification method based on self-learning local feature representation disclosed in Embodiment 1; for the sake of brevity, the details are not repeated here.
