A convolutional network accelerator, configuration method, and computer-readable storage medium

A convolutional network accelerator and configuration method, applied in fields such as data exchange networks, digital transmission systems, and electrical components. It addresses the problems of FPGA resources not being fully used, the lack of a general implementation scheme, and the absence of time-division multiplexing. It achieves flexible configurability, a balance between time and accelerator operation time, and increased bandwidth.

Embodiment

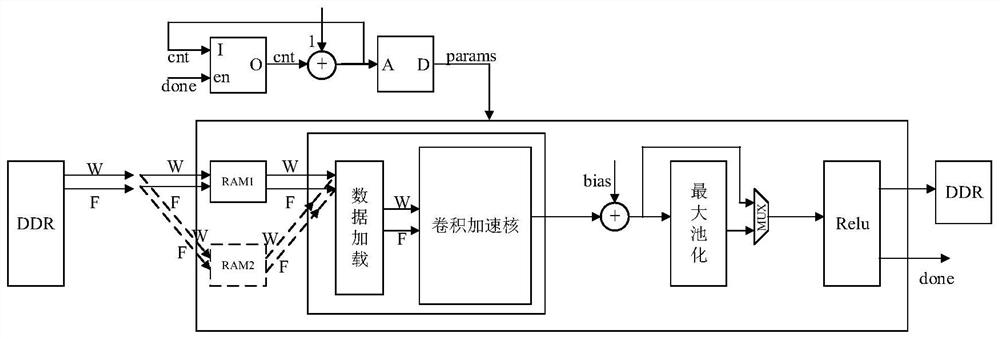

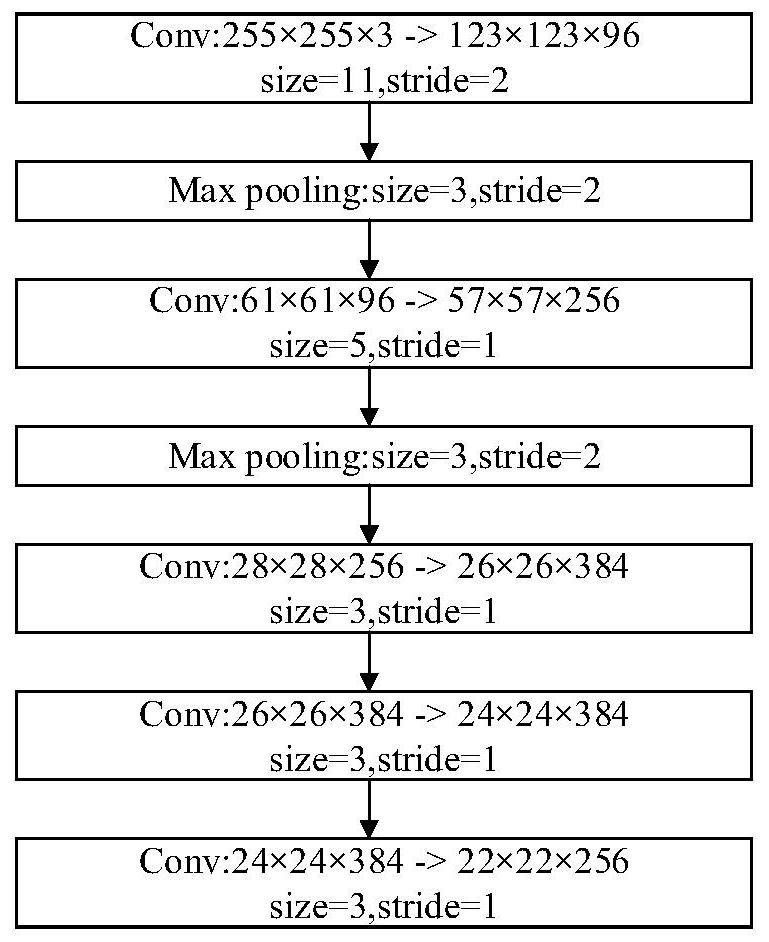

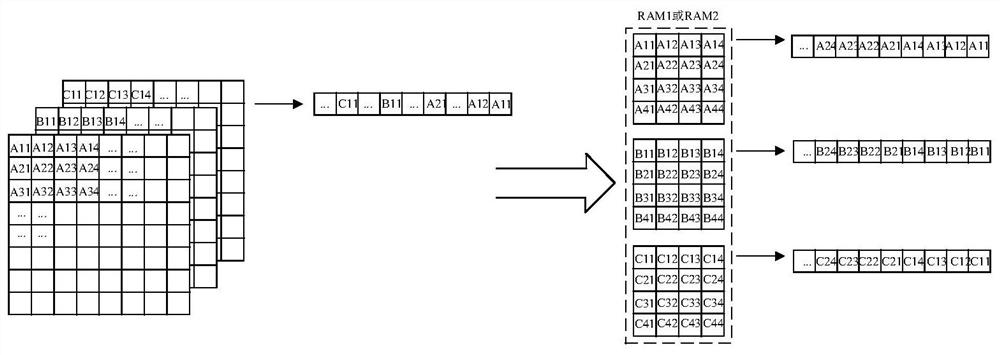

[0068] The FPGA-based convolutional network accelerator provided by the embodiment of the present invention is built on the basic structure of a single-layer convolutional network, that is, convolutional layer + pooling layer + activation layer + batch normalization layer. For each layer of the overall network model, the forward network layer being executed obtains the configuration parameters of the current layer, such as the size of the input and output feature maps (length, width, number of channels), the size of the convolution kernel (length, width, number of channels), and the stride of the convolution and pooling operations. It then loads feature maps and weight parameters in batches from DDR (double data rate off-chip memory) according to those configuration parameters. The acceleration kernel of the convolutional layer can also set its degree of parallelism according to the configuration parameters.
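The per-layer configuration flow in [0068] can be illustrated with a small host-side sketch. This is only an illustration under stated assumptions, not the implementation described in the patent: `LayerConfig`, its field names, `output_shape`, `channel_batches`, and `plan_network` are all hypothetical, convolution and pooling are assumed to use no padding, and "degree of parallelism" is assumed to mean the number of output channels processed per batch.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass(frozen=True)
class LayerConfig:
    """Hypothetical per-layer configuration; all field names are assumptions."""
    in_h: int          # input feature map length
    in_w: int          # input feature map width
    in_ch: int         # input channels (also the kernel channel count)
    out_ch: int        # output channels (number of kernels)
    kernel_h: int
    kernel_w: int
    conv_stride: int
    pool_size: int     # pooling window; 1 with pool_stride 1 means no pooling
    pool_stride: int
    parallelism: int   # assumed: output channels computed per batch


def out_size(size: int, window: int, stride: int) -> int:
    """Output length of a sliding window, assuming no padding."""
    return (size - window) // stride + 1


def output_shape(cfg: LayerConfig) -> Tuple[int, int, int]:
    """Shape (length, width, channels) after convolution followed by pooling."""
    conv_h = out_size(cfg.in_h, cfg.kernel_h, cfg.conv_stride)
    conv_w = out_size(cfg.in_w, cfg.kernel_w, cfg.conv_stride)
    return (
        out_size(conv_h, cfg.pool_size, cfg.pool_stride),
        out_size(conv_w, cfg.pool_size, cfg.pool_stride),
        cfg.out_ch,
    )


def channel_batches(cfg: LayerConfig) -> Iterator[Tuple[int, int]]:
    """Yield [start, end) output-channel ranges, one per weight batch."""
    for start in range(0, cfg.out_ch, cfg.parallelism):
        yield start, min(start + cfg.parallelism, cfg.out_ch)


def plan_network(layers: List[LayerConfig]):
    """For each layer in order, record its output shape and its batch ranges."""
    return [(output_shape(cfg), list(channel_batches(cfg))) for cfg in layers]
```

In this sketch, each entry in the list passed to `plan_network` plays the role of one layer's configuration parameters, and each range from `channel_batches` stands in for one batch of weights that would be fetched from DDR before the kernel runs.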

[0069] The present in...
