Data-driven real-time hand action evaluation method based on RGB video

A data-driven hand action evaluation technology, applied to computer components, instruments, biological neural network models, etc., that improves accuracy, robustness, and computational efficiency.


AI Technical Summary

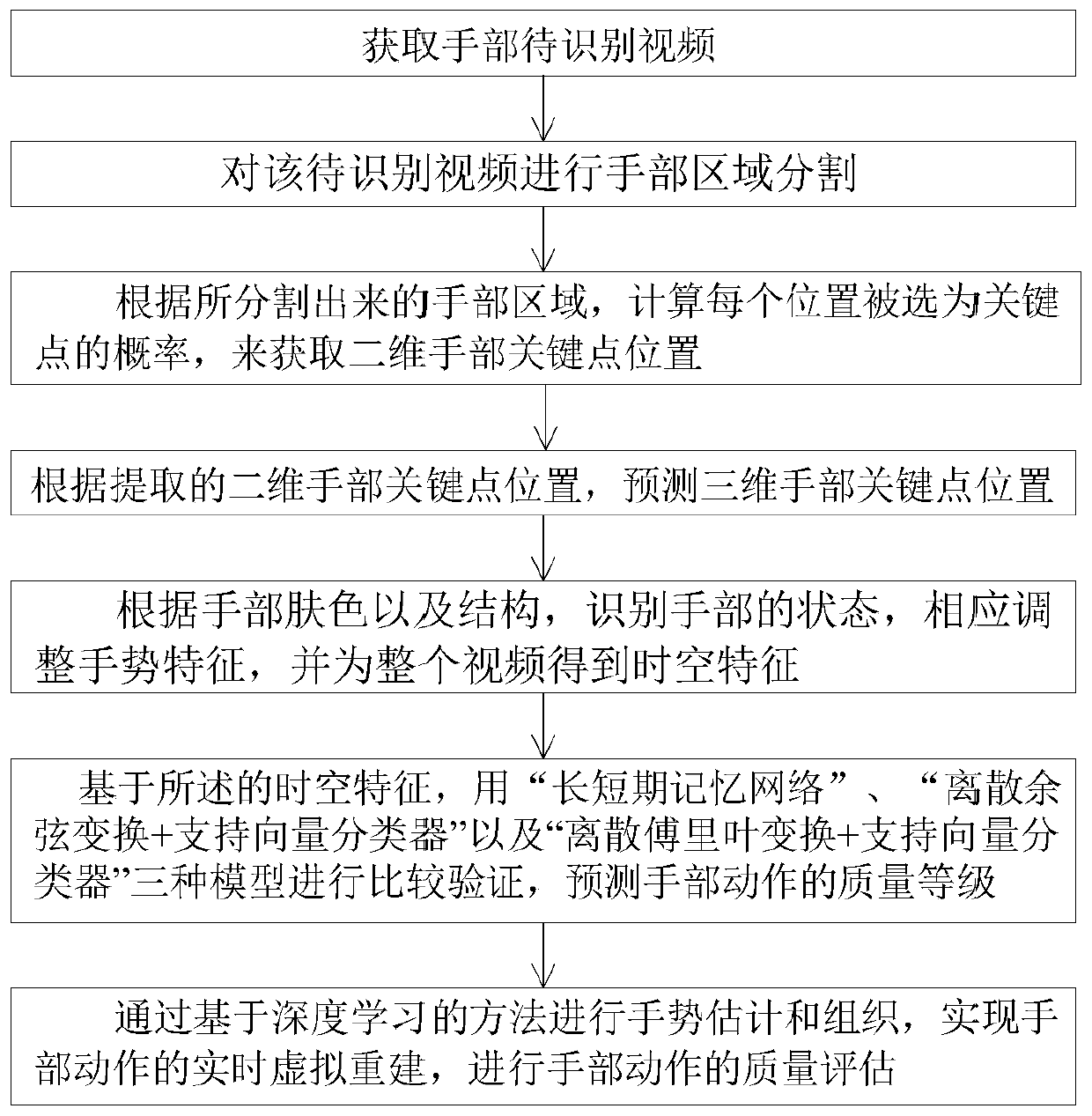

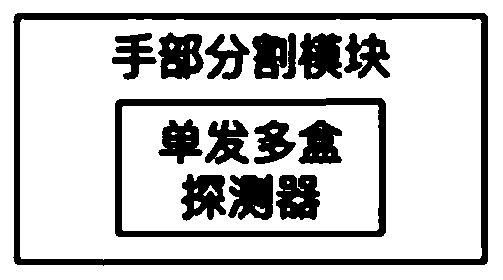

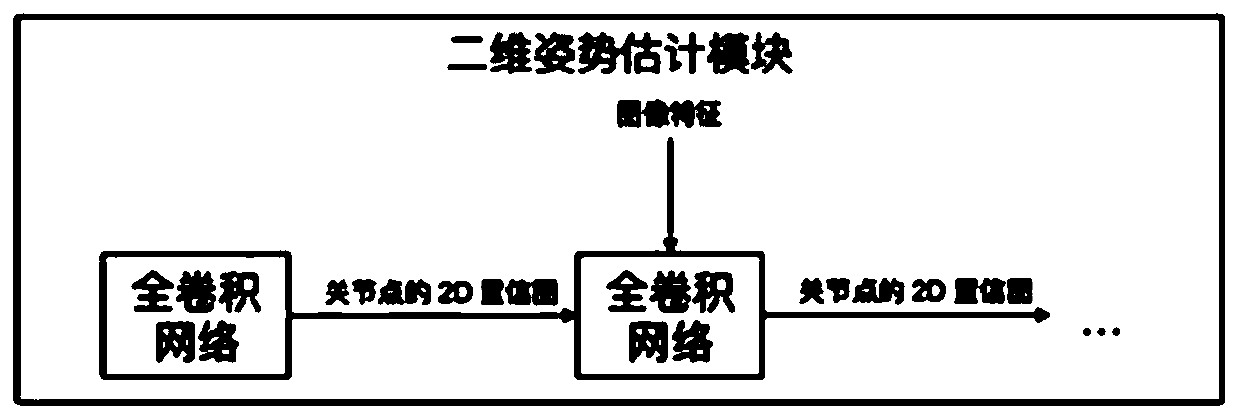


Embodiment Construction

[0062] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

[0063] Image depth (also called pixel depth) refers to the number of bits used to store each pixel, and it also measures the color resolution of an image. It determines how many colors each pixel of a color image can take, or how many gray levels each pixel of a grayscale image can take. It therefore sets the maximum number of colors that can appear in a color image, or the maximum gray level of a grayscale image. Although the pixel depth or image depth can be very large, the color depth of display devices is limited. For example, standard VGA supports 4-bit, 16-color images, and at least 8-bit, 256-color images are recommended for multimedia applications. Because of equipment limitations and the limited resolving power of the human eye, it is generally not necessary to pursue a ...
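The relationship described above is that a pixel with a depth of b bits can hold 2^b distinct values, so 4 bits gives 16 colors and 8 bits gives 256. The short sketch below illustrates this arithmetic. The helper names `max_levels` and `bits_needed` are hypothetical and are not part of the patent's method.

```python
def max_levels(bits_per_pixel: int) -> int:
    """Number of distinct colors or gray levels a pixel of this bit depth can hold."""
    if bits_per_pixel < 1:
        raise ValueError("bit depth must be a positive integer")
    return 2 ** bits_per_pixel


def bits_needed(num_levels: int) -> int:
    """Smallest bit depth that can represent num_levels distinct values (at least 1 bit)."""
    if num_levels < 1:
        raise ValueError("number of levels must be a positive integer")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 2, computed exactly on integers.
    return max(1, (num_levels - 1).bit_length())


if __name__ == "__main__":
    # The two cases mentioned in the text: 4-bit VGA color and 8-bit multimedia color.
    for bits in (4, 8):
        print(f"{bits}-bit -> {max_levels(bits)} colors")
```

Using integer `bit_length()` instead of `math.log2` avoids floating-point rounding when computing the required depth.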
