Lightweight CNN model calculation accelerator based on FPGA

A model computing accelerator technology, applied in the field of lightweight CNN model computing accelerators, that addresses problems such as the slow running speed of accelerators and achieves low power consumption, faster computation, and improved computing efficiency.


Examples

Specific Embodiment 1

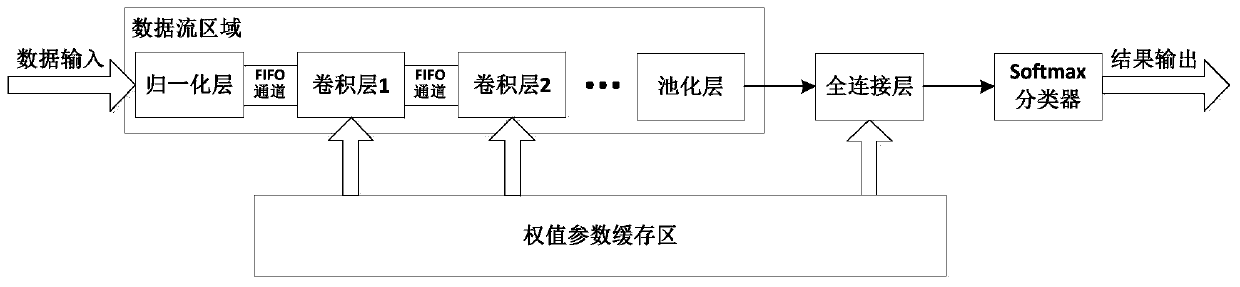

[0033] Specific Embodiment 1: This embodiment is described in detail with reference to Figure 1. The FPGA-based lightweight CNN model calculation accelerator of this embodiment comprises a weight buffer, a normalization layer, a convolutional layer, a pooling layer, a fully connected layer, and a Softmax classifier.

[0034] One feature of the present invention is a layer fusion strategy: adjacent convolution, batch normalization (Batch Norm, hereinafter BN), and activation operations in the neural network model, which would otherwise be independent functional units, are fused, optimized, and merged into a single unified convolutional layer.
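The fusion of convolution, BN, and activation can be illustrated in software. The following is a minimal sketch, not the patented hardware design: it assumes inference-time BN with fixed statistics, a single input channel, a single kernel, and ReLU as the activation. All function names (`conv2d`, `batch_norm`, `relu`, `fold_bn`, `fused_conv_bn_relu`) are hypothetical and chosen only for illustration. The BN scale and shift are folded into the convolution weights and bias, so one pass produces the same result as three separate stages.

```python
import math

def conv2d(x, w, b):
    """Valid 2D convolution (cross-correlation) of one channel with one kernel."""
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[b + sum(w[i][j] * x[r + i][c + j]
                     for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-time BN with fixed statistics (assumption: per-channel scalars)."""
    s = gamma / math.sqrt(var + eps)
    return [[(v - mean) * s + beta for v in row] for row in y]

def relu(y):
    """ReLU activation (assumed here; the source does not name the activation)."""
    return [[max(0.0, v) for v in row] for row in y]

def fold_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold BN parameters into convolution weights and bias."""
    s = gamma / math.sqrt(var + eps)
    w_f = [[wij * s for wij in row] for row in w]
    b_f = (b - mean) * s + beta
    return w_f, b_f

def fused_conv_bn_relu(x, w_f, b_f):
    """Single fused layer: convolution with folded parameters, then ReLU."""
    return relu(conv2d(x, w_f, b_f))
```

Because the folding is done once, ahead of time, the fused layer needs no separate normalization stage at run time, which is the kind of saving a layer fusion strategy aims for.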

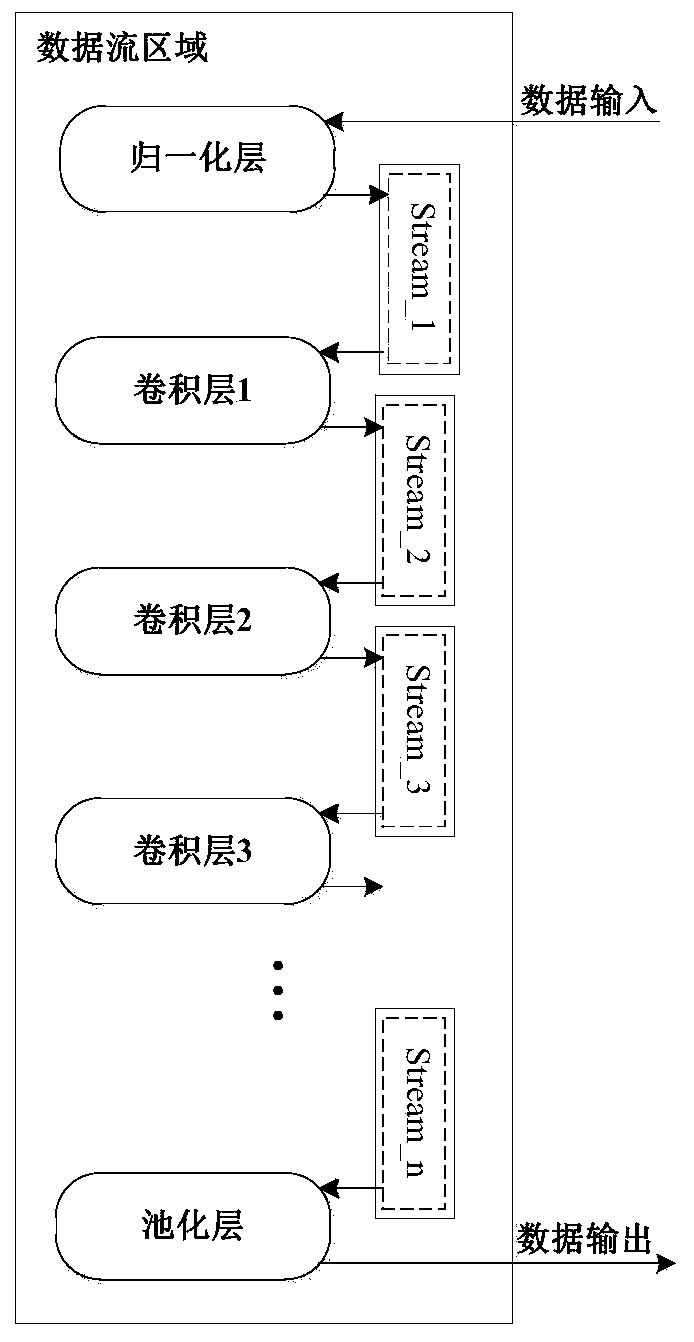

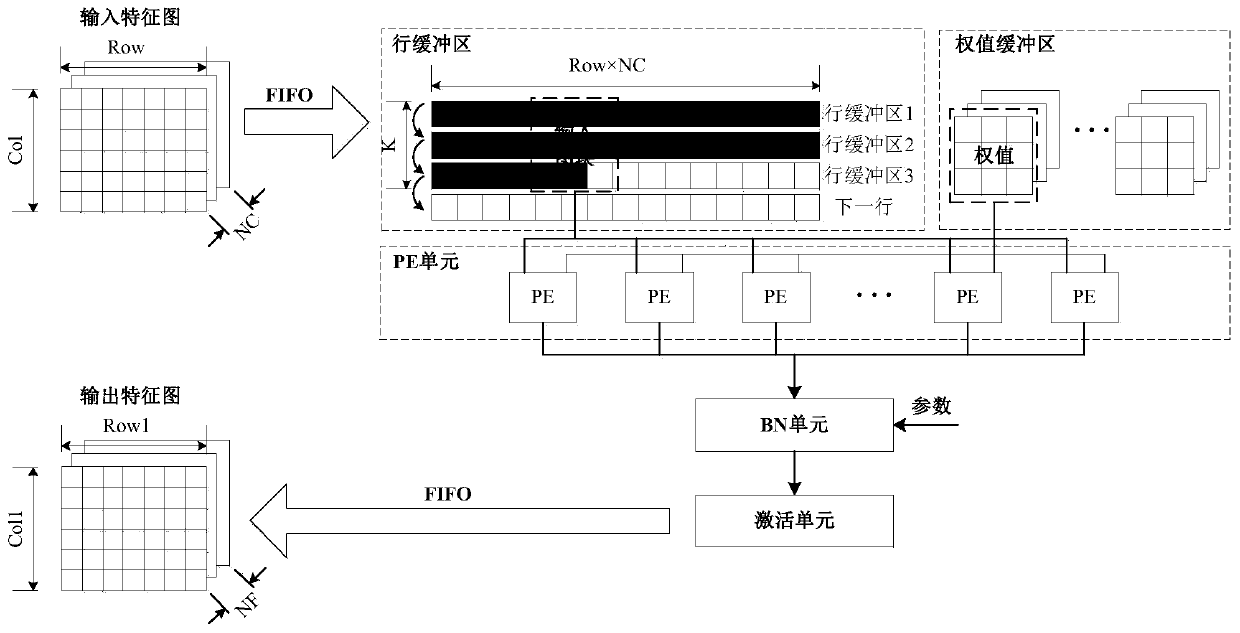

[0035] Another feature of the present invention is the acceleration-oriented design of the PE unit in the convolutional layer. Through two steps, a line buffer design and an intra-layer pipeline strategy, the data is guaranteed to pass through as a data stream...
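The general idea of a line buffer can be sketched in software. The following is a behavioral model under stated assumptions, not the circuit described in the patent: pixels arrive one at a time in raster order, `k - 1` row-length FIFOs hold the previous rows, and a `k x k` window register shifts by one column per pixel. The function name `sliding_windows` and its parameters are hypothetical.

```python
from collections import deque

def sliding_windows(pixels, width, k=3):
    """Yield (row, col, window) for each valid k x k window of a raster-order stream.

    `row`, `col` are the coordinates of the window's top-left pixel.
    """
    # k-1 line buffers, each a FIFO that delays the stream by one image row.
    lines = [deque([0] * width, maxlen=width) for _ in range(k - 1)]
    # k x k window register, shifted left by one column per incoming pixel.
    window = [[0] * k for _ in range(k)]
    for idx, p in enumerate(pixels):
        r, c = divmod(idx, width)
        # Heads of the FIFOs give the pixels directly above the current one:
        # rows r-k+1 .. r-1 at column c; the current pixel supplies row r.
        col = [lb[0] for lb in lines] + [p]
        # Advance the FIFO chain: each buffer takes the value one row below it.
        for i in range(k - 1):
            lines[i].append(col[i + 1])
        # Shift the new column into the window register.
        for i in range(k):
            window[i] = window[i][1:] + [col[i]]
        # The window is valid once k rows and k columns have been seen.
        if r >= k - 1 and c >= k - 1:
            yield r - k + 1, c - k + 1, [row[:] for row in window]
```

In this model each input pixel is read from the stream exactly once, and after the initial fill a complete window is available for every new pixel, which is what allows a convolution unit to consume data as a continuous stream.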
