Video denoising method based on voxel-level non-local model

A non-local video denoising technology in the field of video processing. It addresses problems of existing methods such as unsatisfactory denoising, poor results, and the inability to preserve video details.

AI Technical Summary

Problems solved by technology

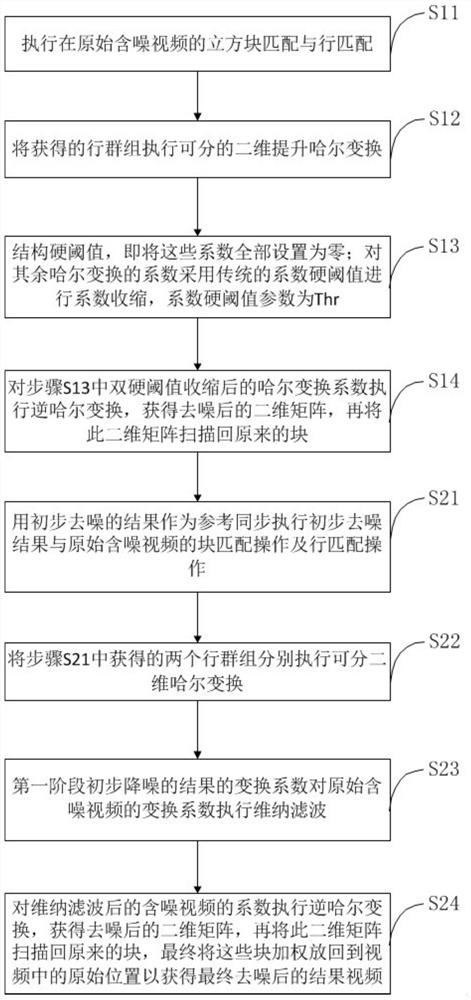

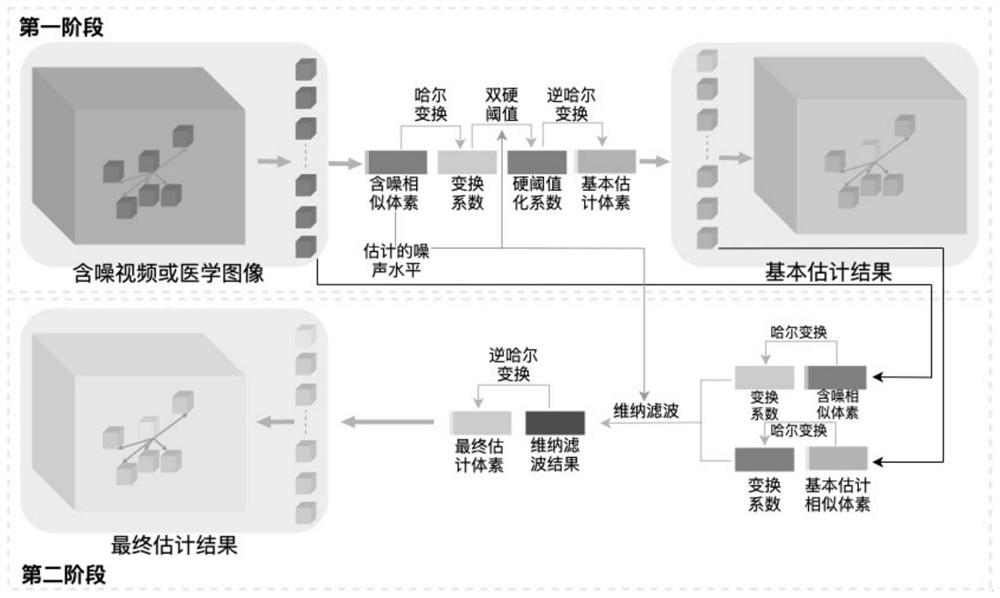

Examples

Embodiment 1

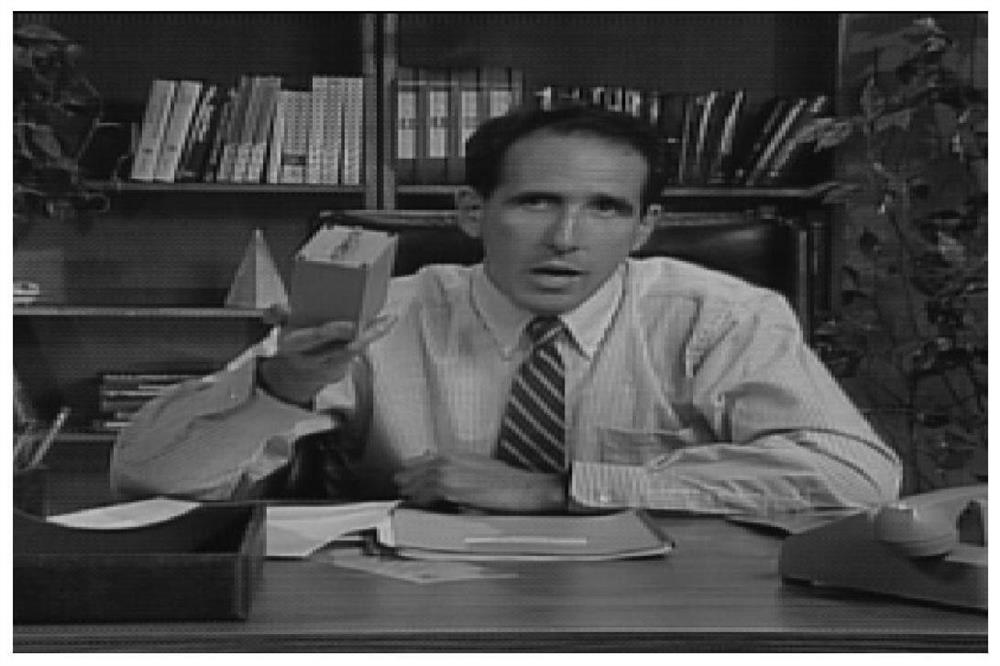

[0065] As shown in Figure 2 to Figure 5, the video denoising experiments for the method of the present invention were carried out in MATLAB on the video dataset of reference 1 in the background art (comprising 8 video sequences), downloaded from http://www.cs.tut.fi/~foi/GCF-BM3D/.

[0066] The comparison of video frames shows that the method of the present invention not only removes noise from the video effectively but also preserves the details of the video well.

[0067] Table 1 compares the PSNR values of the video denoising results of the method of the present invention with those of the VBM3D and VBM4D methods described in the background art. In each cell, the bottom value is the result of the present invention, the middle value is the result of the VBM4D method, and the top value is the result of the VBM3D method; the best of all the values is indicated ...
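The text does not give the PSNR formula used for Table 1. The following is a minimal sketch of the standard definition, PSNR = 10·log10(peak² / MSE), under the following assumptions. Videos are 8-bit (peak = 255). Each video is flattened into one sequence of pixel values. The MSE is taken over the whole sequence rather than averaged per frame, and the source does not say which convention it follows. The names `psnr` and `best_method` are hypothetical helpers, not part of the patented method. `best_method` mirrors how the best of the three values in each cell would be identified, since a higher PSNR is better.

```python
import math
from typing import Sequence


# Assumption: a video is a flat sequence of pixel intensities
# (all frames concatenated), with 8-bit range by default.
def psnr(reference: Sequence[float], estimate: Sequence[float], peak: float = 255.0) -> float:
    """PSNR in dB between a clean reference video and a denoised estimate."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("videos must be non-empty and the same size")
    # Mean squared error over every pixel of the whole sequence.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical videos: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)


def best_method(scores: dict[str, float]) -> str:
    """Return the method name with the highest PSNR (higher is better)."""
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Synthetic illustration only: every pixel off by 10 gives MSE = 100.
    clean = [100.0] * 8
    denoised = [110.0] * 8
    print(f"{psnr(clean, denoised):.2f} dB")  # → 28.13 dB
```

For real sequences, a NumPy array version would be faster, but the arithmetic is the same.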

