Sound scene classification method based on width and depth neural network

A sound scene classification technique based on a deep neural network, applied in the field of machine hearing. It addresses problems such as long training time, difficulty in improving classification accuracy, and difficulty in meeting practical requirements.

Embodiment

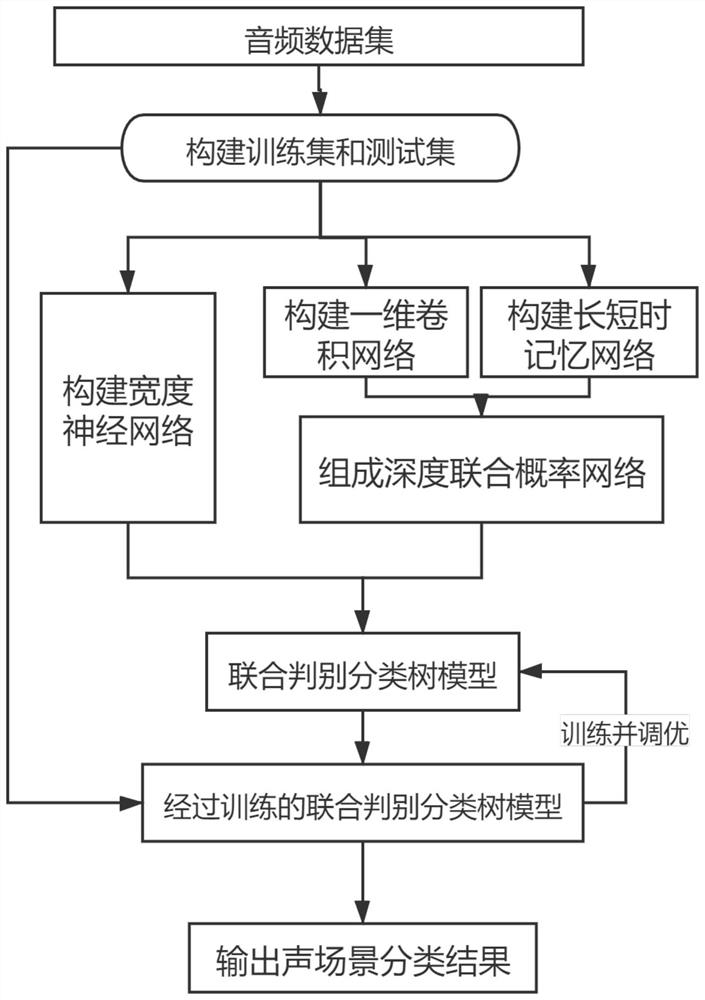

[0066] This embodiment discloses a sound scene classification method based on a width and depth neural network. A schematic flowchart of the method is shown in Figure 1; it includes the following specific steps:

[0067] S1. Create an audio data set:

[0068] S1.1. The DCASE 2018 Task 5 public data set provides the audio samples for the audio data set. The data set continuously records sound events in a home environment over one week and contains 9 sound scene classes and 72984 audio samples. Every sample is 10 s long, with a sampling rate of 16 kHz and the same number of quantization bits;

[0069] S1.2. Extract the 20-dimensional log-Mel spectrum feature of each audio sample in the audio data set, so that each audio sample corresponds to a feature map of 20×399 pixels; then, after mean normalization, the map is conver...
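
The feature extraction in S1.2 can be sketched roughly as follows. This is an illustrative sketch, not the patent's implementation. The sampling rate (16 kHz), clip length (10 s), and 20 Mel bands come from the text. The 50 ms window, 25 ms hop, 1024-point FFT, Hann window, and per-band mean subtraction are assumptions, chosen because a 10 s clip framed without padding then yields 1 + (160000 - 800) / 400 = 399 frames, which matches the stated 20×399 map. All function names are hypothetical.

```python
import numpy as np

SR = 16_000        # sampling rate stated in the text (16 kHz)
DURATION_S = 10    # clip length stated in the text (10 s)
N_MELS = 20        # feature dimension stated in the text
WIN = 800          # ASSUMPTION: 50 ms analysis window
HOP = 400          # ASSUMPTION: 25 ms hop (50% overlap)
N_FFT = 1024       # ASSUMPTION: FFT size (frames are zero-padded to this)


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    """Triangular filters spaced evenly on the Mel scale from 0 to sr/2."""
    hz_pts = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        lo, ctr, hi = hz_pts[i], hz_pts[i + 1], hz_pts[i + 2]
        rising = (fft_freqs - lo) / (ctr - lo)
        falling = (hi - fft_freqs) / (hi - ctr)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def log_mel(signal):
    """Return a (N_MELS, n_frames) log-Mel map; no padding at the clip edges."""
    n_frames = 1 + (len(signal) - WIN) // HOP
    idx = np.arange(WIN)[None, :] + HOP * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(WIN)
    power = np.abs(np.fft.rfft(frames, n=N_FFT, axis=1)) ** 2
    mel = mel_filterbank(N_MELS, N_FFT, SR) @ power.T
    return np.log(mel + 1e-10)  # small offset avoids log(0)


def mean_normalize(feat):
    """ASSUMPTION: subtract the mean of each Mel band over time."""
    return feat - feat.mean(axis=1, keepdims=True)


# Synthetic noise stands in for one 10 s audio sample.
clip = np.random.default_rng(0).standard_normal(SR * DURATION_S)
features = mean_normalize(log_mel(clip))
print(features.shape)  # (20, 399)
```

Other window and hop combinations also produce 399 frames, so the framing shown here should be read as one plausible choice rather than the parameters actually used.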
