Pruning method of deep convolutional neural network, computer equipment and application method

A deep convolutional neural network technology, applied in the field of deep convolutional neural networks, that addresses problems such as the inability to accelerate the network.

Examples

Embodiment 1

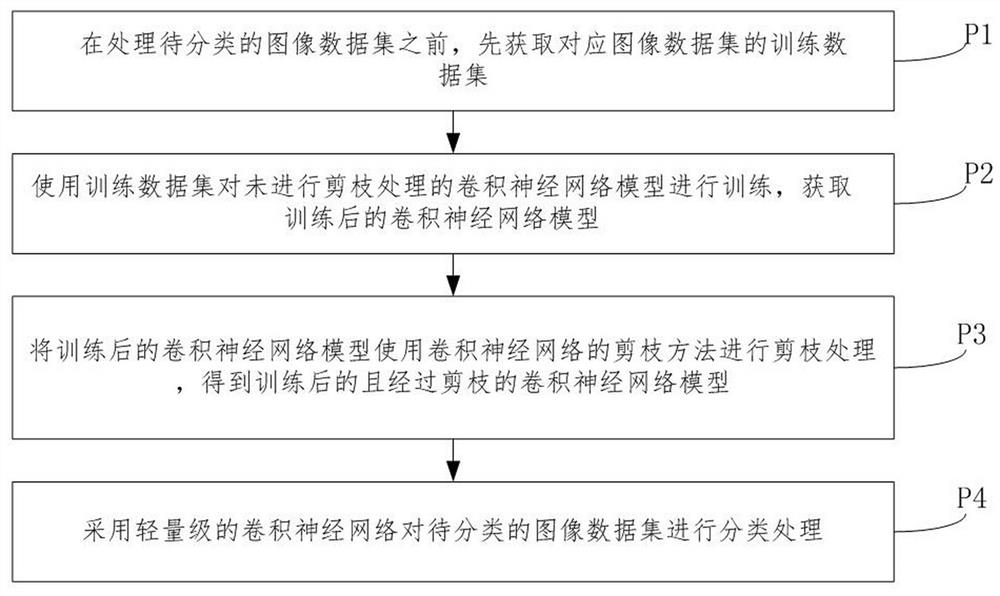

[0106] As shown in Figure 1, this embodiment of the present invention illustrates a processing flow in which a pruned deep convolutional neural network is applied to an arbitrary image data set. The specific process may include the following steps:

[0107] S1. Before processing the image data set to be classified, first obtain the training data set corresponding to that image data set;

[0108] Typically, the training data set includes a training set and a test set, and the images in both sets are pre-labeled;

[0109] S2. Train the unpruned convolutional neural network model on the training data set to obtain the trained convolutional neural network model (i.e., the trained network structure);

[0110] S3. Prune the trained convolutional neural network model with the convolutional neural network pruning method to obtain a trained and pruned convolutional neural network model, which is a lightweight model;

[0111] S4. Using a lightwei...
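Why the pruned model from S3 is lightweight can be seen from a parameter count. The sketch below is a minimal illustration, assuming a plain chain of standard convolution layers (no fully connected layers, no skip connections) with hypothetical layer sizes; `conv_params` and `chain_params` are illustrative helpers, not part of the described method. Removing a filter from one layer also removes an input channel from the next layer, so halving every layer's filters removes roughly three quarters of the convolution parameters.

```python
from typing import List, Tuple


def conv_params(in_ch: int, out_ch: int, k: int, bias: bool = True) -> int:
    """Number of learnable parameters in one k x k convolution layer."""
    return out_ch * in_ch * k * k + (out_ch if bias else 0)


def chain_params(in_ch: int, layers: List[Tuple[int, int]]) -> int:
    """Total parameters of a plain chain of convolution layers.

    layers: (out_channels, kernel_size) per layer; each layer's input
    channel count is the previous layer's output channel count.
    """
    total = 0
    for out_ch, k in layers:
        total += conv_params(in_ch, out_ch, k)
        in_ch = out_ch  # pruned filters also shrink the next layer's input
    return total


# Hypothetical 3-layer network on RGB input, before and after halving every layer's filters.
original = [(64, 3), (128, 3), (256, 3)]
pruned = [(32, 3), (64, 3), (128, 3)]

before = chain_params(3, original)
after = chain_params(3, pruned)
print(before, after, f"{1 - after / before:.1%} fewer parameters")
```

The saving is slightly below 75% because the first layer's RGB input channels and the bias terms shrink only linearly, not quadratically.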

Embodiment 2

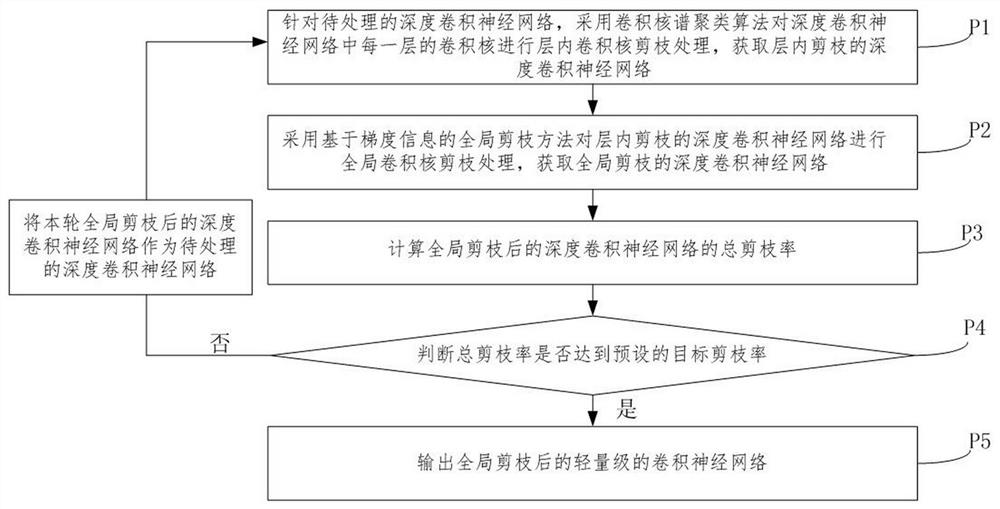

[0117] As shown in Figure 2, this embodiment of the present invention provides a pruning method for a deep convolutional neural network; the method may include the following steps.

[0118] P1. For the deep convolutional neural network to be processed, apply the convolution kernel spectral clustering algorithm to perform intra-layer pruning of the convolution kernels in each layer, and obtain the intra-layer pruning value of the deep convolutional neural network;

[0119] P2. Apply the gradient-information-based global pruning method to perform global convolution kernel pruning on the intra-layer-pruned deep convolutional neural network, obtaining a globally pruned deep convolutional neural network;

[0120] P3. Calculate the total pruning rate of the globally pruned deep convolutional neural network;
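P1 names a convolution kernel spectral clustering algorithm, but this excerpt does not give its details. As a hedged illustration, the sketch below assumes the common Ng-Jordan-Weiss recipe: Gaussian similarity between flattened filters, a normalized affinity matrix, its top-k eigenvectors, k-means on the embedded rows, and one surviving representative per cluster (here, the filter nearest its cluster mean). The names `prune_layer`, `spectral_clusters`, `keep` and `sigma` are hypothetical. The code is dependency-free for readability; a practical version would use a library eigensolver such as `numpy.linalg.eigh` instead of the hand-written Jacobi routine.

```python
import math
from typing import List, Tuple

Vec = List[float]


def dist2(a: Vec, b: Vec) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def jacobi_eigen(m: List[Vec], sweeps: int = 50) -> Tuple[Vec, List[Vec]]:
    """Eigen-decomposition of a symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, v); column j of v is the eigenvector for eigenvalue j.
    """
    n = len(m)
    a = [row[:] for row in m]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < 1e-20:
            break  # off-diagonal part has vanished
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                theta = (a[q][q] - a[p][p]) / (2 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1))
                c = 1 / math.sqrt(t * t + 1)
                s = t * c
                for k in range(n):  # A <- A P and V <- V P (columns p and q)
                    a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
                    v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
                for k in range(n):  # A <- P^T A (rows p and q), zeroing a[p][q]
                    a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    return [a[i][i] for i in range(n)], v


def spectral_clusters(filters: List[Vec], k: int, sigma: float) -> List[int]:
    """Ng-Jordan-Weiss style spectral clustering of flattened filters into k groups."""
    n = len(filters)
    w = [[0.0 if i == j else math.exp(-dist2(filters[i], filters[j]) / (2 * sigma ** 2))
          for j in range(n)] for i in range(n)]
    deg = [sum(row) + 1e-12 for row in w]
    aff = [[w[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    vals, vecs = jacobi_eigen(aff)
    top = sorted(range(n), key=lambda j: vals[j], reverse=True)[:k]
    emb = [[vecs[i][j] for j in top] for i in range(n)]  # one k-dim row per filter
    emb = [[x / (math.sqrt(sum(y * y for y in r)) or 1.0) for x in r] for r in emb]
    # k-means on the embedded rows, seeded deterministically by farthest-point selection
    centers = [emb[0]]
    while len(centers) < k:
        centers.append(max(emb, key=lambda p: min(dist2(p, c) for c in centers)))
    labels: List[int] = []
    for _ in range(100):
        labels = [min(range(k), key=lambda c: dist2(p, centers[c])) for p in emb]
        new = []
        for c in range(k):
            members = [p for p, lab in zip(emb, labels) if lab == c]
            new.append([sum(col) / len(members) for col in zip(*members)] if members else centers[c])
        if new == centers:
            break
        centers = new
    return labels


def prune_layer(filters: List[Vec], keep: int, sigma: float = 1.0) -> Tuple[List[int], List[int]]:
    """Cluster a layer's filters and keep the filter nearest each cluster mean."""
    labels = spectral_clusters(filters, keep, sigma)
    kept = []
    for c in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == c]
        mean = [sum(col) / len(idx) for col in zip(*(filters[i] for i in idx))]
        kept.append(min(idx, key=lambda i: dist2(filters[i], mean)))
    pruned = [i for i in range(len(filters)) if i not in kept]
    return sorted(kept), pruned


# Six hypothetical 2-weight "filters" forming three near-duplicate pairs.
layer = [[1.0, 0.0], [1.05, 0.0], [0.0, 1.0], [0.0, 1.05], [-1.0, -1.0], [-1.05, -1.0]]
kept, pruned = prune_layer(layer, keep=3, sigma=0.5)
print(kept, pruned)
```

Each near-duplicate pair collapses to one surviving filter, which is the intuition behind clustering-based intra-layer pruning: redundant kernels are removed while one representative of each group is kept.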
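The excerpt does not state which gradient criterion P2 uses. As a hedged stand-in, the sketch below scores each filter with the first-order Taylor importance |sum(w * dL/dw)|, ranks the filters of all layers together, and marks the lowest-scoring fraction for removal. The `Filter` tuples, the `rate` parameter, the one-filter-per-layer safeguard and the example numbers are assumptions of this sketch; in a real network the gradients would come from backpropagation on training batches.

```python
from typing import Dict, List, Tuple

# (flattened filter weights, gradient of the loss w.r.t. those weights)
Filter = Tuple[List[float], List[float]]


def taylor_score(weights: List[float], grads: List[float]) -> float:
    """First-order Taylor estimate of the loss change if the filter were zeroed."""
    return abs(sum(w * g for w, g in zip(weights, grads)))


def global_prune(layers: Dict[str, List[Filter]], rate: float) -> Dict[str, List[int]]:
    """Rank all filters of all layers together and mark the lowest-scoring fraction for removal."""
    scored = sorted(
        (taylor_score(w, g), name, idx)
        for name, filters in layers.items()
        for idx, (w, g) in enumerate(filters)
    )  # least important first
    budget = int(rate * len(scored))
    remaining = {name: len(filters) for name, filters in layers.items()}
    pruned: Dict[str, List[int]] = {name: [] for name in layers}
    for _, name, idx in scored:
        if budget == 0:
            break
        if remaining[name] > 1:  # safeguard: never remove a layer's last filter
            pruned[name].append(idx)
            remaining[name] -= 1
            budget -= 1
    return pruned


# Hypothetical weights and gradients for two small layers.
layers = {
    "conv1": [([1.0, -2.0], [0.5, 0.25]), ([1.0, 1.0], [0.4, 0.4])],
    "conv2": [([2.0, 0.0], [0.05, 0.3]), ([1.0, 1.0], [0.2, 0.1]), ([3.0, 1.0], [0.3, 0.1])],
}
print(global_prune(layers, rate=0.4))
```

Ranking globally rather than per layer lets the procedure take more filters from layers whose kernels barely affect the loss. The raw scores are not normalized per layer here, although some gradient-based methods do normalize them.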

[0121] In this embodiment, the total pruning rate of the current round is equal to the sum of the prunin...
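The full definition of the total pruning rate is not shown in this excerpt. As an illustration only, assuming both stage rates are expressed as fractions of the filter count at the start of the round, the sketch below shows that they can be added directly, and that measuring P2 against the already intra-layer-pruned network would change its base. `pruning_rate` and all counts are hypothetical.

```python
def pruning_rate(removed: int, base: int) -> float:
    """Fraction of filters removed, relative to a chosen base filter count."""
    return removed / base


# Hypothetical filter counts for one pruning round.
start_filters = 1000  # filters in the network at the start of the round
intra_removed = 200   # removed by intra-layer spectral clustering (P1)
global_removed = 100  # removed afterwards by gradient-based global pruning (P2)

r_intra = pruning_rate(intra_removed, start_filters)    # 0.2
r_global = pruning_rate(global_removed, start_filters)  # 0.1, same base as r_intra
r_total = r_intra + r_global  # 0.3 up to floating-point rounding, i.e. 300 of 1000 filters

# Measuring P2 against the already intra-pruned network (800 filters) changes its base,
# so adding it to r_intra would no longer give the fraction of the original filters removed.
r_global_alt = pruning_rate(global_removed, start_filters - intra_removed)  # 0.125
print(r_total, r_intra + r_global_alt)
```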

Embodiment 3

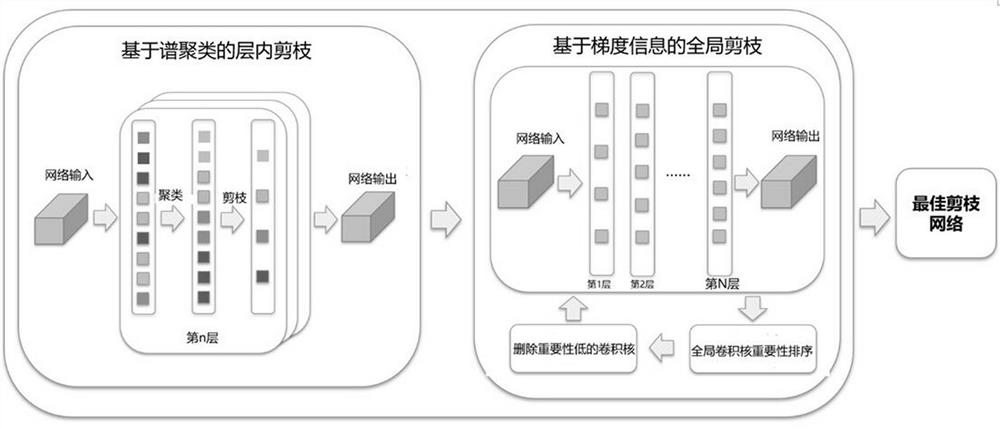

[0131] An embodiment of the present invention provides a pruning method for a deep convolutional neural network, as shown in Figure 3. The method of this embodiment may be executed by any computer device or computing platform, which performs the steps of Phase A, Phase B and Phase C in sequence to obtain the final pruned, lightweight convolutional neural network.

[0132] In this embodiment, Phase A details step P1 of Figure 2 above, Phase B details step P2, and Phase C details steps P3, P4 and P5.

[0133] The process of Phase A is detailed as follows:

[0134] Step A01. Obtain the spectral clustering pruning rate for the i-th round of intra-layer pruning;

[0135] Step A02. For the deep convolutional neural network to be processed in the i-th round, perform a convolution kernel sp...
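Step A01 only states that a pruning rate is obtained for round i. One plausible way to turn that rate into the number of clusters used for a layer in the spectral clustering step, assumed here and not taken from the source, is to keep ceil(n × (1 − r_i)) clusters in a layer of n filters, with at least one cluster per layer. `clusters_to_keep` is a hypothetical helper.

```python
import math
from typing import Dict


def clusters_to_keep(filters_per_layer: Dict[str, int], rate: float) -> Dict[str, int]:
    """Hypothetical mapping from a round's pruning rate to a per-layer cluster count.

    Each layer with n filters keeps ceil(n * (1 - rate)) clusters, at least one;
    one representative filter survives per cluster.
    """
    # The small tolerance absorbs floating-point error before rounding up.
    return {name: max(1, math.ceil(n * (1 - rate) - 1e-9)) for name, n in filters_per_layer.items()}


print(clusters_to_keep({"conv1": 64, "conv2": 128, "conv3": 3}, rate=0.25))
```

With a rate of 0.25, the 64- and 128-filter layers keep 48 and 96 clusters, while the 3-filter layer keeps all 3 because rounding up leaves nothing to remove.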

