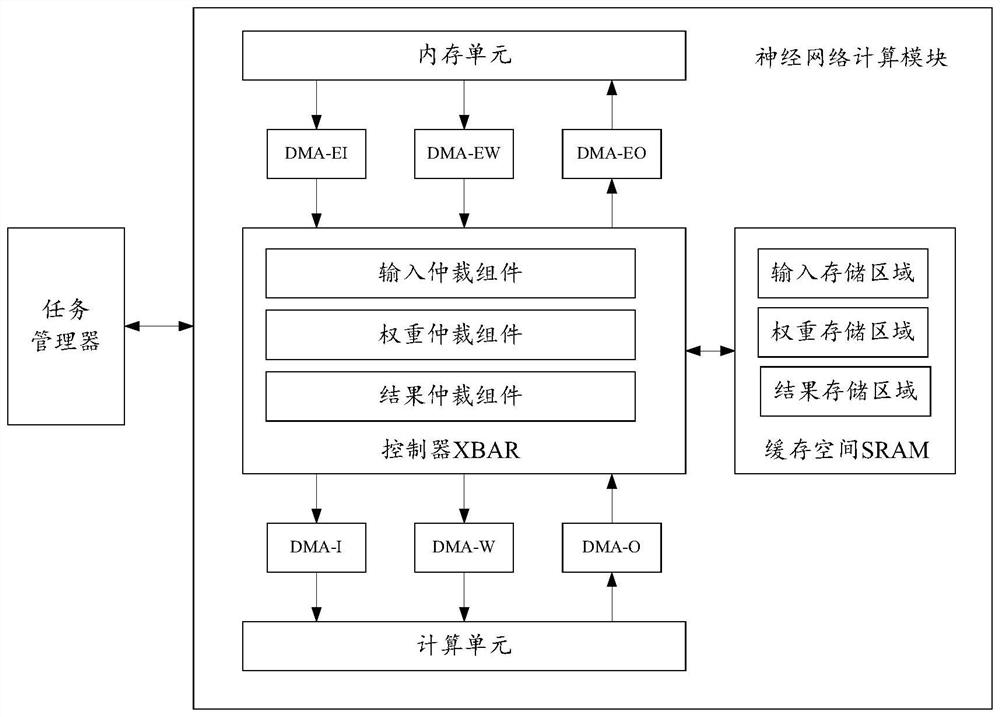

Data management method, neural network processor and terminal equipment

This neural network processor technology, applied in the fields of data management, neural network processors and terminal equipment, addresses the problems of slow computation speed and a long data caching process.

Embodiment Construction

[0023] The data management method provided by the embodiments of the present application can be applied to the neural network processors of terminal devices such as mobile phones, tablet computers, wearable devices, vehicle-mounted devices, augmented reality (AR)/virtual reality (VR) equipment, notebook computers, ultra-mobile personal computers (UMPCs), netbooks and personal digital assistants (PDAs). The embodiments of the present application do not limit the specific type of terminal device.

[0024] It should be understood that the terms "first", "second" and the various numerals used herein are only for convenience of description and are not intended to limit the scope of the present application.

[0025] It should also be understood that the term "and/or" as used herein merely describes an association relationship between associated objects, indicating that there may be...