Voice key information separation method based on deep learning

A deep-learning-based method for separating key information from speech. It addresses problems such as complex processes, numerous steps, and reduced accuracy, and aims to avoid cumulative errors, reduce manual intervention, and improve overall effectiveness.


Embodiment Construction

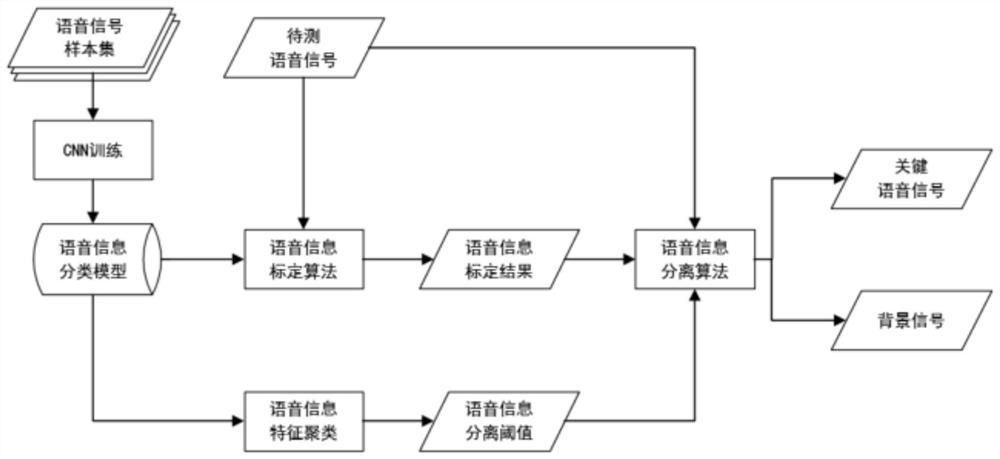

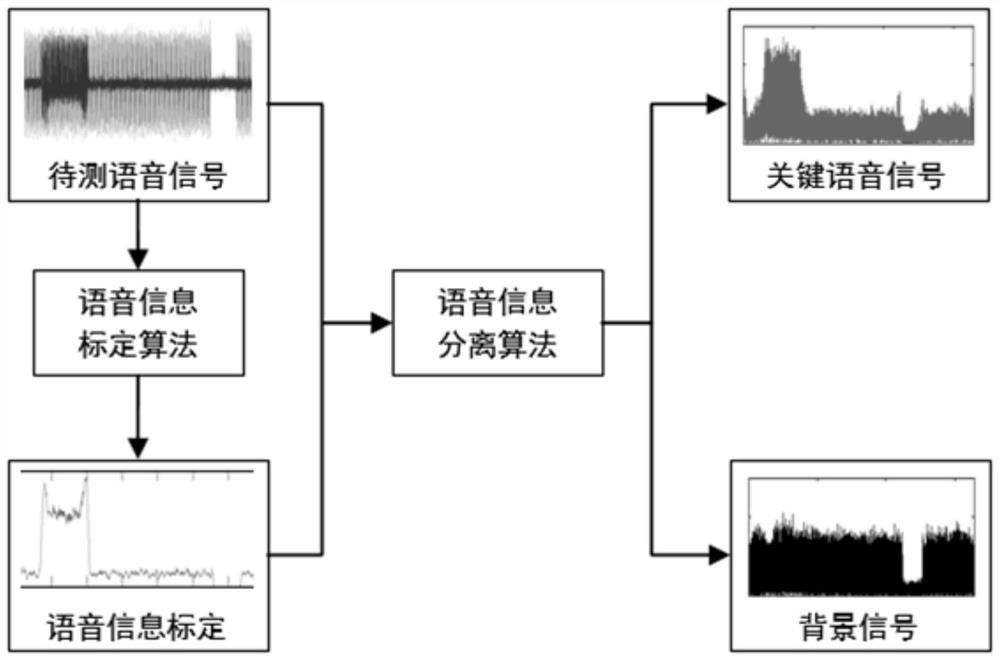

[0028] Referring to Figures 1 to 4, a method for separating key speech information based on deep learning comprises the following steps:

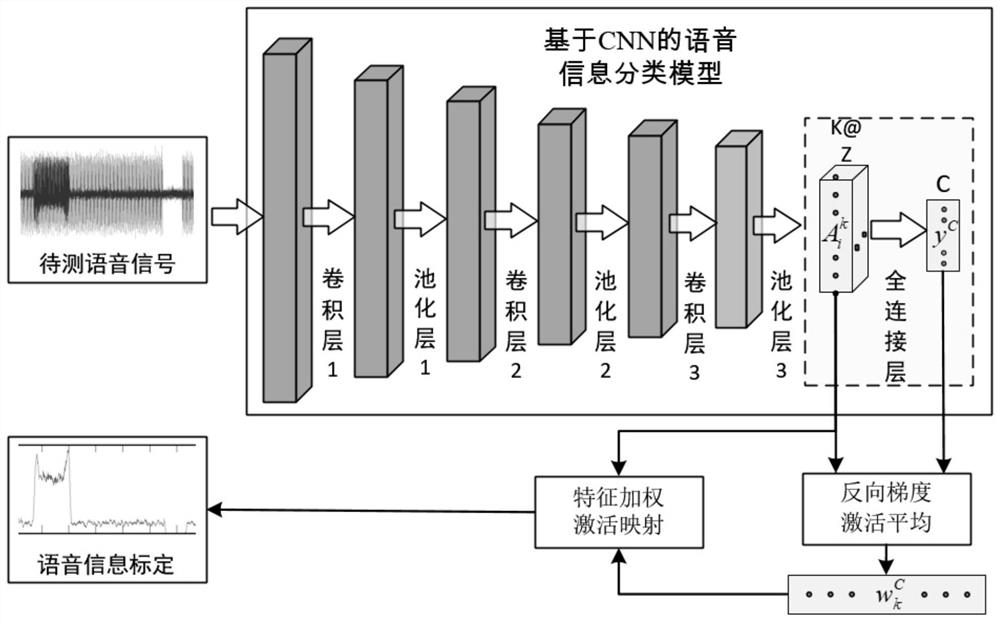

[0029] S1. CNN training: the voice signal sample set is used as training data, with the key information to be detected as the label. A convolutional neural network (CNN) is trained on the sample set to obtain a voice information classification model. The trained model can distinguish whether different speech signals contain key information of interest, for example whether a speech segment contains information related to an "ID card".
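The training in step S1 can be illustrated with a minimal NumPy sketch. It assumes each speech sample has already been converted to a (frames × features) matrix, such as log-mel frames, and labeled 1 if it contains the key information. The architecture (one 1-D convolution over time, ReLU, global average pooling, sigmoid output), the names `TinyKeyInfoCNN`, `make_example` and `mean_loss`, and the synthetic data are hypothetical; the source does not specify the network.

```python
import numpy as np


class TinyKeyInfoCNN:
    """Hypothetical 1-D CNN: conv over time -> ReLU -> global average pool -> sigmoid."""

    def __init__(self, n_feats, n_filters=8, width=3, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.3, size=(n_filters, width, n_feats))  # conv kernels
        self.b = np.zeros(n_filters)                                     # conv biases
        self.v = rng.normal(0.0, 0.3, size=n_filters)                    # output weights
        self.c = 0.0                                                     # output bias
        self.width = width

    def _windows(self, x):
        # x has shape (T, F); returns sliding windows of shape (T - width + 1, width, F)
        return np.stack([x[t:t + self.width] for t in range(x.shape[0] - self.width + 1)])

    def forward(self, x):
        win = self._windows(x)
        z = np.einsum("lwf,kwf->lk", win, self.W) + self.b     # (L, K) pre-activations
        a = np.maximum(z, 0.0)                                  # ReLU feature maps
        pooled = a.mean(axis=0)                                 # global average pool over time
        p = 1.0 / (1.0 + np.exp(-(pooled @ self.v + self.c)))  # P(key info present)
        return p, (win, z, a, pooled)

    def sgd_step(self, x, y, lr):
        """One SGD step on binary cross-entropy; returns the loss before the update."""
        p, (win, z, a, pooled) = self.forward(x)
        dlogit = p - y                            # d BCE / d logit
        dpooled = dlogit * self.v                 # computed before v is updated
        dz = (dpooled / a.shape[0]) * (z > 0)     # mean-pool then ReLU gradient, (L, K)
        self.W -= lr * np.einsum("lk,lwf->kwf", dz, win)
        self.b -= lr * dz.sum(axis=0)
        self.v -= lr * dlogit * pooled
        self.c -= lr * dlogit
        return bce(p, y)


def bce(p, y, eps=1e-12):
    return float(-(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


def make_example(rng, has_key, T=30, F=6):
    """Hypothetical stand-in for a labeled (T, F) feature matrix."""
    x = rng.normal(0.0, 0.3, size=(T, F))
    if has_key:
        t0 = int(rng.integers(0, T - 6))
        x[t0:t0 + 6, 2] += 3.0   # synthetic "key phrase" burst in one band
    return x, float(has_key)


def mean_loss(model, data):
    return sum(bce(model.forward(x)[0], y) for x, y in data) / len(data)


rng = np.random.default_rng(1)
train = [make_example(rng, i % 2 == 0) for i in range(60)]
model = TinyKeyInfoCNN(n_feats=6)
initial_loss = mean_loss(model, train)
for epoch in range(20):
    for x, y in train:
        model.sgd_step(x, y, lr=0.05)
final_loss = mean_loss(model, train)
```

In a real system a deep-learning framework with automatic differentiation would replace the hand-written gradients; they are spelled out here only to keep the sketch dependency-light.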

[0030] S2. Voice information calibration: using the trained voice information classification model, the voice signal to be tested is passed through the model, and the reverse gradient activation average algorithm and the feature weighted activation mapping algorithm are used to automatically cal...
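The source names a "reverse gradient activation average algorithm" and a "feature weighted activation mapping algorithm" but does not define them here. The sketch below assumes they resemble Grad-CAM (channel weights obtained by averaging the gradient of the class score over time) and CAM (feature maps weighted directly by the classifier weights), applied along the time axis to localize the key segment. With a global-average-pooling head the two produce the same normalized map. The filter weights, head weights, function names, and the 0.8 threshold are hypothetical and hand-set, not trained.

```python
import numpy as np


def conv_feature_maps(x, W, b):
    """Feature maps A of shape (L, K): 1-D convolution over time followed by ReLU."""
    width = W.shape[1]
    win = np.stack([x[t:t + width] for t in range(x.shape[0] - width + 1)])
    return np.maximum(np.einsum("lwf,kwf->lk", win, W) + b, 0.0)


def _normalize(m):
    return m / m.max() if m.max() > 0 else m


def grad_cam_over_time(A, v):
    """Grad-CAM-style map for a head whose score is mean_l(A[l]) @ v.

    The gradient of the score w.r.t. A[l, k] is v[k] / L at every time step l;
    averaging it over time gives the channel weights alpha[k].
    """
    L = A.shape[0]
    grads = np.tile(v / L, (L, 1))     # d score / d A, shape (L, K)
    alpha = grads.mean(axis=0)         # time-averaged gradients
    return _normalize(np.maximum(A @ alpha, 0.0))


def cam_over_time(A, v):
    """CAM-style map: feature maps weighted directly by the classifier weights."""
    return _normalize(np.maximum(A @ v, 0.0))


def key_segment(cam, width, thresh=0.8):
    """Frame range (first, last) spanned by windows with saliency >= thresh, or None.

    Non-contiguous salient windows are merged into one span from first to last.
    """
    idx = np.flatnonzero(cam >= thresh)
    if idx.size == 0:
        return None
    return int(idx[0]), int(idx[-1]) + width - 1


T, F, width = 40, 6, 3
x = np.zeros((T, F))
x[20:25, 2] = 2.0                  # synthetic key-information burst, frames 20-24
W = np.zeros((2, width, F))
W[0, :, 2] = 1.0                   # filter 0 responds to band 2
W[1, :, 4] = 1.0                   # filter 1 responds to band 4 (silent here)
b = np.array([-1.0, -1.0])
v = np.array([1.0, -0.5])          # hand-set head weights

A = conv_feature_maps(x, W, b)
cam = grad_cam_over_time(A, v)
print(key_segment(cam, width))     # (20, 24)
```

The recovered frame range matches the synthetic burst. Mapping window indices back to frames (adding `width - 1` to the last index) is one simple choice; overlapping windows could also be handled by averaging saliency per frame.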

