Visual estimation method for robot motion posture

A robot motion and pose estimation technique in the fields of instrumentation, computing, and image data processing. It addresses the problem that robot pose estimation is susceptible to interference, and it eliminates cumulative errors while reducing hardware costs.

Detailed Description of Embodiments

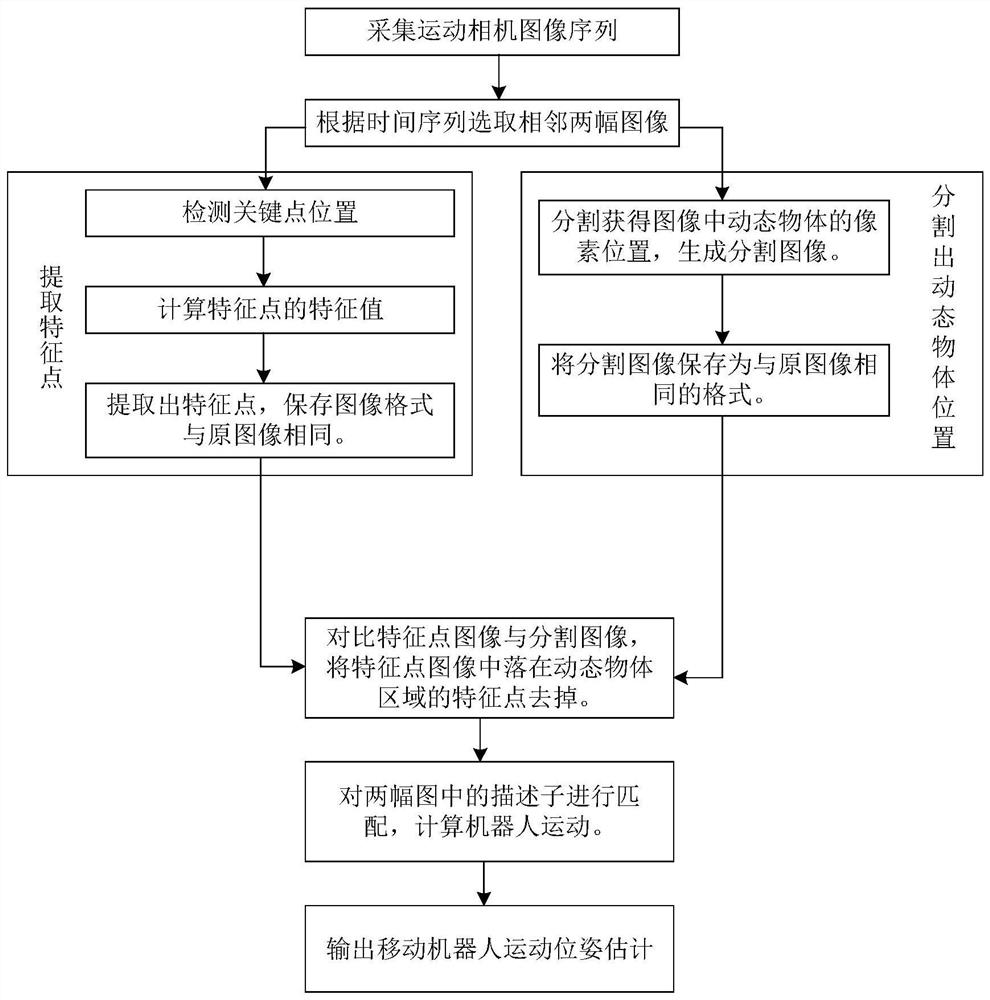

[0050] To illustrate the technical solution of the present invention more clearly, it is described in further detail below with reference to the accompanying drawings:

[0051] As shown in Figure 1, a visual estimation method for robot motion posture comprises the following steps:

[0052] Step (1.1): capture continuous video images in a dynamic environment, select two of the captured images, detect keypoints, compute the keypoint feature values, and detect and save the feature point positions;
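The text does not name a particular keypoint detector or feature descriptor. Purely as an illustration, the sketch below assumes a Harris corner detector (3x3 box window, 3x3 non-maximum suppression) and a small BRIEF-style binary descriptor, applied to a grayscale frame stored as nested Python lists. The names `harris_response`, `detect_keypoints`, `describe`, `extract_features`, the comparison pattern `PAIRS`, and the threshold values are all hypothetical; a practical system would more likely call an optimized library detector.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]   # grayscale image, img[row][col]
Keypoint = Tuple[int, int]  # (row, col)

# Hypothetical BRIEF-style comparison pattern inside a 5x5 patch: ((dy1, dx1), (dy2, dx2)).
PAIRS = [((-2, -2), (2, 2)), ((-2, 2), (2, -2)), ((0, -2), (0, 2)), ((-2, 0), (2, 0)),
         ((-1, -1), (1, 1)), ((-1, 1), (1, -1)), ((0, 0), (-2, -1)), ((0, 0), (2, 1))]


def harris_response(img: Image, k: float = 0.04) -> Image:
    """Harris response R = det(M) - k * trace(M)^2, with M summed over a 3x3 box window."""
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Central-difference image gradients.
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    r = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


def detect_keypoints(img: Image, threshold: float = 0.1) -> List[Keypoint]:
    """Keep pixels whose response exceeds `threshold` and is a 3x3 local maximum."""
    r = harris_response(img)
    h, w = len(r), len(r[0])
    keypoints = []
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            v = r[y][x]
            if v > threshold and all(
                v >= r[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)
            ):
                keypoints.append((y, x))
    return keypoints


def describe(img: Image, keypoints: List[Keypoint]) -> List[int]:
    """One 8-bit descriptor per keypoint: bit i is set if pair i's first pixel is darker."""
    descriptors = []
    for y, x in keypoints:
        bits = 0
        for i, ((y1, x1), (y2, x2)) in enumerate(PAIRS):
            if img[y + y1][x + x1] < img[y + y2][x + x2]:
                bits |= 1 << i
        descriptors.append(bits)
    return descriptors


def extract_features(img: Image) -> Dict[str, list]:
    """Step (1.1) for one frame: detect keypoints, compute feature values, save positions."""
    keypoints = detect_keypoints(img)
    return {"keypoints": keypoints, "descriptors": describe(img, keypoints)}
```

Calling `extract_features` on each of the two selected frames yields parallel lists of positions and feature values. Keypoints are only taken at least two pixels from the border so that every descriptor patch stays inside the image.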

[0053] Step (1.2): segment the original image, and obtain and save the position of the pixel region occupied by the dynamic object in the image;
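The segmentation method itself is not specified in this excerpt. The sketch below assumes that some segmentation model has already produced a per-pixel label map, and shows only how the dynamic-object pixel region could be extracted and saved. The class ID `PERSON = 15`, the function names `dynamic_region` and `bounding_box`, and the optional dilation of the region are hypothetical choices, not taken from the source.

```python
from typing import List, Optional, Set, Tuple

LabelMap = List[List[int]]  # per-pixel class IDs from some segmentation model (assumed)
Pixel = Tuple[int, int]     # (row, col)

PERSON = 15  # hypothetical class ID treated as a dynamic object


def dynamic_region(labels: LabelMap, dynamic_classes: Set[int], dilate: int = 1) -> Set[Pixel]:
    """Pixels labelled with a dynamic class, grown by `dilate` pixels in every direction."""
    h, w = len(labels), len(labels[0])
    core = [(y, x) for y in range(h) for x in range(w) if labels[y][x] in dynamic_classes]
    region: Set[Pixel] = set()
    for y, x in core:
        # Square dilation (an assumption): also cover a thin border around the object,
        # where feature values are likely to be affected by the object's motion.
        for dy in range(-dilate, dilate + 1):
            for dx in range(-dilate, dilate + 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w:
                    region.add((ny, nx))
    return region


def bounding_box(region: Set[Pixel]) -> Optional[Tuple[int, int, int, int]]:
    """(top, left, bottom, right) of the region, inclusive; None if the region is empty."""
    if not region:
        return None
    rows = [y for y, _ in region]
    cols = [x for _, x in region]
    return min(rows), min(cols), max(rows), max(cols)
```

Saving the full pixel set keeps the exact object outline for the comparison in the next step; the bounding box is a cheaper, coarser alternative. Setting `dilate=0` keeps exactly the pixels the model labelled.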

[0054] Step (1.3): compare the image storing the feature point information with the image storing the segmentation result, and remove the feature points located in the pixel region occupied by the dynamic object in the image storing the f...
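Step (1.3) amounts to masking: every feature point that falls inside the saved dynamic-object region is discarded together with its feature value. A minimal sketch, assuming keypoints are integer (row, col) pixel positions and the dynamic region is a set of such positions; `remove_dynamic_features` is a hypothetical name, and sub-pixel keypoints would need rounding first.

```python
from typing import List, Sequence, Set, Tuple, TypeVar

Keypoint = Tuple[int, int]  # (row, col)
Desc = TypeVar("Desc")      # any descriptor type


def remove_dynamic_features(
    keypoints: Sequence[Keypoint],
    descriptors: Sequence[Desc],
    dynamic_pixels: Set[Keypoint],
) -> Tuple[List[Keypoint], List[Desc]]:
    """Drop every feature whose position lies inside the dynamic-object region."""
    if len(keypoints) != len(descriptors):
        raise ValueError("keypoints and descriptors must be parallel lists")
    kept_kps: List[Keypoint] = []
    kept_desc: List[Desc] = []
    for kp, desc in zip(keypoints, descriptors):
        if kp not in dynamic_pixels:  # static feature: keep it for later pose estimation
            kept_kps.append(kp)
            kept_desc.append(desc)
    return kept_kps, kept_desc
```

For example, with keypoints `[(1, 1), (2, 2)]`, descriptors `[10, 20]` and dynamic region `{(2, 2)}`, only `(1, 1)` and its descriptor `10` are kept. Filtering keypoints and descriptors together keeps the two lists aligned for any subsequent matching.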
