Style transfer method for sharpening object edges

A style transfer technology for sharpening object edges, applied in the field of image processing. It addresses the limited preservation of an image's semantic content and spatial layout, blurred boundaries, and image distortion, and achieves the effects of reducing the number of parameters and increasing the depth of the network.
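Paragraph [0034] attributes the smaller parameter count and greater depth to replacing large convolution kernels with small ones while keeping the receptive field the same. The arithmetic below checks that claim under assumptions the patent does not state: stride-1, undilated convolutions, 3x3 replacement kernels, biases ignored, and a hypothetical constant width of C = 64 channels. The helpers `conv_params` and `stacked_receptive_field` are illustrative names, not part of the patented method.

```python
def conv_params(kernel_size: int, in_ch: int, out_ch: int, bias: bool = True) -> int:
    """Parameter count of one 2-D convolution: k*k*in*out weights (+ out biases)."""
    return kernel_size * kernel_size * in_ch * out_ch + (out_ch if bias else 0)


def stacked_receptive_field(kernel_size: int, depth: int) -> int:
    """Receptive field of `depth` stacked stride-1, undilated convolutions."""
    return 1 + depth * (kernel_size - 1)


C = 64  # hypothetical channel width, held constant across layers

# Compare one large kernel with a stack of 3x3 kernels covering the same field.
for big_k, n_small in [(5, 2), (7, 3)]:
    assert stacked_receptive_field(3, n_small) == stacked_receptive_field(big_k, 1)
    big = conv_params(big_k, C, C, bias=False)
    small = n_small * conv_params(3, C, C, bias=False)
    print(f"{big_k}x{big_k}: {big} params | {n_small} x 3x3: {small} params ({small / big:.0%})")

# 5x5: 102400 params | 2 x 3x3: 73728 params (72%)
# 7x7: 200704 params | 3 x 3x3: 110592 params (55%)
```

With biases ignored, both sides scale with C², so the ratios (2·9/25 = 72% and 3·9/49 ≈ 55%) hold for any channel width.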


Embodiment 1

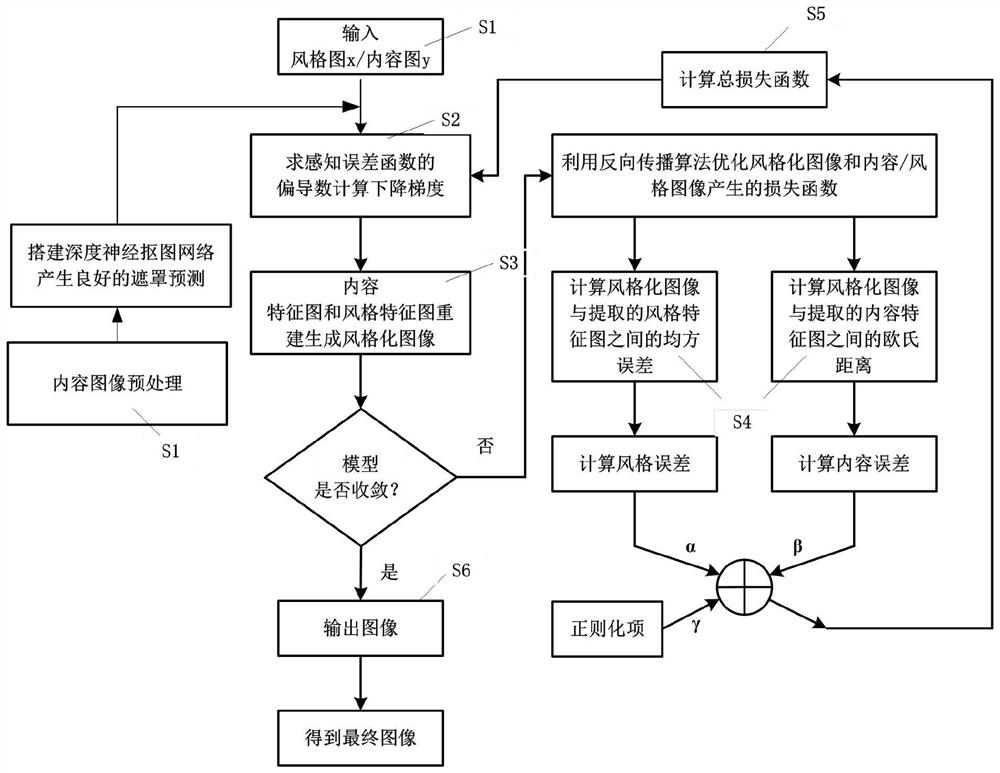

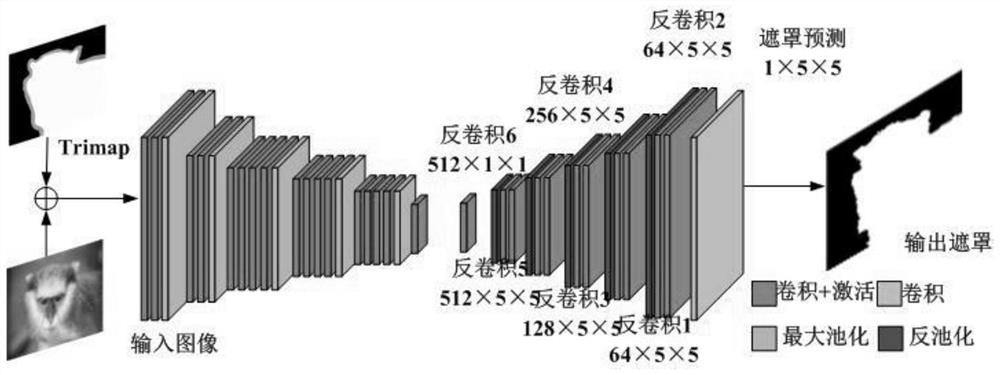

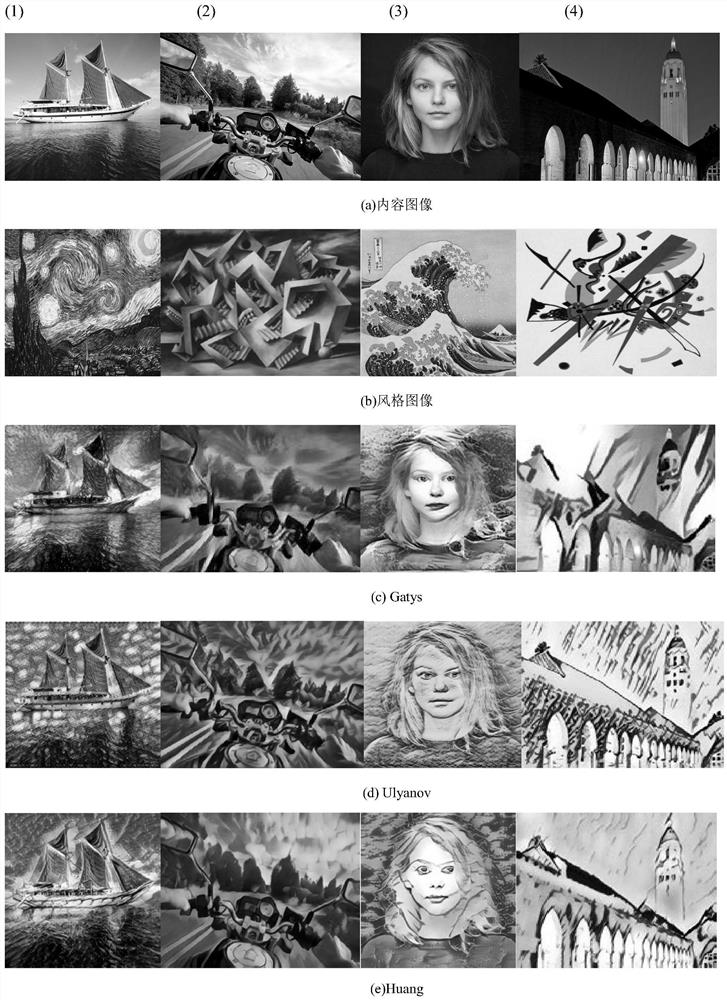

[0034] The present invention proposes a style transfer method that keeps object edges sharp. Its principle is as follows. First, an encoder-decoder deep neural network is built: the encoder converts the input into down-sampled feature maps through successive convolutional layers and max-pooling layers, and the decoder upsamples the feature maps with unpooling layers and convolutional layers. Second, while keeping the receptive field unchanged, each large convolution kernel is replaced with a stack of small convolution kernels, which increases the depth of the network, reduces the number of parameters, and yields a more accurate matting mask. Third, a normalization layer is added to the conventional convolutional layers of the transfer network. The normalization layer learns affine parameters that match the statistics of the content image and the style image, which allows parameters to be shared among images with similar styles and reduces the cost of the transfer model. ...
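The encoder's max pooling, the decoder's unpooling, and the affine normalization layer described in paragraph [0034] can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the patented implementation: pooling is fixed at 2x2 with stride 2, the decoder unpools to the max positions recorded by the encoder, convolutions are omitted, and the normalization layer is assumed to take the form of conditional instance normalization, y = γ·(x - μ(x))/σ(x) + β, with per-channel γ and β. The patent says these affine parameters are learned; the demo instead fills them with the style image's per-channel standard deviation and mean, as adaptive instance normalization (AdaIN) does. The function names `max_pool_2x2`, `max_unpool_2x2`, and `conditional_instance_norm` are hypothetical.

```python
import numpy as np


def max_pool_2x2(x):
    """2x2, stride-2 max pooling of a (C, H, W) array.

    Returns the pooled map and, for every window, the index (0-3) of its
    maximum, which the decoder needs to unpool to the same positions.
    """
    c, h, w = x.shape
    assert h % 2 == 0 and w % 2 == 0, "sketch assumes even spatial size"
    # Regroup each 2x2 window into the last axis: (C, H/2, W/2, 4).
    windows = (x.reshape(c, h // 2, 2, w // 2, 2)
                .transpose(0, 1, 3, 2, 4)
                .reshape(c, h // 2, w // 2, 4))
    idx = windows.argmax(axis=-1)
    pooled = np.take_along_axis(windows, idx[..., None], axis=-1)[..., 0]
    return pooled, idx


def max_unpool_2x2(pooled, idx):
    """Inverse of max_pool_2x2: put each value back where its maximum was
    and fill the rest of its 2x2 window with zeros."""
    c, hh, ww = pooled.shape
    windows = np.zeros((c, hh, ww, 4), dtype=pooled.dtype)
    np.put_along_axis(windows, idx[..., None], pooled[..., None], axis=-1)
    return (windows.reshape(c, hh, ww, 2, 2)
                   .transpose(0, 1, 3, 2, 4)
                   .reshape(c, 2 * hh, 2 * ww))


def conditional_instance_norm(x, gamma, beta, eps=1e-5):
    """Normalize each channel of a (C, H, W) map to zero mean and unit variance,
    then apply per-channel affine parameters gamma (scale) and beta (shift).
    Assumed form of the normalization layer; in a trained model gamma and beta
    would be learned per style (or per group of similar styles)."""
    mean = x.mean(axis=(1, 2), keepdims=True)
    std = np.sqrt(x.var(axis=(1, 2), keepdims=True) + eps)
    return gamma[:, None, None] * (x - mean) / std + beta[:, None, None]


rng = np.random.default_rng(0)
content = rng.normal(size=(3, 8, 8))
style = rng.normal(loc=2.0, scale=0.5, size=(3, 8, 8))

pooled, idx = max_pool_2x2(content)        # encoder downsampling
restored = max_unpool_2x2(pooled, idx)     # decoder upsampling (sparse)
print(pooled.shape, restored.shape)        # (3, 4, 4) (3, 8, 8)

# Stand-in for trained parameters: the style image's channel statistics.
gamma = style.std(axis=(1, 2))
beta = style.mean(axis=(1, 2))
stylized = conditional_instance_norm(content, gamma, beta)
print(np.allclose(stylized.mean(axis=(1, 2)), beta))  # True
```

Keeping one (γ, β) pair per style, or one shared pair for a group of similar styles, lets the convolution weights be reused across styles; only the small affine table grows as styles are added. Unpooling to the recorded max positions also returns strong activations to their original locations instead of spreading them, as interpolation would, which is one common argument for this design where boundaries matter.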


© 2025 PatSnap. All rights reserved.