Game character action recognition and generation method based on neural network

A technology in the fields of game characters and neural networks, applied in biological neural network models, animation production, neural architectures, etc. It addresses the high skill requirements placed on producers, independent and discontinuous data, and time-consuming production, and achieves simple, predictable 3D poses with robust results that are less sensitive to noisy data.


Embodiment

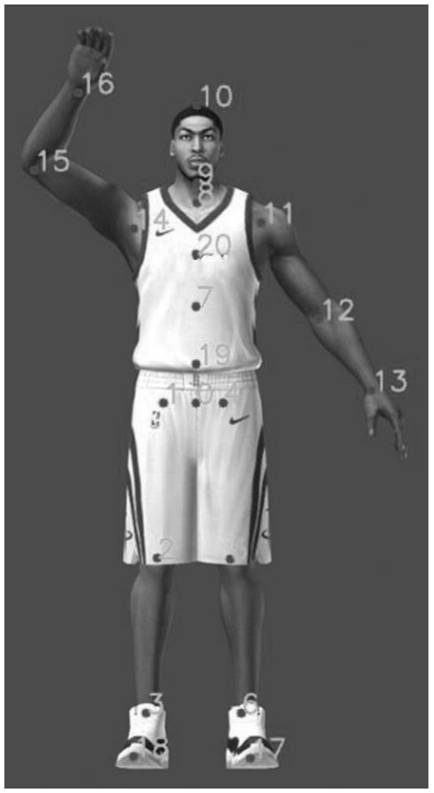

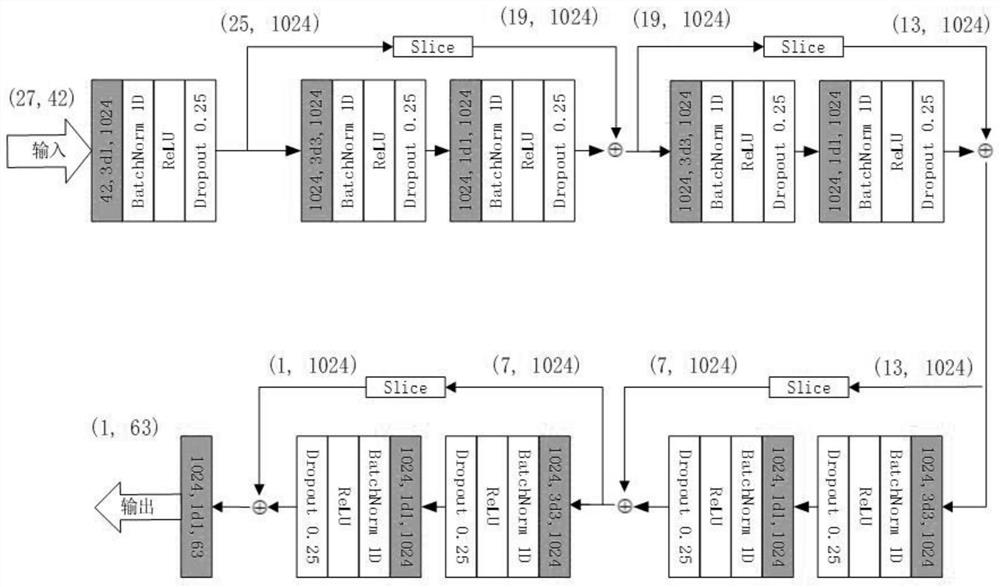

[0044] As shown in Figures 1 to 4, this embodiment provides a neural-network-based method for recognizing and generating game character actions, comprising the following steps:

[0045] In the first step, the user obtains a video file containing human body movement; the video can be recorded with a mobile phone or downloaded from the Internet. This embodiment uses a video camera.

[0046] In the second step, the user uploads the video file containing human body movement to the server.

[0047] In the third step, the server splits the uploaded video file into consecutive frame images at a specified frame rate.
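The source does not state the sampling rate or how frames are chosen. As a minimal sketch, assuming the server knows the video's native frame rate and frame count, the frames to keep at a target rate can be selected by taking the last source frame at or before each sampling instant. The helper name `sample_frame_indices` and this selection rule are hypothetical. Actual decoding could be done with a library such as OpenCV, whose `cv2.VideoCapture` exposes `CAP_PROP_FPS` and `CAP_PROP_FRAME_COUNT`; that part is omitted here.

```python
import math


def sample_frame_indices(source_fps: float, total_frames: int, target_fps: float) -> list[int]:
    """Pick which decoded frames to keep when resampling a video to target_fps.

    Hypothetical helper: the method only says frames are extracted at
    "a certain frame rate"; the rule used here (take the last source frame
    at or before each sampling instant) is an assumption.
    """
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    ratio = source_fps / target_fps  # source frames between consecutive samples
    indices: list[int] = []
    k = 0
    while k * ratio < total_frames:
        # Small epsilon guards against float error just below an integer.
        idx = min(math.floor(k * ratio + 1e-9), total_frames - 1)
        # When target_fps > source_fps, several samples land on the same frame;
        # keep it once, since extraction alone cannot create new frames.
        if not indices or idx != indices[-1]:
            indices.append(idx)
        k += 1
    return indices


# A 3-second clip at 30 fps resampled to 10 fps keeps every third frame.
print(sample_frame_indices(30, 90, 10)[:5])  # [0, 3, 6, 9, 12]
```

Selecting indices first and decoding only those frames keeps the server from storing images it will discard; the trade-off is that non-integer ratios (e.g. 30 fps to 20 fps) yield slightly uneven spacing.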

[0048] In the fourth step, the consecutive frame images are input into the human body 2D keypoint detection neural network, as follows:

[0049] (1) Resize each image to a uniform size.

[0050] (2) Obtain the 2D keypoint coordinates of the human body in each frame through the human body keypoint detection neural...
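The source names neither the target image size nor the keypoint network. The sketch below illustrates sub-steps (1) and (2) under two assumptions: each frame is scaled to a fixed network input while preserving its aspect ratio and padding the remainder ("letterboxing"), and the detector is heatmap-based, emitting one score map per joint at a fixed stride, as many 2D pose networks do. Each joint is read off at its heatmap maximum and mapped back to original-frame pixels. All function names are hypothetical, and the network call itself is left out.

```python
import math

Keypoint = tuple[float, float, float]  # (x, y, confidence)


def letterbox_params(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple[float, float, float]:
    """Scale and padding that fit a src_w x src_h frame into dst_w x dst_h
    without distortion (assumed resizing scheme)."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x = (dst_w - new_w) / 2  # blank border added on the left and right
    pad_y = (dst_h - new_h) / 2  # blank border added on the top and bottom
    return scale, pad_x, pad_y


def decode_heatmaps(heatmaps: list[list[list[float]]], stride: int) -> list[Keypoint]:
    """Take the highest-scoring cell of each joint's H x W heatmap and convert
    its grid index to network-input pixels (top-left cell convention)."""
    keypoints: list[Keypoint] = []
    for hm in heatmaps:
        best_score, best_x, best_y = -math.inf, 0, 0
        for y, row in enumerate(hm):
            for x, score in enumerate(row):
                if score > best_score:  # ties keep the first maximum found
                    best_score, best_x, best_y = score, x, y
        keypoints.append((float(best_x * stride), float(best_y * stride), best_score))
    return keypoints


def to_original_frame(keypoints: list[Keypoint], scale: float, pad_x: float, pad_y: float) -> list[Keypoint]:
    """Undo the letterbox so coordinates refer to the original video frame."""
    return [((x - pad_x) / scale, (y - pad_y) / scale, s) for x, y, s in keypoints]


# A 640x480 frame letterboxed into a 320x320 network input.
scale, pad_x, pad_y = letterbox_params(640, 480, 320, 320)
print(scale, pad_x, pad_y)  # 0.5 0.0 40.0
```

For example, a joint found at network-input pixel (160, 200) maps back to (320.0, 320.0) in the original 640x480 frame. Preserving the aspect ratio avoids stretching limbs, which would otherwise distort the 2D coordinates passed to later steps.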
