High-precision indoor positioning method based on joint vision and wireless signal characteristics

Wireless signal and indoor positioning technology, applied in location-information-based services, specific-environment-based services, neural learning methods, etc. Effect: reduces the influence of the external environment and improves positioning accuracy.


Embodiment Construction

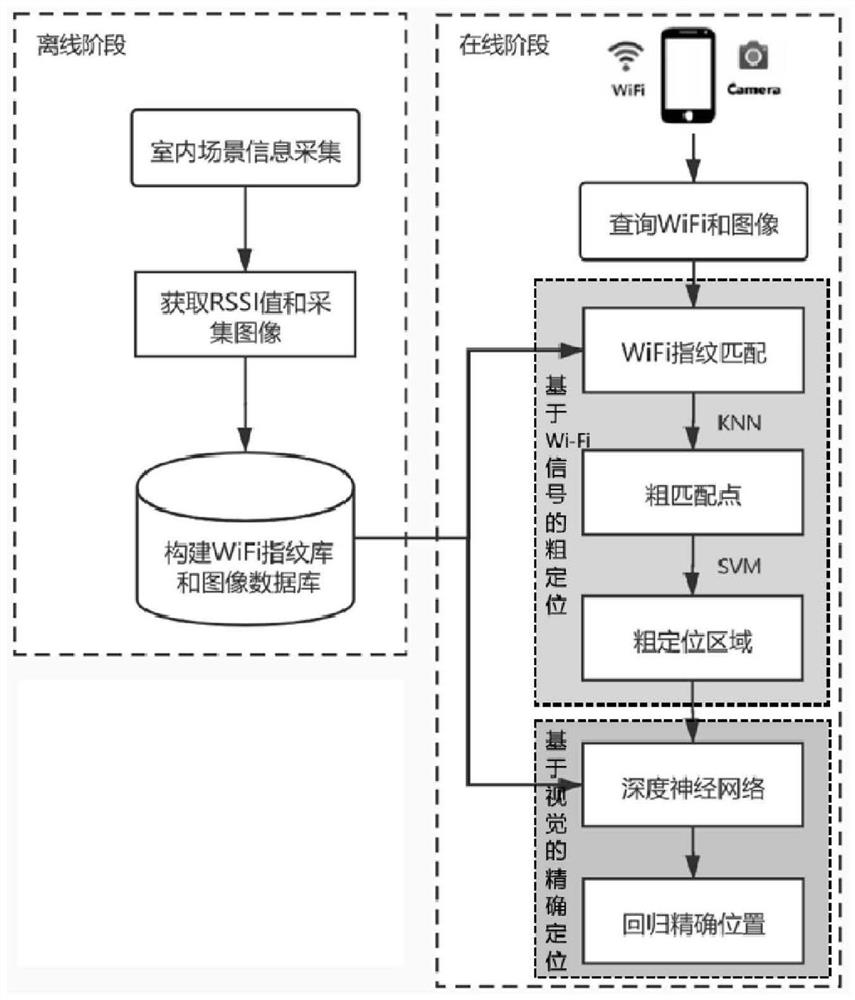

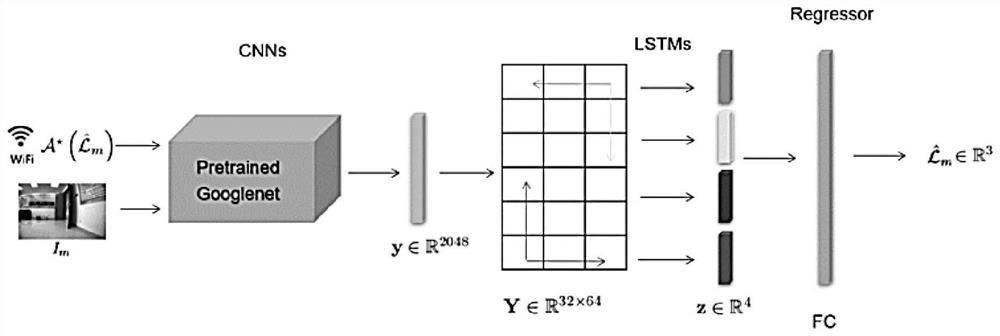

[0017] As shown in Figure 4, this embodiment relates to a high-precision positioning method that combines vision and wireless signal features. The test was carried out in a specific environment: a 4000-square-meter office building corridor. Because people are constantly moving through the corridor, the Wi-… Such an environment makes accurate Wi-Fi-based positioning challenging. Moreover, one side of the corridor has many windows, so the lighting conditions change considerably over the course of the day. In this setting, the method achieves a positioning accuracy of 0.62 m with a grid size of 1.5 m. The method specifically comprises the steps shown in Figure 1:
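The embodiment reports an accuracy (0.62 m) alongside a grid size (1.5 m), but the excerpt does not define the error metric. The sketch below is a minimal illustration under two stated assumptions: the corridor is discretised into square 1.5 m cells, and "positioning accuracy" means the mean Euclidean distance between estimated and true positions. The helper names `cell_center` and `mean_error` are hypothetical and do not come from the patent.

```python
import math
from typing import List, Tuple

GRID_SIZE_M = 1.5  # grid spacing reported in the embodiment

def cell_center(x: float, y: float, grid: float = GRID_SIZE_M) -> Tuple[float, float]:
    """Snap a continuous (x, y) position in metres to the centre of its grid cell.

    Assumption: square cells aligned with the coordinate origin.
    """
    return ((math.floor(x / grid) + 0.5) * grid,
            (math.floor(y / grid) + 0.5) * grid)

def mean_error(predicted: List[Tuple[float, float]],
               truth: List[Tuple[float, float]]) -> float:
    """Mean Euclidean distance (metres) between predicted and ground-truth positions.

    Assumption: this is how the reported accuracy figure is computed.
    """
    if not predicted or len(predicted) != len(truth):
        raise ValueError("need two non-empty lists of equal length")
    return sum(math.dist(p, t) for p, t in zip(predicted, truth)) / len(predicted)

if __name__ == "__main__":
    print(cell_center(2.0, 0.2))                                 # centre of cell (1, 0)
    print(mean_error([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)]))
```

Under this reading, a mean error smaller than the cell size simply means most estimates fall within or near the correct cell; the source does not say whether the method interpolates between cells.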

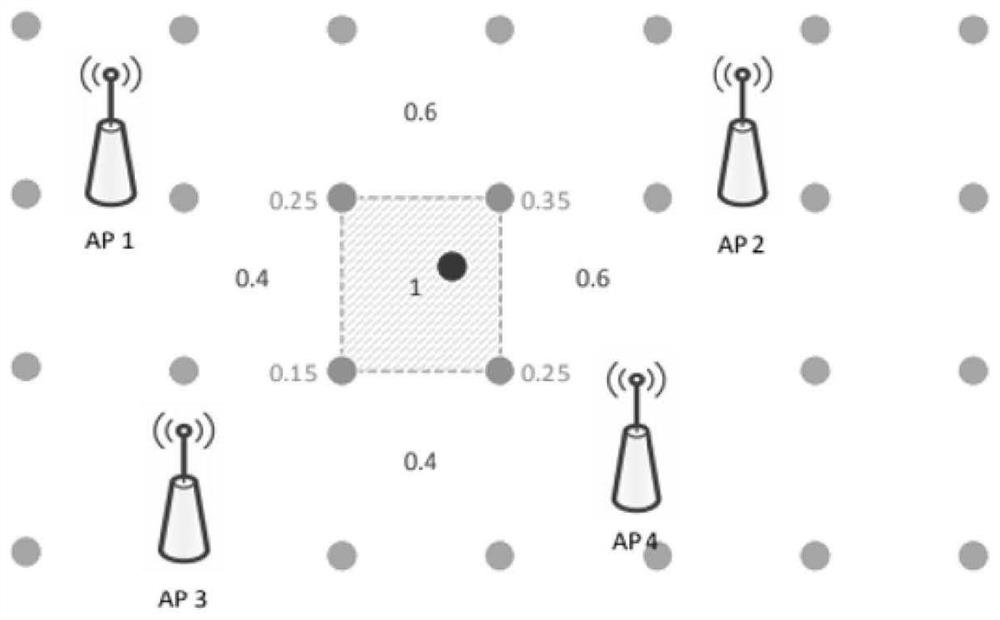

[0018] Step 1: Collect indoor scene information to obtain RSSI values and image data. Specifically, in the offline stage, within a predetermined area of the indoor scene, the mobile robot shown in Figure 5, equipped with a Wi-Fi module and an image acquisition module, establishes communication wi...
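Step 1 describes an offline survey in which a robot gathers RSSI values and images across the indoor scene. The sketch below shows one common way to organise such data as a fingerprint database keyed by grid cell; it is a generic illustration, not the patent's procedure. Assumptions: each sample is a `(cell, scan, image_path)` tuple, repeated scans at a cell are averaged per access point, and an access point not heard at a cell is filled with a hypothetical floor of -100 dBm. The names `Fingerprint`, `build_database` and `MISSING_RSSI_DBM` are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Cell = Tuple[int, int]
MISSING_RSSI_DBM = -100.0  # hypothetical floor for APs not heard at a cell

@dataclass
class Fingerprint:
    """One reference cell: grid index, mean RSSI per AP (dBm), and captured images."""
    cell: Cell
    rssi: Dict[str, float] = field(default_factory=dict)
    images: List[str] = field(default_factory=list)

def build_database(samples: Iterable[Tuple[Cell, Dict[str, float], str]],
                   ap_list: List[str]) -> Dict[Cell, Fingerprint]:
    """Group survey samples by cell and average the RSSI of each AP in ap_list."""
    scans: Dict[Cell, List[Dict[str, float]]] = defaultdict(list)
    images: Dict[Cell, List[str]] = defaultdict(list)
    for cell, scan, image_path in samples:
        scans[cell].append(scan)
        images[cell].append(image_path)

    db: Dict[Cell, Fingerprint] = {}
    for cell, cell_scans in scans.items():
        rssi = {}
        for ap in ap_list:
            values = [s[ap] for s in cell_scans if ap in s]
            # Average repeated readings; fall back to the floor if the AP was never seen here.
            rssi[ap] = mean(values) if values else MISSING_RSSI_DBM
        db[cell] = Fingerprint(cell, rssi, images[cell])
    return db
```

Using a fixed AP list gives every cell an RSSI vector of the same length, which keeps later comparison against online measurements straightforward.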
