Precision dynamic adaptive accumulation module for a bit-width-increasing addition tree

A dynamic adaptive addition tree technology, applied in the field of computing, that aims to reduce power consumption.

Examples

Embodiment 1

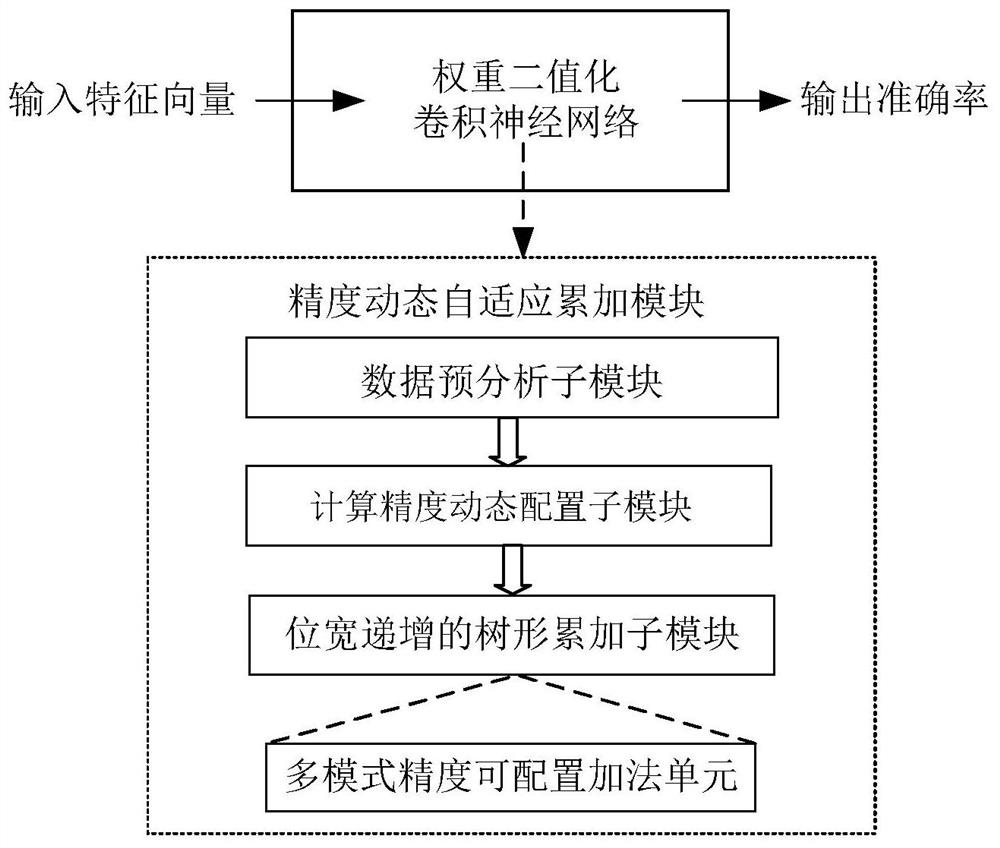

[0032] Example 1. The present invention proposes a precision dynamic adaptive accumulation module for a bit-width-increasing addition tree; its overall structure is shown in Figure 1. The module comprises a data pre-analysis sub-module, a calculation-precision dynamic configuration sub-module, and a tree-shaped accumulation sub-module with increasing bit width. The tree-shaped accumulation sub-module adopts an N-layer addition tree structure in which each layer contains M multi-mode precision-configurable addition units, with $M = 2^{N-1}$, where N is the level of the addition tree.
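The layer sizing above can be illustrated with a minimal Python sketch. It is not the patented hardware: the function names are hypothetical, the inputs are treated as unsigned integers, and the rule that each adder stage is one bit wider than the previous one is an assumption made here to illustrate "increasing bit width", since the text does not state the growth rule.

```python
def units_per_level(num_levels):
    """Return {level: adder count}; level 1 is the root and holds 2**(level-1) adders."""
    return {level: 2 ** (level - 1) for level in range(1, num_levels + 1)}


def tree_accumulate(values, input_bits):
    """Sum `values` pairwise through a balanced adder tree.

    Assumption (not from the source): every stage output is one bit wider
    than its inputs, so an unsigned sum can never overflow.
    Returns (total, output widths listed from the leaf stage to the root).
    """
    n = len(values)
    if n < 2 or n & (n - 1):
        raise ValueError("number of inputs must be a power of two, at least 2")
    if any(not 0 <= v < (1 << input_bits) for v in values):
        raise ValueError("every input must fit in input_bits unsigned bits")
    widths = []
    width = input_bits
    while len(values) > 1:
        width += 1  # one extra carry bit per stage (assumed growth rule)
        values = [values[i] + values[i + 1] for i in range(0, len(values), 2)]
        widths.append(width)
    return values[0], widths
```

For eight 4-bit inputs the sketch uses three stages (4, 2, and 1 adders), matching the $2^{N-1}$ count per level when levels are numbered from the root.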

[0033] The input feature vector of the weight-binarized convolutional neural network is fed into the data pre-analysis sub-module, which passes the approximate bit width obtained from the pre-analysis to the calculation-precision dynamic configuration sub-module, and the calcula...

Embodiment 2

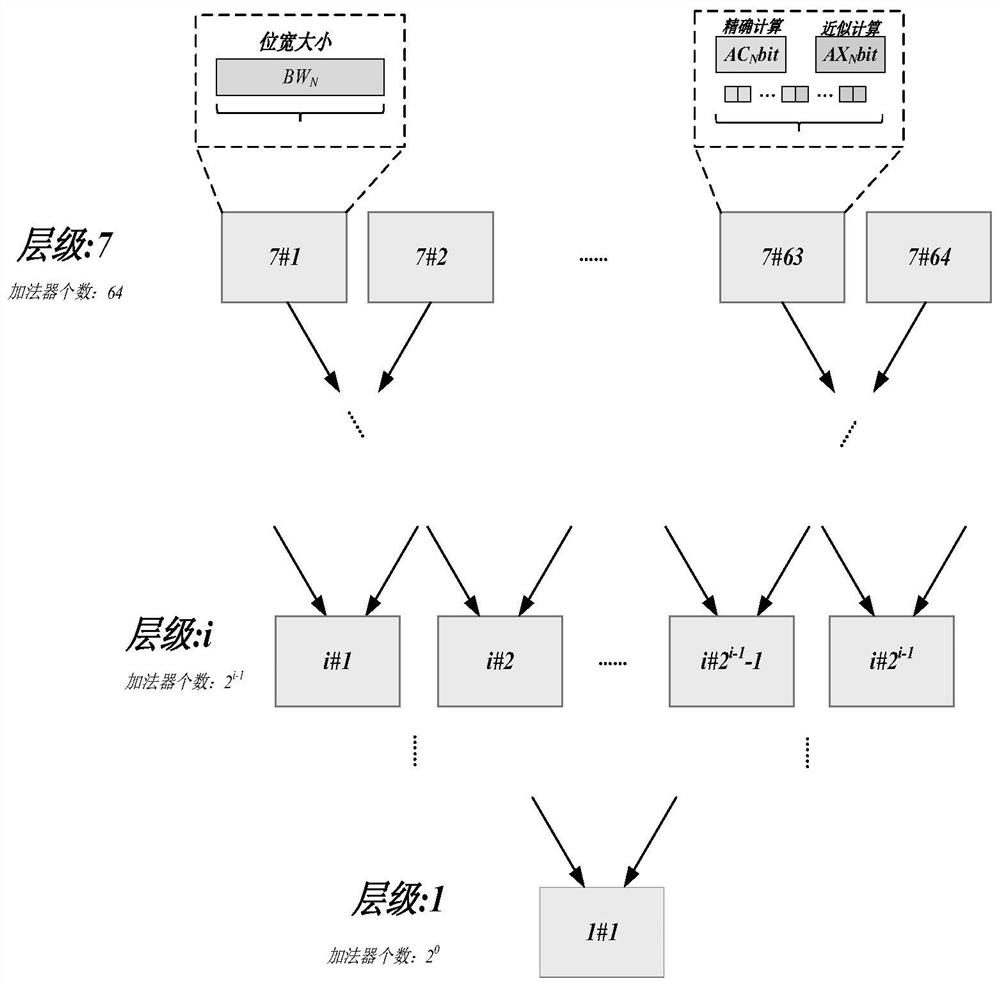

[0036] Example 2. In this preferred embodiment, the tree-shaped accumulation sub-module with increasing bit width adopts a 7-layer addition tree structure, as shown in Figure 2. The specific parameters and data scale of the weight-binarized convolutional neural network also match this 7-layer tree-shaped accumulation sub-module with increasing bit width.

[0037] As can be seen from Figure 2, the first layer of the tree-shaped accumulation sub-module with increasing bit width contains $2^{0} = 1$ multi-mode precision-configurable addition unit, whose hardware number is 1#1. The i-th layer contains $2^{i-1}$ multi-mode precision-configurable addition units, whose hardware numbers are i#1, i#2, ..., i#($2^{i-1}-1$), i#$2^{i-1}$. Continuing in this way, the seventh layer contains $2^{6} = 64$ multi-mode precision-configurable addition units, whose hardware numbers are 7#1, 7#2, ..., 7#63, 7#64.
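The numbering scheme just described can be enumerated with a short Python sketch. The helper names are hypothetical and serve only to illustrate the `i#j` labelling.

```python
def adder_labels(level):
    """Labels level#1 .. level#2**(level-1) for the adders on one layer (1 = first layer)."""
    if level < 1:
        raise ValueError("level must be >= 1")
    return [f"{level}#{j}" for j in range(1, 2 ** (level - 1) + 1)]


def all_labels(num_levels=7):
    """Map each layer of a `num_levels`-layer tree to its list of hardware labels."""
    return {level: adder_labels(level) for level in range(1, num_levels + 1)}
```

Summing the layer sizes for the 7-layer tree gives $2^{7} - 1 = 127$ addition units in total.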

[0038] Therefore, in the calculation process, no more t...

Embodiment 3

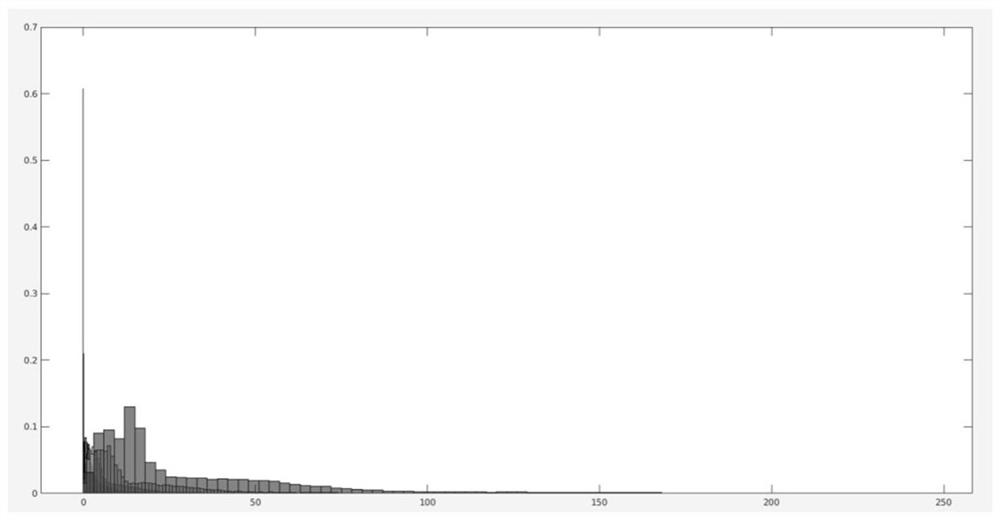

[0042] Example 3. The number of approximate bits used by the data pre-analysis sub-module is determined by the specific distribution of the input data. At this level, the number of approximate bits is selected by judging whether the sum of the fractional bits of the input data is greater than 1. That is, the 1s at the bit of weight $2^{-n}$ are counted; if the final count exceeds $2^{n}$, then the final sum is greater than $2^{-n} \cdot 2^{n} = 1$.
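The counting argument can be written out as a short worked inequality. The symbols $c_n$ (the number of inputs whose bit of weight $2^{-n}$ is 1) and $S$ (the sum of the fractional parts) are introduced here for illustration and do not appear in the original text.

```latex
% c_n : number of inputs with a 1 at the bit of weight 2^{-n} (assumed notation)
% S   : sum of the fractional parts of all inputs (assumed notation)
\begin{align*}
  S \;\ge\; c_n \cdot 2^{-n}
    &\qquad \text{(all other bit contributions are non-negative)} \\
  c_n > 2^{n} \;\Longrightarrow\; S \;\ge\; c_n \cdot 2^{-n} \;>\; 2^{n} \cdot 2^{-n} = 1
\end{align*}
```

So a count above $2^{n}$ at that single bit position is already enough to guarantee that the fractional sum carries into the integer part.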

[0043] The number of approximate bits used by the data pre-analysis sub-module is determined by the specific distribution of the input data. The specific implementation uses a counter to count the number of 1 bits at each bit position, and sets a counter threshold to determine the bit widths of the precise calculation component and the approximate calculation component. In scenarios with high computing accuracy requirements, the number of approximate computing components is reduced, and in scenarios with low computing accuracy requirements, i...
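The per-bit counting step can be sketched in Python as follows. The text does not say how the threshold is turned into a precise/approximate split, so the rule used here is an assumption: low-order bits are treated as approximate, scanning upward from the least significant bit, until a position's count of 1s exceeds the threshold. The function names and the fixed-point encoding (unsigned integers holding `frac_bits` fractional bits) are also hypothetical.

```python
def count_ones_per_bit(values, frac_bits):
    """counts[k-1] = number of inputs whose bit of weight 2**-k is 1.

    `values` are unsigned integers holding `frac_bits` fractional bits.
    """
    counts = [0] * frac_bits
    for v in values:
        for k in range(1, frac_bits + 1):
            counts[k - 1] += (v >> (frac_bits - k)) & 1
    return counts


def split_precise_approx(values, frac_bits, threshold):
    """Return (precise_bits, approx_bits) under an assumed thresholding rule.

    Assumption (not from the source): scanning from the least significant
    bit upward, a bit is approximated while its count of 1s stays at or
    below `threshold`; the first bit above the threshold ends the scan.
    """
    counts = count_ones_per_bit(values, frac_bits)
    approx_bits = 0
    for count in reversed(counts):  # least significant bit first
        if count > threshold:
            break
        approx_bits += 1
    return frac_bits - approx_bits, approx_bits
```

In this sketch a larger threshold leaves more low-order bits to the approximate component, and a threshold of 0 keeps every bit that is ever set in the precise component.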
