Robot intelligent grabbing method based on digital twinning and deep neural network

A technology in the field of deep neural networks and robot intelligence, applied in biological neural network models, neural architectures, manipulators, etc. It addresses problems such as the difficulty of grasping tasks and of meeting the needs of intelligent production, with the effects of ensuring the grasping success rate, improving learning and generalization ability, and ensuring accuracy.

Embodiment Construction

[0033] The present invention will be described in detail below with reference to the accompanying drawings and preferred embodiments, and the purpose and effect of the present invention will become clearer. It should be understood that the specific embodiments described here are only used to explain the present invention and are not intended to limit the present invention.

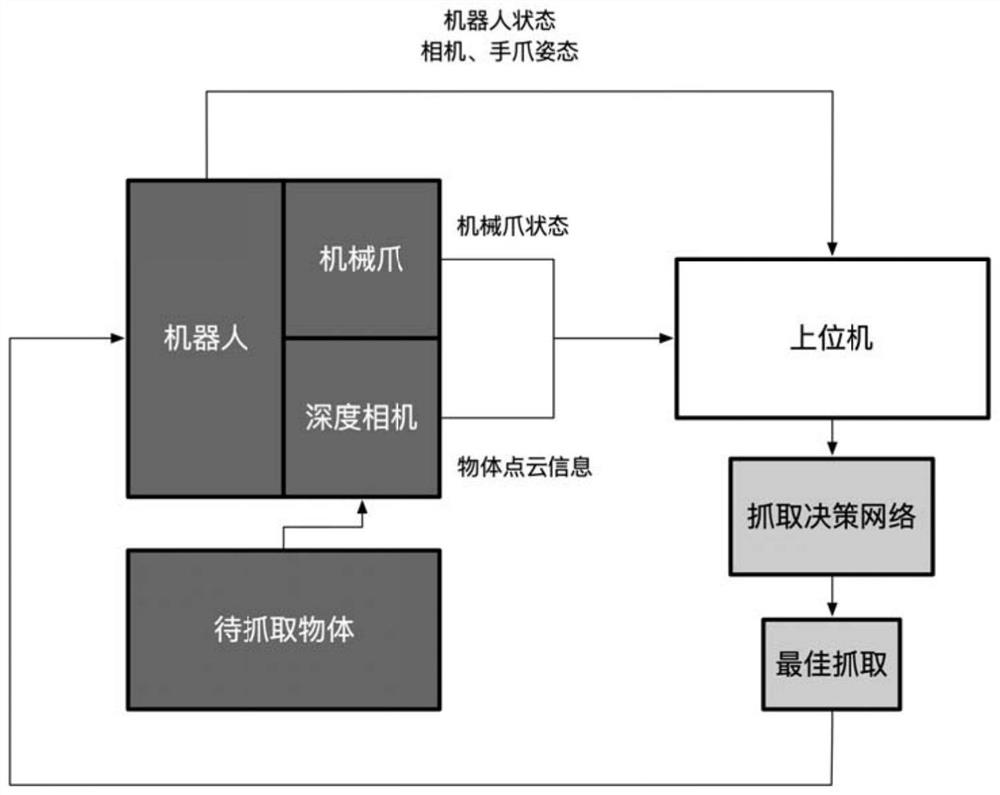

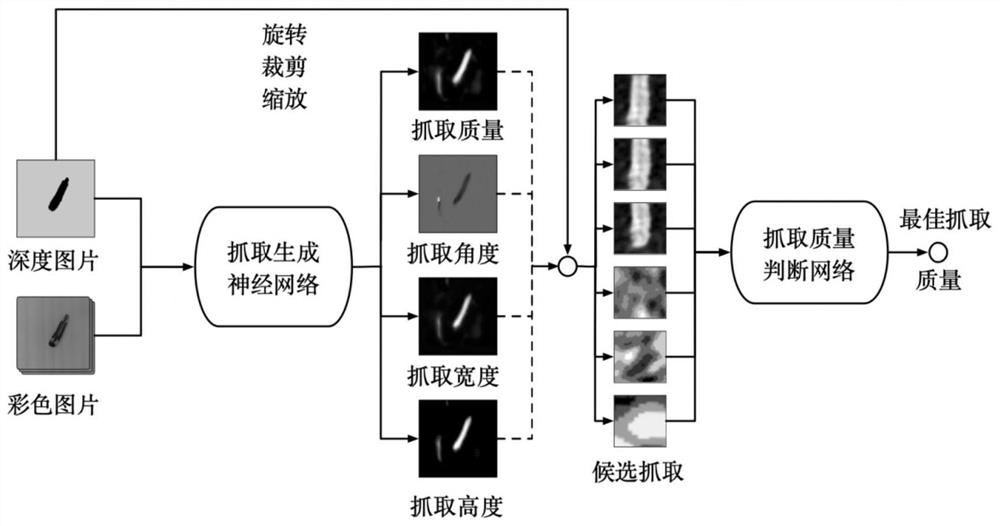

[0034] As shown in Figure 1, in the robot intelligent grasping method based on the digital twin and deep neural network of the present invention, the robot intelligent grasping system includes a physical grasping environment, a virtual grasping judgment environment, and a grasping decision neural network.
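The paragraph above names three components but, in this excerpt, does not spell out how they interact. The following is a minimal sketch under one plausible assumption: the grasping decision network proposes scored grasp candidates, the virtual (digital twin) environment screens them, and only a candidate that succeeds in the twin is sent to the physical robot. The `GraspCandidate` fields and the `propose`/`simulate`/`execute` callables are hypothetical stand-ins, not interfaces defined by the patent.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class GraspCandidate:
    # Hypothetical grasp representation (not specified in the source):
    # image-plane position, gripper rotation (rad), opening width (m), network score.
    x: float
    y: float
    angle: float
    width: float
    score: float


def select_and_execute(
    propose: Callable[[], List[GraspCandidate]],  # stand-in for the grasping decision neural network
    simulate: Callable[[GraspCandidate], bool],   # stand-in for the virtual grasping judgment (digital twin)
    execute: Callable[[GraspCandidate], bool],    # stand-in for the physical robot and gripper
) -> Tuple[Optional[GraspCandidate], bool]:
    """Try candidates from highest to lowest score; execute the first one the twin accepts.

    Returns (chosen candidate, physical success flag), or (None, False) if the
    twin rejects every candidate and nothing is sent to the real robot.
    """
    for cand in sorted(propose(), key=lambda c: c.score, reverse=True):
        if simulate(cand):
            return cand, execute(cand)
    return None, False


if __name__ == "__main__":
    candidates = [
        GraspCandidate(10, 20, 0.0, 0.04, 0.7),
        GraspCandidate(30, 40, 1.57, 0.05, 0.9),
    ]
    # Toy twin check: reject grasps wider than a hypothetical 0.045 m gripper opening.
    chosen, ok = select_and_execute(
        propose=lambda: candidates,
        simulate=lambda c: c.width <= 0.045,
        execute=lambda c: True,
    )
    print(chosen, ok)
```

In the usage example the higher-scoring candidate is rejected by the toy twin check, so the lower-scoring but feasible grasp is the one executed. Screening in simulation before acting is the design choice this sketch illustrates; the actual judgment criteria used by the invention are not given in this excerpt.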

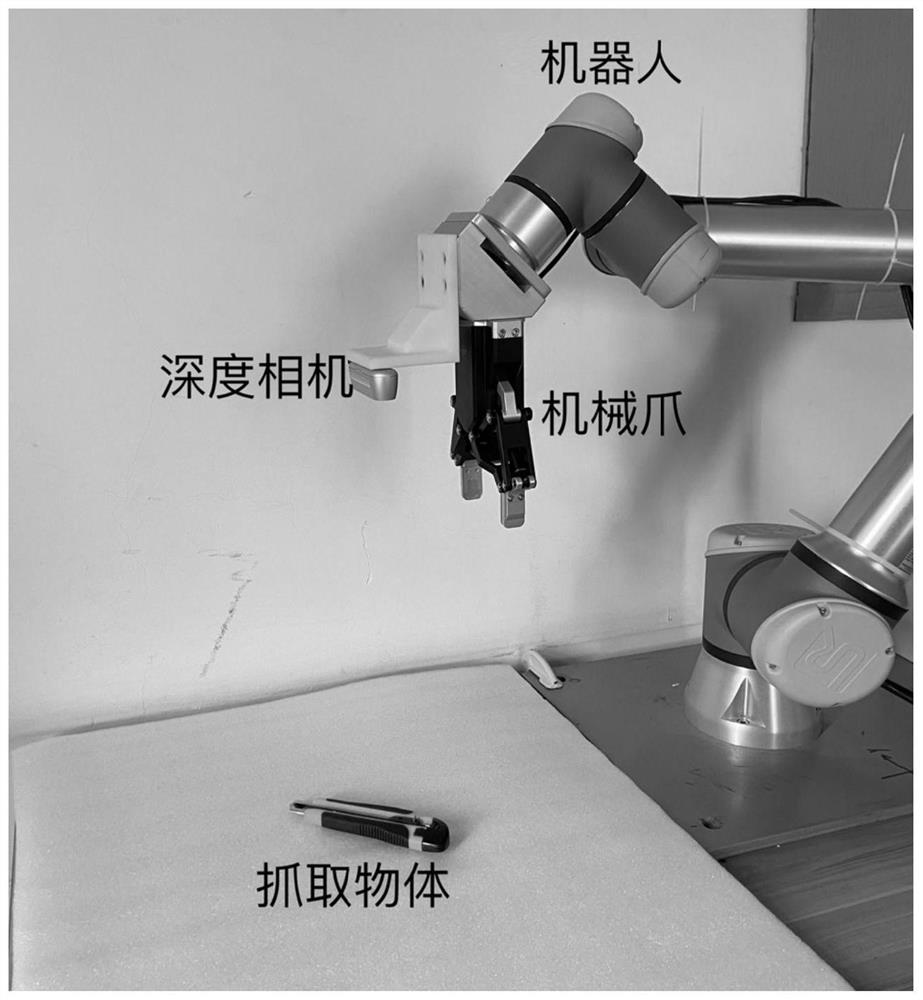

[0035] As shown in Figure 2, the physical grasping environment includes a physical robot, a two-finger parallel adaptive gripper, a depth camera, and a collection of objects to be grasped; the robot and the two-finger parallel adaptive gripper are the main actuators for grasping and are responsible ...
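The excerpt lists a depth camera among the physical components but is truncated before describing how its data is used. One common step when a depth camera feeds a grasp planner is to back-project a pixel and its depth into a 3D point with the pinhole camera model, then transform that point into the robot base frame. The sketch below assumes this step; the intrinsics (`fx`, `fy`, `cx`, `cy`) and the camera-to-base transform are placeholder values, not parameters stated in the patent.

```python
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def deproject_pixel(u: float, v: float, depth_m: float,
                    fx: float, fy: float, cx: float, cy: float) -> Point3:
    """Pinhole back-projection of pixel (u, v) with metric depth into the camera frame."""
    if depth_m <= 0:
        # A zero or negative depth usually means the sensor had no valid reading.
        raise ValueError("depth must be positive")
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_base(p_cam: Point3, T_base_cam: Sequence[Sequence[float]]) -> Point3:
    """Apply a 4x4 homogeneous transform (row-major nested lists).

    T_base_cam would typically come from hand-eye calibration (an assumption here).
    """
    x, y, z = p_cam
    return tuple(
        T_base_cam[i][0] * x + T_base_cam[i][1] * y + T_base_cam[i][2] * z + T_base_cam[i][3]
        for i in range(3)
    )


if __name__ == "__main__":
    # Placeholder intrinsics for a 640x480 image; not values from the source.
    p = deproject_pixel(380, 240, 0.5, fx=600, fy=600, cx=320, cy=240)
    # Placeholder transform: identity rotation, camera offset 0.4 m in x and 0.3 m in z.
    T = [[1, 0, 0, 0.4], [0, 1, 0, 0.0], [0, 0, 1, 0.3], [0, 0, 0, 1]]
    print(p, camera_to_base(p, T))
```

A pixel 60 columns right of the principal point at 0.5 m depth maps to roughly 0.05 m along the camera x-axis; the placeholder transform then shifts it into the base frame, where a grasp pose for the two-finger gripper could be expressed.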
