Hybrid lane-changing decision-making method for emergency vehicles based on reinforcement learning and avoidance strategy

The invention relates to emergency vehicles and reinforcement learning, and is applied in the fields of neural learning methods, biological neural network models, control devices, etc. It addresses the problems that existing approaches do not make full use of real-time traffic data, do not consider the impact on normal traffic flow, and ignore delays in response time. Its effects are improved data utilization, a balance between stability and exploration, and stimulation of the agent's learning.

Examples

Embodiment 1

[0172] The effect of the present invention on road-section decision-making for intelligent connected emergency vehicles is described in detail below through specific examples:

[0173] 1. First, the reinforcement learning part of the algorithm converges well. As shown in Figure 4, the loss function value tends toward zero after nearly 200,000 training steps;
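For readers unfamiliar with the loss being tracked, the following is a minimal sketch of a one-step temporal-difference loss of the kind commonly used with DQN. It is an illustration under stated assumptions, not the patent's implementation: the squared-error form, the discount factor `gamma`, and the function names `td_target` and `dqn_loss` are all hypothetical and do not appear in the source.

```python
from typing import List, Sequence, Tuple

# One transition summary: (Q(s, a), reward, Q_target(s', .) for all actions, episode ended?)
Sample = Tuple[float, float, Sequence[float], bool]


def td_target(reward: float, next_q_values: Sequence[float],
              done: bool, gamma: float = 0.99) -> float:
    """One-step Q-learning target: r + gamma * max_a' Q_target(s', a').

    gamma is an assumed discount factor; the source does not state one.
    """
    if done:
        # No bootstrapping past the end of an episode.
        return reward
    return reward + gamma * max(next_q_values)


def dqn_loss(batch: List[Sample], gamma: float = 0.99) -> float:
    """Mean squared TD error over a batch (assumed loss form)."""
    errors = [td_target(r, next_q, done, gamma) - q_sa
              for q_sa, r, next_q, done in batch]
    return sum(e * e for e in errors) / len(errors)
```

A loss near zero under this definition means the network's predicted action values agree with their bootstrapped targets on the sampled transitions. It says nothing by itself about the quality of the resulting policy, which is why transit time is monitored separately.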

[0174] 2. During training, the speed convergence of the DQN strategy and the "DQN + avoidance" hybrid strategy is monitored. As shown in Figure 5, both converge to a lower transit time than the baseline (the default car-following model, shown by the dotted line in the figure);

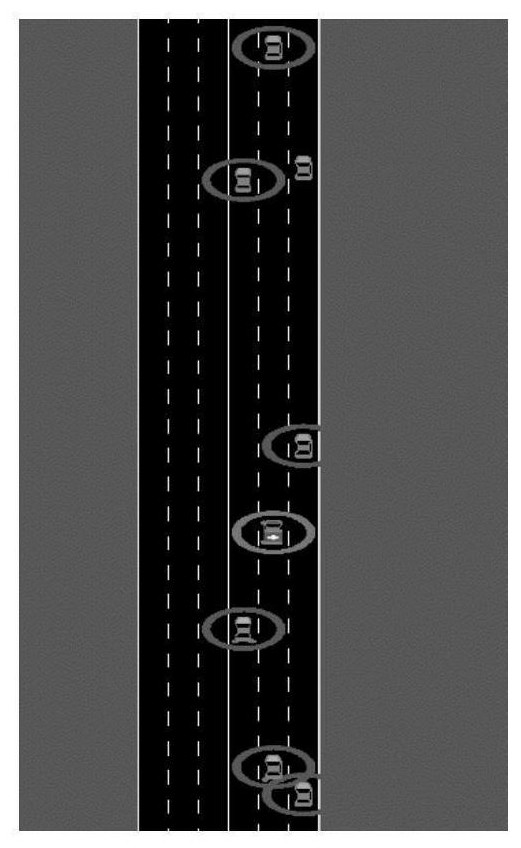
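The comparison against the baseline can be sketched as a smoothed check on per-episode transit times. This is only an illustrative sketch: the moving-average smoothing, the window size, and the names `moving_average` and `beats_baseline` are assumptions, and the numbers in the usage note are made up for demonstration, not results from the patent.

```python
from typing import List, Sequence


def moving_average(values: Sequence[float], window: int) -> List[float]:
    """Trailing moving average; returns one value per full window."""
    if window <= 0:
        raise ValueError("window must be positive")
    out: List[float] = []
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            # Drop the value that just left the window.
            running -= values[i - window]
        if i >= window - 1:
            out.append(running / window)
    return out


def beats_baseline(transit_times: Sequence[float], baseline: float,
                   window: int = 3) -> bool:
    """True if the latest smoothed transit time is below the baseline.

    baseline is assumed to be the transit time of the default
    car-following model, measured in the same units.
    """
    smoothed = moving_average(transit_times, window)
    return bool(smoothed) and smoothed[-1] < baseline
```

For example, with hypothetical transit times `[60, 50, 40, 38, 36]` and a hypothetical baseline of `45`, `beats_baseline` returns `True` because the last three-episode average is 38. With fewer episodes than the window it returns `False`, since no smoothed value exists yet.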

[0175] 3. The hybrid strategy would be expected to be more stable, but Figure 5 shows that this is not the case. A scenario like the one shown in Figure 6 often occurs, in which the vehicle in front continues to perform evasive actions due t...
