Laser radar and camera combined calibration method

A lidar and camera joint calibration technology, applied in image analysis, image enhancement, instruments, etc., that addresses the problem of low calibration accuracy and improves accuracy.


Examples

Embodiment 1

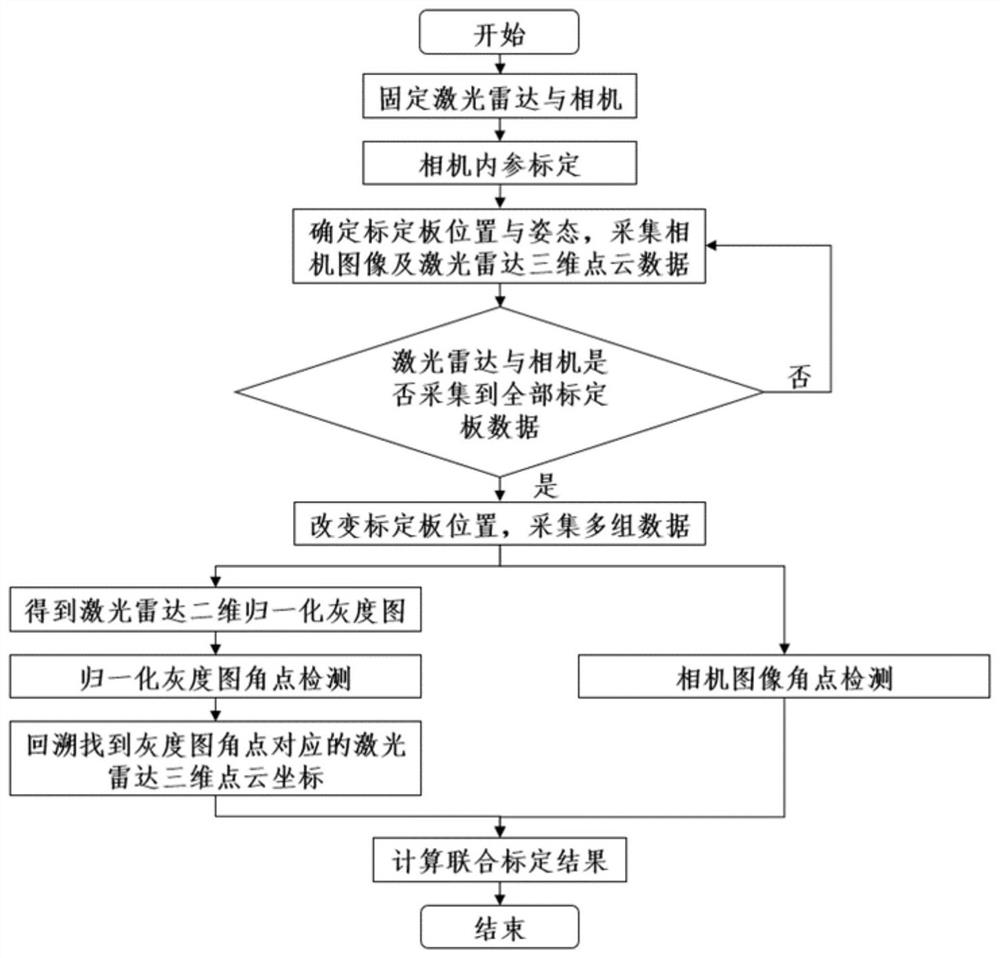

[0046] With reference to Figure 1, the laser radar and the camera are jointly calibrated in the following steps:

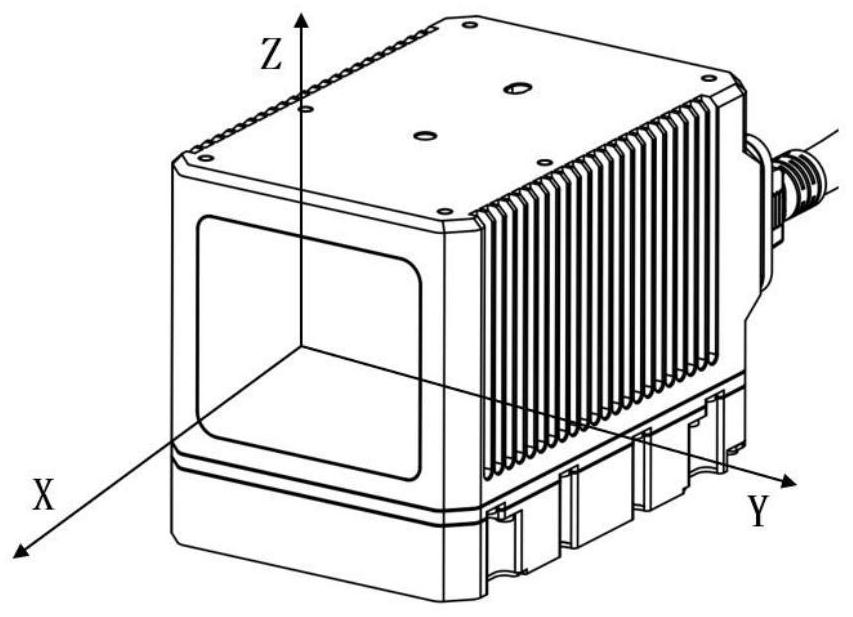

[0047] Step 1: Fix the laser radar and the camera on the same side, facing the same direction, so that the laser radar's field of view overlaps more than 50% of the camera's field of view.

[0048] Step 2: Calibrate the camera to obtain the camera intrinsic parameters (fx, fy, cx, cy), where fx and fy indicate the camera focal length, and cx and cy indicate the offset of the camera optical axis in the image coordinate system.
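To illustrate how the four intrinsic parameters from Step 2 are typically used, the following is a minimal sketch of the standard pinhole projection, which maps a 3D point in the camera coordinate system to pixel coordinates. The class name `Intrinsics`, the function `project`, and all numeric values are hypothetical and chosen only for illustration. Lens distortion is ignored, and the excerpt does not state which camera model the method itself uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intrinsics:
    """Pinhole intrinsics in pixels (hypothetical container, not from the source)."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # optical-axis offset along x (principal point)
    cy: float  # optical-axis offset along y (principal point)


def project(point_cam, k):
    """Project a 3D point (x, y, z) in camera coordinates to pixel coordinates (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u = k.fx * x / z + k.cx
    v = k.fy * y / z + k.cy
    return u, v


# Illustrative values only; real values come from the camera calibration in Step 2.
K = Intrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
u, v = project((0.5, -0.25, 2.0), K)
print(u, v)  # 890.0 235.0
```

A point on the optical axis, such as `(0, 0, 1)`, projects to `(cx, cy)`, which is why cx and cy are described as the offset of the optical axis in the image.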

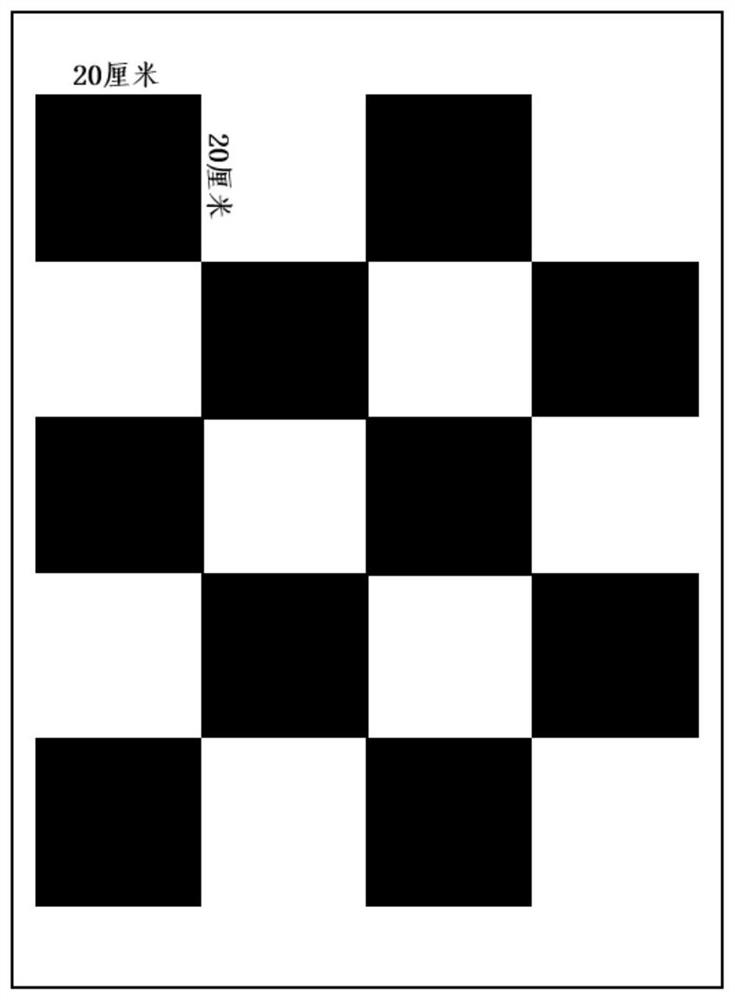

[0049] Step 3: In order to fully collect camera images and three-dimensional point cloud data at different positions within the region, a total of 9 different locations, A, B, C, D, E, F, G, H, and I, are selected in the common field of view (arranged, as in Figure 4, on concentric circles of different radii). At each location, the camera collects one frame of image data, and the laser radar collects 20 seconds of three-dimensional point cloud data. The chessboard used is shown in Figure 2; in order t...
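The location layout described in Step 3 can be sketched as follows. This is only an illustration under stated assumptions: the excerpt says the nine locations lie on concentric circles of different radii but does not give the radii, the number of locations per circle, or the angles, so the three radii and three bearings below are hypothetical, as is the function name `board_positions`.

```python
import math
from string import ascii_uppercase


def board_positions(radii_m, bearings_deg):
    """Return {label: (x, y)} for board locations on concentric arcs around the sensor.

    x points forward along the sensor axis and y to the left, both in metres.
    Labels are assigned A, B, C, ... in order of increasing radius.
    """
    positions = {}
    labels = iter(ascii_uppercase)
    for r in radii_m:
        for b in bearings_deg:
            a = math.radians(b)
            positions[next(labels)] = (r * math.cos(a), r * math.sin(a))
    return positions


# Assumed layout: 3 circles x 3 bearings = 9 locations, all inside the shared view.
plan = board_positions(radii_m=[2.0, 4.0, 6.0], bearings_deg=[-20.0, 0.0, 20.0])
for label, (x, y) in plan.items():
    # Per the text: one camera frame and 20 s of lidar point cloud data per location.
    print(f"{label}: x={x:.2f} m, y={y:.2f} m")
```

Spreading the locations over several ranges and bearings is one way to cover the shared field of view; the actual arrangement is the one shown in the patent's Figure 4.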
