Model accelerated training method and device based on training data similarity aggregation

A model training technique applied in neural learning methods, biological neural network models, character and pattern recognition, etc. It addresses the problem of slow model training, with the effects of improving training efficiency, reducing the probability of falling into a local optimum, and reducing the number of iterations.

Examples

Embodiment 1

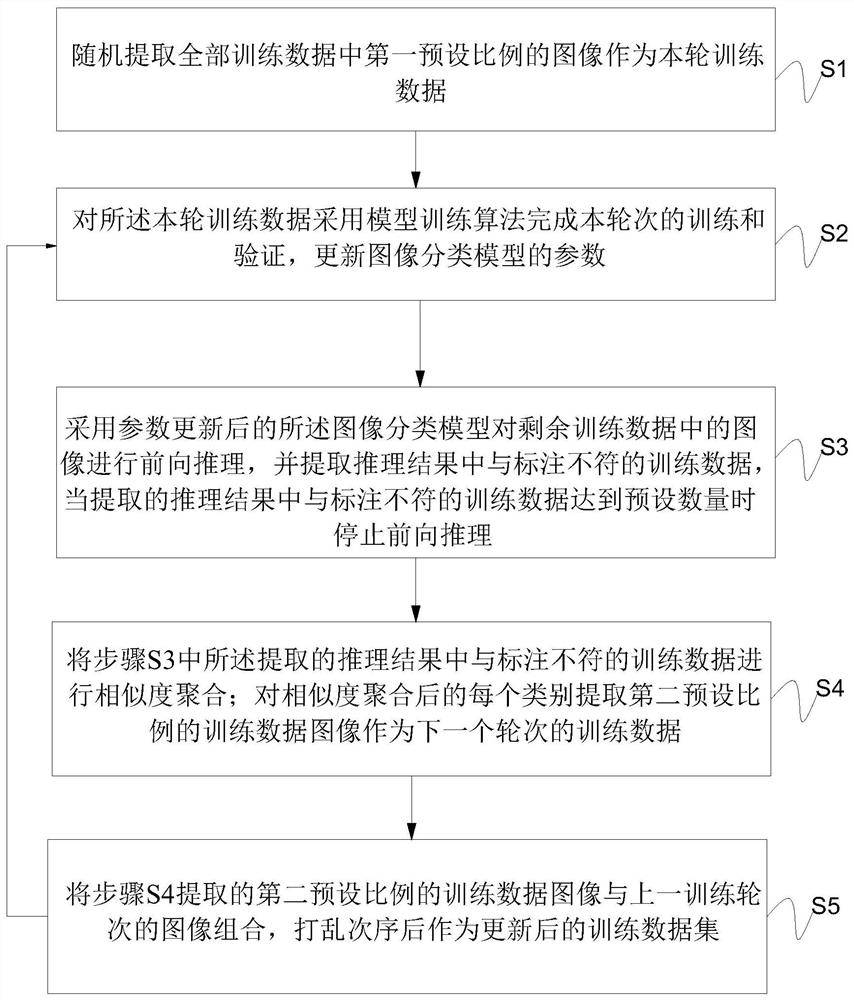

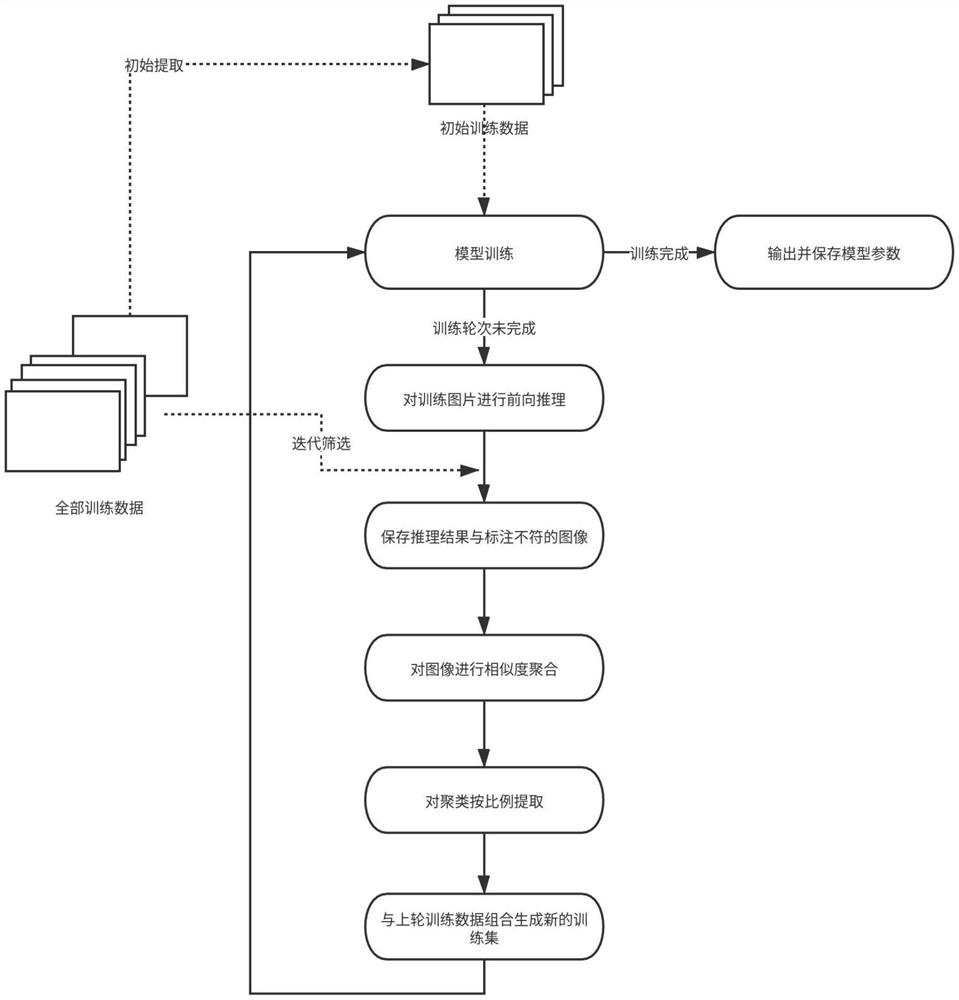

[0046] Referring to Figure 1 and Figure 2, a model acceleration training method based on training data similarity aggregation is provided, including the following steps:

[0047] S1: Randomly extract a first preset proportion of the images from all training data as the current round of training data;

[0048] S2: Apply a model training algorithm to the current round of training data to complete the current round of training and verification, and update the parameters of the image classification model;

[0049] S3: Use the image classification model with the updated parameters to perform forward inference on the images in the remaining training data, and extract the training data whose inference result is inconsistent with its label. Stop forward inference when the number of inconsistent samples reaches the set number;

[0050] S4: Perform similarity aggregation on the training data extracted in step S3, that is, the data whose inference results are inconsistent with their labels; extract a second preset proportion of training data images for ea...
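The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the patented implementation: the `(feature vector, label)` sample layout, the `predict` callable, the function names, and the use of greedy cosine-similarity grouping for the aggregation in S4 are all hypothetical choices, since the source does not specify them. Training itself (S2) is left out, and because the text of S4 is truncated, the sketch stops at grouping and does not attempt the subsequent extraction.

```python
import math
import random
from typing import Callable, Hashable, List, Sequence, Tuple

# Hypothetical sample layout: (feature vector, label).
Sample = Tuple[Sequence[float], Hashable]


def split_round(data: List[Sample], first_ratio: float,
                rng: random.Random) -> Tuple[List[Sample], List[Sample]]:
    """S1: randomly draw `first_ratio` of the data for the current round.

    Returns (current round data, remaining data).
    """
    indices = list(range(len(data)))
    rng.shuffle(indices)
    k = int(len(data) * first_ratio)
    current = [data[i] for i in indices[:k]]
    remaining = [data[i] for i in indices[k:]]
    return current, remaining


def collect_mismatches(predict: Callable[[Sequence[float]], Hashable],
                       remaining: List[Sample],
                       max_count: int) -> List[Sample]:
    """S3: run forward inference on the remaining data and collect samples
    whose prediction differs from the label, stopping at `max_count`."""
    mismatched: List[Sample] = []
    for features, label in remaining:
        if predict(features) != label:
            mismatched.append((features, label))
            if len(mismatched) >= max_count:
                break  # early stop: enough inconsistent samples found
    return mismatched


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def aggregate_by_similarity(samples: List[Sample],
                            threshold: float) -> List[List[Sample]]:
    """S4 (assumed method): greedy grouping. A sample joins the first group
    whose first member is at least `threshold` similar; otherwise it starts
    a new group."""
    groups: List[List[Sample]] = []
    for sample in samples:
        for group in groups:
            if cosine(sample[0], group[0][0]) >= threshold:
                group.append(sample)
                break
        else:
            groups.append([sample])
    return groups
```

The early `break` in `collect_mismatches` is what keeps the inference pass cheap: the model only scans as much of the remaining data as is needed to reach the set number of inconsistent samples.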

Embodiment 2

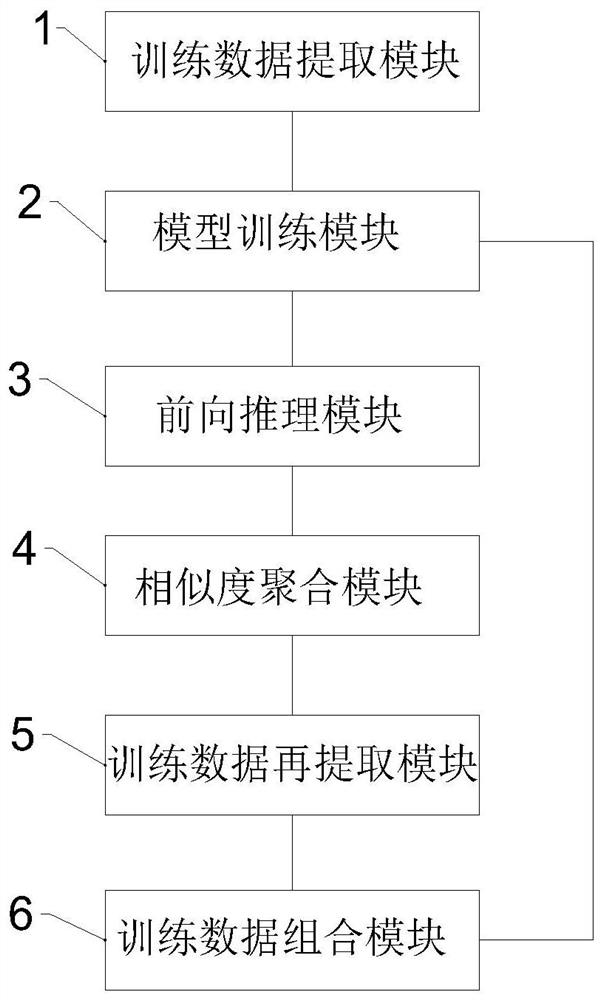

[0064] Referring to Figure 3, the present invention also provides a model acceleration training device based on training data similarity aggregation, which uses the above-mentioned model acceleration training method and includes:

[0065] The training data extraction module 1 is used to randomly extract a first preset proportion of the images from all training data as the current round of training data;

[0066] The model training module 2 is used to apply the model training algorithm to the current round of training data to complete the current round of training and verification, and to update the parameters of the image classification model;

[0067] The forward inference module 3 is used to perform forward inference on the images in the remaining training data using the image classification model with the updated parameters, and to extract the training data whose inference result is inconsistent with its label. Stop forward inference when the inconsistent...
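One way to picture how modules 1 to 3 fit together is as interchangeable callables wired into a single object. The sketch below is only an assumption about the composition: the class name `AcceleratedTrainingDevice`, the field names, and the callable signatures are hypothetical, and any modules described after the truncated text above are not represented.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

# Hypothetical sample layout: (image or features, label).
Sample = Tuple[Any, Any]
Predictor = Callable[[Any], Any]


@dataclass
class AcceleratedTrainingDevice:
    # Module 1: splits all data into (current round data, remaining data).
    extract: Callable[[List[Sample]], Tuple[List[Sample], List[Sample]]]
    # Module 2: trains on the current round data, returns the updated model.
    train: Callable[[List[Sample]], Predictor]
    # Module 3: runs the model on the remaining data and returns the samples
    # whose prediction is inconsistent with the label.
    infer: Callable[[Predictor, List[Sample]], List[Sample]]

    def run_round(self, data: List[Sample]) -> List[Sample]:
        """Run modules 1 to 3 once and return the inconsistent samples."""
        current, remaining = self.extract(data)
        model = self.train(current)
        return self.infer(model, remaining)
```

Keeping each module behind a plain callable means the sampling strategy, the training algorithm, and the stopping rule for inference can each be swapped without touching the others.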

Embodiment 3

[0083] The present invention provides a computer-readable storage medium. The computer-readable storage medium stores program code for accelerated model training based on training data similarity aggregation. The program code includes instructions for executing the model acceleration training method based on training data similarity aggregation in any of its possible implementations.

[0084] The computer-readable storage medium may be any available medium that can be accessed by a computer, or a data storage device, such as a server or a data center, that integrates one or more available media. The available medium may be a magnetic medium (for example, a floppy disk, a hard disk, or a magnetic tape), an optical medium (for example, a DVD), or a semiconductor medium (for example, a solid state disk (SSD)), and the like.
