Speech Emotion Recognition Method Based on Multimodal Feature Extraction and Fusion

A speech emotion recognition and feature extraction technology, applied in the fields of neural learning methods, natural language data processing, and biological neural network models. It addresses problems such as poor interpretability and the inability to classify how the model discriminates between emotions.


Embodiment Construction

[0055] To aid understanding of the present invention, an example embodiment is given here.

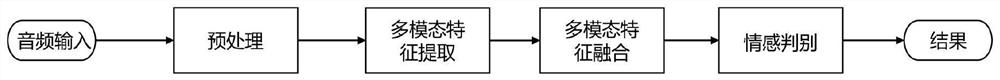

[0056] The invention discloses a speech emotion recognition method based on multimodal feature extraction and fusion. Figure 1 is the overall flowchart of the speech emotion recognition method of the present invention, whose steps include:

[0057] S1, data preprocessing;

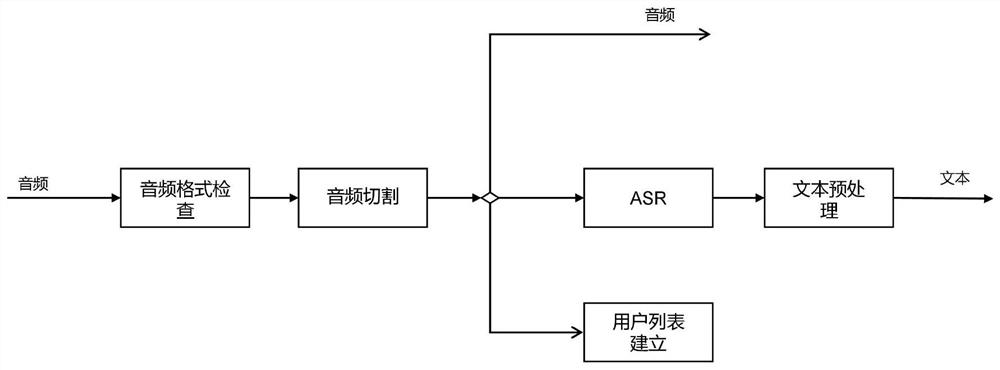

[0058] S11, audio file preprocessing. Figure 2 is the detailed flowchart of audio preprocessing, which includes:

[0059] S111, check the validity of the audio file format. Acoustic features can be extracted correctly only from a valid audio format, so a file in an invalid format is first converted into a valid format before subsequent processing. Specifically, the suffix of the audio file is checked: if the suffix is in the list of valid suffixes (including '.mp3', '.wav'), the file passes the format check; if it is not in the list, pyAudio is used to open so...
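The suffix check in S111 can be sketched as follows. This is a minimal illustration, not the patented implementation: the names `VALID_SUFFIXES`, `passes_format_check`, and `files_needing_conversion` are hypothetical, the case-insensitive comparison is an assumption (the description does not say), and the pyAudio-based conversion step is left out because the source text is truncated at that point.

```python
from pathlib import Path

# Valid suffixes named in the description. The source says "including",
# so the real list may contain further entries (assumption: only these two here).
VALID_SUFFIXES = {".mp3", ".wav"}


def passes_format_check(path: str) -> bool:
    """Return True if the file's suffix is in the valid-suffix list (step S111)."""
    # Path.suffix is the final extension including the dot, e.g. ".wav";
    # a name without an extension yields "".
    # Lower-casing is an assumption: the source does not state case sensitivity.
    return Path(path).suffix.lower() in VALID_SUFFIXES


def files_needing_conversion(paths):
    """Hypothetical helper: files that fail the check and would need conversion."""
    return [p for p in paths if not passes_format_check(p)]


if __name__ == "__main__":
    files = ["a.wav", "b.MP3", "c.flac", "d"]
    for f in files:
        print(f, passes_format_check(f))
    print(files_needing_conversion(files))
```

Only the files returned by `files_needing_conversion` (here `c.flac` and the extensionless `d`) would go on to the conversion step before acoustic feature extraction.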
