Lip-reading recognition method based on a generative adversarial network and a temporal convolutional network

A lip-reading recognition method based on a generative adversarial network and a temporal convolutional network. It targets three problems in existing approaches: poor recognition performance, interference with lip features, and attention mechanisms whose accuracy still needs improvement.

Embodiment Construction

[0031] Specific examples of the present invention are given below. The specific embodiments are only used to further describe the present invention in detail, and do not limit the protection scope of the claims of the present application.

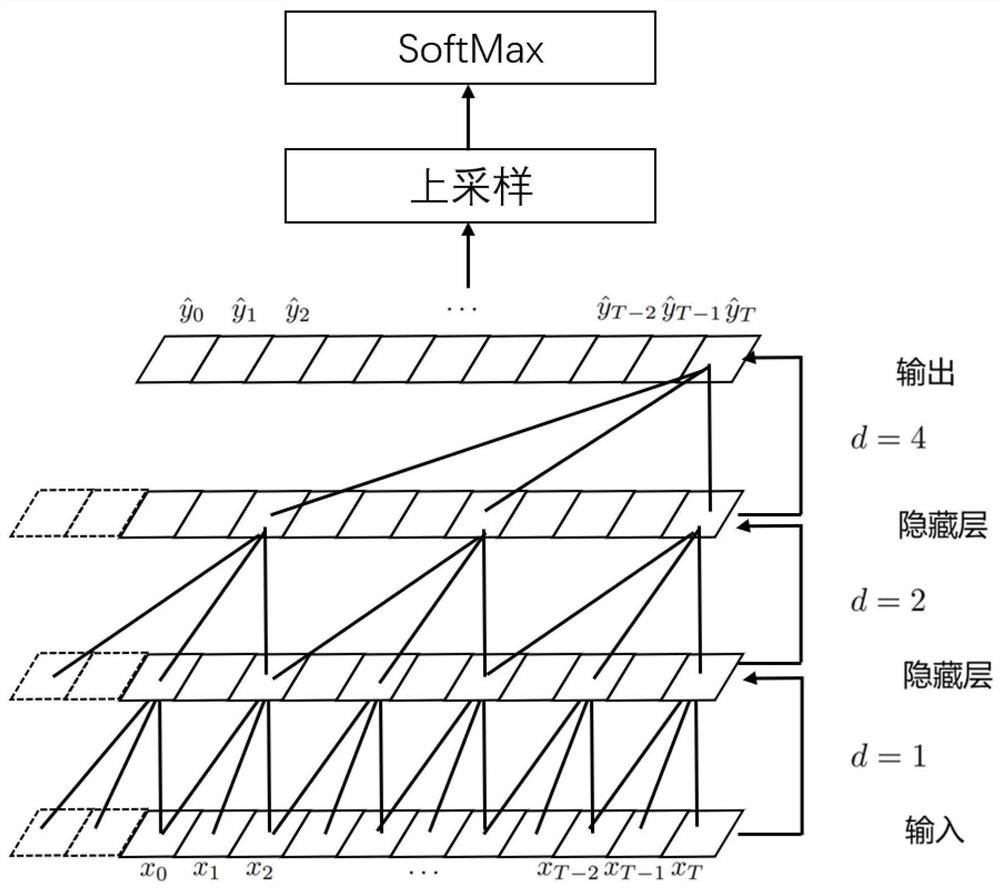

[0032] The present invention provides a lip-reading recognition method (hereinafter "the method") based on a generative adversarial network and a temporal convolutional network. The method comprises the following steps:

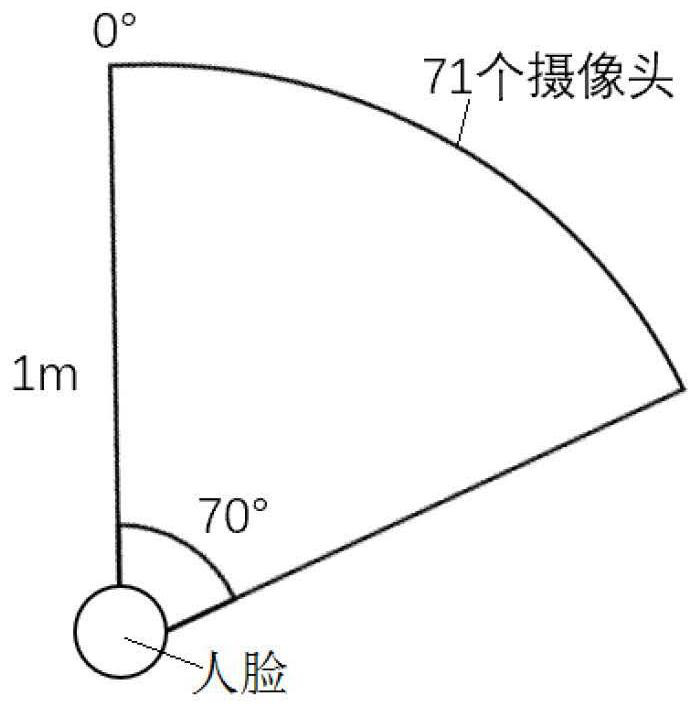

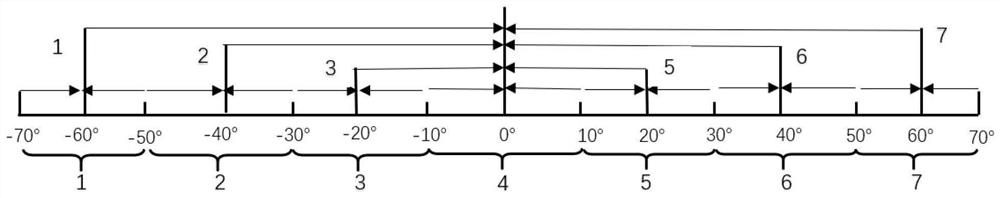

[0033] S1: prepare the raw data, which comprises the raw data for the recognition network and the dense multi-angle lip-change raw data;

[0034] Preferably, in S1, the raw data for the recognition network is prepared as follows: source videos and subtitle files are obtained from the Internet with a Python web crawler; the YOLOv5 face detection algorithm locates the face region in each source video; the face is then segmented and matched against the subtitle file, the raw data of the recognition net...
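The matching of face segments to subtitles described in S1 can be illustrated with a minimal sketch. It is not the patent's implementation. It assumes the subtitle files are in SRT format (the source does not name a format), that the frame rate is known, and that a face bounding box has already been produced by a detector such as YOLOv5. The names `Clip`, `srt_to_clips`, and `crop_box` are hypothetical helpers introduced for illustration only; the detector itself is not called here.

```python
import re
from dataclasses import dataclass

# Assumption: subtitles use SRT cue timing, e.g. "00:00:01,000 --> 00:00:03,000".
_CUE_TIME = re.compile(
    r"(\d+):(\d{2}):(\d{2})[,.](\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2})[,.](\d{3})"
)


@dataclass
class Clip:
    """Hypothetical record pairing a frame range with its subtitle text."""
    start_frame: int  # inclusive
    end_frame: int    # exclusive
    text: str


def _to_ms(h, m, s, ms):
    """Convert the four captured timestamp fields to integer milliseconds."""
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)


def srt_to_clips(srt_text, fps):
    """Map each subtitle cue to the range of video frames it covers."""
    clips = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        for i, line in enumerate(lines):
            match = _CUE_TIME.search(line)
            if match is None:
                continue
            fields = match.groups()
            start_ms = _to_ms(*fields[:4])
            end_ms = _to_ms(*fields[4:])
            start_frame = (start_ms * fps) // 1000      # round down
            end_frame = -(-(end_ms * fps) // 1000)      # round up
            clips.append(Clip(start_frame, end_frame, " ".join(lines[i + 1:])))
            break
    return clips


def crop_box(frame, box):
    """Crop a frame (list of pixel rows) to an (x1, y1, x2, y2) box.

    The box is assumed to come from a face detector; it is clamped to the
    frame bounds so that a box partly outside the image still yields a crop.
    """
    height = len(frame)
    width = len(frame[0]) if height else 0
    x1, y1, x2, y2 = box
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    return [row[x1:x2] for row in frame[y1:y2]]
```

In this sketch each `Clip` gives the frame range to cut from the source video, and `crop_box` would be applied to every frame in that range using the detected face box, so that each cropped face sequence is stored together with its subtitle text.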
