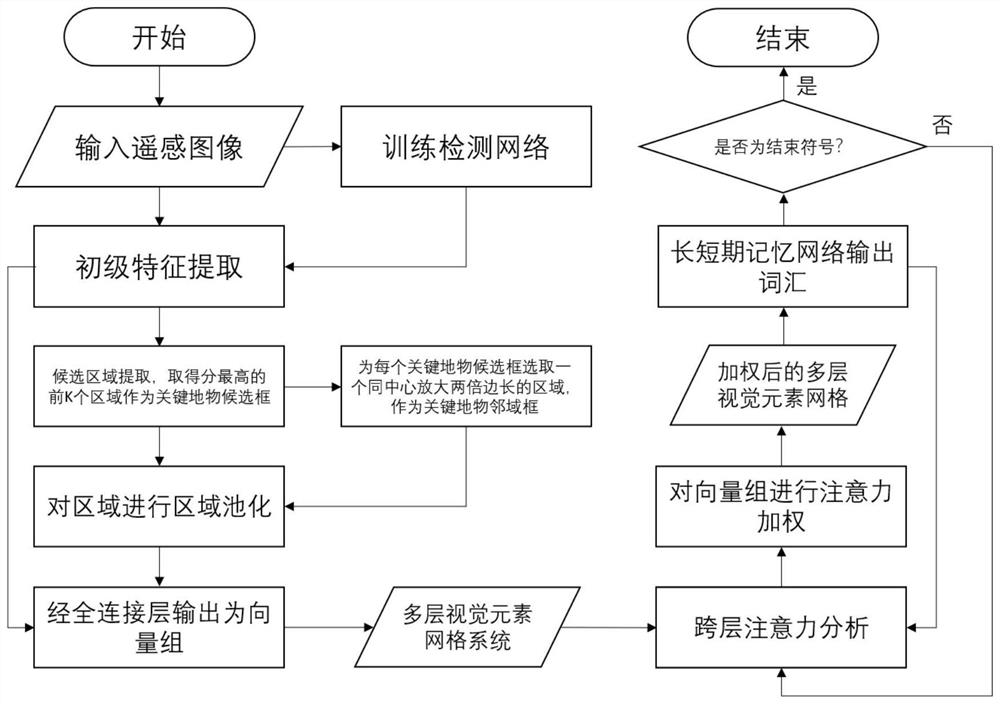

Remote sensing image text description generation method with multi-semantic-level attention capability

A remote sensing image captioning technology with multi-semantic-level attention, in the field of machine learning. It addresses three problems: existing methods cannot obtain sufficiently reasonable, fine-grained region attention on remote sensing images; such images are difficult to understand comprehensively; and semantic distribution and density differ markedly between images.
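The "region attention" mentioned above refers to conventional soft spatial attention. Below is a minimal sketch of that single-level mechanism, which is what the invention is contrasted with. It is not the patented multi-semantic-level method, because this page does not describe that method. The sketch rests on several assumptions. Region features are plain lists of floats, one per cell of a CNN feature map. The query is the decoder's hidden state at the current word. Scores come from a dot product, whereas published baselines often use an additive (tanh) scoring layer instead.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the maximum score before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(regions: List[Vector], query: Vector) -> Tuple[List[float], Vector]:
    """Soft attention over image regions with dot-product scoring (assumed).

    regions: one feature vector per spatial cell (hypothetical layout).
    query:   decoder hidden state for the word being generated.
    Returns the attention weights and the attended context vector.
    """
    # One relevance score per region.
    scores = [sum(r_d * q_d for r_d, q_d in zip(r, query)) for r in regions]
    weights = softmax(scores)
    dim = len(regions[0])
    # Context vector: attention-weighted sum of the region features.
    context = [sum(w * r[d] for w, r in zip(weights, regions)) for d in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Three toy regions; the query aligns with the first one.
    w, ctx = spatial_attention([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], [2.0, 0.0])
    print(w, ctx)
```

In this sketch each word weights a single grid of regions using a single query. The title suggests the invention instead attends at several semantic levels, but the page does not give those details.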

Specific Embodiment

[0037] 1. Experimental conditions

[0038] This embodiment runs on an NVIDIA GTX 1070 GPU with 8 GB of video memory under the Windows operating system. The simulation experiments are implemented in Python.

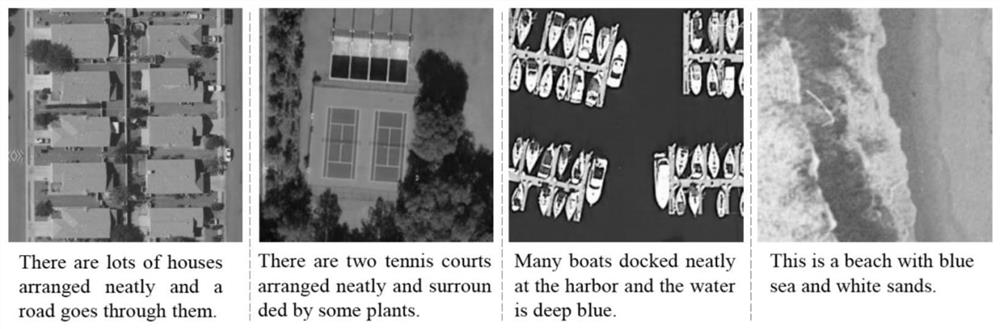

[0039] The simulation uses a public remote sensing image captioning dataset, UCM-Captions. The dataset contains 2,100 remote sensing images, each annotated with 5 reference sentences.

[0040] 2. Simulation content

[0041] First, three metrics are adopted to evaluate the quality of the sentences generated by the present invention: BLEU, CIDEr and ROUGE-L. All three measure how closely a generated sentence matches the reference sentences. To demonstrate the effectiveness of the present invention, the experimental results are compared with two baselines: a method based on traditional spatial attention, and an attention method that takes attribute information as additional input. The method based on traditional spatial attention is described in the literature "X.Lu, B.W...
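The text names the metrics but not how they are computed. Below is a minimal, dependency-free sketch of two of them, assuming simple whitespace tokenization:

- ROUGE-L as an LCS-based F-measure over multiple references. It follows the common coco-caption convention (an assumption here): β = 1.2, with the maximum precision and the maximum recall taken over the references.
- Sentence-level BLEU with clipped n-gram precision and a brevity penalty, without smoothing.

Captioning papers usually report corpus-level scores from the coco-caption toolkit, so this sketch will not reproduce published numbers exactly. CIDEr is omitted because it needs TF-IDF statistics over the whole reference corpus.

```python
import math
from collections import Counter
from typing import List, Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    # Dynamic-programming longest common subsequence, keeping one row at a time.
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            curr[j] = prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l(candidate: str, references: List[str], beta: float = 1.2) -> float:
    # ROUGE-L F-measure using the best LCS precision and recall over references.
    cand = candidate.split()
    if not cand:
        return 0.0
    precs, recs = [], []
    for ref in references:
        ref_toks = ref.split()
        lcs = lcs_length(cand, ref_toks)
        precs.append(lcs / len(cand))
        recs.append(lcs / len(ref_toks) if ref_toks else 0.0)
    p, r = max(precs), max(recs)
    if p == 0.0 or r == 0.0:
        return 0.0
    return (1 + beta ** 2) * p * r / (r + beta ** 2 * p)


def ngram_counts(tokens: List[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate: str, references: List[str], n: int) -> float:
    # BLEU's clipped precision: a candidate n-gram is credited at most as many
    # times as it occurs in any single reference.
    cand = ngram_counts(candidate.split(), n)
    if not cand:
        return 0.0
    max_ref: Counter = Counter()
    for ref in references:
        max_ref |= ngram_counts(ref.split(), n)  # elementwise max of counts
    clipped = sum(min(count, max_ref[g]) for g, count in cand.items())
    return clipped / sum(cand.values())


def sentence_bleu(candidate: str, references: List[str], max_n: int = 4) -> float:
    # Geometric mean of clipped precisions times a brevity penalty (no smoothing).
    precisions = [modified_precision(candidate, references, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0
    c = len(candidate.split())
    # Effective reference length: closest to the candidate, shorter on ties.
    r = min((len(ref.split()) for ref in references), key=lambda n_ref: (abs(n_ref - c), n_ref))
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(math.log(p) for p in precisions) / max_n)
```

UCM-Captions provides five references per image, so each generated caption can be passed to `rouge_l` or `sentence_bleu` together with all five of its references.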
