106 results about "Visual attentiveness" patented technology

Visual attention and emotional response detection and display system

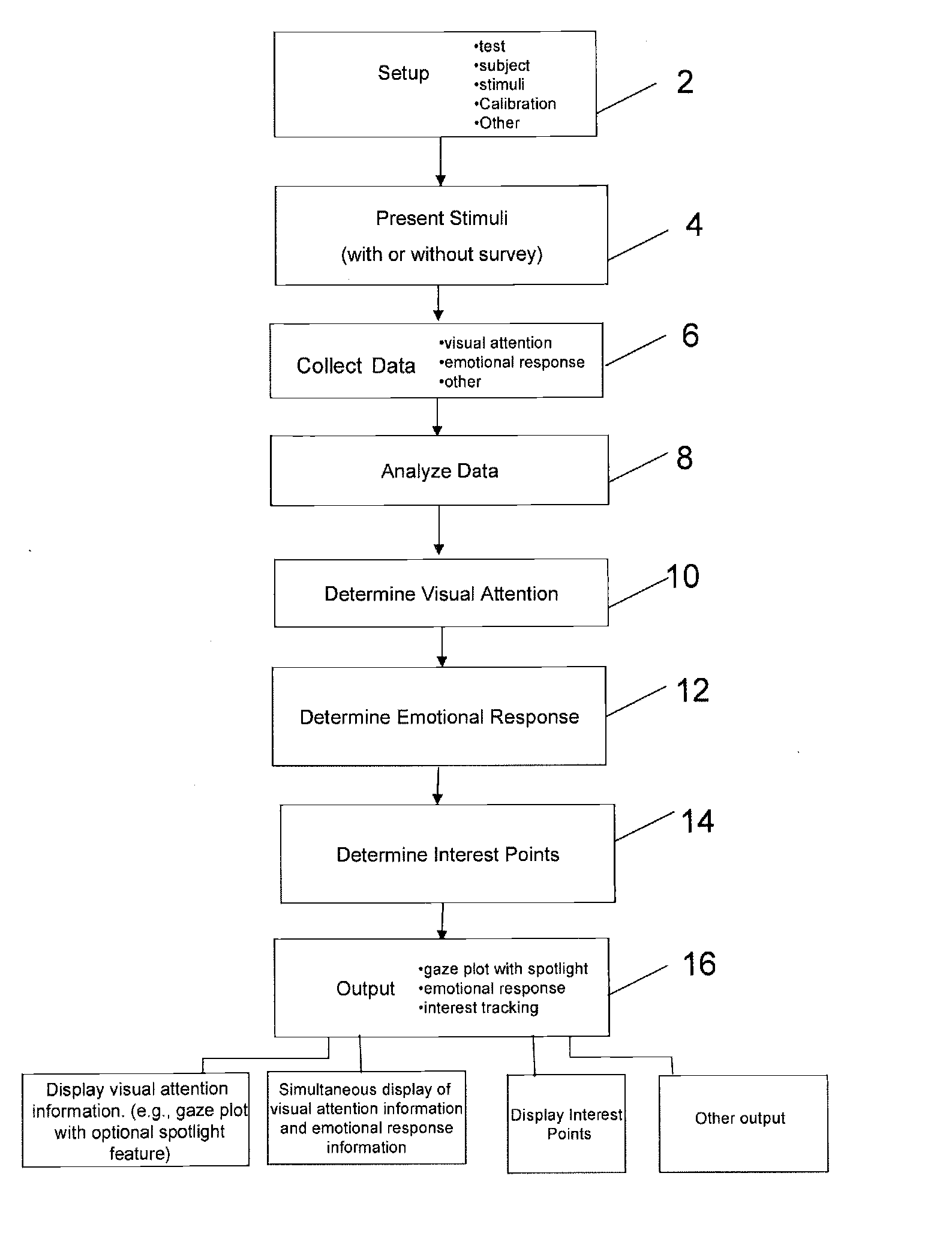

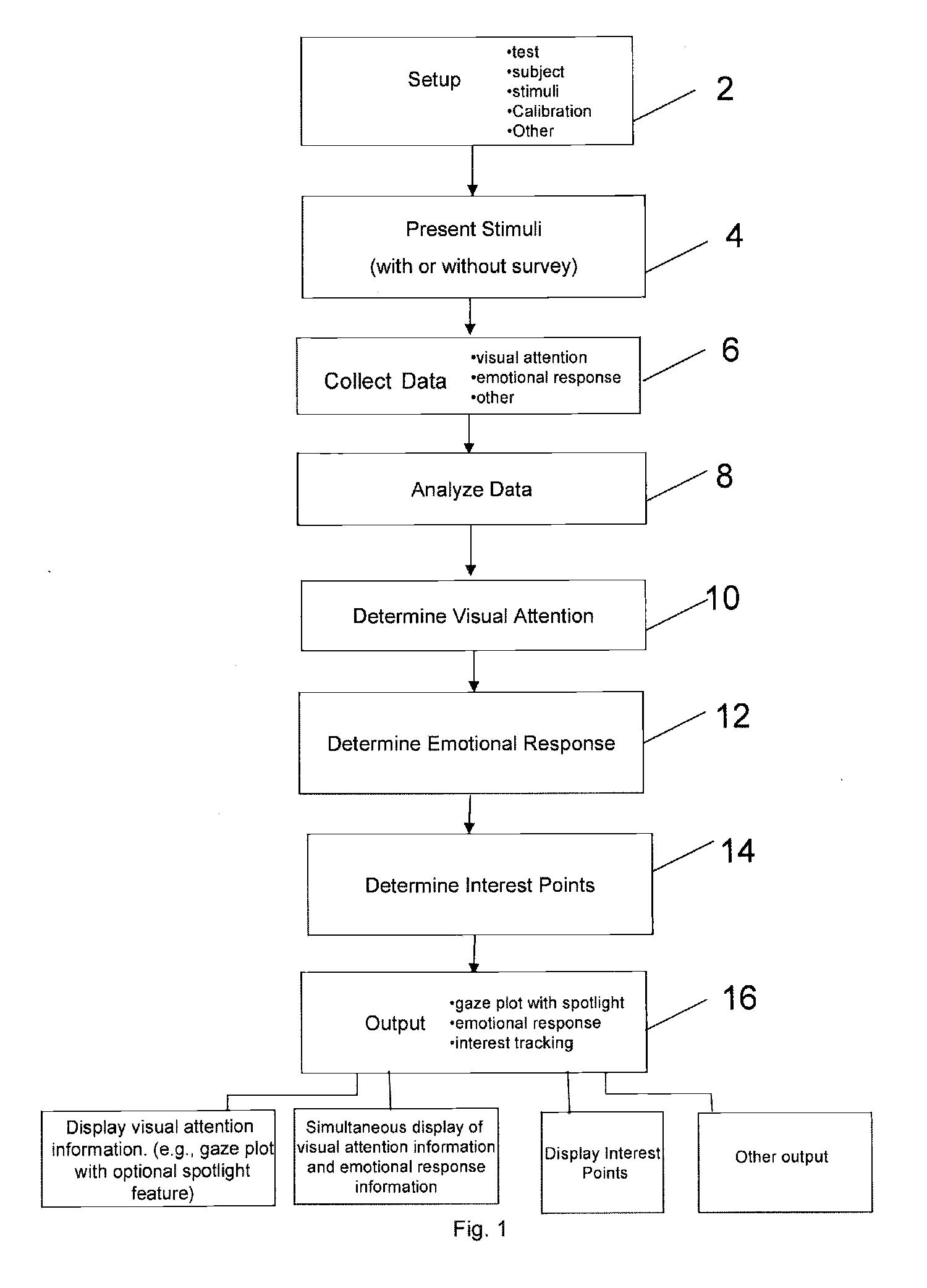

The invention is a system and method for determining visual attention that supplements eye-tracking measurements with other physiological signal measurements, such as those indicating emotions. The system and method are capable of registering stimulus-related emotions from eye-tracking data. An eye-tracking device and other sensors of the system collect eye properties and/or other physiological properties, which allows a subject's emotional response and visual attention to be observed and analyzed in relation to stimuli.

Owner:IMOTIONS EMOTION TECH

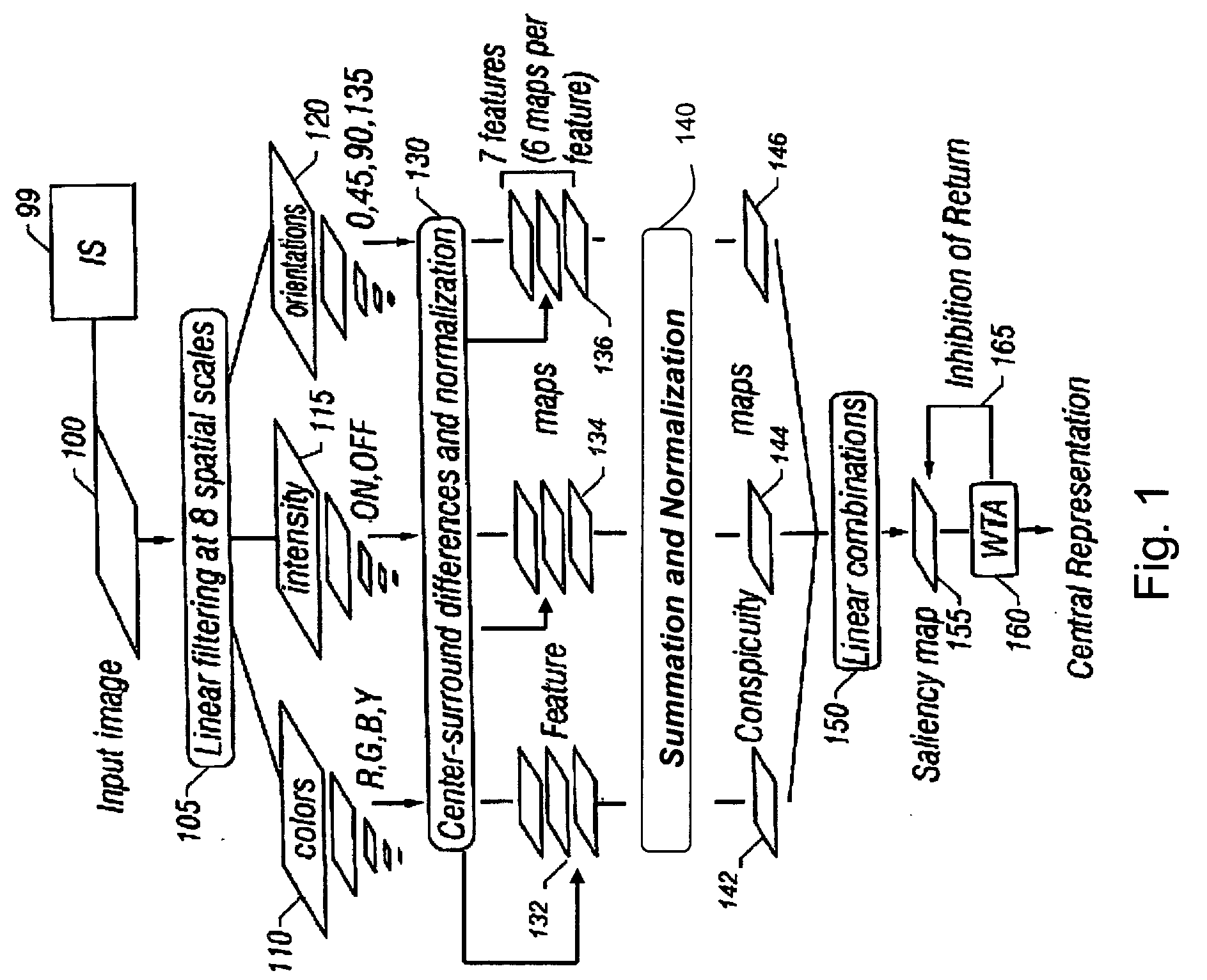

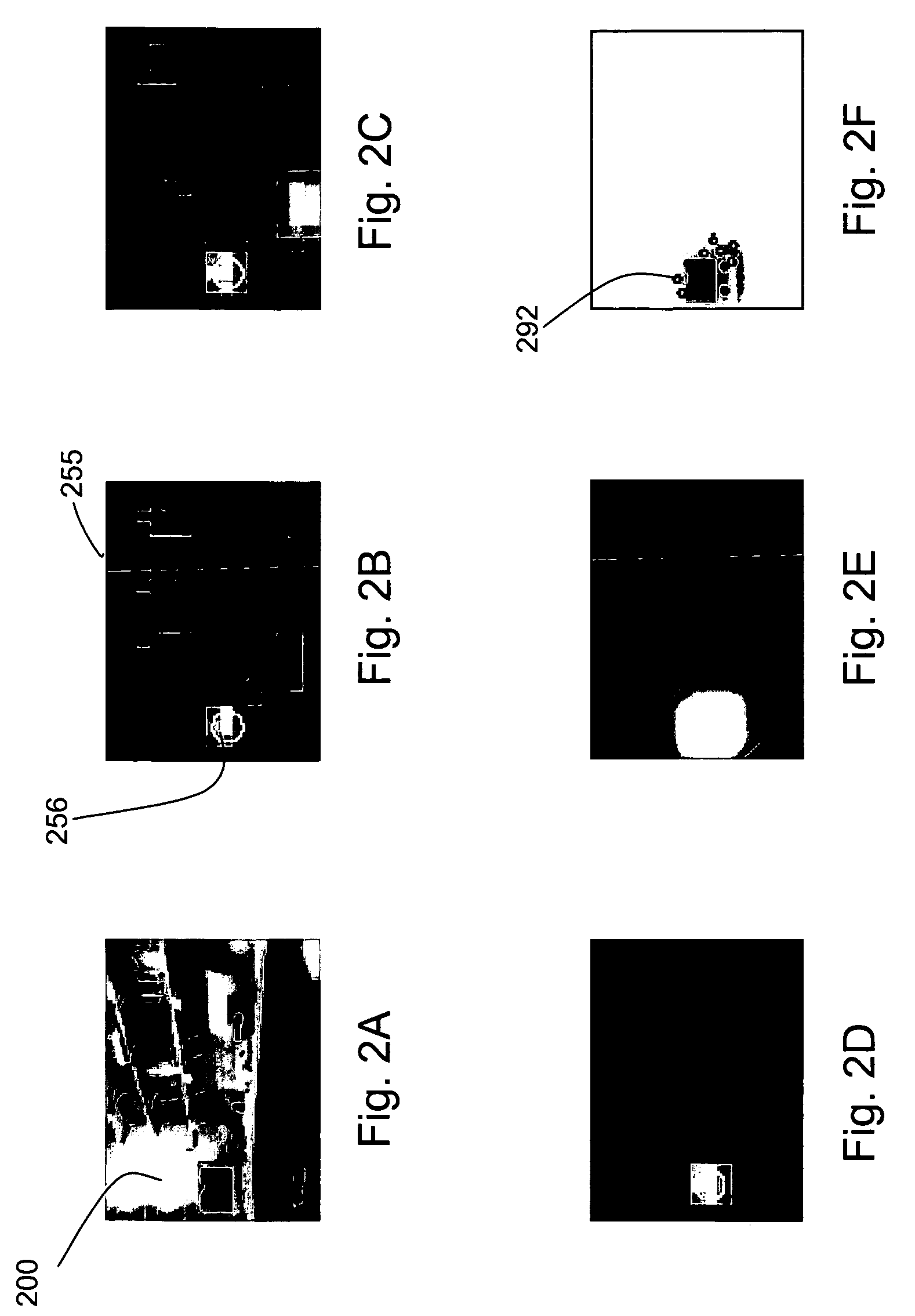

System and method for attentional selection

Inactive | US20050047647A1 | Overcome limitations | Character and pattern recognition | Pattern recognition | Visual perception

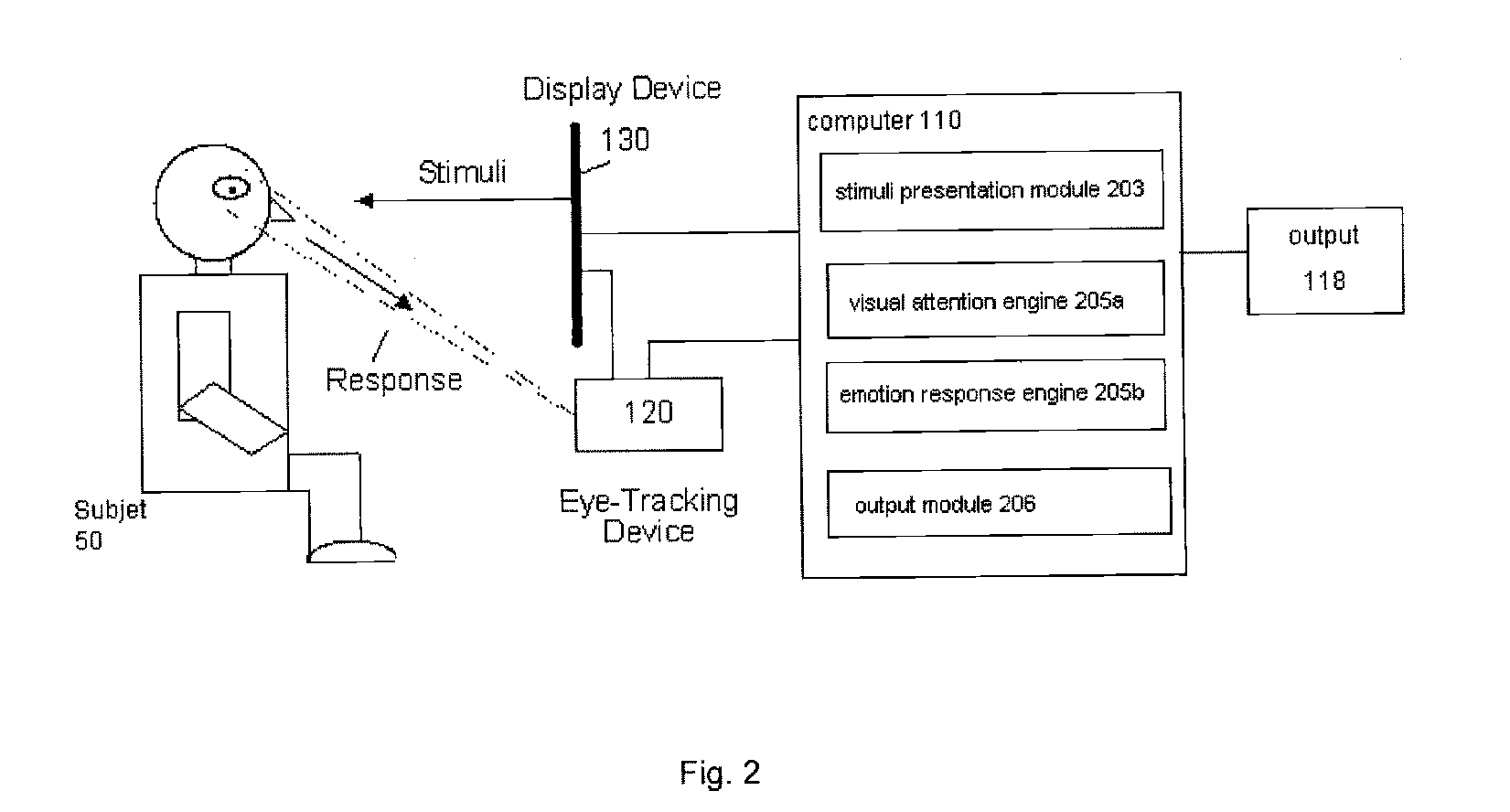

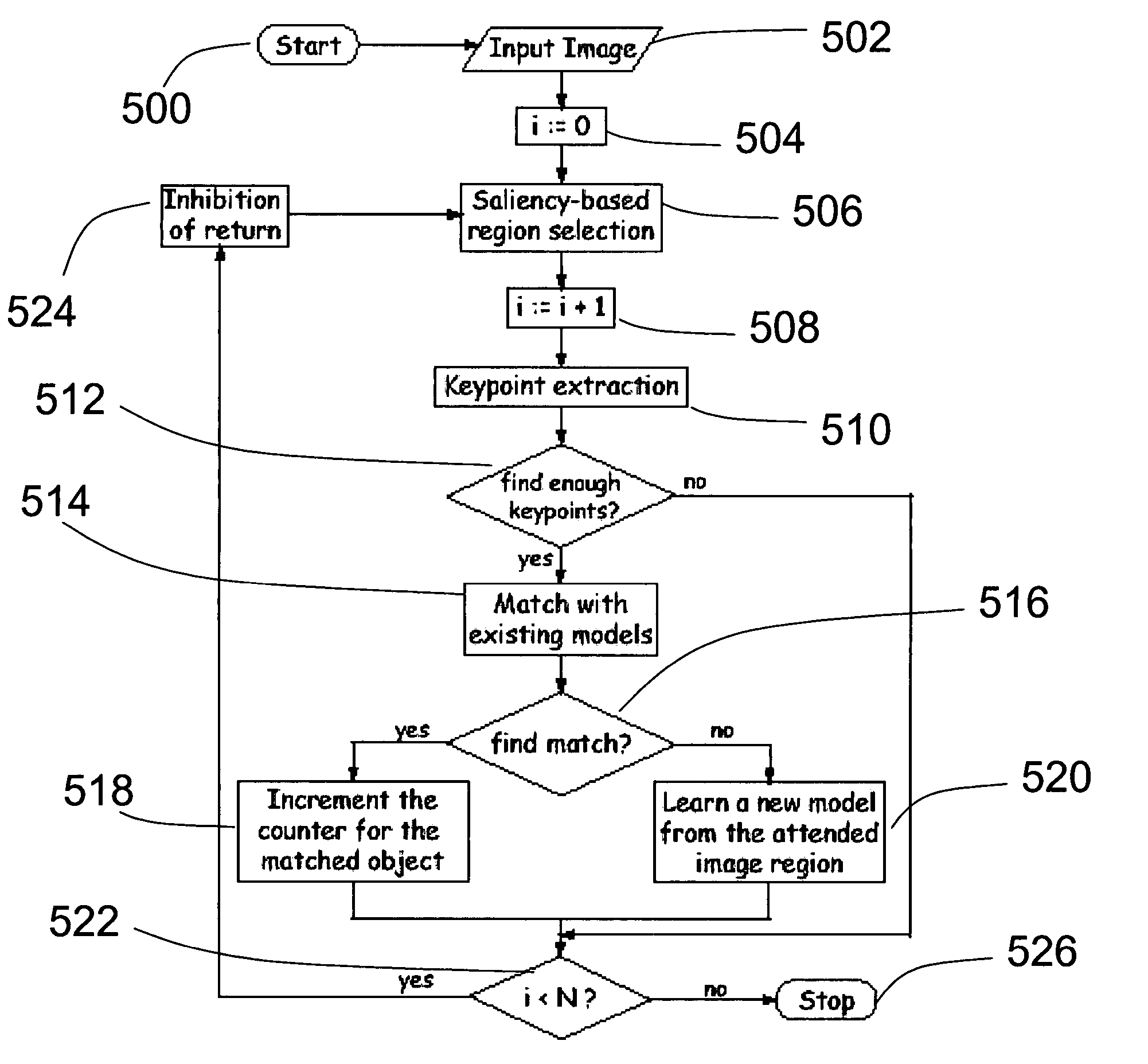

The present invention relates to a system and method for attentional selection. More specifically, the present invention relates to a system and method for the automated selection and isolation of salient regions likely to contain objects, based on bottom-up visual attention, in order to allow unsupervised one-shot learning of multiple objects in cluttered images.

Owner:CALIFORNIA INST OF TECH

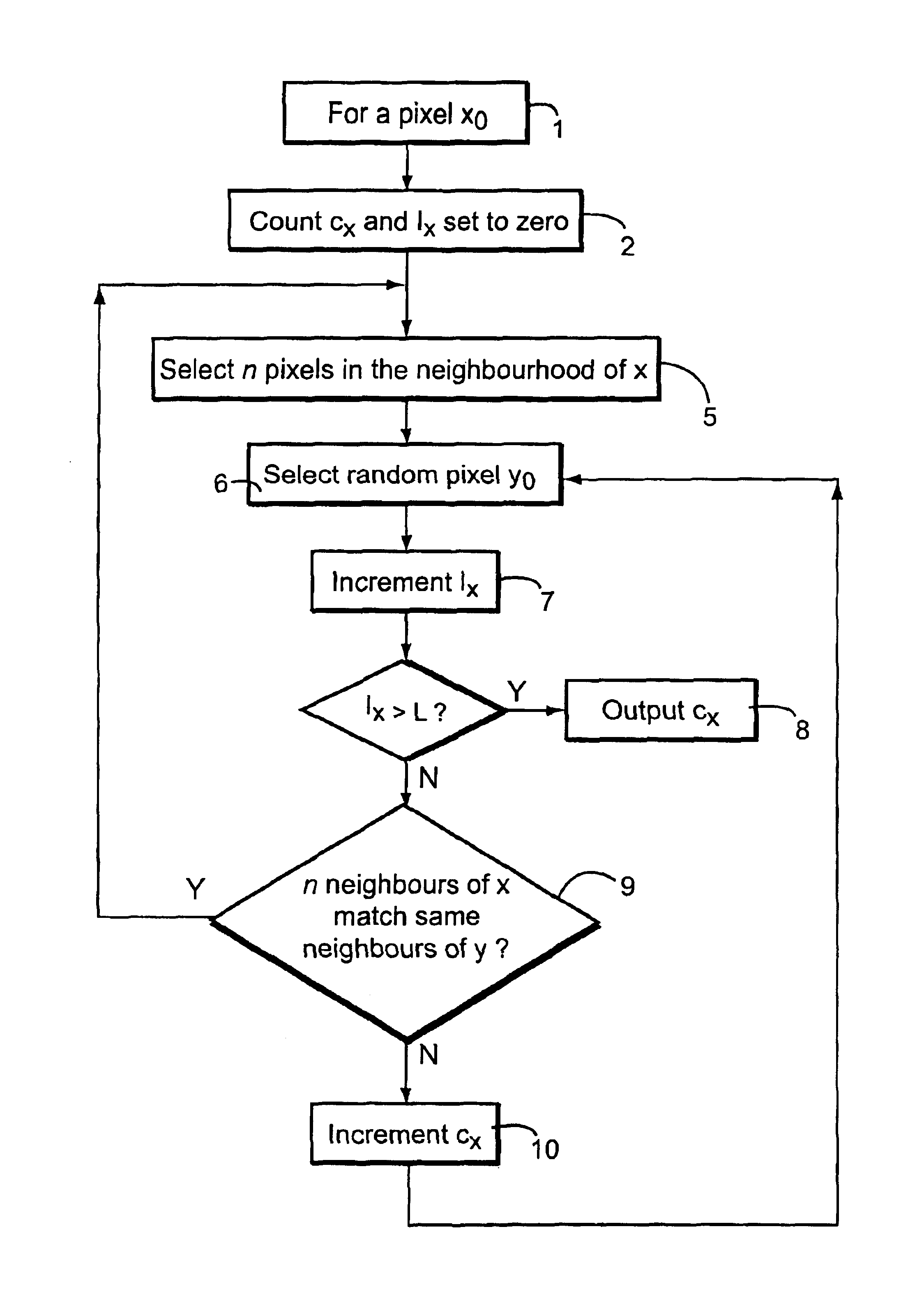

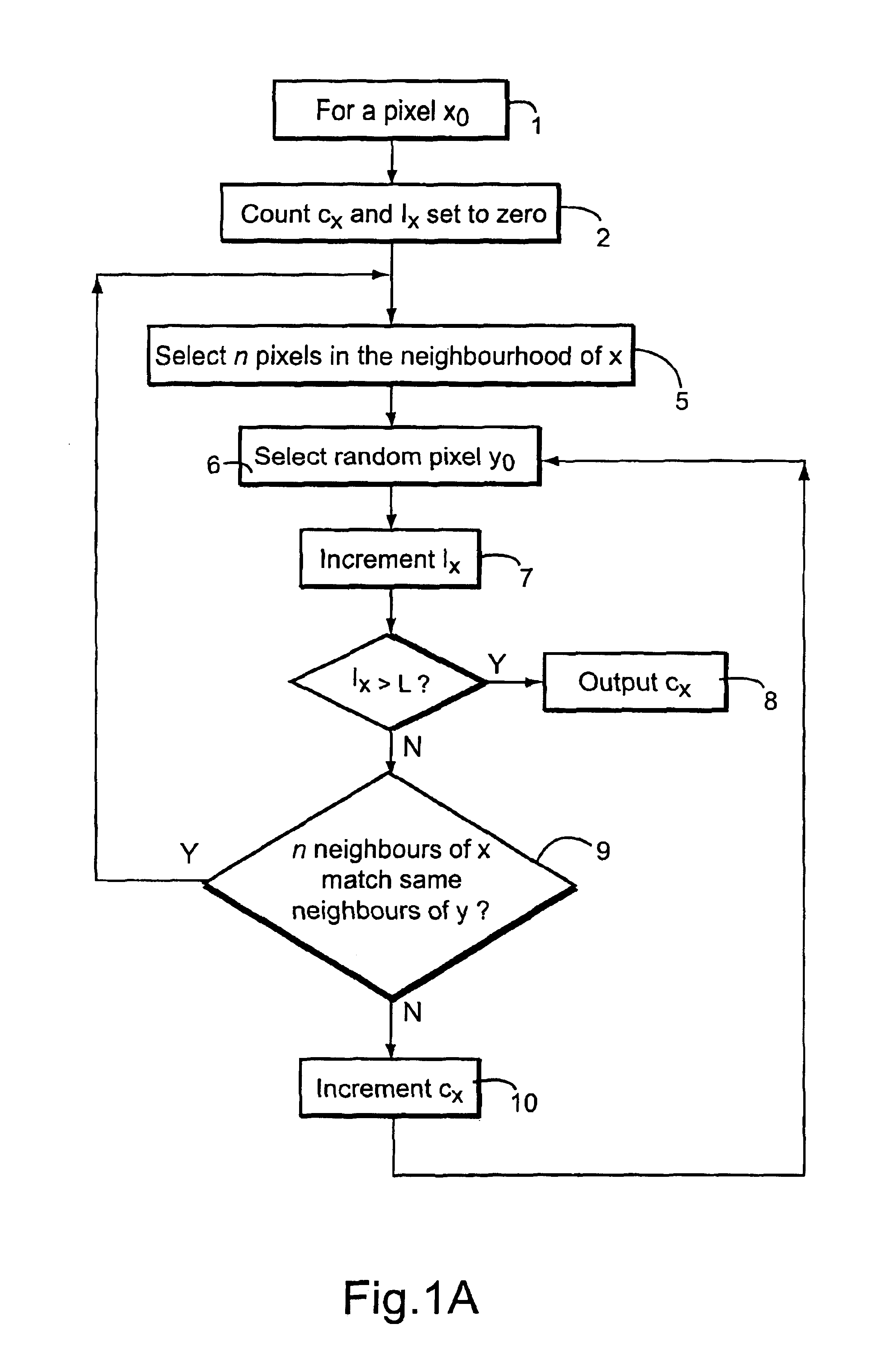

Visual attention system

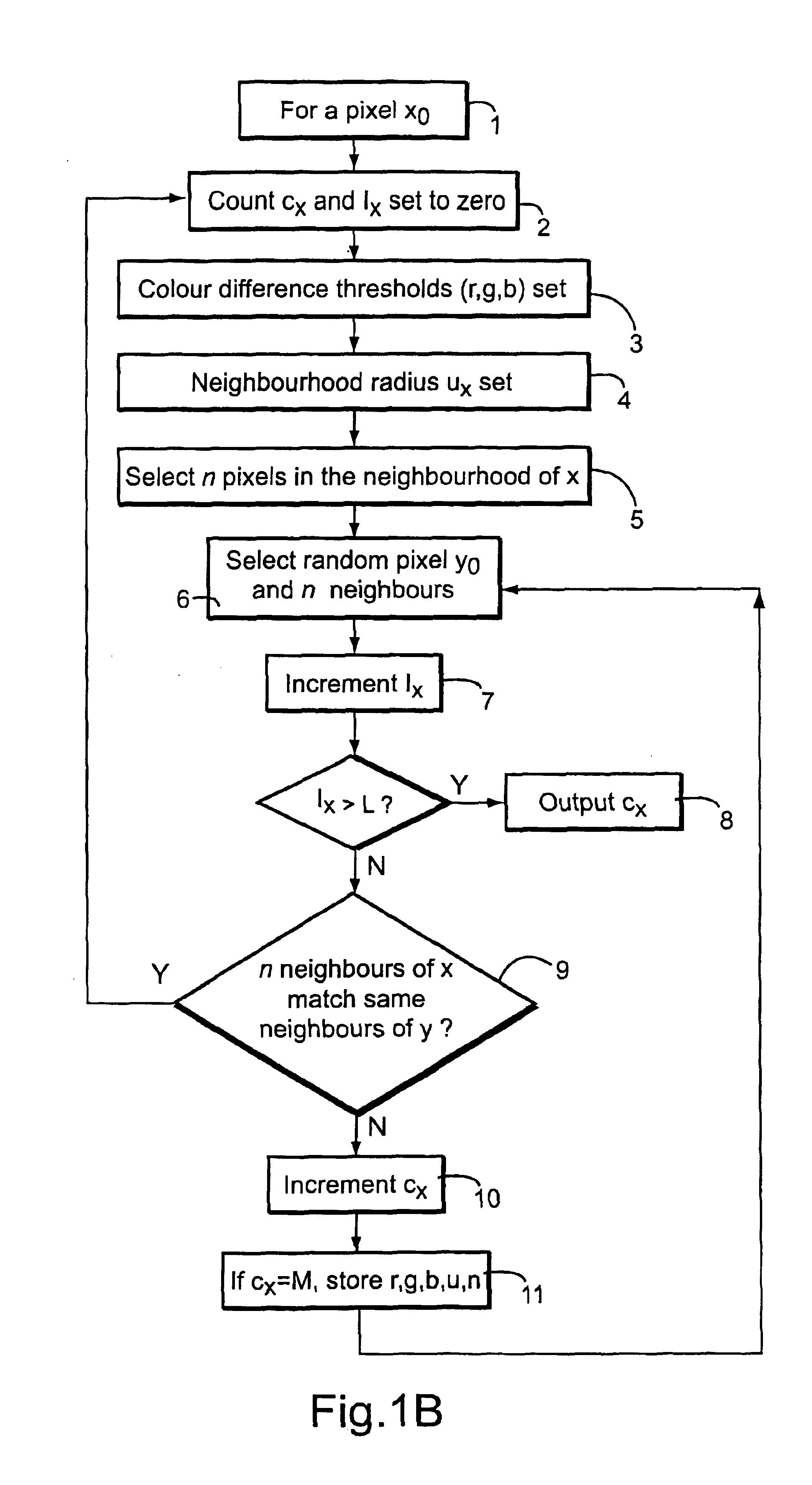

Inactive | US6934415B2 | Reduce usage | Easy to operate | Color television with pulse code modulation | Image analysis | Visibility | Pattern recognition

The most significant features in visual scenes are identified without prior training, by measuring the difficulty in finding similarities between neighbourhoods in the scene. Pixels in an area that is similar to much of the rest of the scene score low measures of visual attention. On the other hand, a region that possesses many dissimilarities with other parts of the image will attract a high measure of visual attention. A trial and error process is used to find dissimilarities between parts of the image and does not require prior knowledge of the nature of the anomalies that may be present. The use of processing dependencies between pixels is avoided while still providing a straightforward parallel implementation for each pixel. Such techniques are of wide application in searching for anomalous patterns in health screening, quality control processes and in analysis of visual ergonomics for assessing the visibility of signs and advertisements. A measure of significant features can be provided to an image processor in order to provide variable rate image compression.

Owner:BRITISH TELECOMM PLC
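
The trial-and-error comparison described in the abstract above can be illustrated with a small sketch. It is not the patented procedure: the function name `attention_map`, the parameters `radius`, `n_offsets`, `trials`, `tol` and `seed`, and the choice to score a pixel by counting mismatching trials are all assumptions made for illustration. Each pixel is scored independently of the others, which is what makes a per-pixel parallel implementation straightforward.

```python
import random


def attention_map(img, radius=1, n_offsets=3, trials=20, tol=10, seed=0):
    """Score each interior pixel by how often a randomly chosen
    neighbourhood pattern fails to match at a random other location.

    img is a list of rows of grey levels. All parameter names and
    defaults are hypothetical; border pixels keep a score of 0.
    """
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    offsets_pool = [(dy, dx)
                    for dy in range(-radius, radius + 1)
                    for dx in range(-radius, radius + 1)]
    scores = [[0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            for _ in range(trials):
                # Random set of neighbour offsets around (y, x).
                offs = rng.sample(offsets_pool, n_offsets)
                # Random comparison location elsewhere in the image.
                cy = rng.randrange(radius, h - radius)
                cx = rng.randrange(radius, w - radius)
                if (cy, cx) == (y, x):
                    continue  # comparing a pixel with itself tells us nothing
                mismatch = any(
                    abs(img[y + dy][x + dx] - img[cy + dy][cx + dx]) > tol
                    for dy, dx in offs
                )
                if mismatch:
                    scores[y][x] += 1
    return scores
```

On a uniform image every score stays at zero, while pixels whose neighbourhood contains an outlier accumulate mismatches and therefore score higher.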

Method and Device for Detecting Safe Driving State of Driver

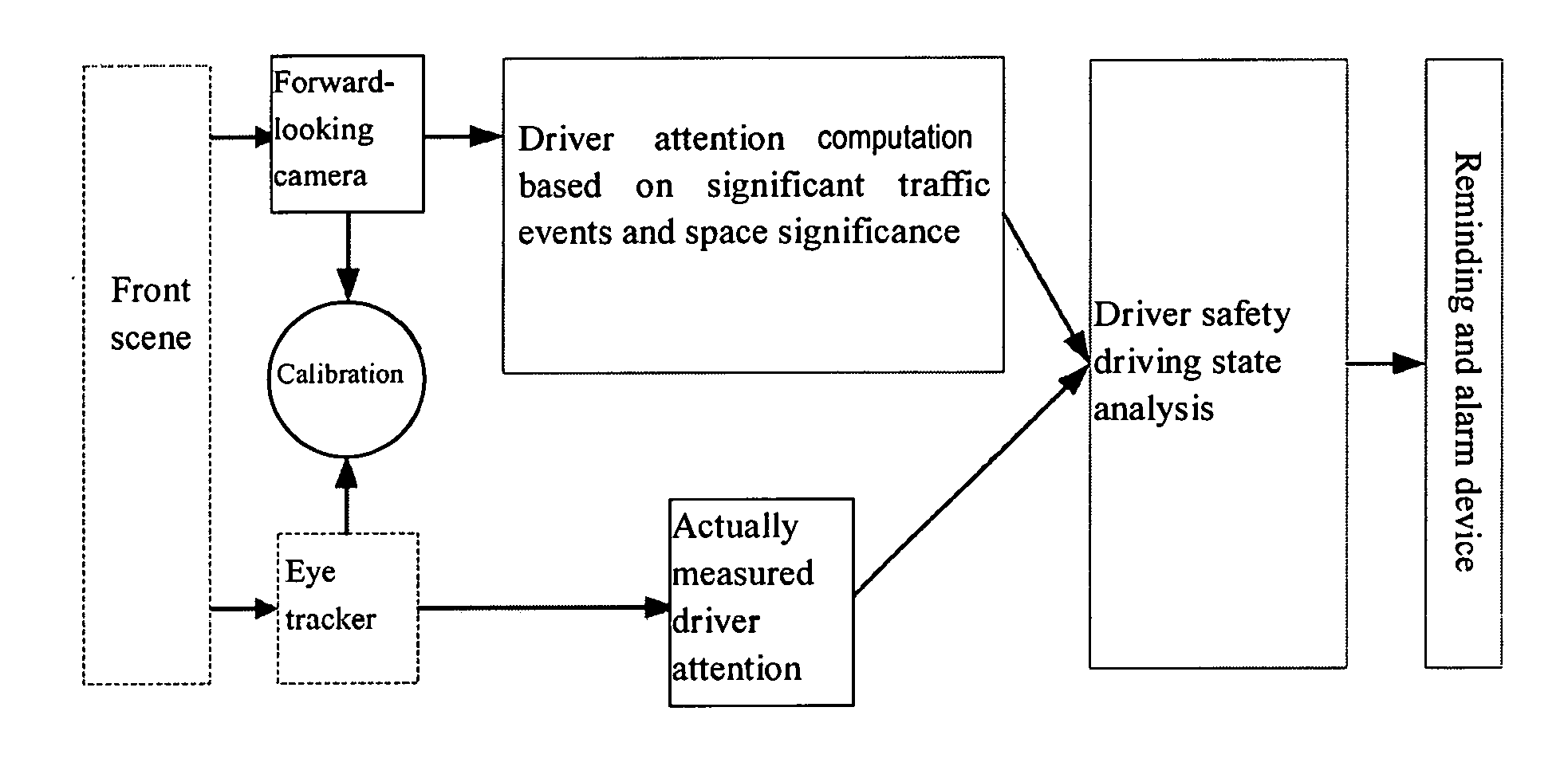

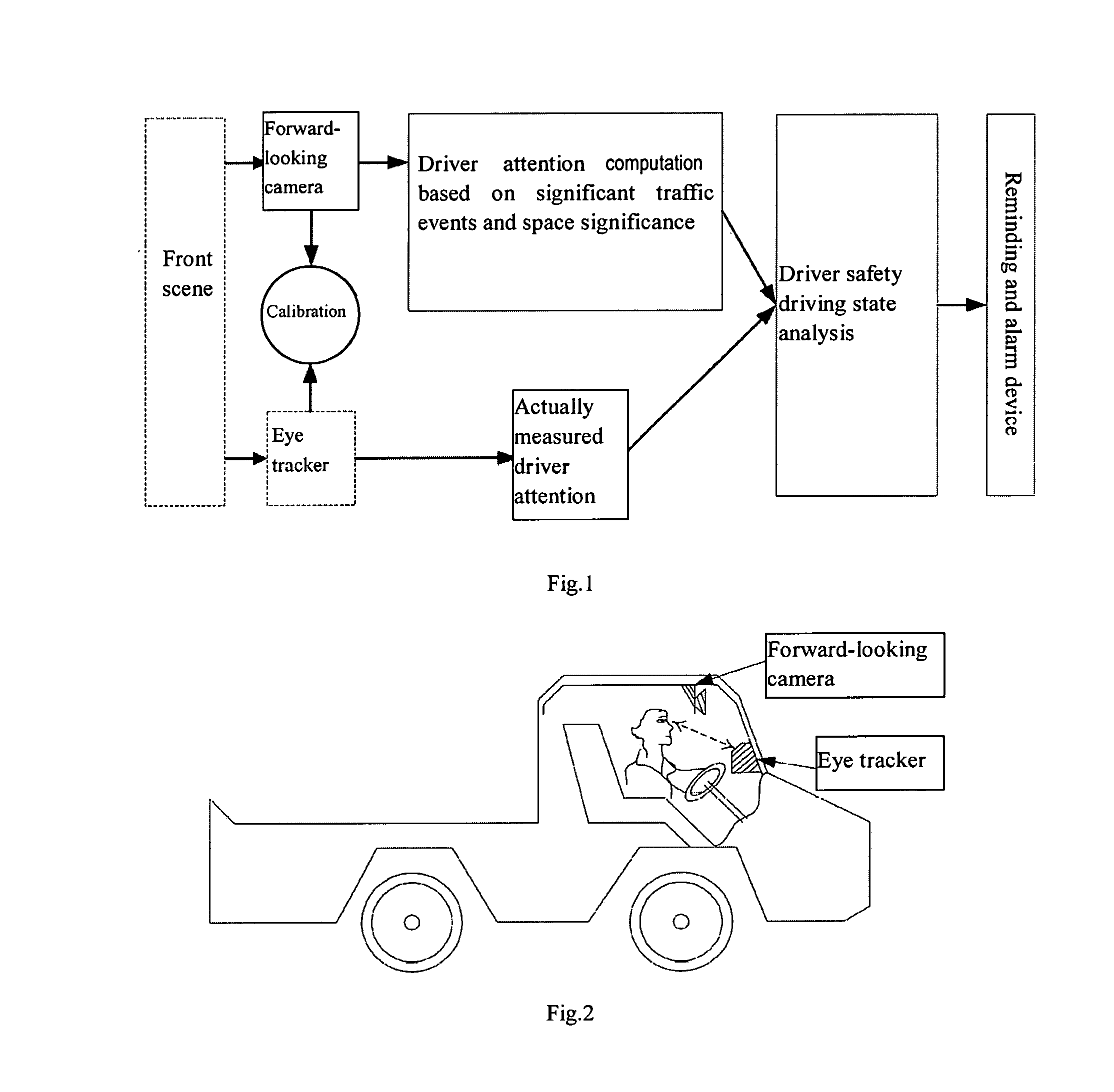

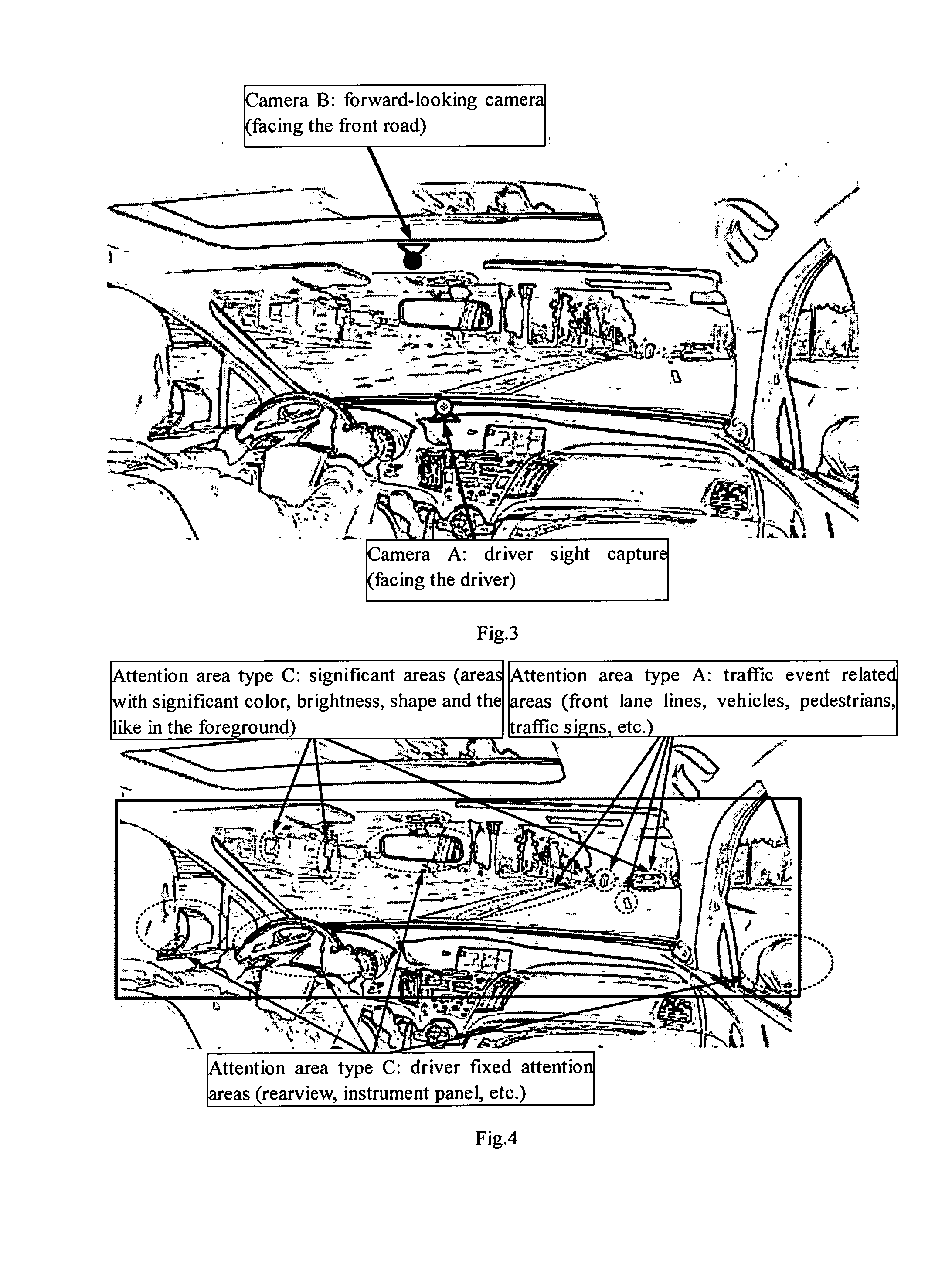

Active | US20170001648A1 | Simple principle | Easy to implement | Character and pattern recognition | External condition input parameters | Driver/operator | Traffic crash

Disclosed is a method for detecting the safe driving state of a driver. The method comprises the following steps: (a) detecting the current sight direction of the driver in real time and acquiring a scene image signal in the front view field of the driver while the vehicle is running; (b) processing the acquired current road scene image signal according to a visual attention calculation model to obtain the expected attention distribution of the driver under the current road scene; and (c) performing fusion analysis on the current sight direction detected in real time in step (a) and the expected attention distribution calculated in step (b), and judging whether the driver is currently in a normal driving state and whether the driver can respond properly and in time to a sudden road traffic accident. The device is used for implementing the method and has the advantages of a simple principle, easy implementation, direct reflection of the real driving state of the driver, and improved driving safety.

Owner:NAT UNIV OF DEFENSE TECH
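
Step (c) of the abstract above fuses the measured sight direction with the expected attention distribution. A minimal sketch of one way such a fusion could be scored follows. The grid representation, the function names `attention_agreement` and `is_attentive`, and the `min_ratio` threshold are assumptions for illustration; the abstract does not specify the fusion rule.

```python
def attention_agreement(expected, gaze_points):
    """Mean normalised expected-attention value at the gazed cells.

    expected is a grid (list of rows) of non-negative attention values
    from some visual attention model; gaze_points is a list of
    (row, col) cells the driver looked at. Both are hypothetical inputs.
    """
    total = sum(sum(row) for row in expected)
    if total == 0 or not gaze_points:
        return 0.0
    hits = sum(expected[r][c] for r, c in gaze_points)
    return hits / (total * len(gaze_points))


def is_attentive(expected, gaze_points, min_ratio=1.0):
    """Compare the agreement with a uniform baseline of 1 / number of cells.

    A ratio above 1 means the gaze lands on regions the model expects
    to attract attention more often than chance would.
    """
    cells = len(expected) * len(expected[0])
    return attention_agreement(expected, gaze_points) * cells >= min_ratio
```

For example, with `expected = [[0, 0, 0], [0, 8, 1], [0, 1, 0]]`, gaze samples concentrated on the centre cell pass the check, while samples on the top row do not.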

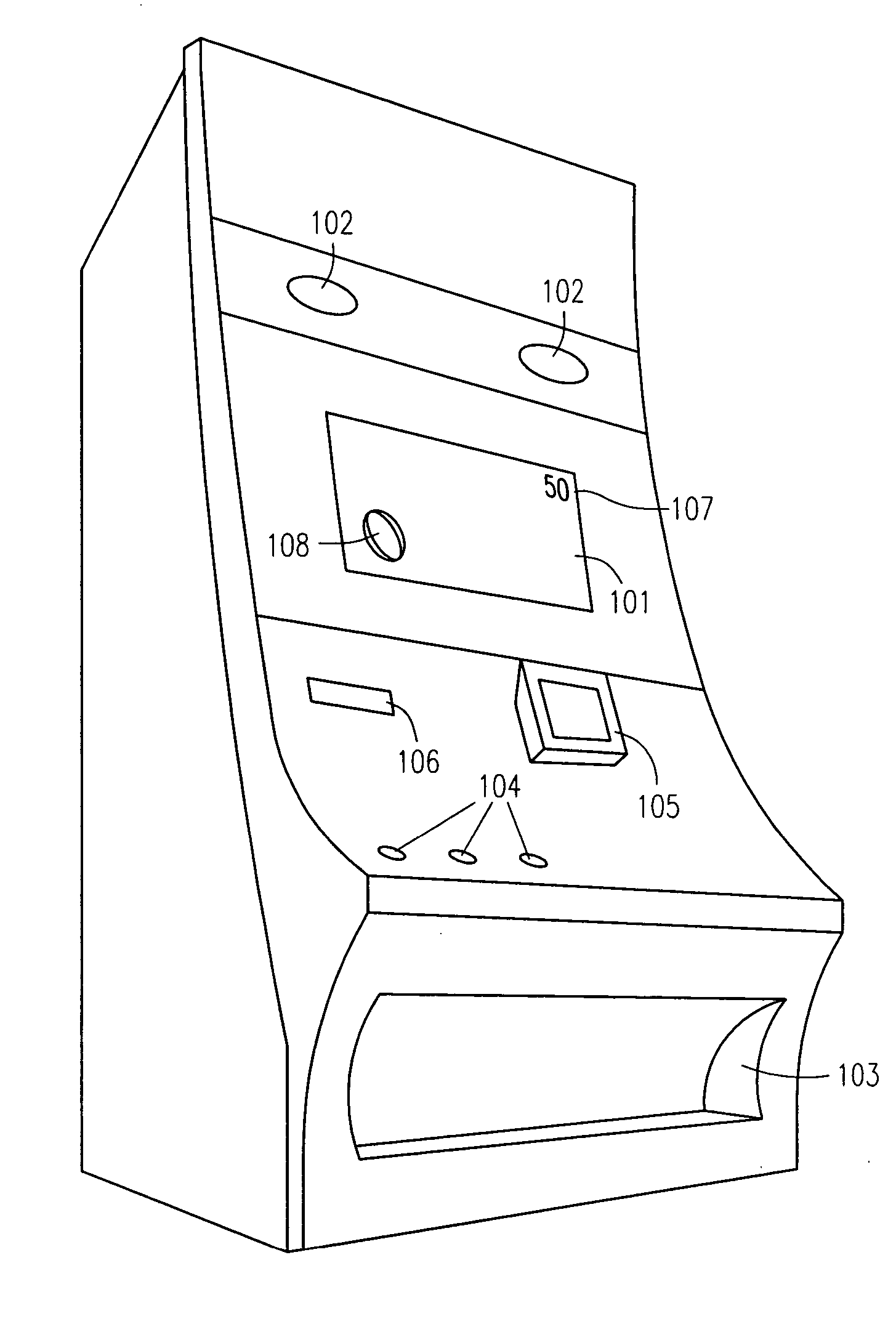

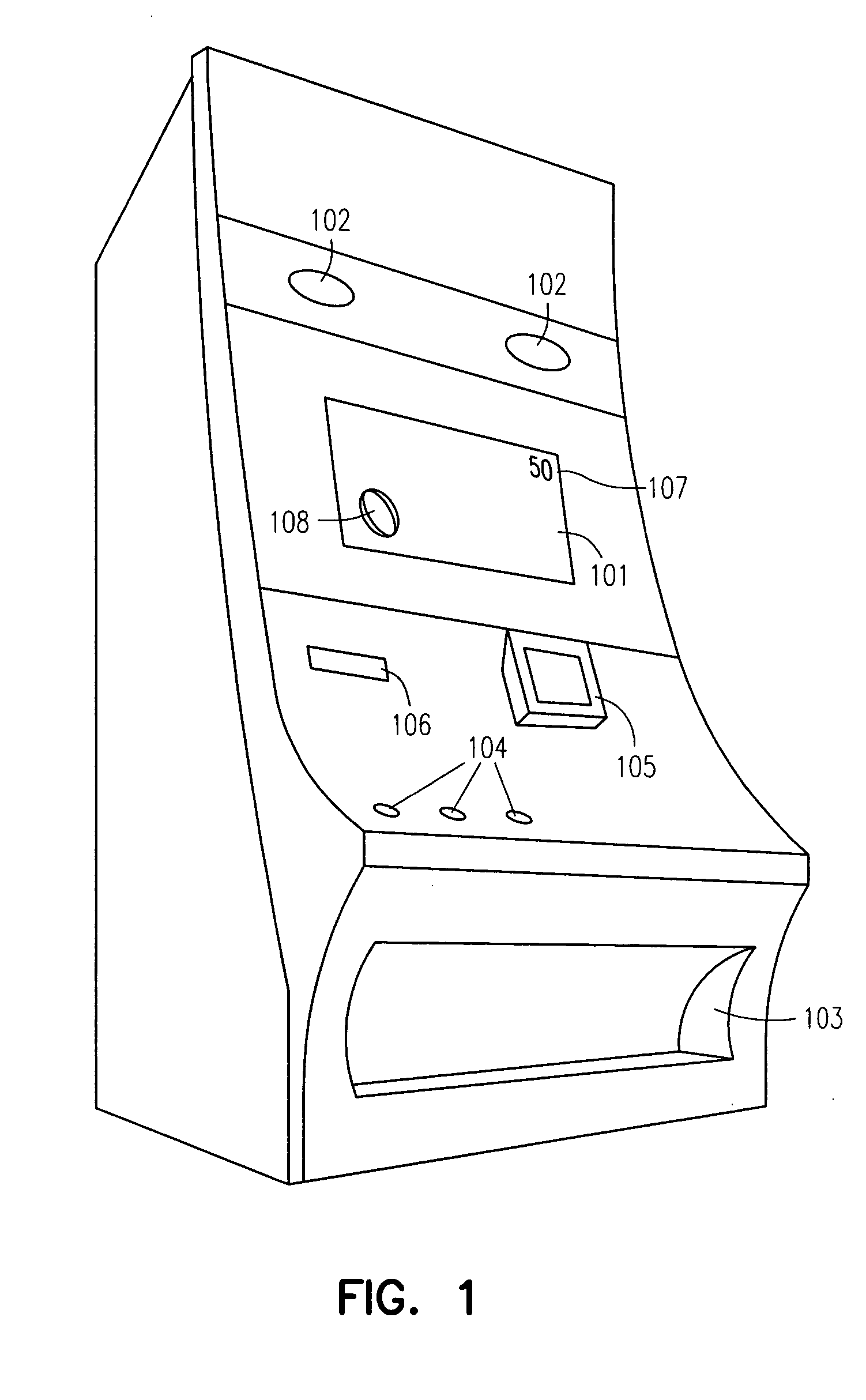

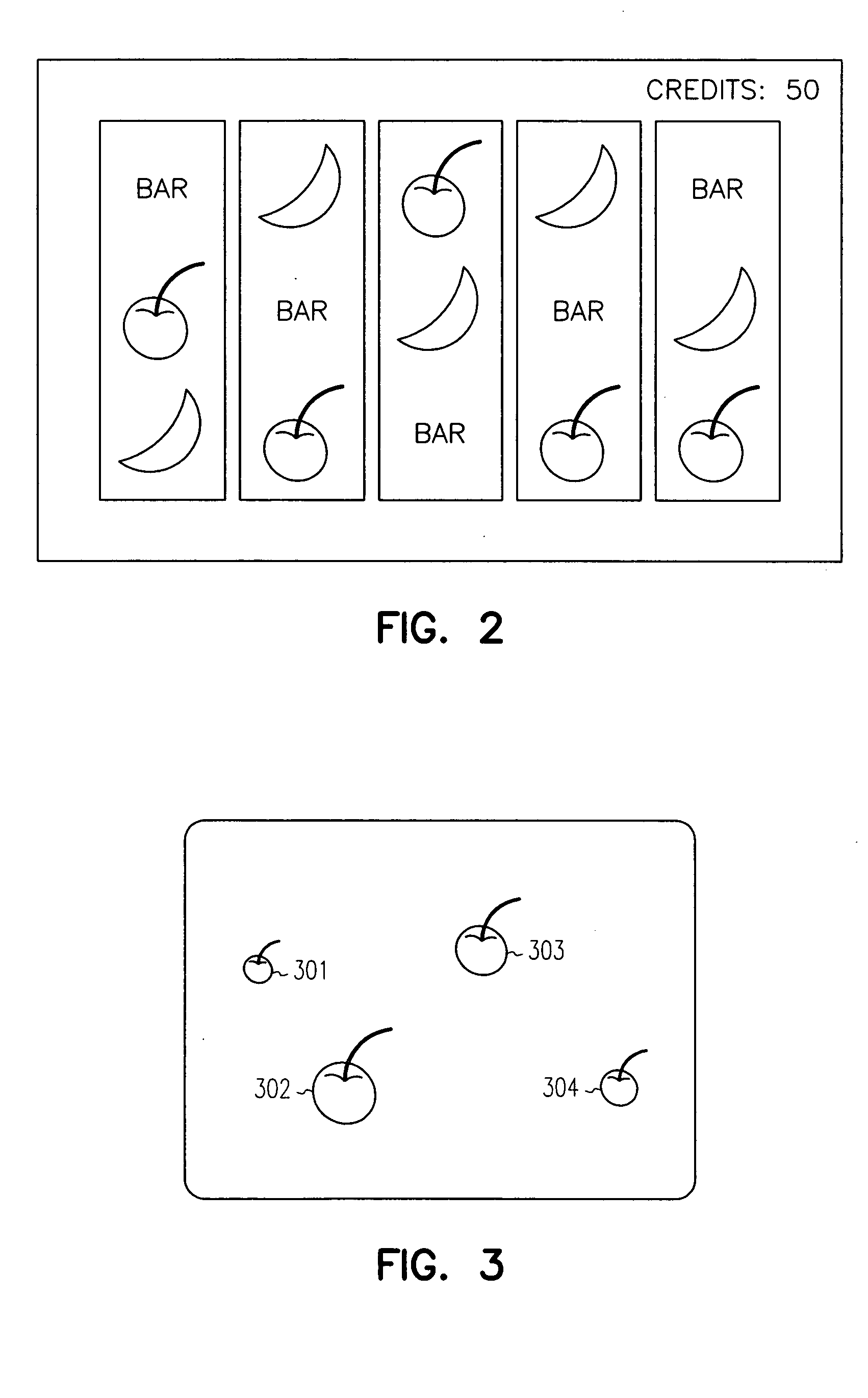

Gaming device with directional audio cues

Inactive | US20050164787A1 | Apparatus for meter-controlled dispensing | Video games | Game element | Monetary value

A computerized gaming system has an audio module operable to play audio cues to direct the visual attention of a player of the gaming system, the audio cues comprising representation of the physical location of a game element presented on a video screen by variation in at least one of pitch, instrument, rhythm, volume, echo, phase, and location-specific sounds. The gaming system further comprises a gaming module, which includes a processor and gaming code which is operable when executed on the processor to conduct a game of chance on which monetary value can be wagered.

Owner:BALLY GAMING INC

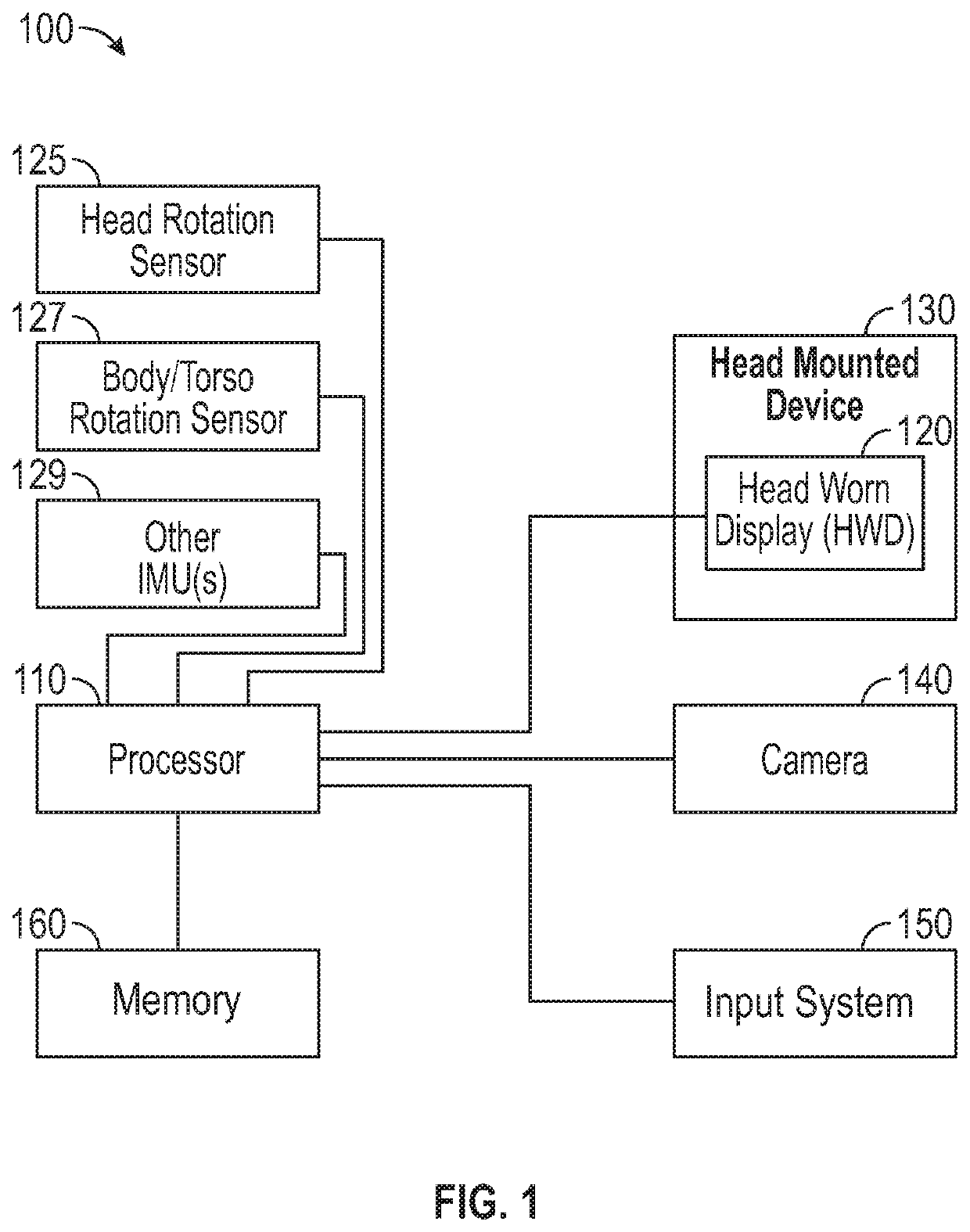

Method and system for user-related multi-screen solution for augmented reality for use in performing maintenance

Active | US20200035203A1 | High data accuracy | Improve detection accuracy | Input/output for user-computer interaction | Cathode-ray tube indicators | Sensing data | Mixed reality

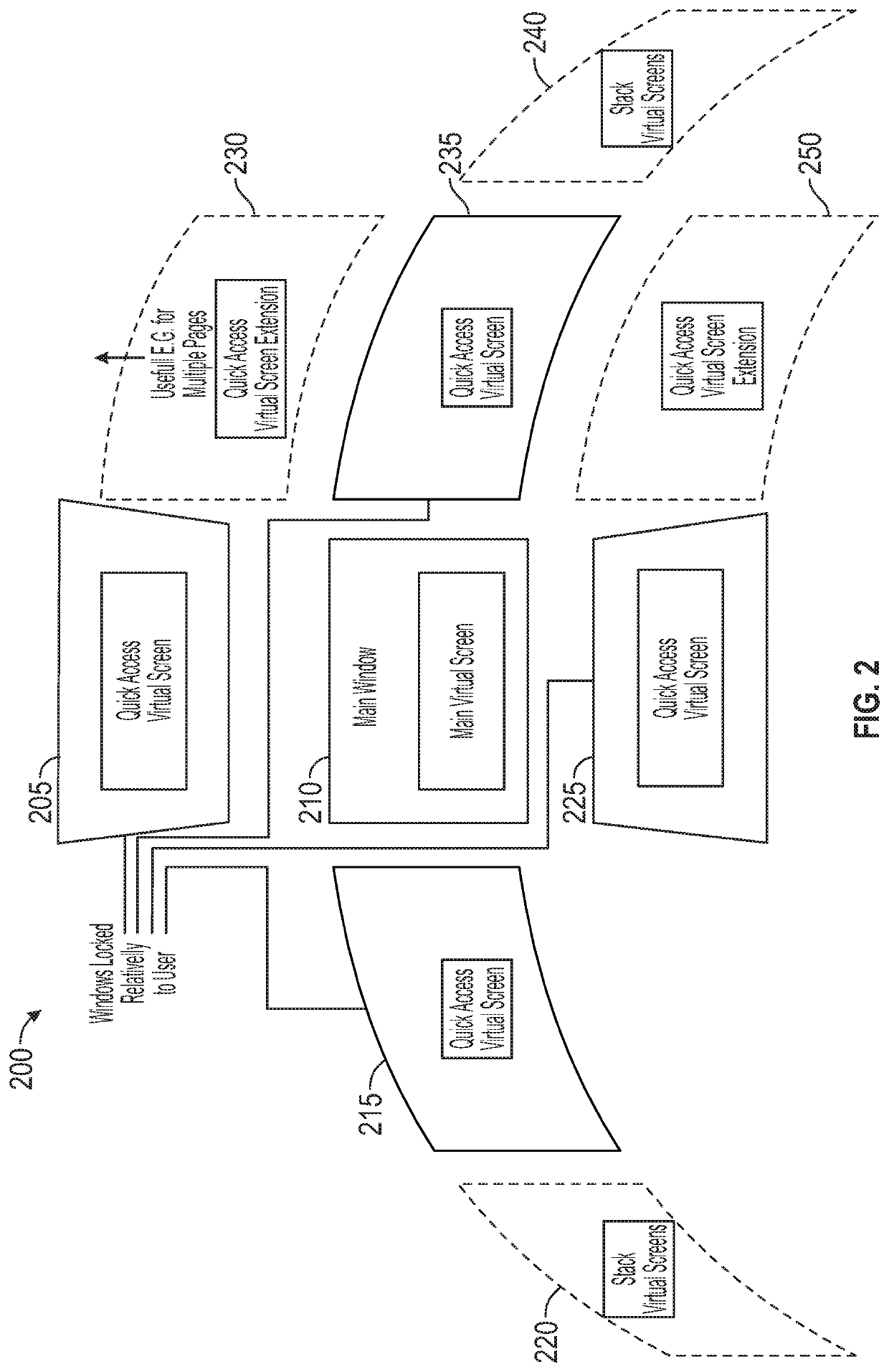

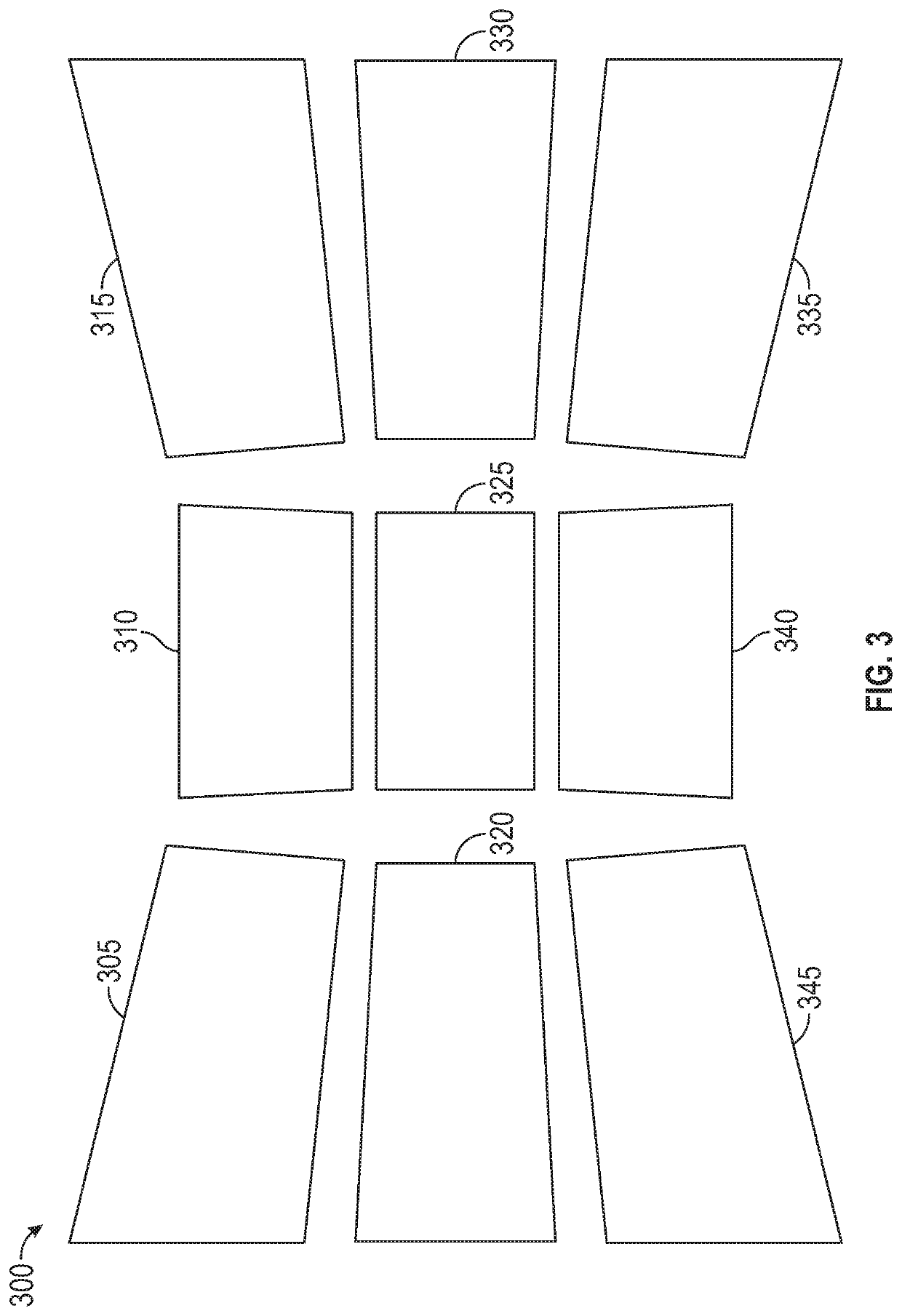

A method to augment, virtualize, or present mixed reality of content to display a larger amount of content on a head worn device (HWD), including: creating a set of virtual screens in the display of the HWD, deployed in a manner to both surround the viewer and positionally shift with a movement of the viewer to provide an immersive viewing experience, wherein the set of virtual screens includes at least a primary virtual screen and one or more secondary virtual screens; enabling the immersive viewing experience by generating dynamic virtual screen arrangements; connecting a first sensor attached to a head of the viewer and a second sensor attached to a torso of the viewer for generating sensed data of rotational differences of the positional shift of the viewer; and configuring the content on each of the virtual screens to determine a particular virtual screen having a visual attention of the viewer.

Owner:HONEYWELL INT INC

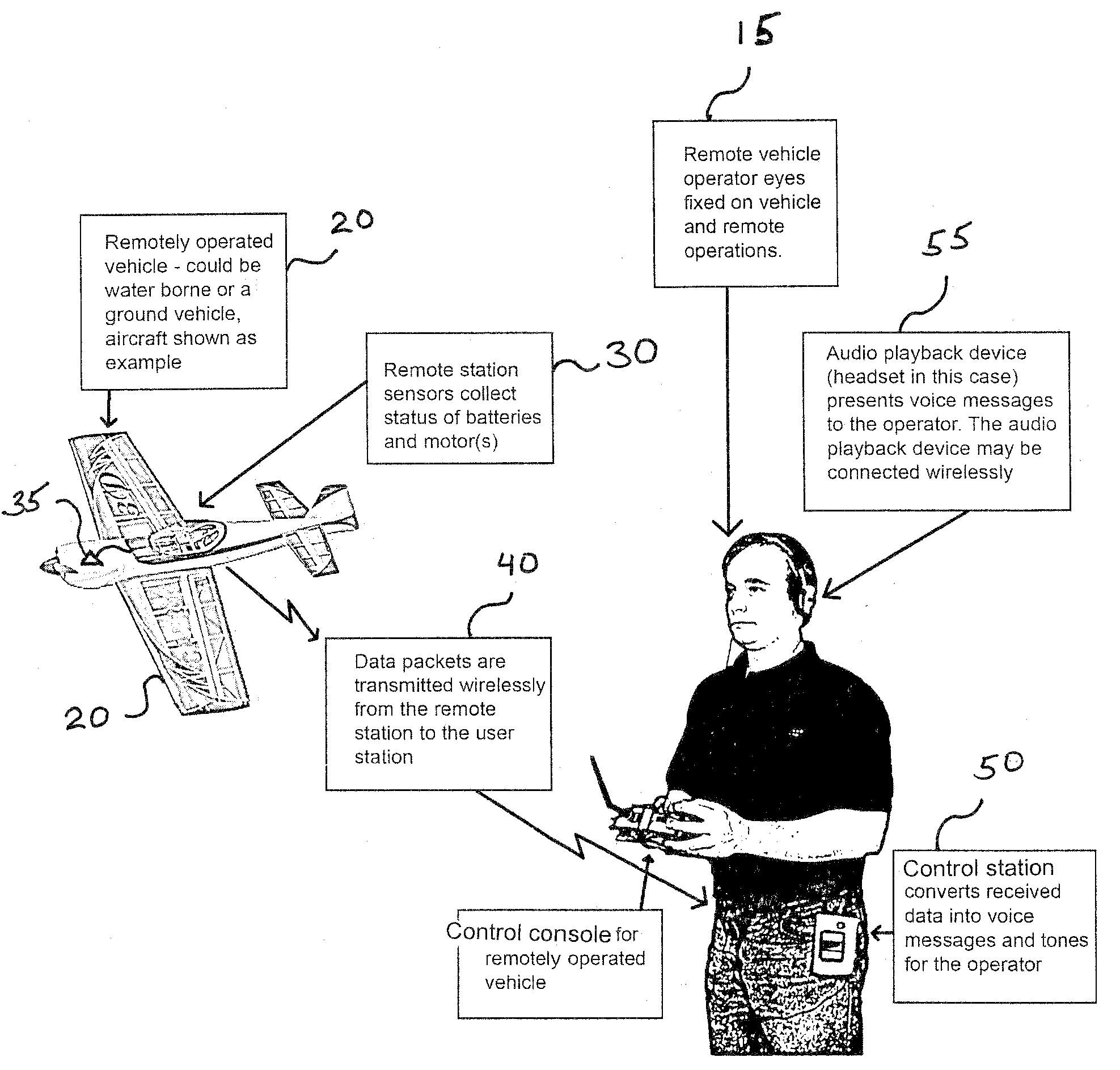

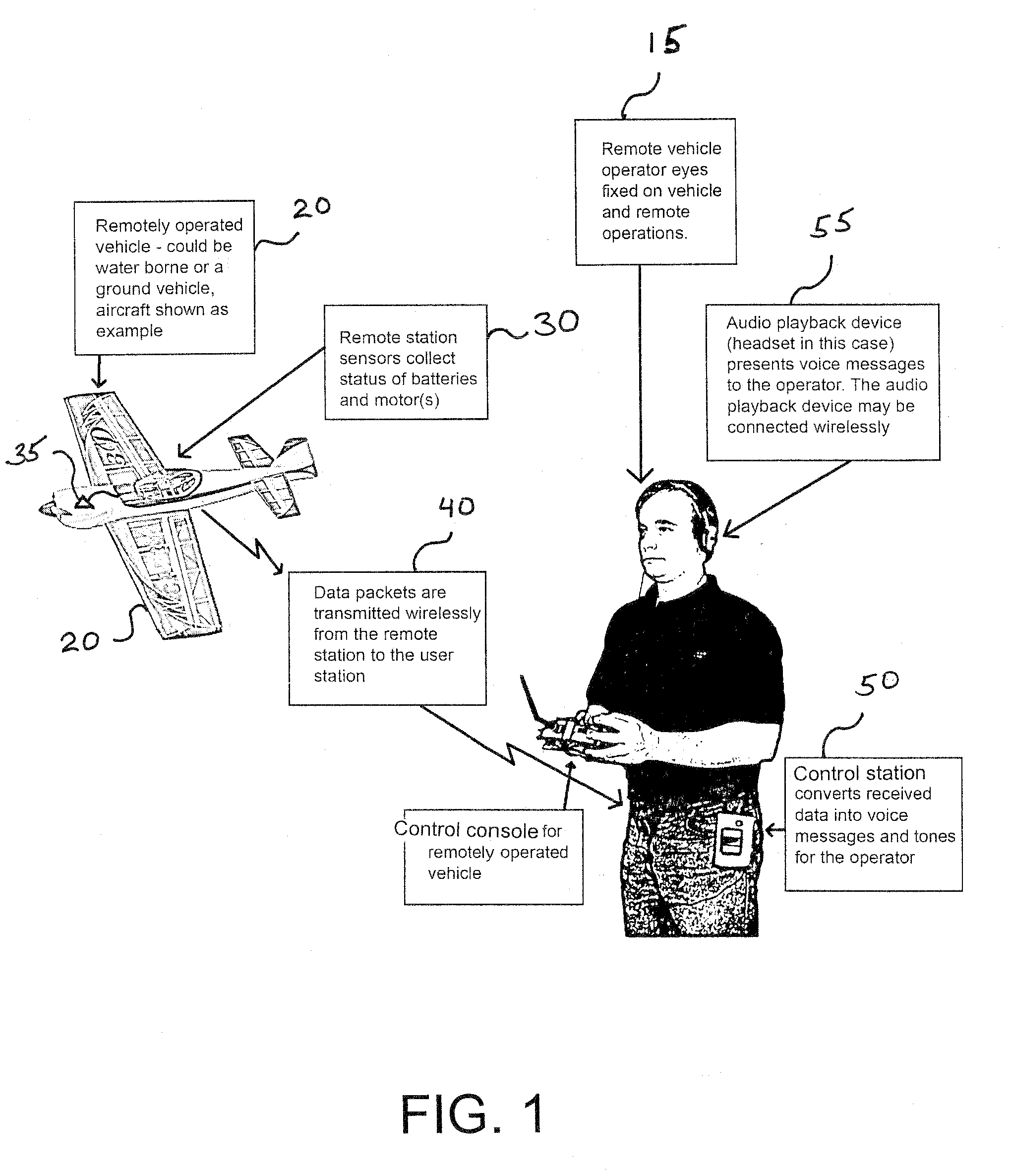

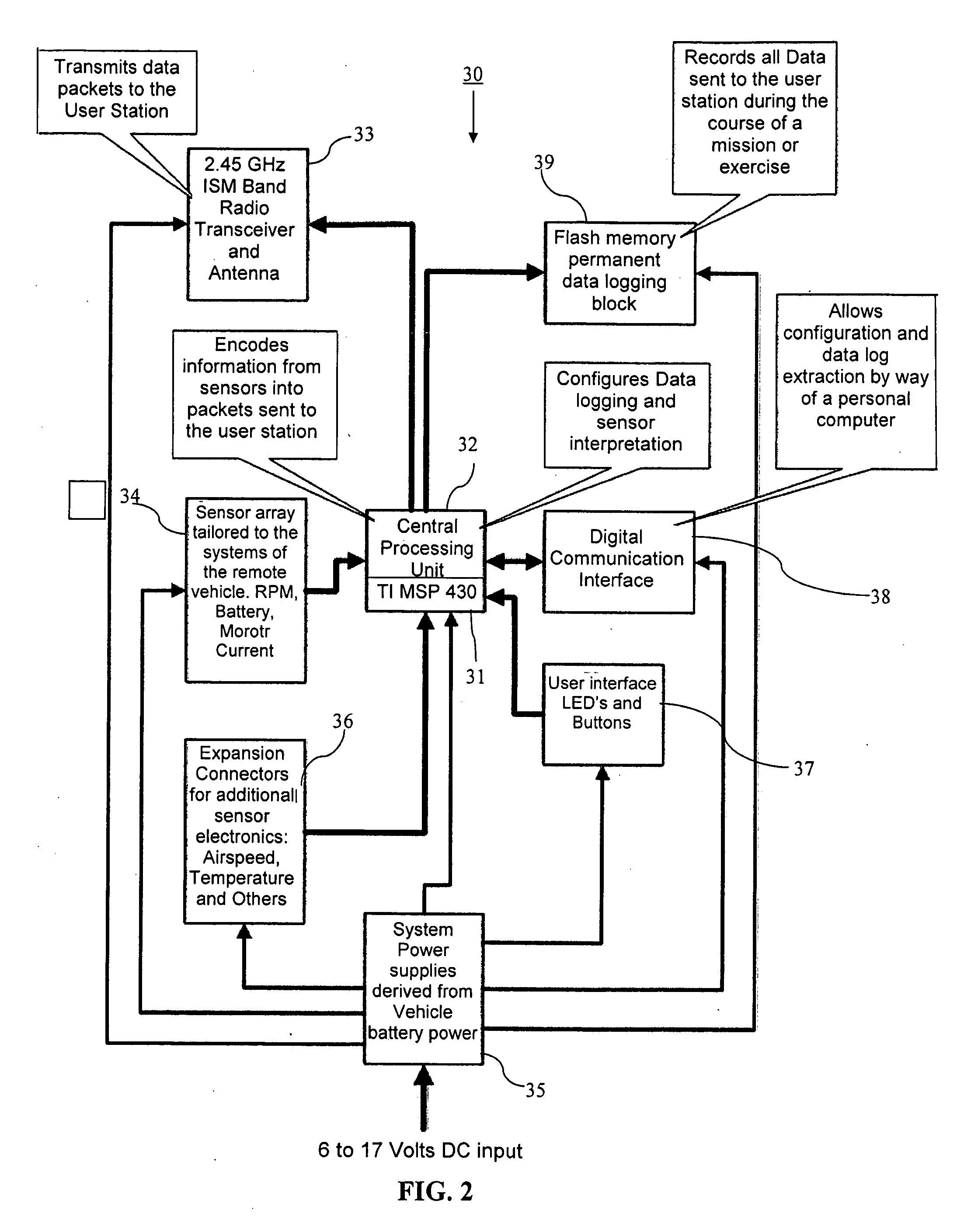

Method and apparatus for communication between a remote device and an operator

Inactive | US20090262002A1 | Understand clearly | Avoid damage | Electric signal transmission systems | Equal length code transmitter | Visual perception | Speech sound

Embodiments of the invention allow sensors positioned on or near a remotely controlled device to monitor the status and / or internal conditions of the device and transmit the status information through a data link to a receiver station, or control station, where the information can be provided to a user or operator via sensory stimulation signals. Such sensory stimulation signals can include auditory stimulation signals that can convey the information to the user while allowing the user to maintain visual attention on the remote device. A remote station can obtain information from sensors operably connected to the remote device. The sensor information can be transmitted as digital information from the remote station to a control station, or receiver station, where it is converted to sensory stimulation signals, such as sounds. Such sounds can include tones, alarms, speech, other audible sounds, or combinations thereof. An operator controlling the remote device can, upon receiving the sensory stimulation signals, provide input signals for controlling the remote device.

Owner:ALEXANDER JOHN F +4

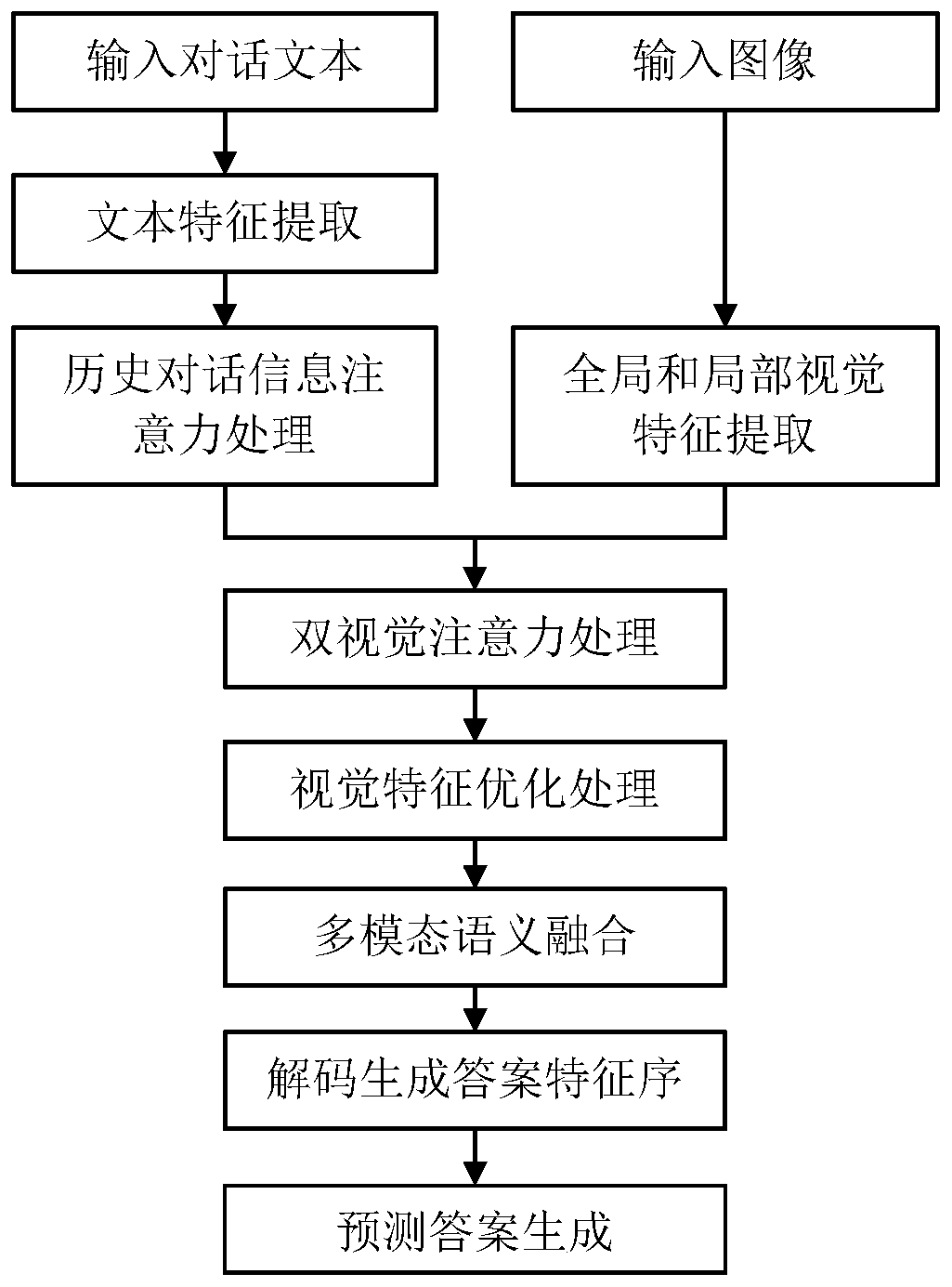

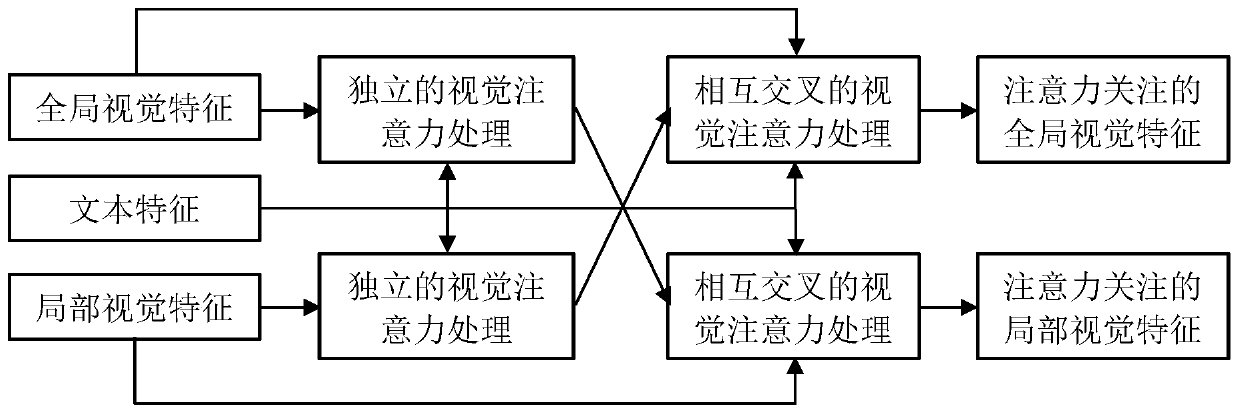

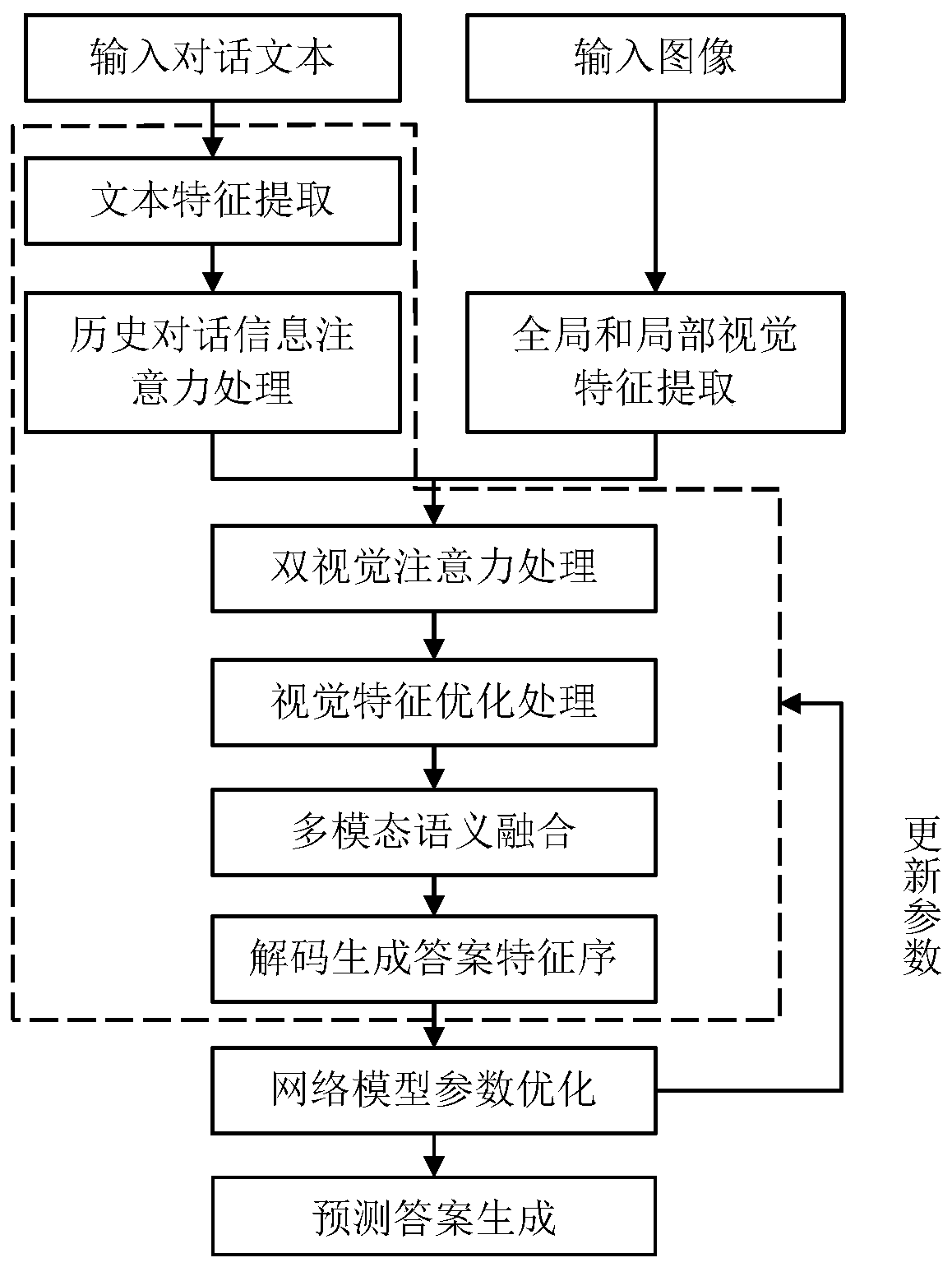

Visual dialogue generation method based on double visual attention network

Inactive | CN110647612A | Visual Semantic Information Specific | Complete visual features | Text database indexing | Special data processing applications | Feature extraction | Word list

The invention discloses a visual dialogue generation method based on a double visual attention network. The method comprises the following steps: (1) preprocessing the text input of a visual dialogue and constructing a word list; (2) extracting features of the dialogue image and of the dialogue text; (3) performing attention processing on the historical dialogue information based on the current question information; (4) performing independent attention processing on each of the double visual features; (5) performing cross attention processing between the double visual features; (6) optimizing the visual features; (7) performing multi-modal semantic fusion and decoding to generate an answer feature sequence; (8) optimizing the parameters of the visual dialogue generation network model based on the double visual attention network; (9) generating the predicted answer. According to the invention, more complete and reasonable visual semantic information and finer-grained text semantic information can be provided for the intelligent agent, so that the reasonability and accuracy of the answers the agent predicts and generates are improved.

Owner:HEFEI UNIV OF TECH

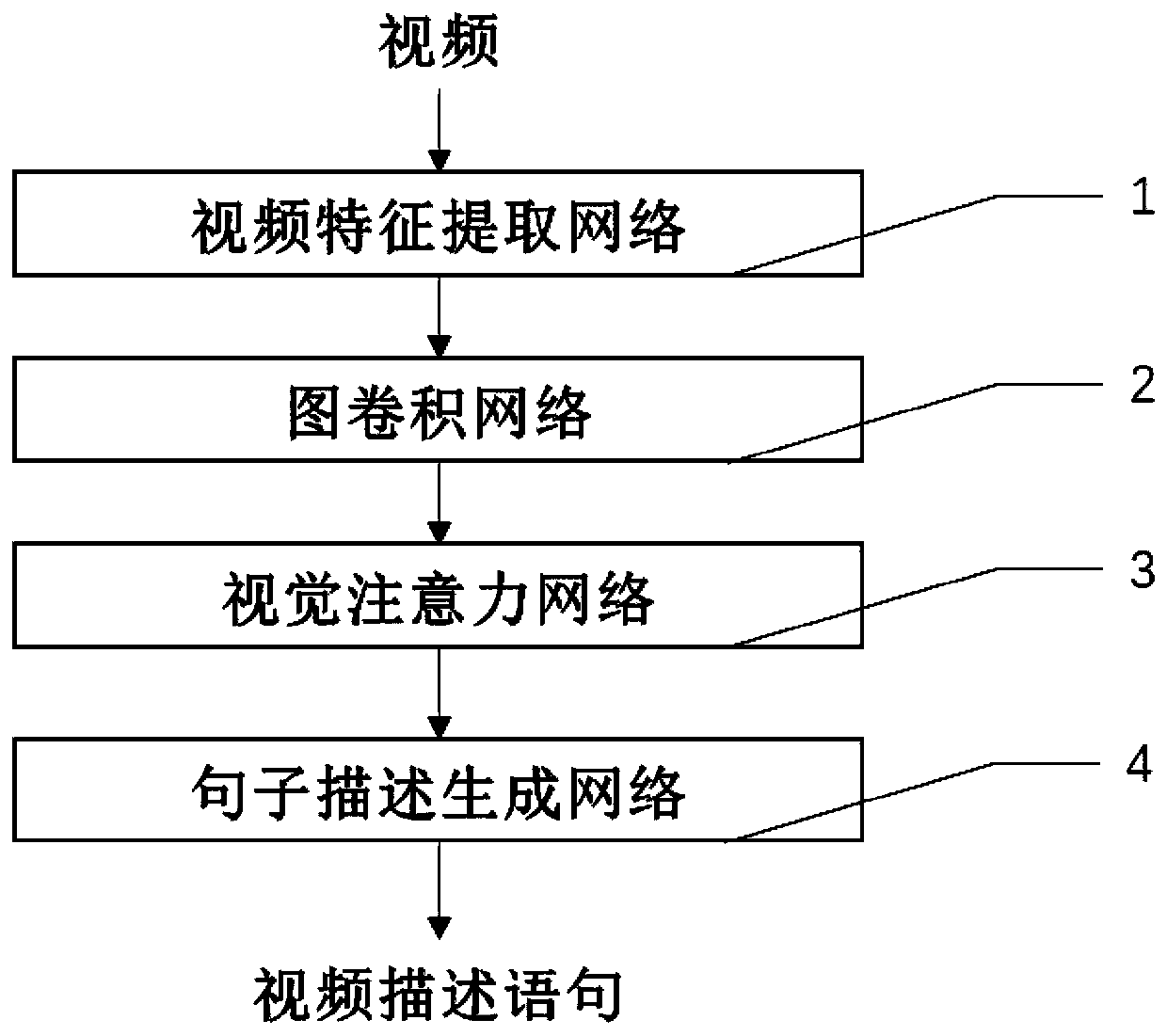

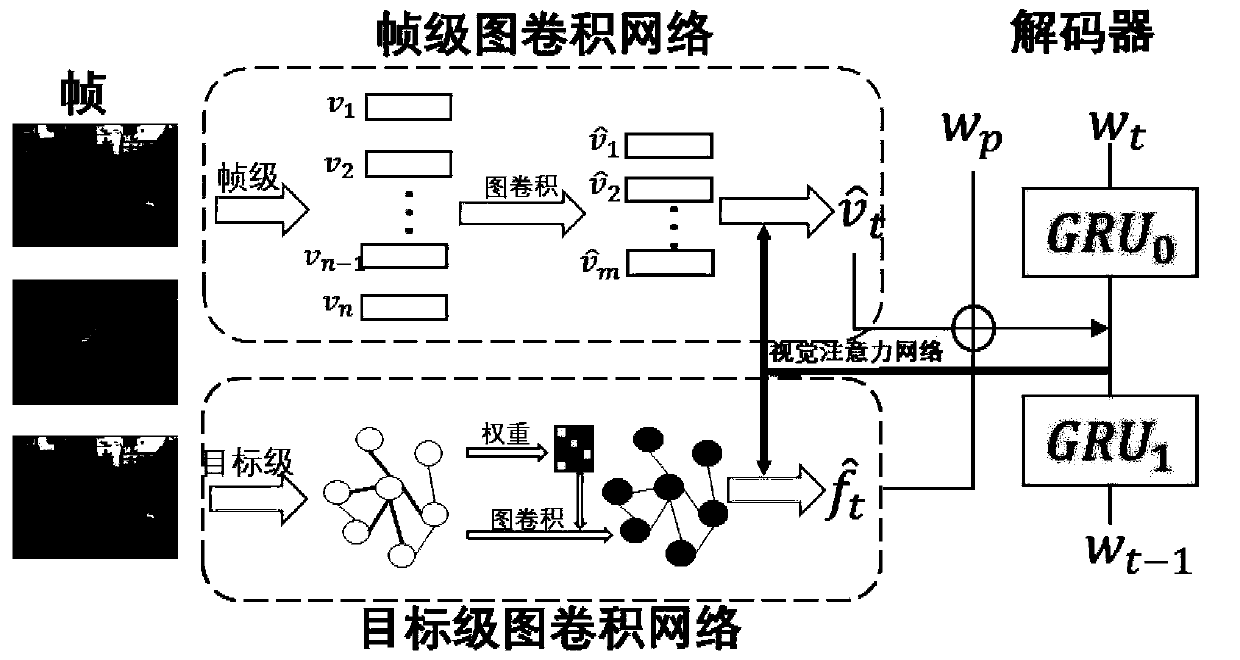

Video description generation system based on graph convolution network

Pending | CN111488807A | Generate accurately | Improve experience | Character and pattern recognition | Neural architectures | Feature extraction | Video reconstruction

The invention belongs to the technical field of cross-media generation, and particularly relates to a video description generation system based on a graph convolution network. The video description generation system comprises a video feature extraction network, a graph convolution network, a visual attention network and a sentence description generation network. The video feature extraction network performs sampling processing on videos to obtain video features and outputs the video features to the graph convolution network. The graph convolution network reconstructs the video features according to semantic relations and inputs them into the recurrent neural network that generates sentence descriptions; the sentence description generation network generates sentences according to the reconstructed video features. The features of a frame-level sequence and a target-level sequence in the videos are reconstructed by graph convolution, and the time sequence information and the semantic information in the videos are fully utilized when description statements are generated, so that the generation is more accurate. The invention is of great significance to video analysis and multi-modal information research, can improve a model's ability to understand video visual information, and has wide application value.

Owner:FUDAN UNIV

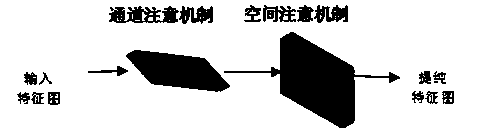

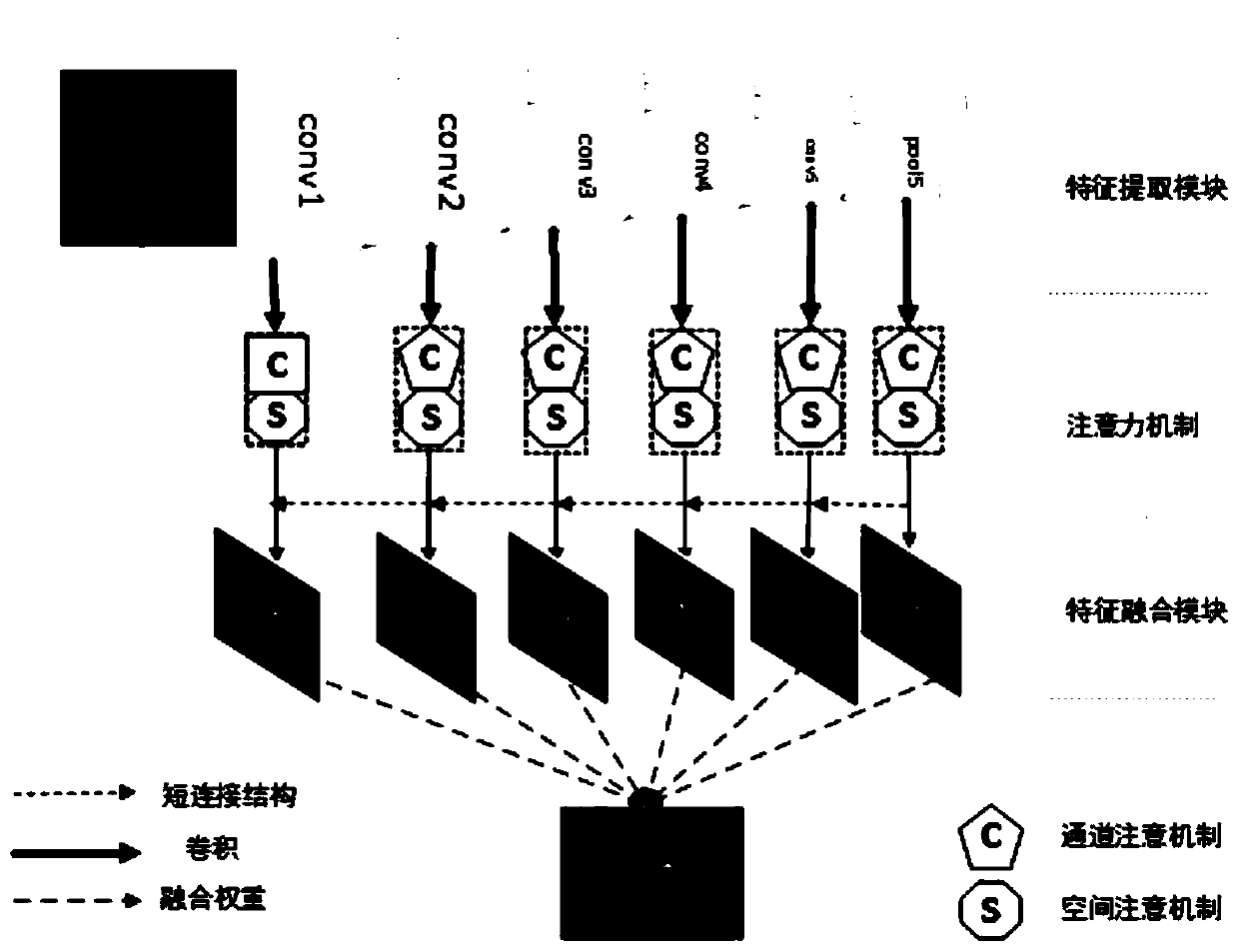

Full convolutional network fabric defect detection method based on attention mechanism

Inactive | CN110866907A | Enhanced Representational Capabilities | Avoid interference | Image enhancement | Image analysis | Pattern recognition | Saliency map

The invention provides a full convolutional network fabric defect detection method based on an attention mechanism. The method comprises the following steps: first, extracting multi-stage, multi-scale intermediate depth feature maps of a fabric image through an improved VGG16 network, and processing them with the attention mechanism to obtain multi-stage, multi-scale depth feature maps; then, up-sampling the multi-level, multi-scale depth feature maps by bilinear interpolation to obtain multi-level feature maps of the same size, and fusing them with a short connection structure to obtain multi-level saliency maps; and finally, fusing the multi-level saliency maps by weighted fusion to obtain a final saliency map of the defect image. The method comprehensively considers the complex defect characteristics and varied backgrounds of fabric images, improves the representation capability of the fabric image by simulating the attention mechanism of human visual cognition, and eliminates the influence of noise in the image, so that the detection result has higher adaptivity and detection precision.

Owner:ZHONGYUAN ENGINEERING COLLEGE
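
The last two steps of the abstract above (bilinear up-sampling to a common size, then weighted fusion) can be sketched in plain Python. This is only an illustration under assumptions: the corner-aligned sampling convention, the function names `bilinear_resize` and `fuse_saliency`, and the fixed fusion weights are hypothetical, and the short connection structure and the network itself are not modelled.

```python
def bilinear_resize(m, out_h, out_w):
    """Resize a 2-D map (list of rows) with corner-aligned bilinear interpolation."""
    in_h, in_w = len(m), len(m[0])
    out = []
    for i in range(out_h):
        # Fractional source row for this output row.
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            top = m[y0][x0] * (1 - fx) + m[y0][x1] * fx
            bottom = m[y1][x0] * (1 - fx) + m[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


def fuse_saliency(maps, weights, out_h, out_w):
    """Bring every map to out_h x out_w, then take a normalised weighted sum."""
    resized = [bilinear_resize(m, out_h, out_w) for m in maps]
    wsum = sum(weights)
    return [[sum(w * r[i][j] for w, r in zip(weights, resized)) / wsum
             for j in range(out_w)]
            for i in range(out_h)]
```

In a trained network the fusion weights would normally be learned; here they are passed in by hand to keep the sketch self-contained.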

Key frame extraction method based on visual attention model and system

The invention discloses a key frame extraction method and system based on a visual attention model. In the spatial domain, the extraction method uses binomial coefficients to filter the global contrast for salience detection, and uses an adaptive threshold to extract the target region. The algorithm preserves the boundary of the salient target region well, and the salience within the region is uniform. Then, in the time domain, the method defines motion salience: the motion of the target is estimated via a homography matrix, key points are used in place of the target for salience detection, the salience data from the spatial domain is merged in, and a boundary extension method based on an energy function is proposed to acquire a bounding box that serves as the salient target region of the time domain. Finally, the method reduces the richness of the video through the salient target region, and an online clustering lens adaptive method is adopted for key frame extraction.

Owner:SUN YAT SEN UNIV +1

Image processing apparatus and method

Active | US20130106844A1 | Reduce visual fatigue | Valid choice | Image enhancement | Image analysis | Parallax | Imaging processing

An image processing apparatus including a region of interest (ROI) configuration unit may generate a visual attention map according to visual characteristics of a human in relation to an input three dimensional (3D) image. A disparity adjustment unit may adjust disparity information, included in the input 3D image, using the visual attention map. Using the adjusted disparity information, a 3D image may be generated and displayed which reduces the level of visual fatigue a user may experience in viewing the 3D image.

Owner:SAMSUNG ELECTRONICS CO LTD

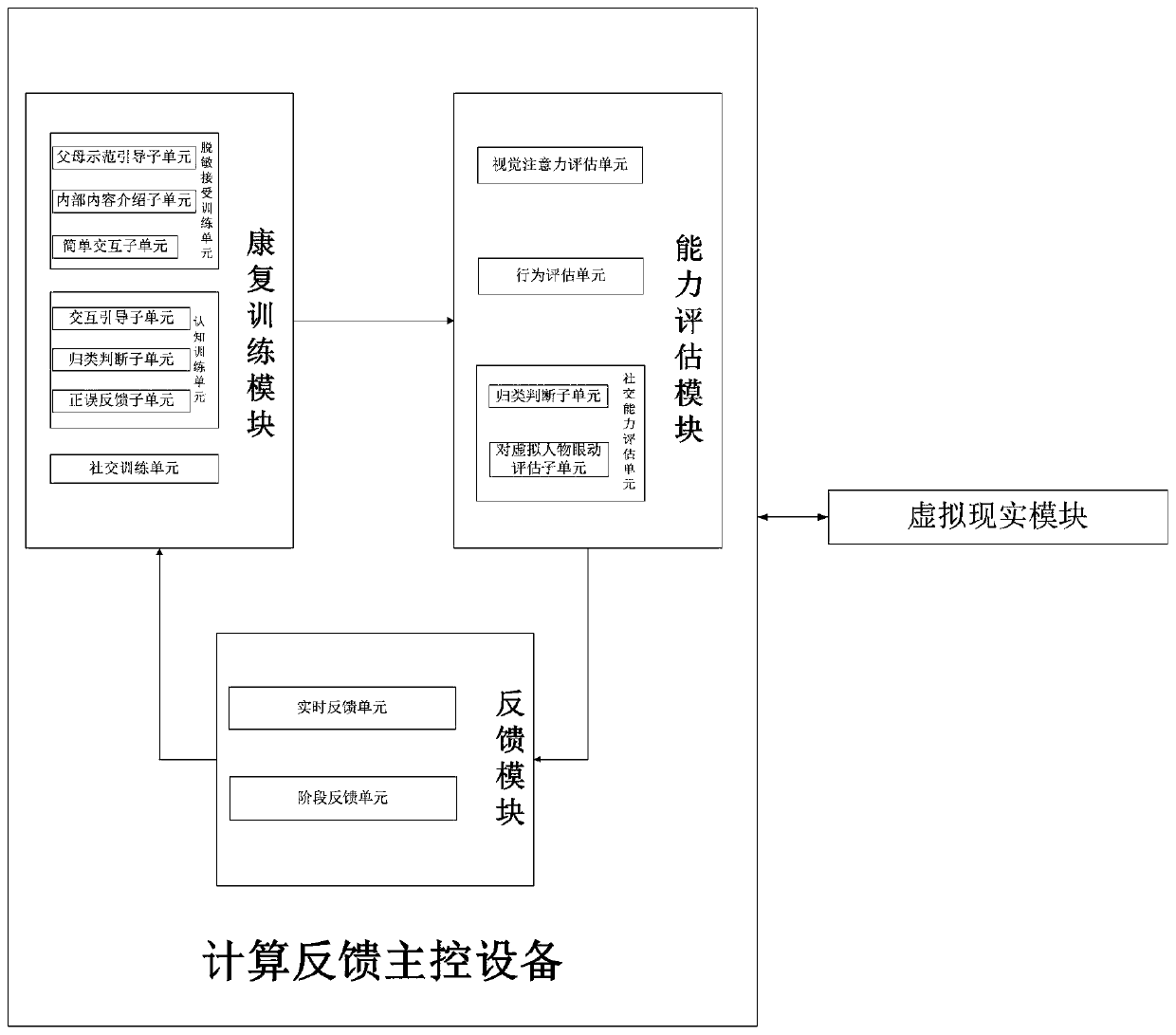

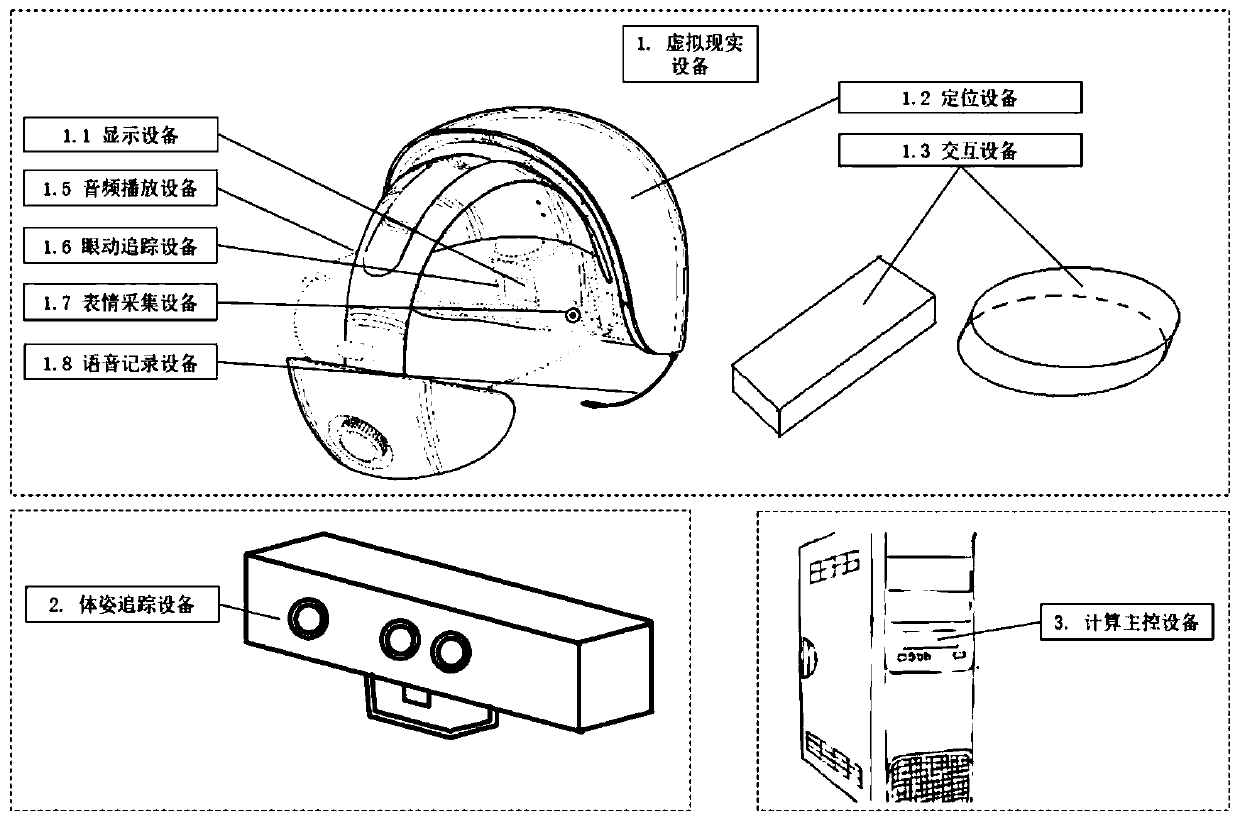

Alzheimer's disease rehabilitation training and ability evaluation system based on virtual reality

Pending | CN111063416A | Physical therapies and activities | Medical automated diagnosis | Evaluation result | Physical medicine and rehabilitation

The invention provides an Alzheimer's disease rehabilitation training and ability evaluation system based on virtual reality. The system comprises a rehabilitation training module, an ability evaluation module and a feedback module, wherein the rehabilitation training module is used for conducting rehabilitation training on an Alzheimer's disease patient in the aspects of cognition and social contact; the ability evaluation module is used for collecting the visual attention information, the behavior mode information and the social communication ability information generated by the rehabilitation training module, carrying out comprehensive evaluation according to the information and generating a comprehensive evaluation result; and the feedback module is used for feeding back the evaluation result to the rehabilitation training module and changing the content and process of rehabilitation training. According to the invention, the behavior mode of the testee is amplified by using virtual reality technology, the data is analyzed and processed with artificial intelligence technology, a comprehensive evaluation result can be obtained by comparing the data with a standard control group and an Alzheimer's disease control group, and the content and process of rehabilitation training can be adjusted by combining real-time feedback and stage feedback to adapt to the current state of the Alzheimer's disease patient.

Owner:JIAXING SEC VIEW INFORMATION TECH CO LTD

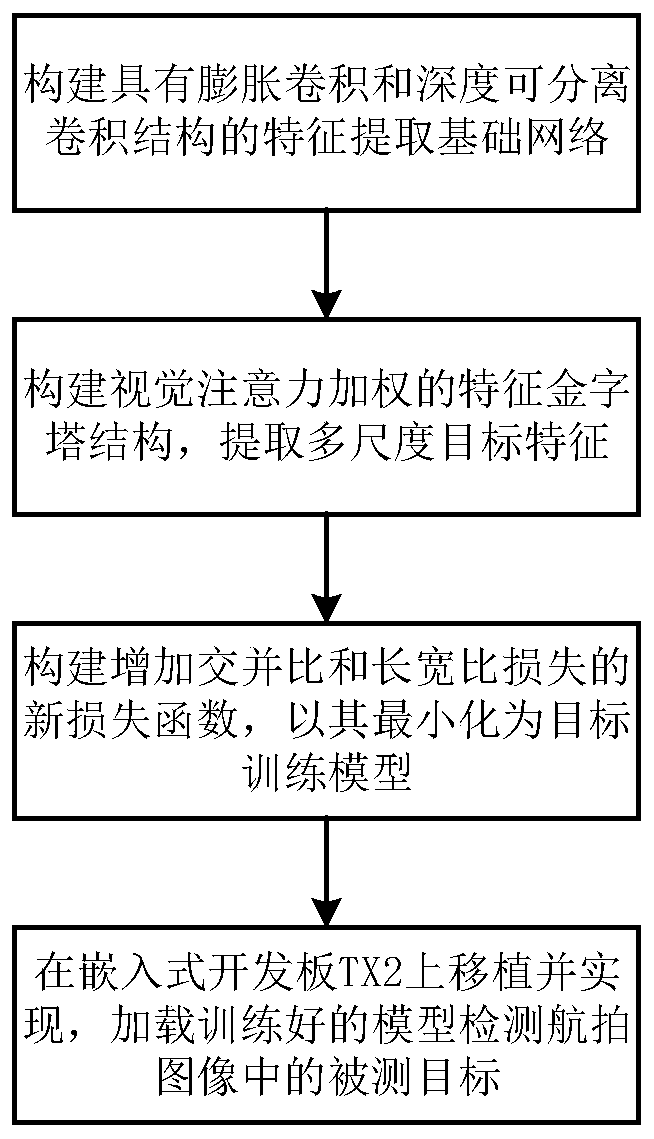

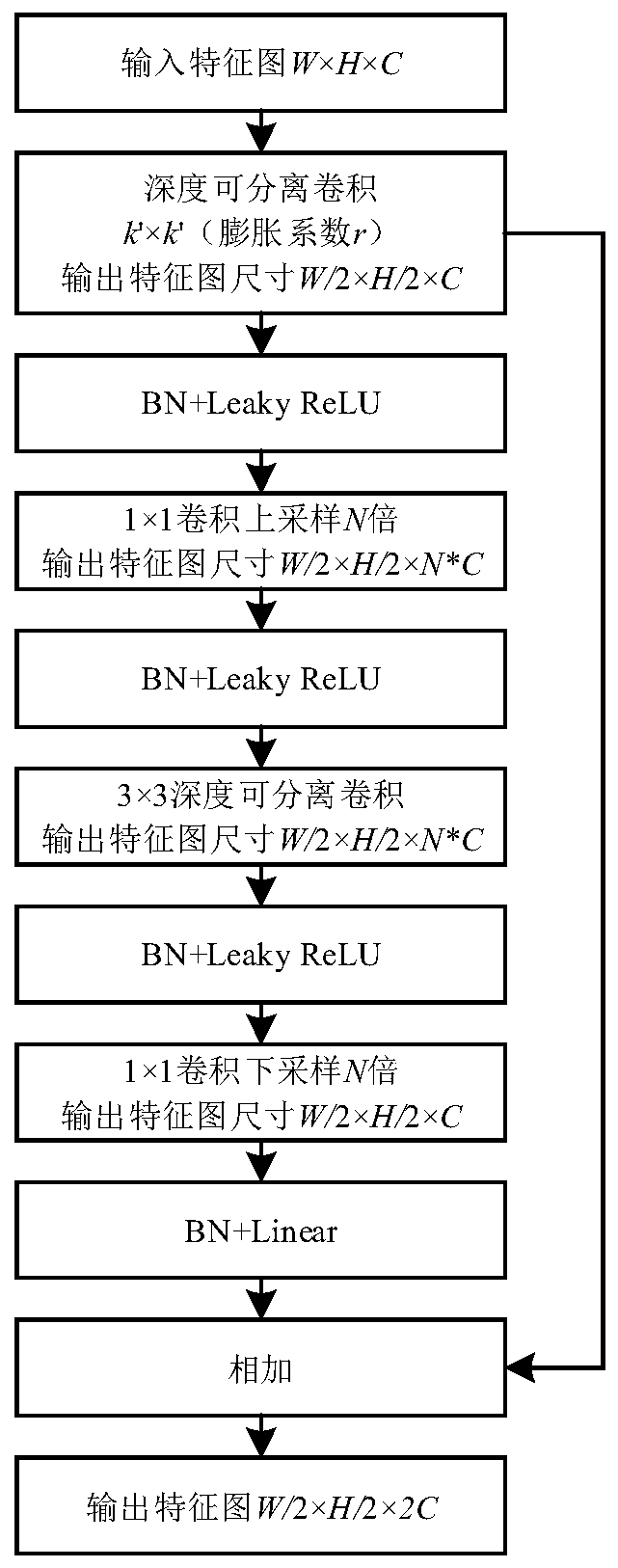

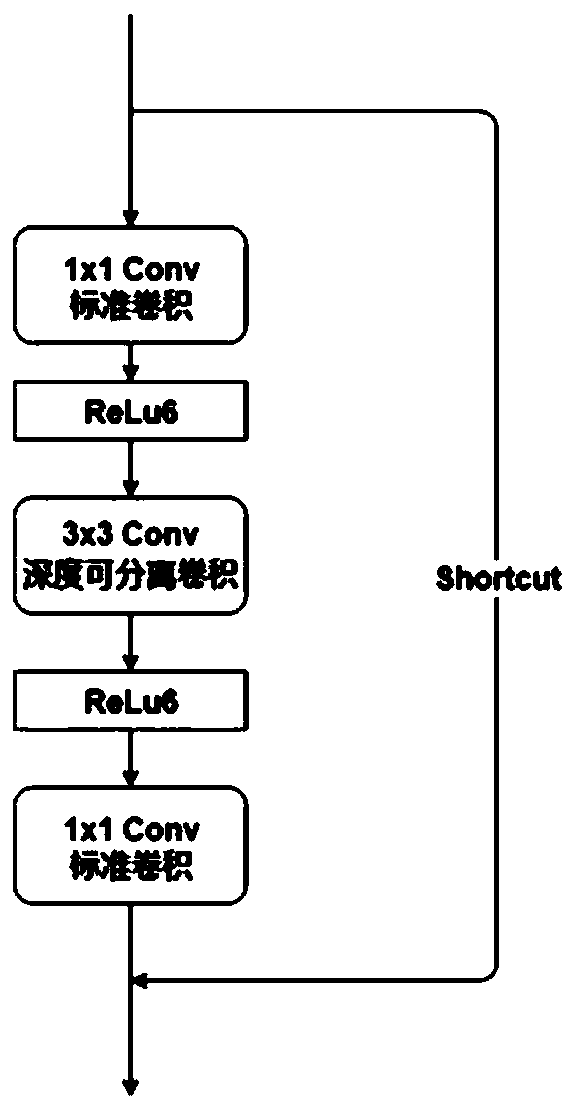

Airborne photoelectric video target intelligent detection and identification method

Active | CN111507271A | High precision | Improve positioning accuracy | Scene recognition | Neural architectures | Computation complexity | Computer graphics (images)

The invention discloses an airborne photoelectric video target intelligent detection and identification method. On the basis of a YOLOv3 model, rectangular convolution is adopted to extract strip-shaped target features such as a bridge, expansion convolution is adopted to expand a receptive field and reserve spatial structure information of a multi-scale target, a visual attention mechanism is introduced into a sampling branch on a feature pyramid to endow the model with different weights of target features of different regions and different channels, and a convolution mode of a residual module is improved into depth separable convolution to reduce calculation complexity. The method has the following advantages: high aerial photography target detection precision is kept, and meanwhile, high aerial photography target detection and recognition speed can be achieved on an airborne embedded system.

Owner:BEIJING INSTITUTE OF TECHNOLOGY +2

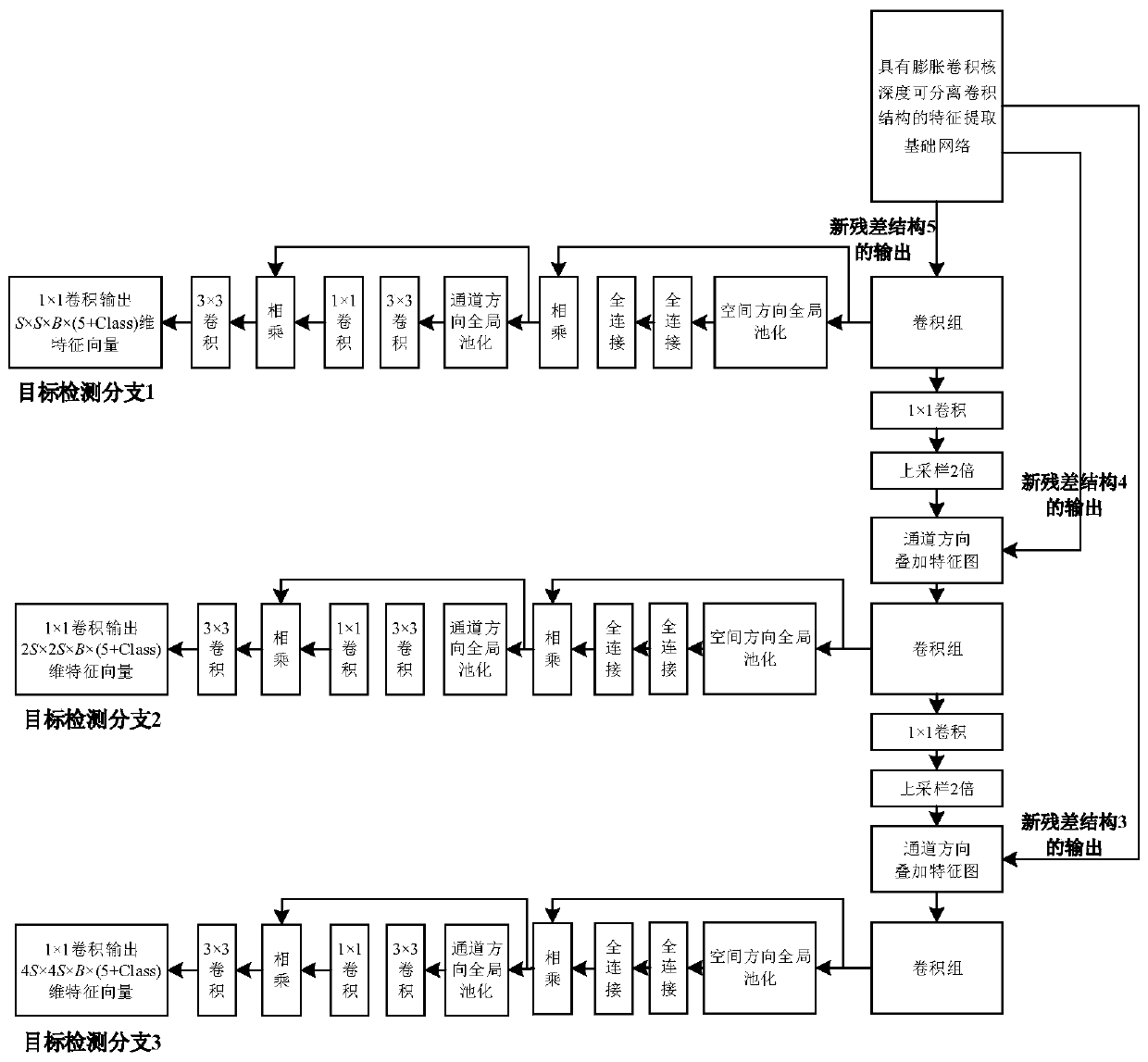

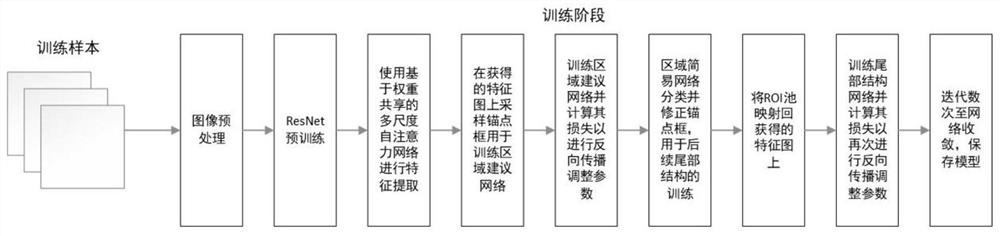

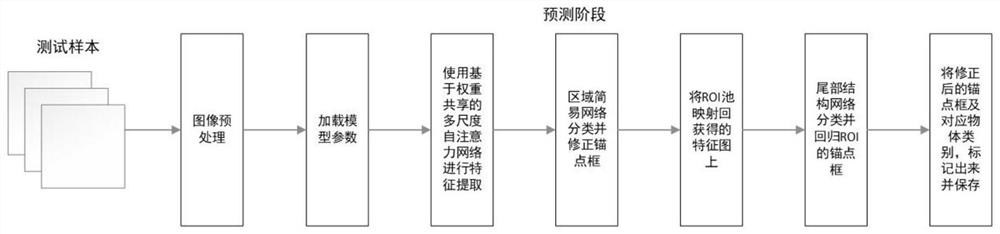

Multi-scale self-attention target detection method based on weight sharing

Inactive | CN111967480A | Add input image | Reduce redundancy | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention provides a multi-scale self-attention target detection method based on weight sharing. A device for the method is composed of a weight-shared multi-scale convolutional network and a visual self-attention module. The method takes multi-scale processing, an attention mechanism, fine-grained feature extraction and model lightness into account to a certain extent. Residual blocks and expansion convolution are fused into a multi-scale convolution network, which enables deep feature extraction while keeping the model lightweight. The visual attention mechanism is introduced into the multi-scale convolution network, so that the network can pay attention to key areas in the image and computing resources are saved. The method is widely adaptable and highly robust, and can be used for various target detection tasks. The method was evaluated on a well-known data set; the experimental results show that it achieves high accuracy while keeping the model lightweight, with an average accuracy of 73.6, which demonstrates its effectiveness.

Owner:SHANGHAI MARITIME UNIVERSITY

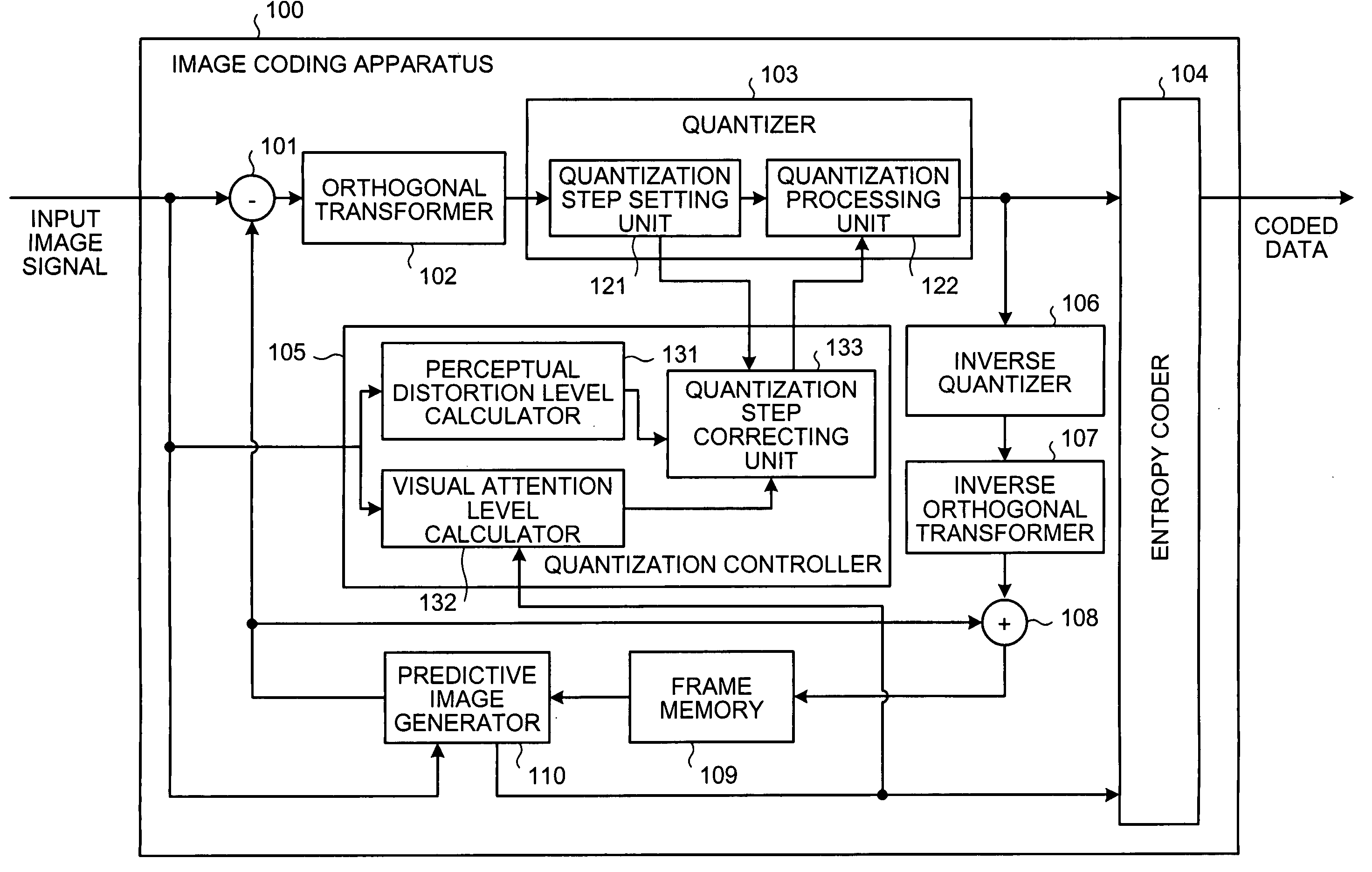

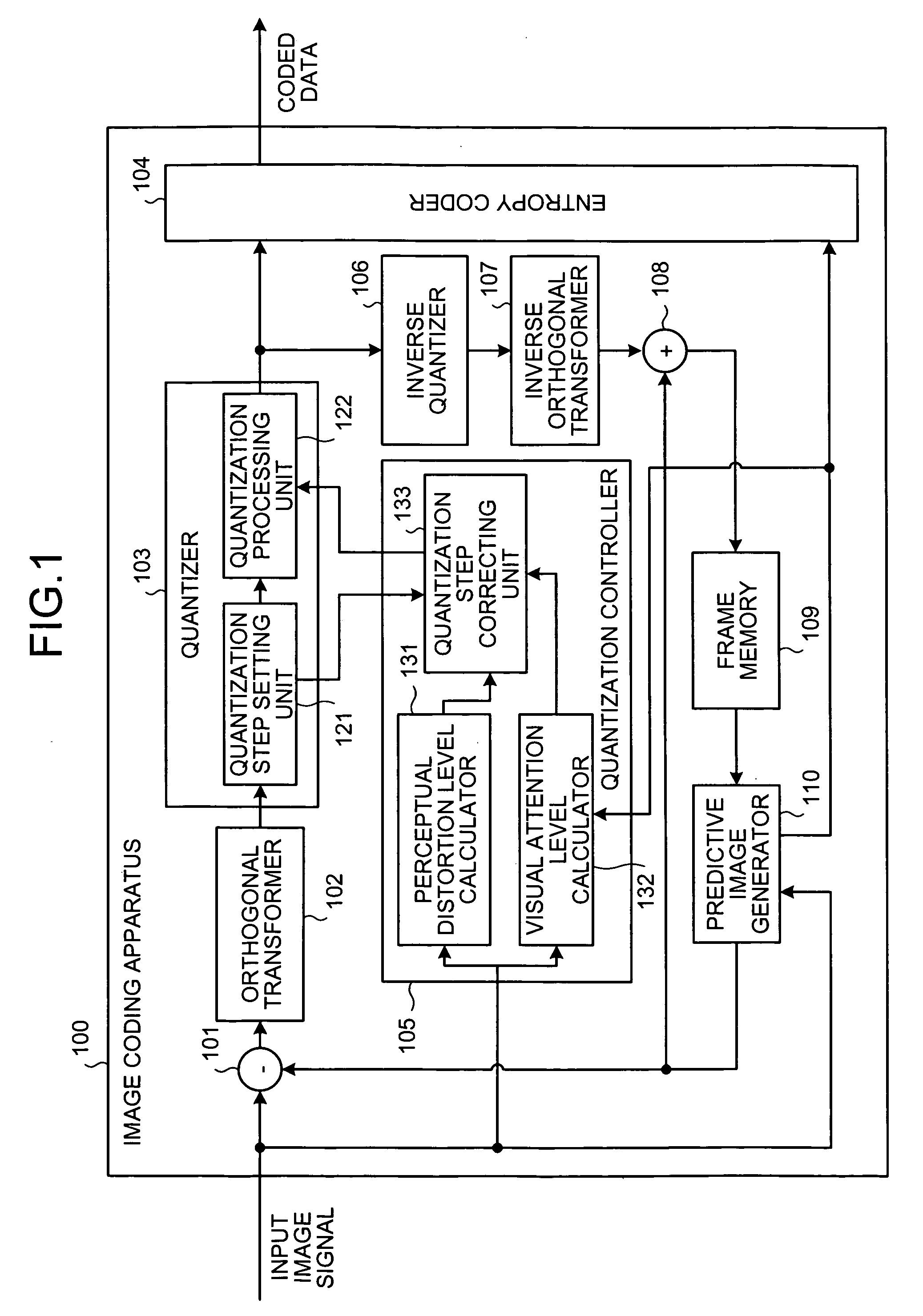

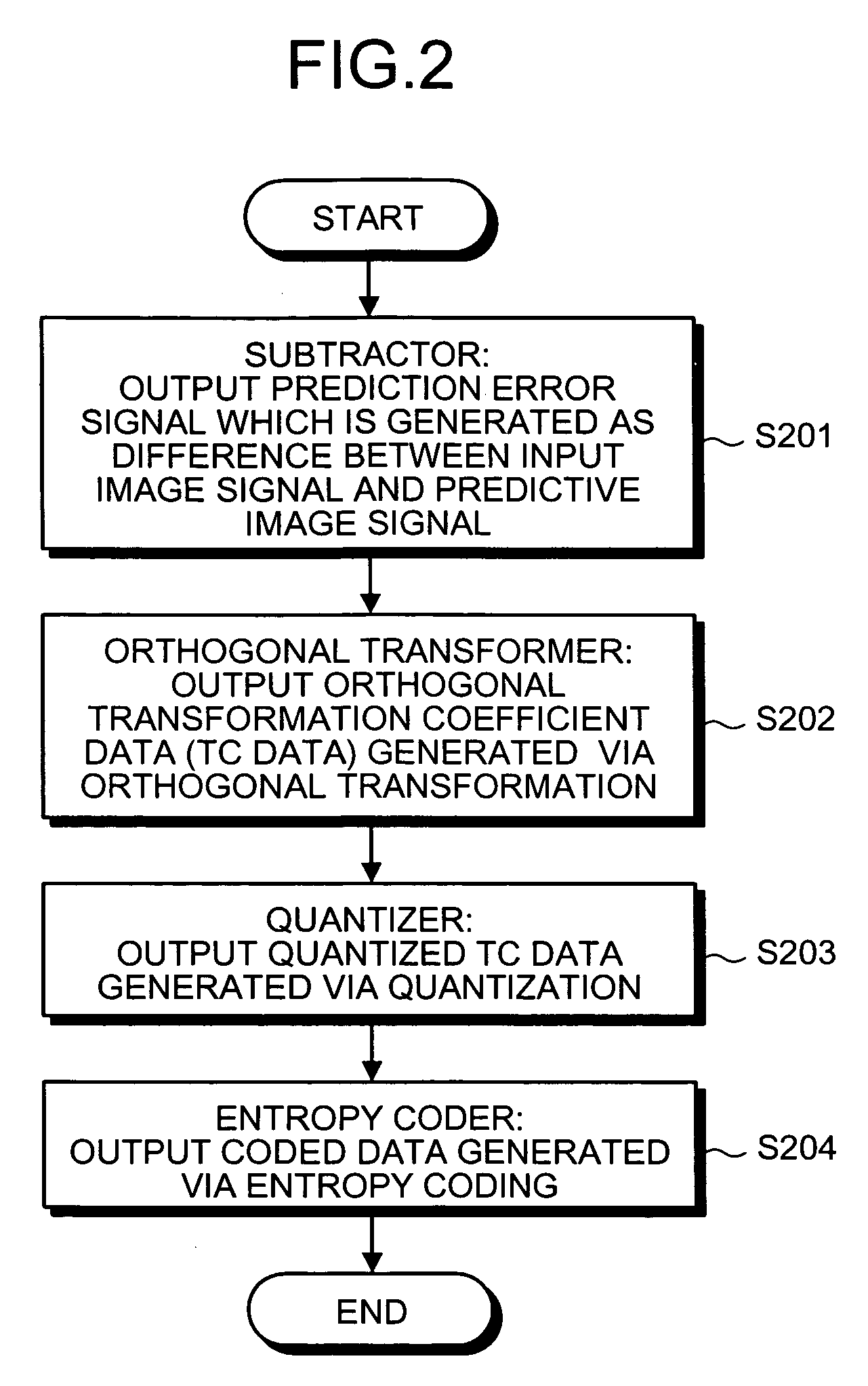

Apparatus and method for coding image based on level of visual attention and level of perceivable image quality distortion, and computer program product therefor

Inactive | US20050286786A1 | Easily visually perceived | Image coding | Character and pattern recognition | Coding block | Imaging quality

An apparatus for coding an image includes a setting unit that sets quantization width for each coded block of an image frame of image data. The apparatus also includes a visual attention calculating unit that calculates a level of visual attention to a first element for each coded block of the image frame; and a perceptual distortion calculating unit that calculates a level of perceptual distortion of a second element whose distorted image quality is easily visually perceived, for each coded block of the image frame. The apparatus also includes a correcting unit that corrects the quantization width based on the level of visual attention and the level of perceptual distortion; and a quantizing unit that quantizes the image data based on the corrected quantization width.

Owner:KK TOSHIBA
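
The correcting and quantizing units in the abstract above can be illustrated with a short sketch. The abstract does not give the correction formula, so the multiplicative rule below, the gains `k_att` and `k_dist`, the bounds `q_min` and `q_max`, and the assumption that both levels are normalised to the range 0 to 1 are all hypothetical choices made for illustration.

```python
def corrected_qwidth(base_q, attention, distortion,
                     k_att=0.5, k_dist=0.5, q_min=1, q_max=64):
    """Shrink a block's quantization width where visual attention or
    perceivable distortion is high, so those blocks are coded more finely.

    attention and distortion are assumed to lie in [0, 1].
    """
    scale = (1.0 - k_att * attention) * (1.0 - k_dist * distortion)
    return max(q_min, min(q_max, round(base_q * scale)))


def quantize(coefficients, qwidth):
    """Uniform quantization of a block's coefficients with the given width."""
    return [round(c / qwidth) for c in coefficients]
```

With these defaults, a block with base width 20 keeps width 20 when both levels are 0, drops to 10 at full attention, and drops to 5 when both levels are at their maximum.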

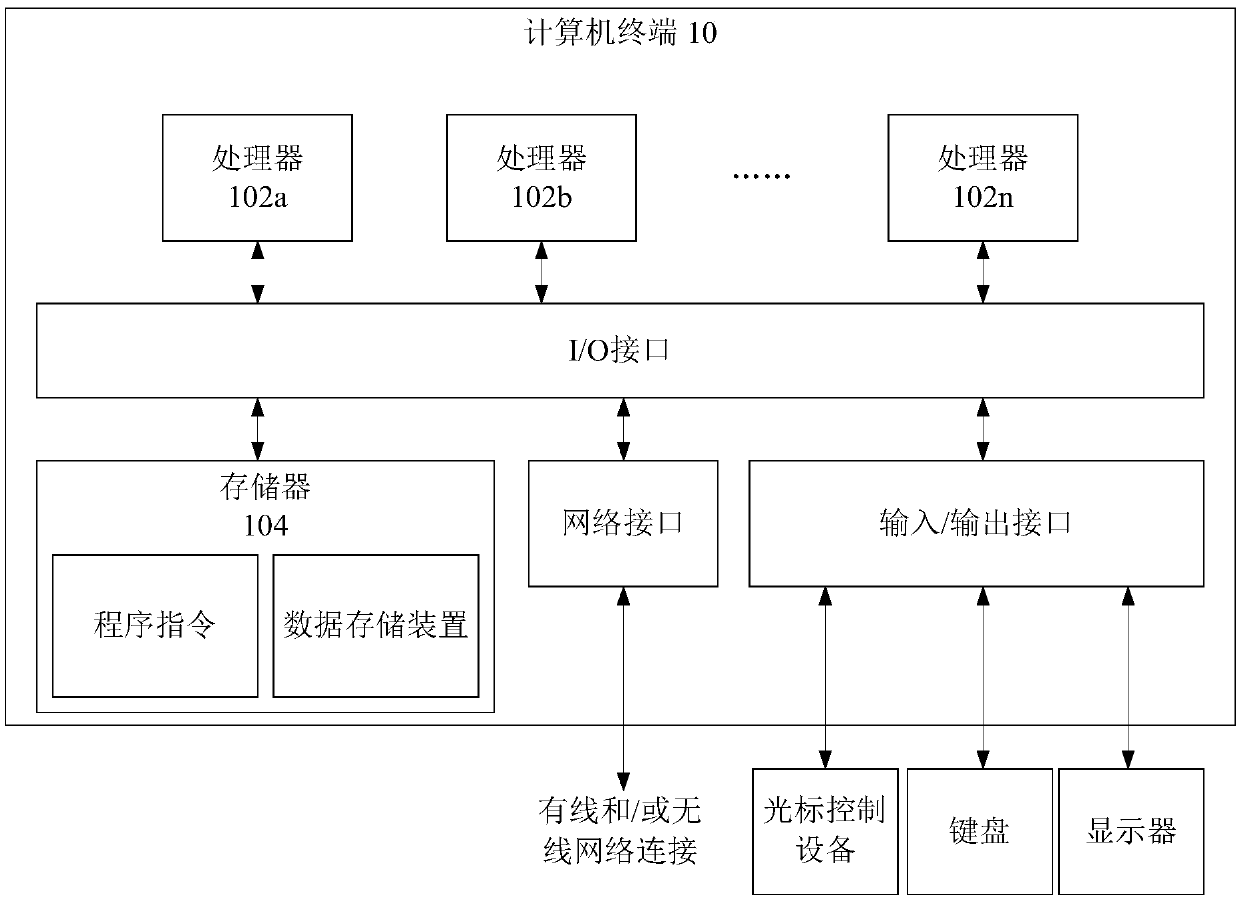

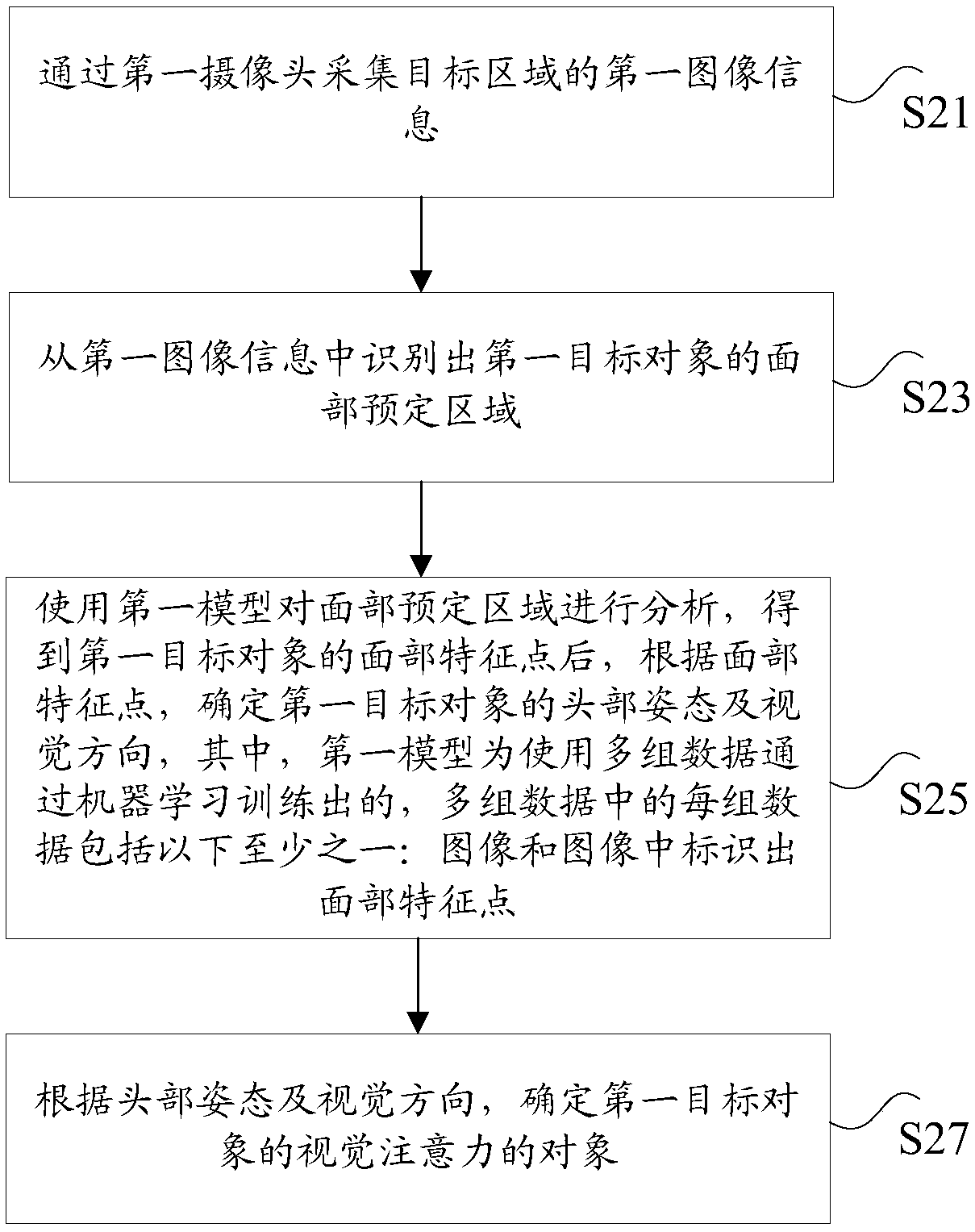

Visual attention recognition method and system, storage medium and processor

Pending | CN110674664A | Solve technical problems that cannot accurately recognize the user's attention | Character and pattern recognition | Machine learning | Radiology | Nuclear medicine

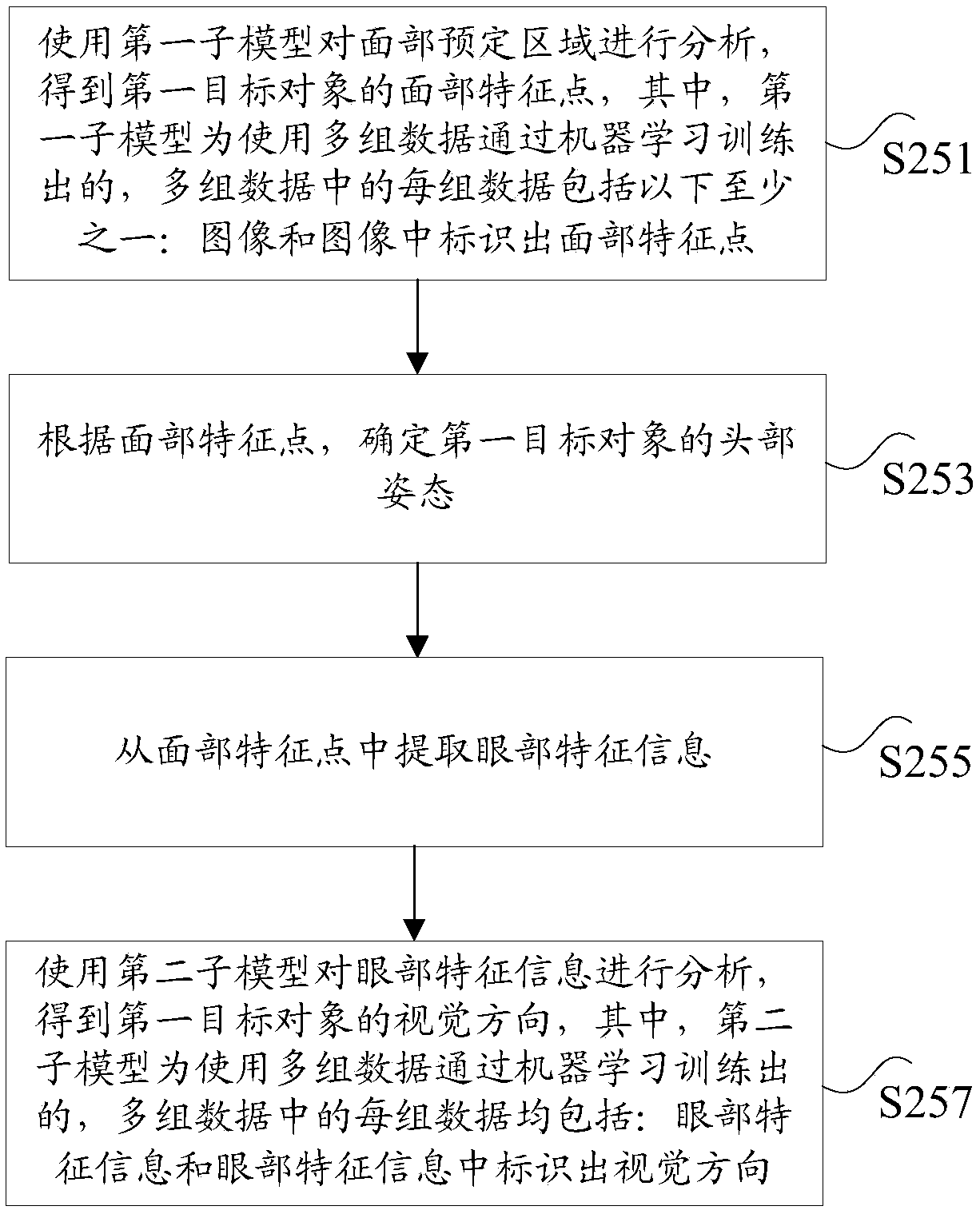

The invention discloses a visual attention recognition method and system, a storage medium and a processor. The method comprises the following steps: acquiring first image information of a target area through a first camera; identifying a predetermined face area of a first target object from the first image information; analyzing the predetermined face area with a first model to obtain facial feature points of the first target object, and then determining the head posture and the visual direction of the first target object according to the facial feature points, wherein the first model is trained through machine learning using multiple sets of data, each set comprising at least one of the following: an image and the facial feature points identified in the image; and determining the object of visual attention of the first target object according to the head posture and the visual direction. The technical problem that the attention of the user cannot be accurately recognized in the prior art is solved.

Owner:BANMA ZHIXING NETWORK HONGKONG CO LTD

Ship target detection method based on adaptive layered high-resolution SAR image

Active | CN111008585A | Improve execution efficiency | Reduce instability | Scene recognition | Saliency map | Engineering

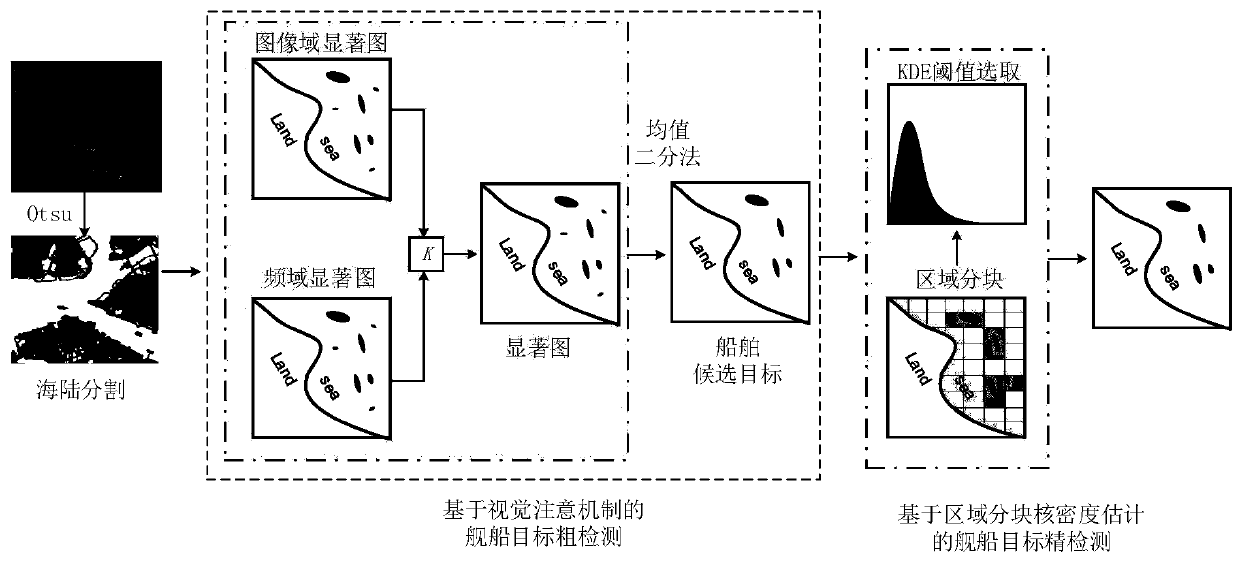

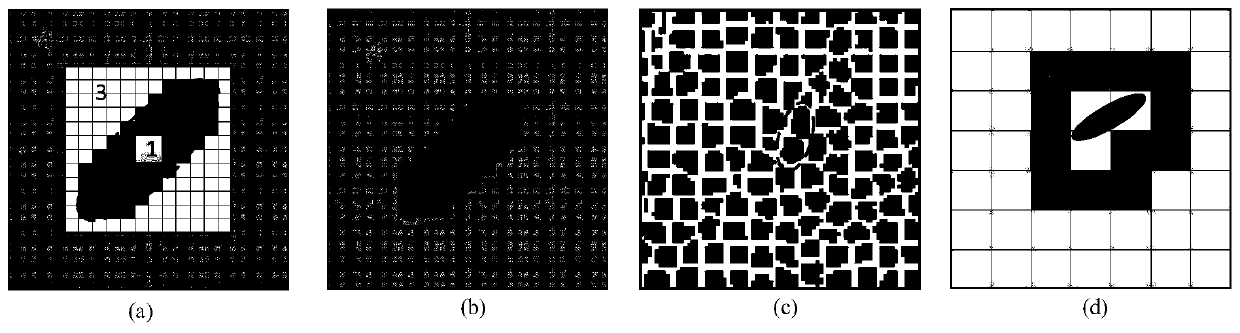

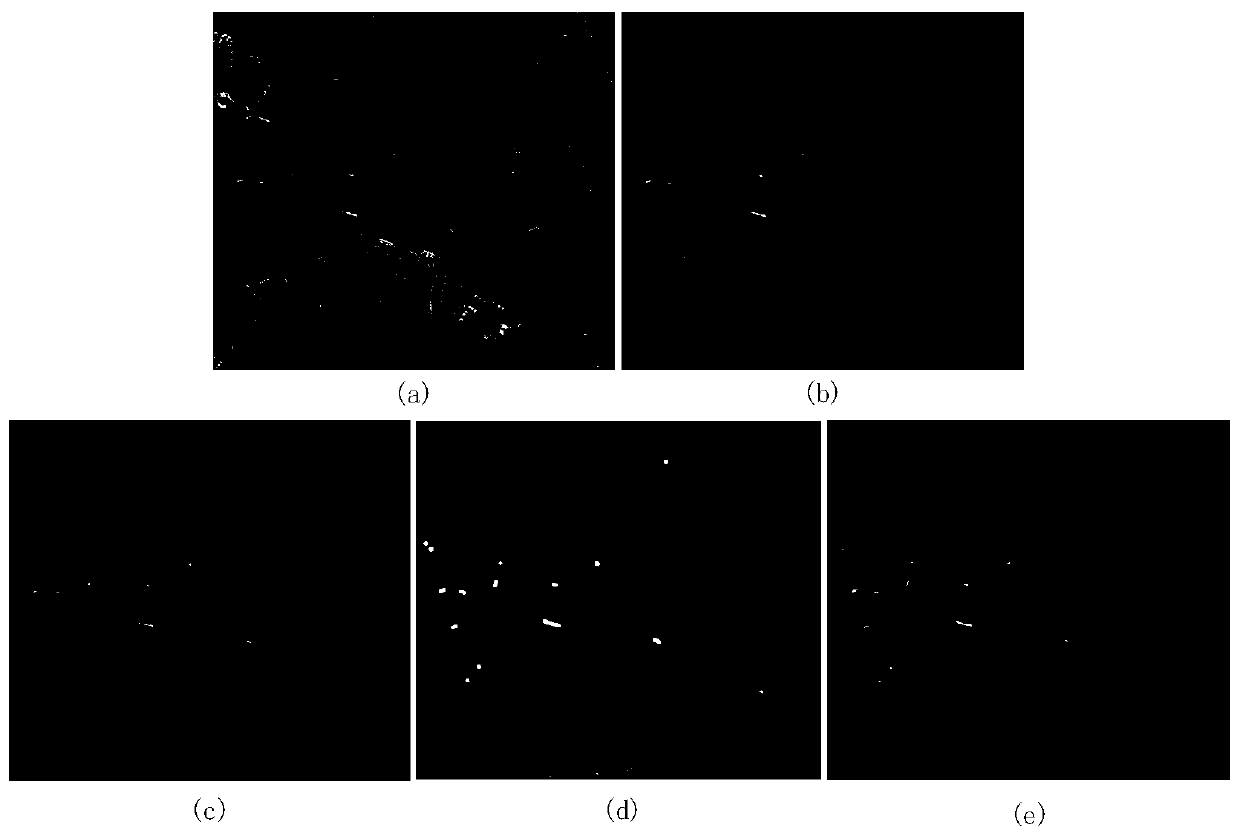

The invention discloses a ship target detection method based on an adaptive layered high-resolution SAR image. First, a visual attention mechanism combining the image domain and the frequency domain is constructed, which not only better preserves the details of the saliency map but also has an obvious speed advantage over other attention mechanisms. Second, a candidate target region segmentation threshold is obtained by a mean dichotomy, which eliminates the instability caused by hard threshold selection. Finally, fine detection of ship targets is carried out using a frequency-domain-accelerated block kernel density estimation method. The region partitioning idea avoids the computational burden of super-pixel segmentation, and kernel density estimation eliminates the risk of clutter model mismatch. The frequency-domain acceleration further improves the execution efficiency of the detection method. Compared with existing high-resolution SAR image ship target detection methods, the method keeps the constant false alarm characteristic while detecting the target.

Owner:XIDIAN UNIV
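
The abstract above obtains the segmentation threshold by a "mean dichotomy" rather than a hard-coded value, without defining the term. One plausible reading, assumed here and not confirmed by the source, is an iterative mean-split threshold: split the saliency values at the current threshold, then move the threshold to the midpoint of the two group means until it stops changing. The function name `mean_split_threshold` and the parameters `eps` and `max_iter` are hypothetical.

```python
def mean_split_threshold(values, eps=1e-6, max_iter=100):
    """Iterative mean-split threshold over a flat list of saliency values.

    This is an assumed interpretation of "mean dichotomy", not the
    patent's stated procedure.
    """
    t = sum(values) / len(values)  # start from the global mean
    for _ in range(max_iter):
        low = [v for v in values if v <= t]
        high = [v for v in values if v > t]
        if not low or not high:
            break  # all values fall on one side; nothing left to split
        new_t = (sum(low) / len(low) + sum(high) / len(high)) / 2
        converged = abs(new_t - t) < eps
        t = new_t
        if converged:
            break
    return t
```

Because the threshold adapts to the data, a saliency map with a bright target on a dark background yields a value between the two populations without any manually chosen constant.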

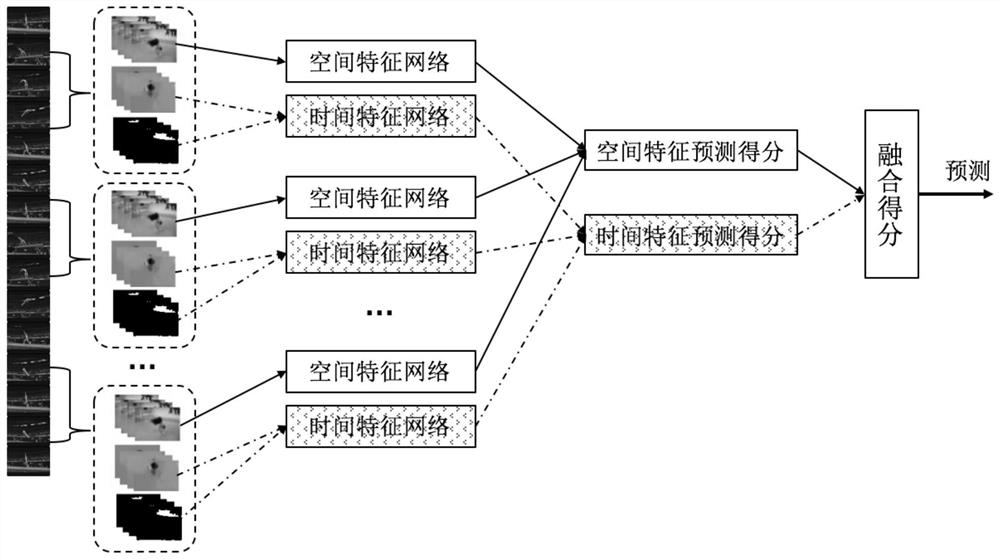

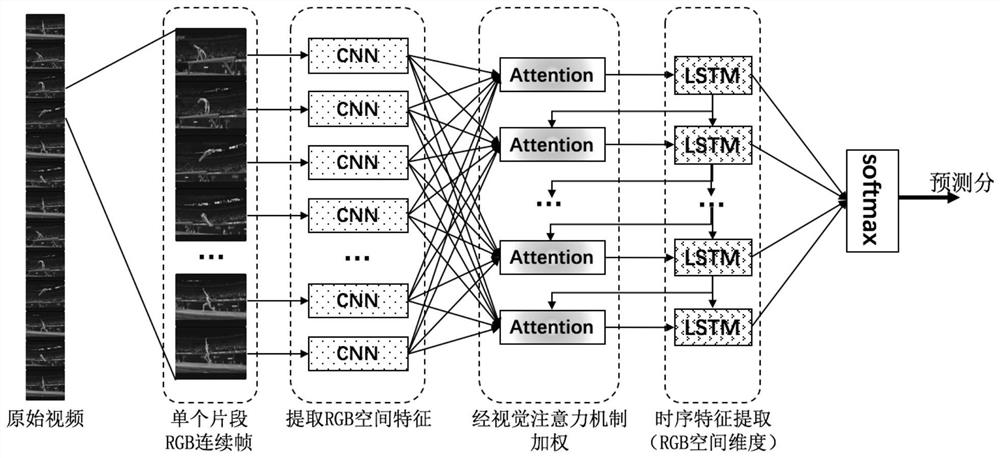

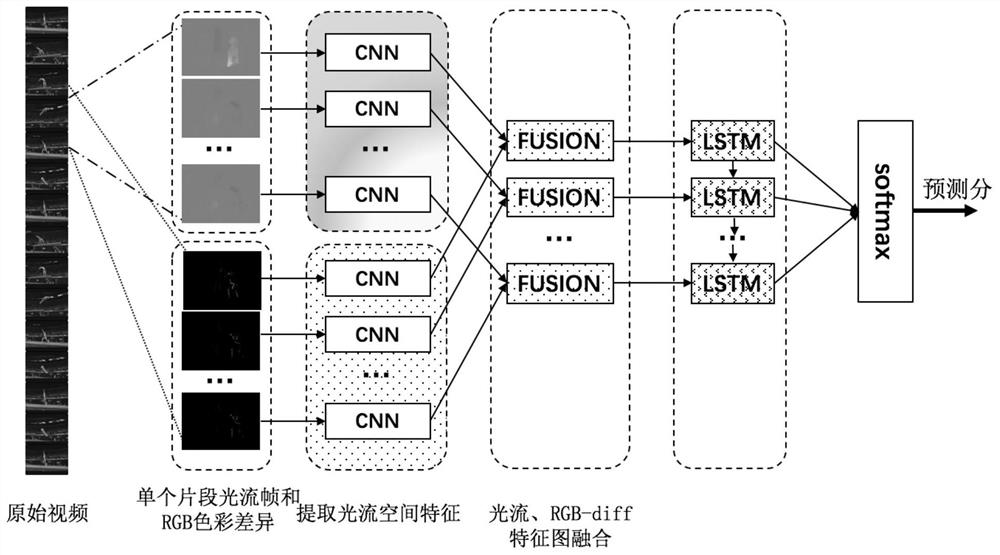

Video behavior recognition method based on spatial-temporal feature fusion deep learning network

Pending | CN111950444A | Lose weight | Increase influence | Character and pattern recognition | Neural architectures | Temporal information | Data set

The invention discloses a video behavior recognition method based on a spatio-temporal feature fusion deep learning network. Two independent networks extract the temporal and spatial information of a video respectively; each network adds an LSTM on top of a CNN to learn the video's temporal information, and the temporal and spatial information are fused with a certain strategy. The accuracy of the FSTFN on a data set is improved by 7.5% compared with the network model proposed by Tran, which does not introduce a spatio-temporal network, and by 4.7% compared with a common two-stream network model. The video is processed in segments: each video sample is sampled into a plurality of segments that are input into the network composed of a CNN and an LSTM, and covering the time range of the whole video solves the problem of long-term dependence in video behavior recognition. A visual attention mechanism is introduced at the end of the CNN, which reduces the weight of non-visual subjects in the network model, increases the influence of the visual subject in a video image frame, and makes better use of the spatial features of the video.

Owner:BEIJING NORMAL UNIV ZHUHAI

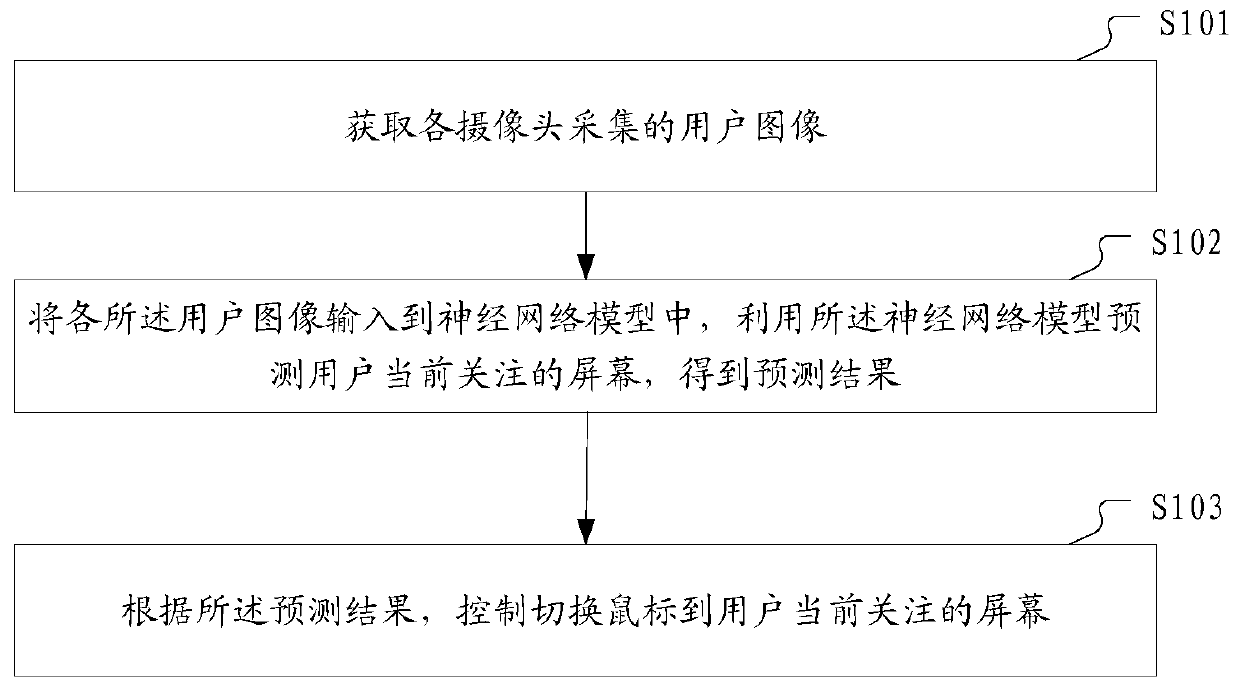

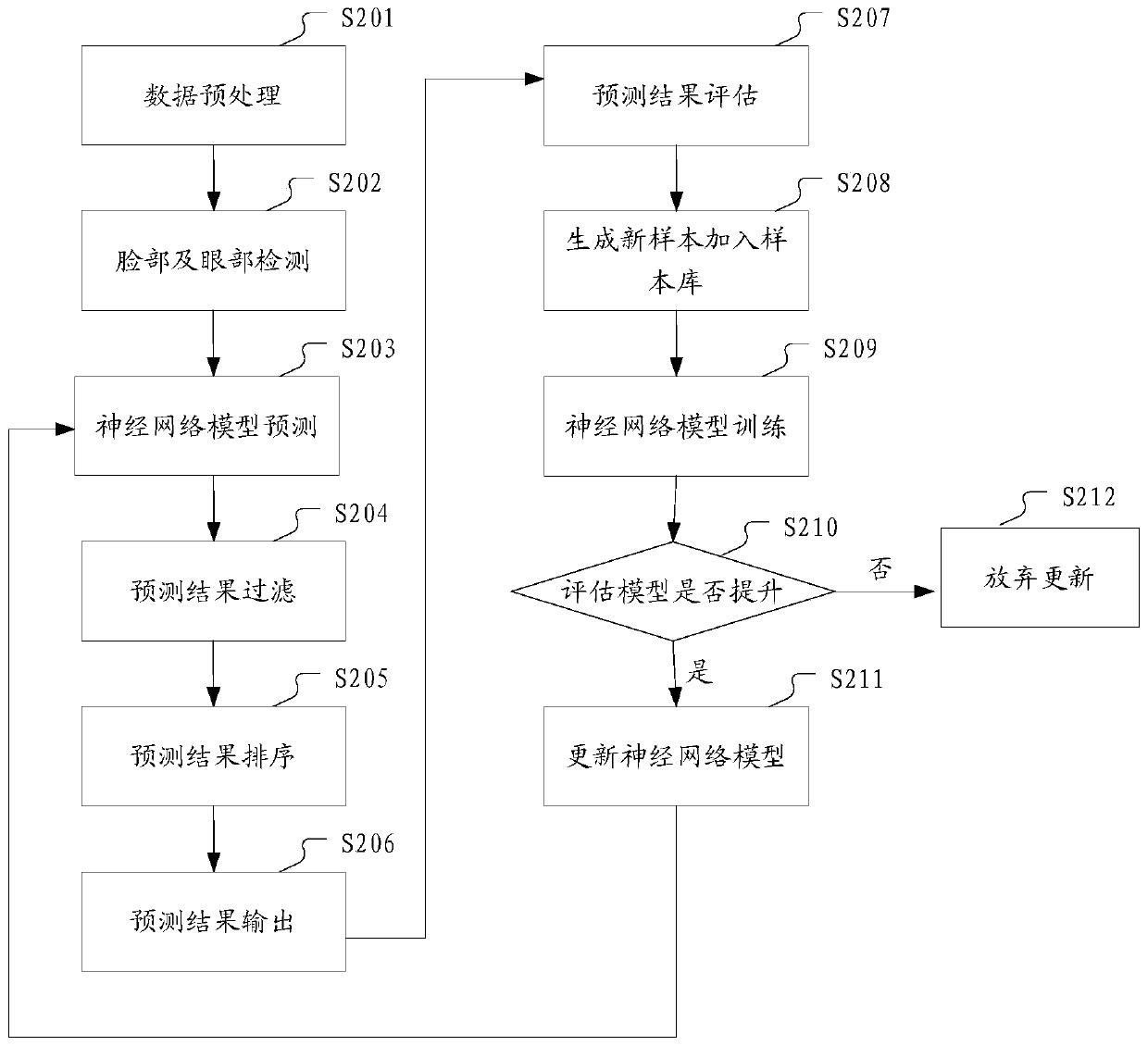

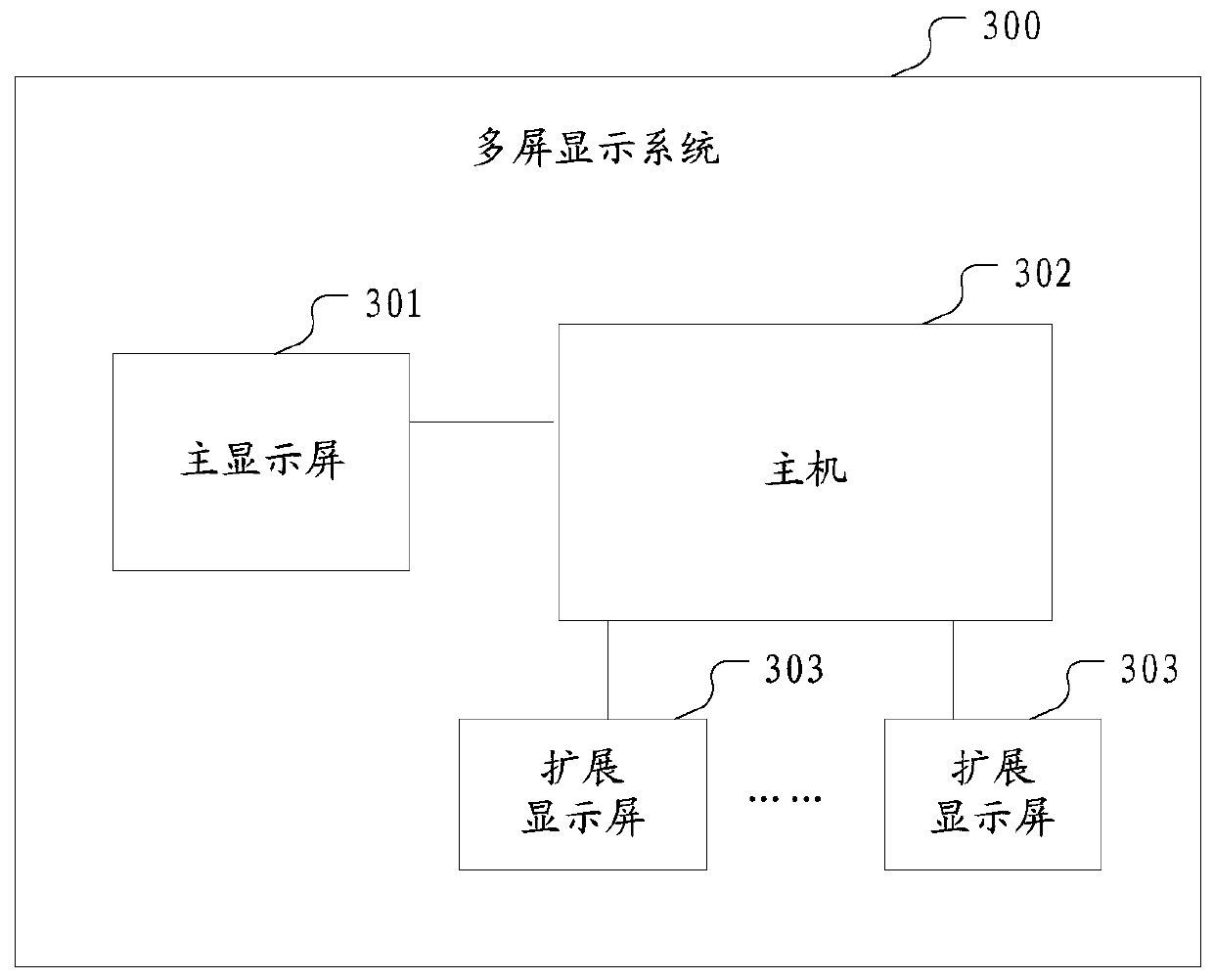

Multi-screen display system and mouse switching control method thereof

Active | CN111176524A | Quick Response Operation | Improve experience | Static indicating devices | Acquiring/recognising eyes | Medicine | Computer graphics (images)

The invention discloses a multi-screen display system and a mouse switching control method. The mouse switching control method is applied to a multi-screen display system comprising a main display screen and at least one extended display screen, and comprises the steps of: obtaining a user image collected by each camera, the cameras being installed on the main display screen and the extended display screen respectively; inputting each user image into a neural network model, and using the neural network model to predict the screen the user is currently paying attention to, thereby obtaining a prediction result; and, according to the prediction result, switching the mouse to the screen the user is currently paying attention to. On the basis of visual attention self-learning, the system and method predict the screen the user is currently operating by analyzing where the user's visual attention is directed, and automatically switch the mouse to the corresponding screen position, which improves the user experience.

Owner:GOERTEK INC

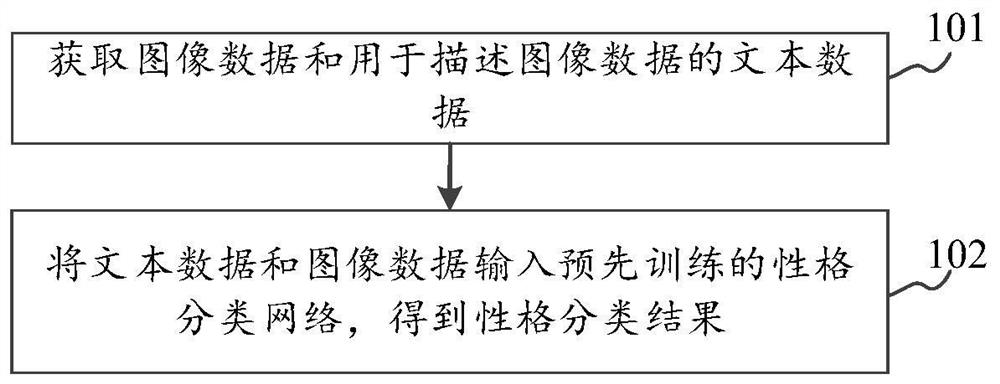

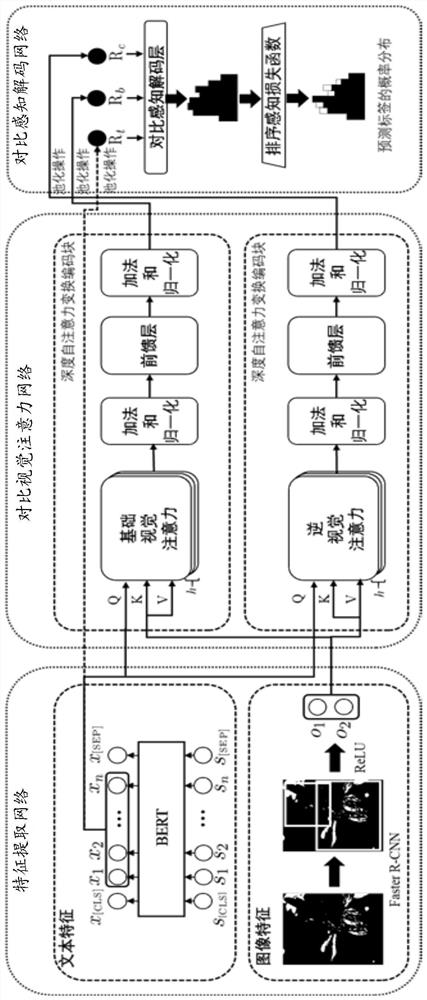

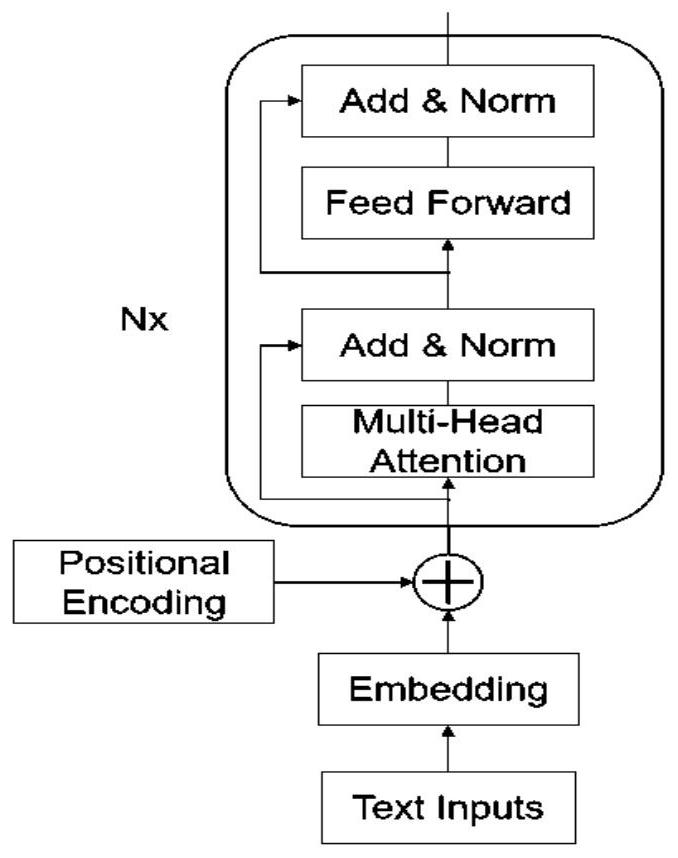

Text and image fused bimodal character classification method and device

Active | CN112949622A | Address limitations | Solve the problem of not being able to capture information about cognitive differences | Character and pattern recognition | Neural architectures | Feature extraction | Imaging Feature

The invention relates to a text and image fused bimodal character classification method and device, and belongs to the technical field of artificial intelligence. The method comprises the following steps: inputting text data and image data into a pre-trained character classification network, and obtaining a character classification result. The character classification network comprises a feature extraction network, a contrast visual attention network and a contrast perception decoding network. A text feature extraction branch in the feature extraction network is used for extracting a word embedding vector of the text data, and an image feature extraction branch is used for extracting an image region vector of the image data. A basic visual attention branch in the contrast visual attention network is used for extracting an image object aligned with the text data and calculating an aligned visual representation, and an inverse visual attention branch is used for extracting an image object not aligned with the text data and calculating a non-aligned visual representation. The contrast perception decoding network is used for predicting character categories. The problems that the classification performance is poor and cognitive difference information cannot be captured are solved.

Owner:SUZHOU UNIV

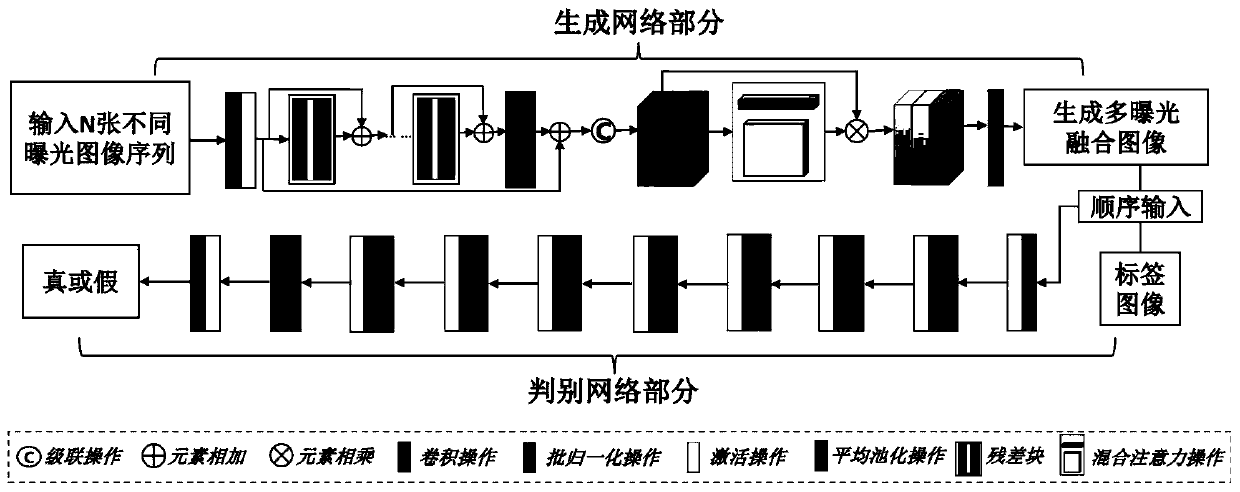

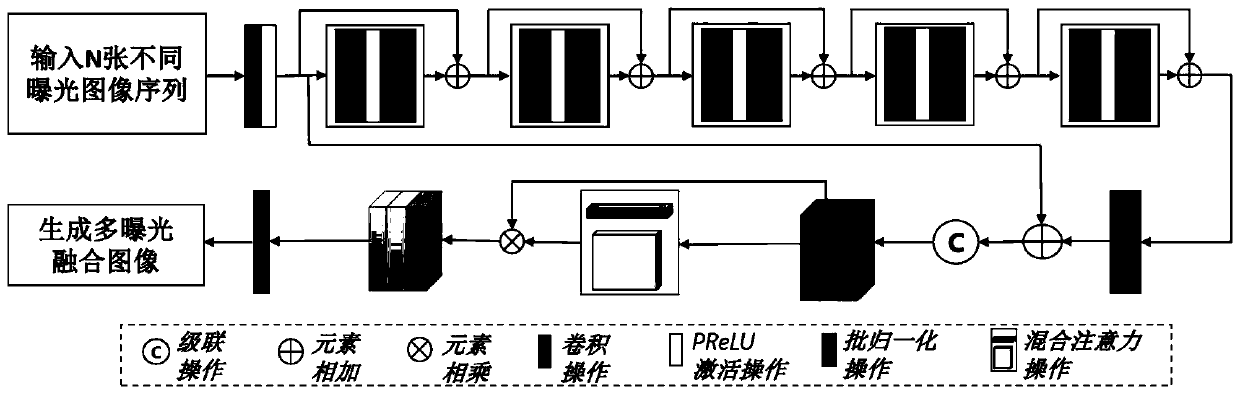

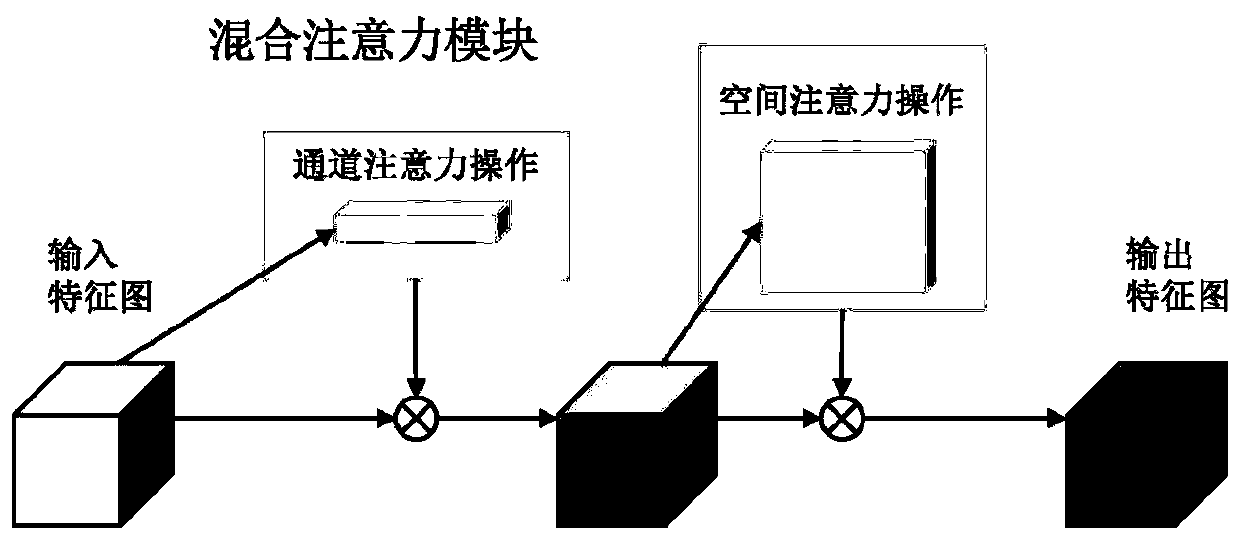

Multi-exposure image fusion method based on attention generative adversarial network

Pending | CN111429433A | Easy to integrate | Image enhancement | Image analysis | Pattern recognition | Generative adversarial network

The invention provides a multi-exposure image fusion method based on an attention generative adversarial network. The idea of the attention mechanism closely matches the detail weighting problem in multi-exposure fusion: the weight of each input image can be adaptively selected by applying channel attention, and the weights of different spatial positions are adaptively selected by using spatial attention. The technology has a wide application prospect in various multimedia vision fields. In the algorithm, a new attention generative adversarial network is designed for the multi-exposure image fusion task, and a visual attention mechanism is introduced into the generative network, which helps the network adaptively learn the weights of different input images and different spatial positions to achieve a better fusion effect.

Owner:BEIJING UNIV OF TECH

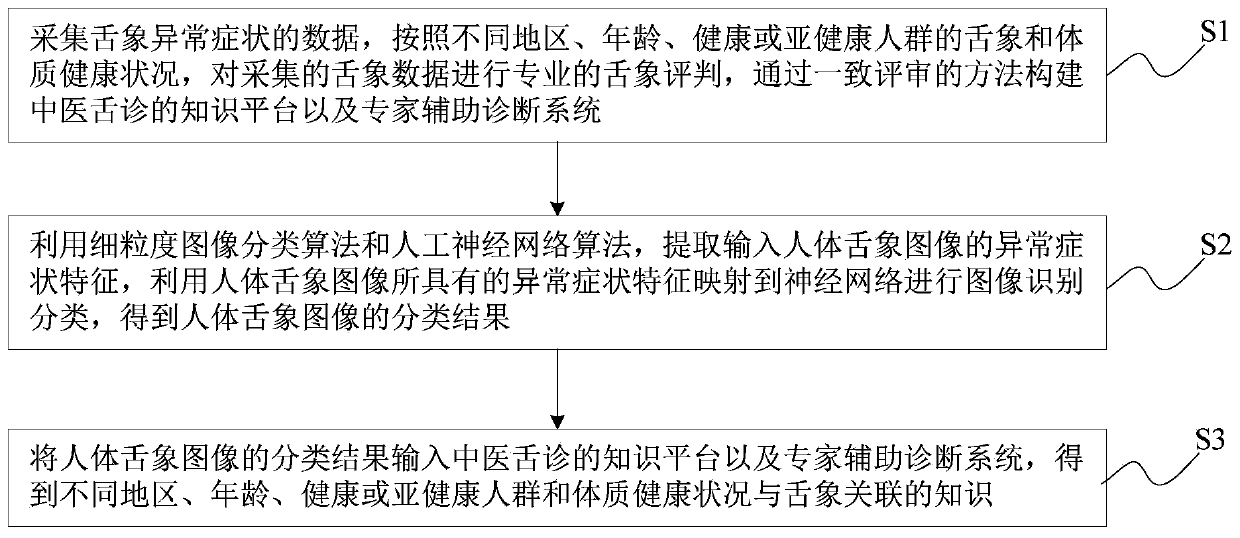

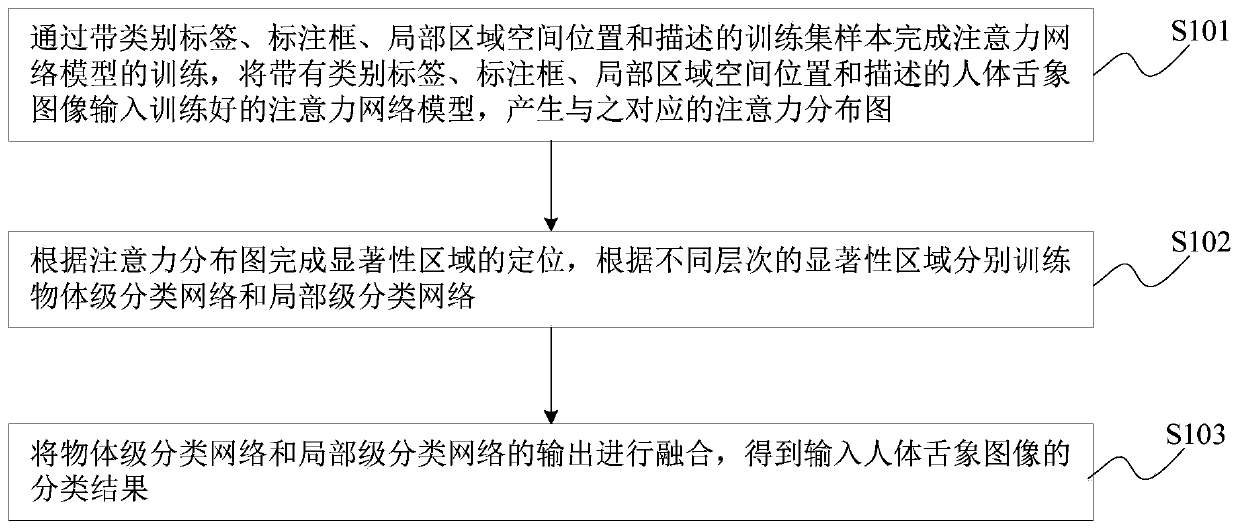

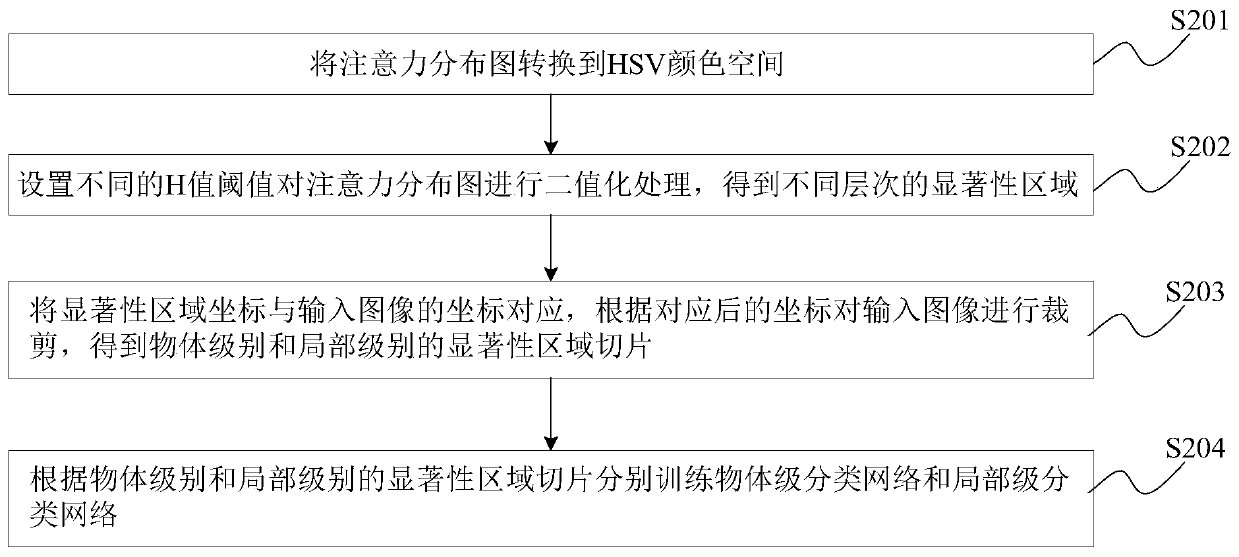

Intelligent screening method and system thereof for abnormal tongue images

Pending | CN111524093A | Valid representation | Show well | Image enhancement | Image analysis | Medicine | Screening method

The invention provides an intelligent screening method and an intelligent screening system for abnormal tongue images. The beneficial effects are as follows. By constructing a knowledge platform of traditional Chinese medicine tongue diagnosis and an expert auxiliary diagnosis system, an objective and quantitative means of measurement is provided for judging tongue diagnosis information, and the diagnosis correctness is improved. A fine-grained image classification algorithm based on an attention mechanism is adopted; the attention mechanism reflects how the human vision system perceives its surroundings differently, that is, attention is focused on salient regions in the environment. A convolutional neural network is used to simulate the visual attention properties of human beings, so that the information of the classification targets is fully utilized and the accuracy of fine-grained tongue image classification can be improved. Inspired by the visual attention mechanism, spatial position features are introduced into classification and modeled when applying the fine-grained image classification algorithm: a plurality of key regions of a tongue image are located, and modeling is carried out according to the spatial relationship among all parts of the tongue image, which can improve the integrity and discrimination of the part features.

Owner:中润普达(十堰)大数据中心有限公司

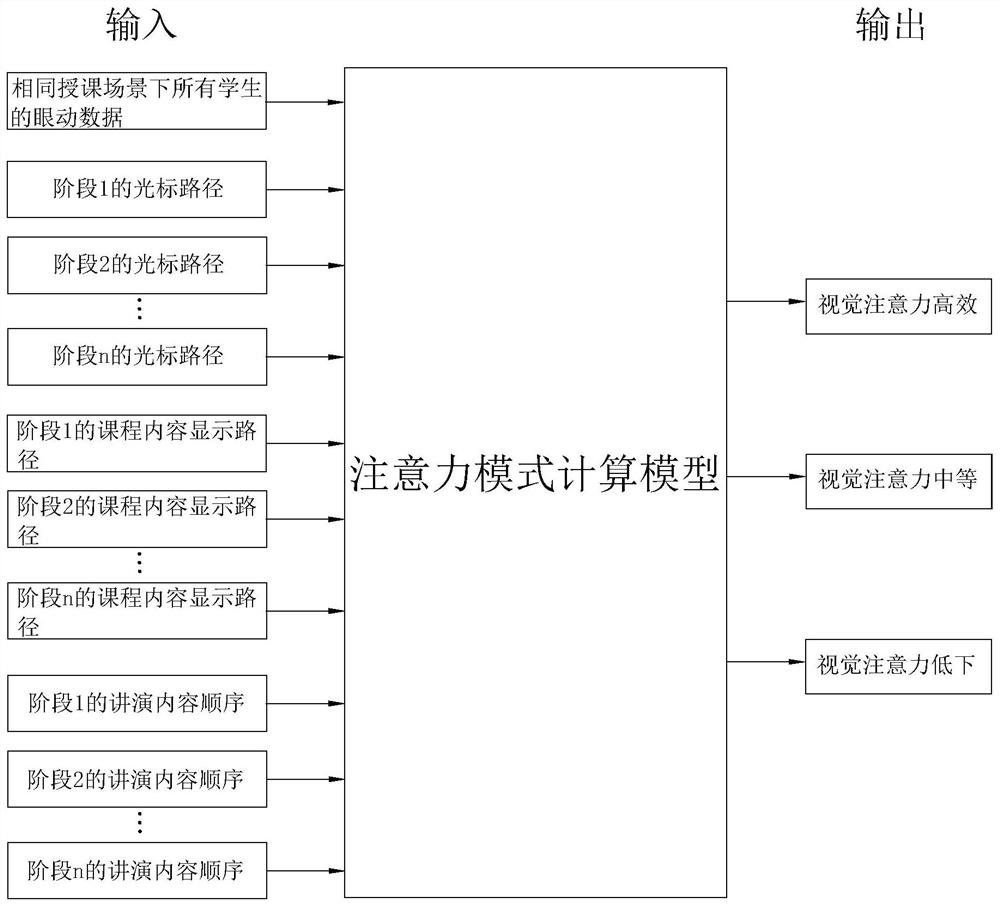

Teaching quality evaluation method, device and system based on eye movement tracking

Pending | CN112070641A | Quantify Learning Efficiency | Achieving Quality of Learning | Input/output for user-computer interaction | Data processing applications | Medicine | Engineering

The invention discloses a teaching quality evaluation method based on eye movement tracking. The method comprises the steps of: calculating a visual attention score of a student according to the correlations between the visual path of the student and, respectively, the cursor path in the teacher's teaching process, the display path of course contents in the teaching process, and the teaching content sequence in the teaching process; and generating the attention mode of the student according to the relationship between the attention score and a preset attention score threshold, so that the attention of the student in the online learning process is visualized and the learning efficiency of the student can be quantified. Furthermore, according to the attention mode, the quantitative index and the assessment result of the student's previous courses in the same subject, the attention mode, the quantitative index and the assessment result of the student's future courses in that subject are predicted, so that the student's future learning quality and assessment results can be predicted. In addition, the invention also discloses a teaching quality evaluation device and system based on eye movement tracking, and a computer readable storage medium.

Owner:东莞市东全智能科技有限公司

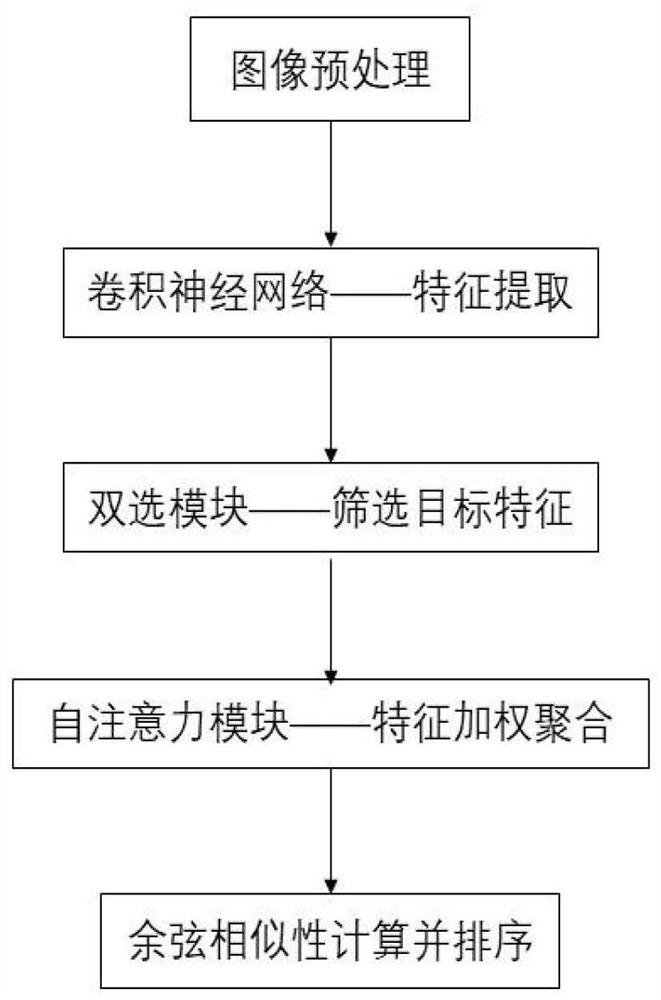

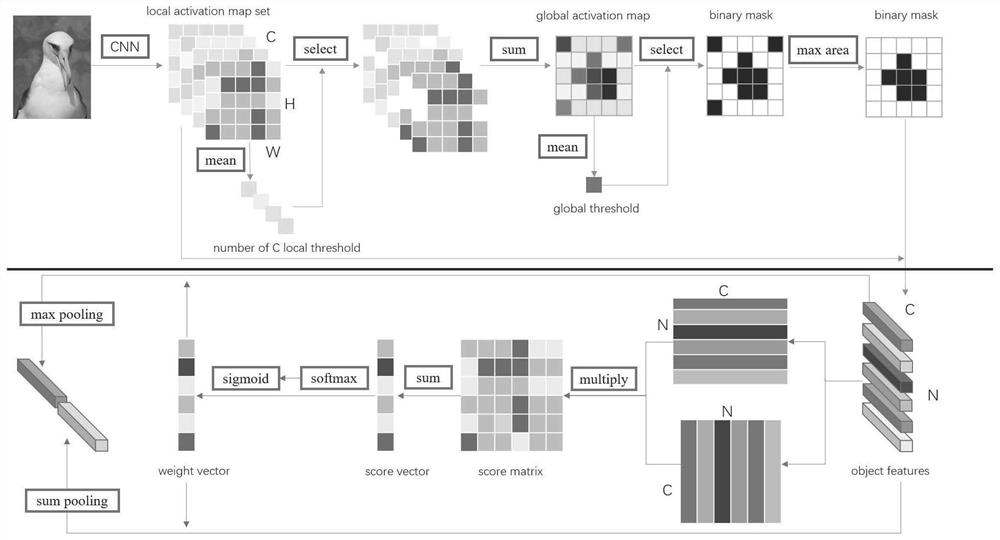

Fine-grained image retrieval method based on self-attention mechanism weighting

Active | CN111984817A | Improve accuracy | Has practical value | Digital data information retrieval | Character and pattern recognition | Visual technology | Visual perception

The invention relates to the technical field of image retrieval and computer vision, in particular to a fine-grained image retrieval method based on visual attention mechanism weighting. The method comprises the following steps: image preprocessing, in which the longest side of an image is set to 500 pixels; feature extraction, in which the image is input into a convolutional neural network and the features of the last convolutional layer are selected and output; target feature selection, in which a local activation graph is first optimized and local feature vectors are then selected according to the activation graph result, so as to achieve more accurate target feature selection; feature weighted aggregation, in which the importance of each feature is evaluated so that the weighted fine-grained local features are still represented after pooling aggregation, improving the precision of fine-grained retrieval; and image retrieval, in which the cosine similarity between the feature vectors of the query image and each database image is calculated. An image feature extraction and coding detail graph is shown in figure 1. According to the method, fine-grained image retrieval can be realized, and the retrieval accuracy is improved.
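Three of the steps above (resizing, weighted aggregation, cosine-similarity retrieval) can be sketched in a few lines. This is an illustrative sketch only: the function names are hypothetical, the local features and their importance weights are taken as given rather than computed by a network, and weighted sum-pooling is assumed as the aggregation.

```python
from math import sqrt

def resized_shape(width, height, longest=500):
    """Scale (width, height) so the longest side equals `longest`, keeping aspect ratio."""
    scale = longest / max(width, height)
    return round(width * scale), round(height * scale)

def weighted_aggregate(local_features, weights):
    """Weighted sum-pool a list of local feature vectors into one descriptor."""
    dim = len(local_features[0])
    return [sum(w * f[i] for f, w in zip(local_features, weights)) for i in range(dim)]

def cosine_similarity(u, v):
    """Cosine of the angle between u and v (0.0 if either vector is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = sqrt(sum(a * a for a in u))
    norm_v = sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def rank_database(query, database):
    """Return database keys sorted by decreasing cosine similarity to the query."""
    return sorted(database, key=lambda k: cosine_similarity(query, database[k]), reverse=True)
```

Because cosine similarity ignores vector length, the scale of the importance weights does not affect the ranking; only their relative sizes do.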

Owner:HUNAN UNIV

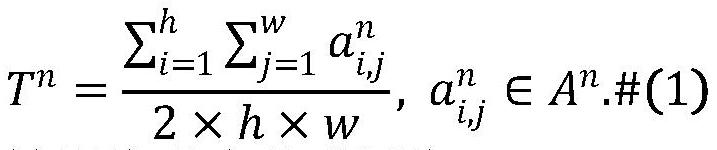

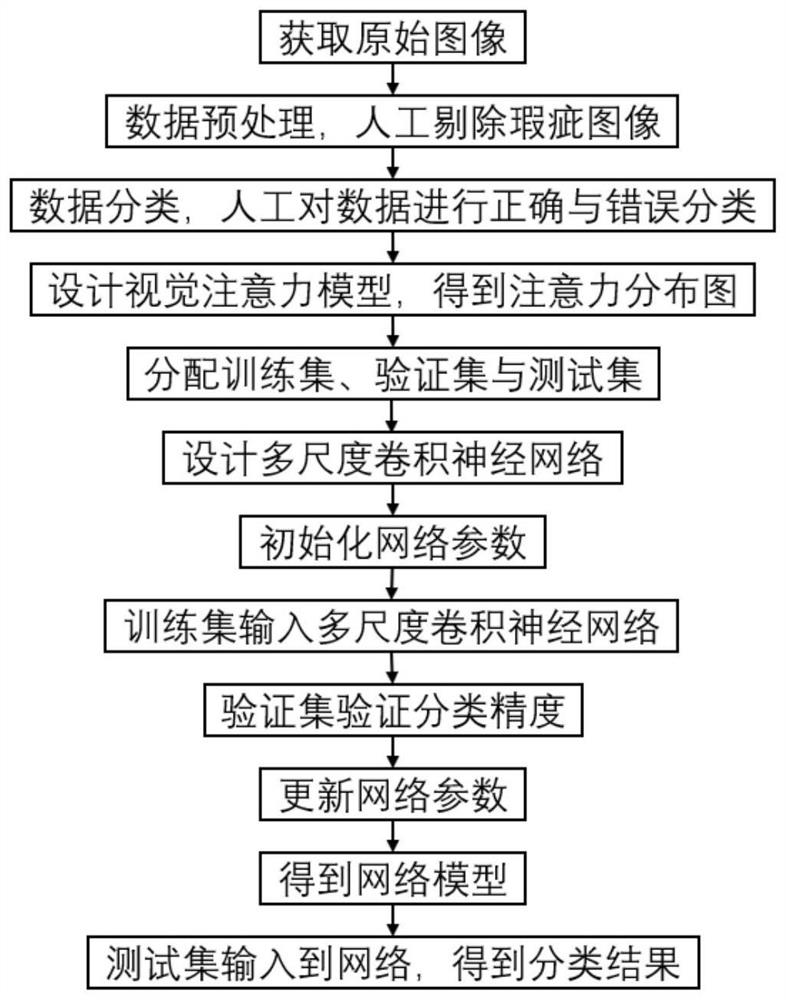

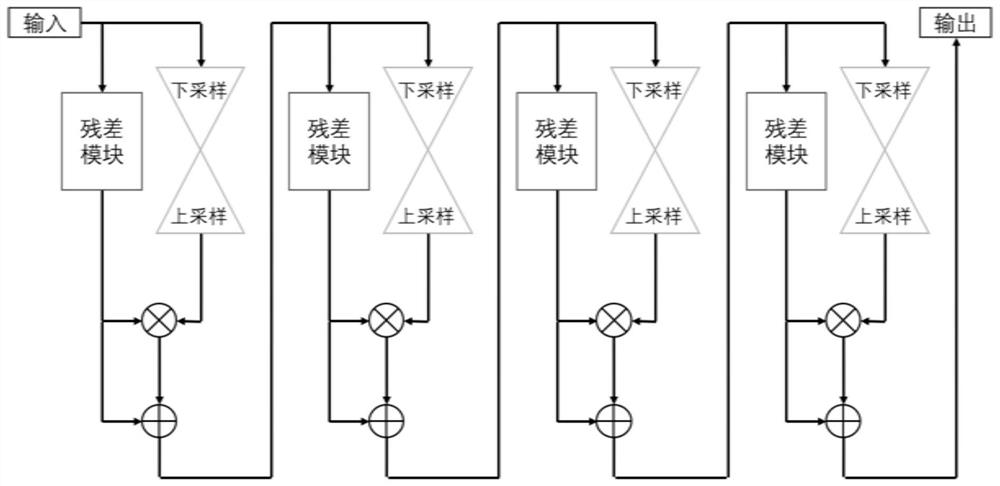

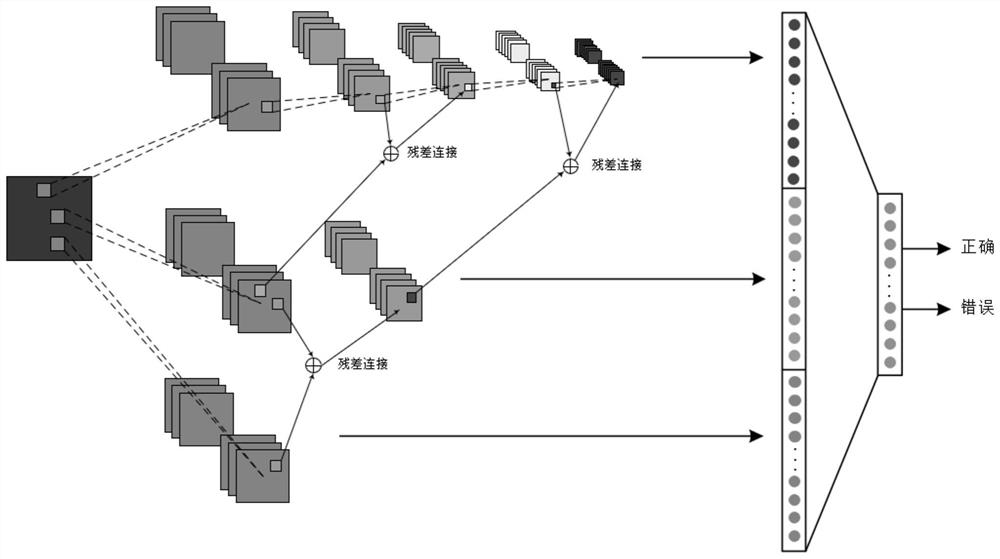

Air conditioner outdoor unit portrait intelligent detection method based on visual attention and multi-scale convolutional neural network

Inactive | CN112668584A | Achieving feature fusion | Improve performance | Character and pattern recognition | Neural architectures | Feature vector | Test sample

The invention relates to an air conditioner outdoor unit portrait intelligent detection method based on visual attention and a multi-scale convolutional neural network. The method comprises the following steps: (1) data preprocessing: manually classifying the air conditioner outdoor unit portrait samples and generating correct and wrong labels; (2) reading the preprocessed sample images, inputting them into a visual attention network, and generating an attention distribution diagram; (3) inputting the result into a multi-scale network for training to obtain a deep fusion feature vector; (4) taking the deep fusion feature vector as the input of a softmax classifier model for training; (5) inputting the verification sample set into the softmax classifier model to verify the classification precision and obtain a trained softmax classifier model; and (6) inputting the test sample set into the trained softmax classifier model to obtain a correct or wrong classification result for the test sample set. The method also aids gradient propagation during the backward pass, so that a deeper model can be trained successfully and the performance of the network is improved.
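The final classification stage, steps (4) to (6), can be sketched as a linear layer followed by softmax over the two labels. This is a minimal illustration, not the patented network: the feature vector, weights and biases are hypothetical placeholders for values a trained model would supply.

```python
from math import exp

LABELS = ("correct", "wrong")

def softmax(logits):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(logits)
    exps = [exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(feature, weights, biases):
    """Linear layer plus softmax over the two labels; returns (label, probabilities).

    `weights` holds one row per label; all values here are illustrative.
    """
    logits = [sum(w * x for w, x in zip(row, feature)) + b for row, b in zip(weights, biases)]
    probs = softmax(logits)
    return LABELS[probs.index(max(probs))], probs
```

Subtracting the maximum logit leaves the probabilities unchanged but avoids overflow for large logits.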

Owner:SHANDONG UNIV

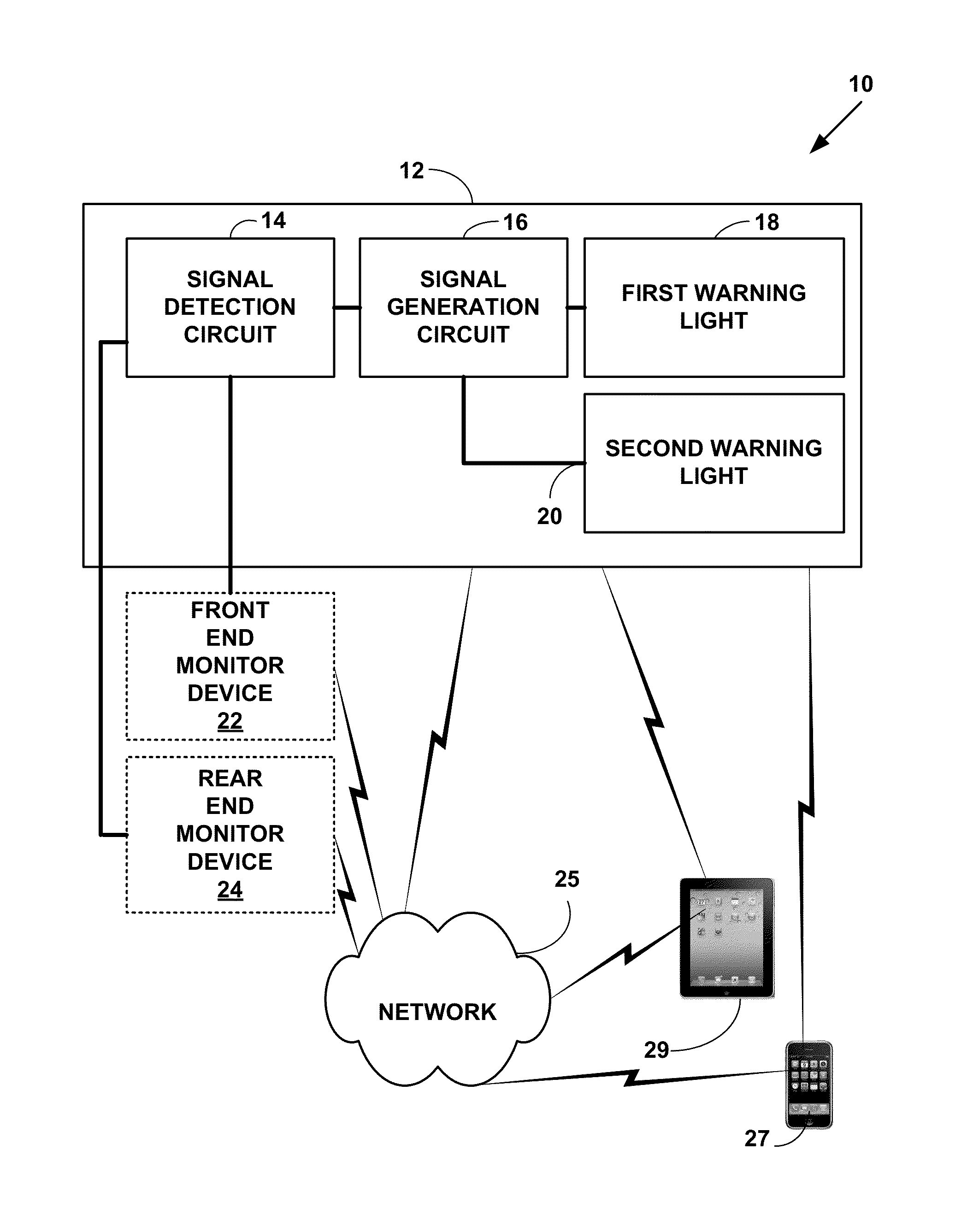

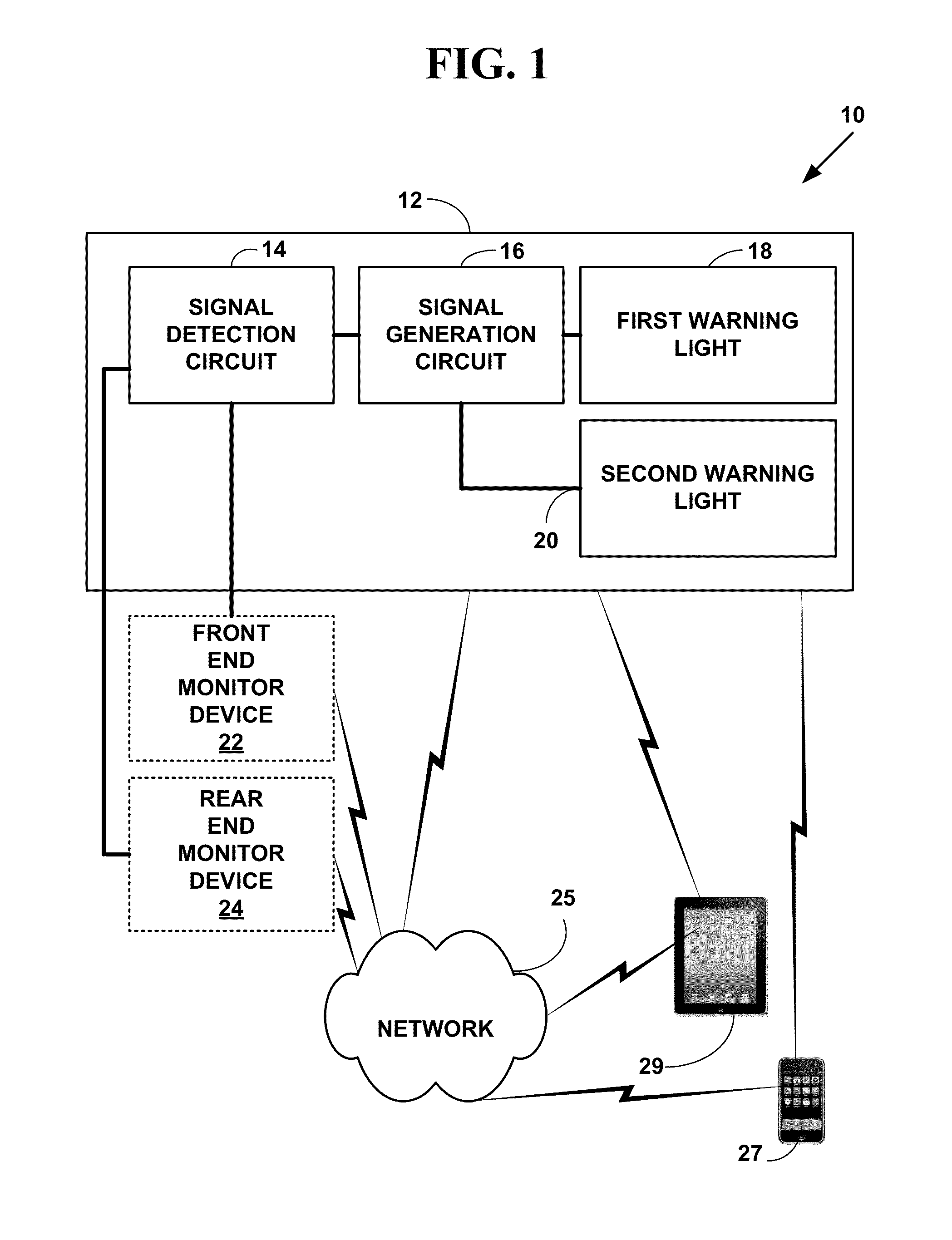

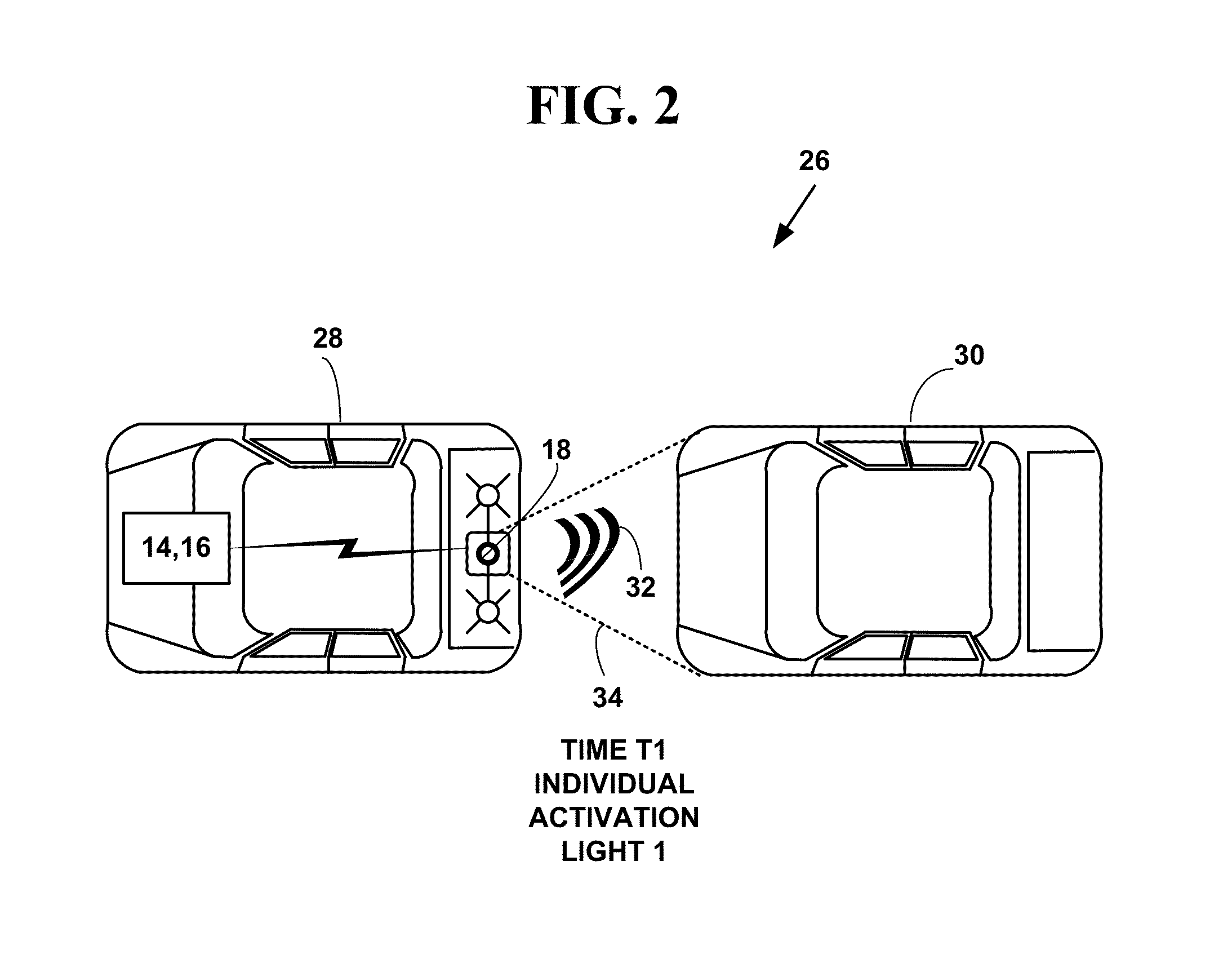

Rear end collision prevention apparatus

Inactive | US9090203B2 | Reduce and prevent rear end collision | Reducing or preventing driver acclimatization | Anti-collision systems | Optical signalling | Rear-end collision | Driver/operator

A rear end collision prevention apparatus. The apparatus includes a first warning light that captures a following driver's attention, indicates that a slow down or stop event is about to occur or is occurring, and re-focuses the driver's visual attention point, and a different type of second warning light indicating that the vehicle has not yet started re-accelerating after a slowing or stop event. The rear end collision prevention apparatus may be integrated into a Center High Mount Stop Lamp (CHMSL) on a vehicle or used as a separate apparatus. The apparatus helps reduce or prevent rear end collisions of vehicles, and helps reduce or prevent driver acclimatization to warning lights in a CHMSL or other rear end collision warning lights.

Owner:SEIFERT JERRY A

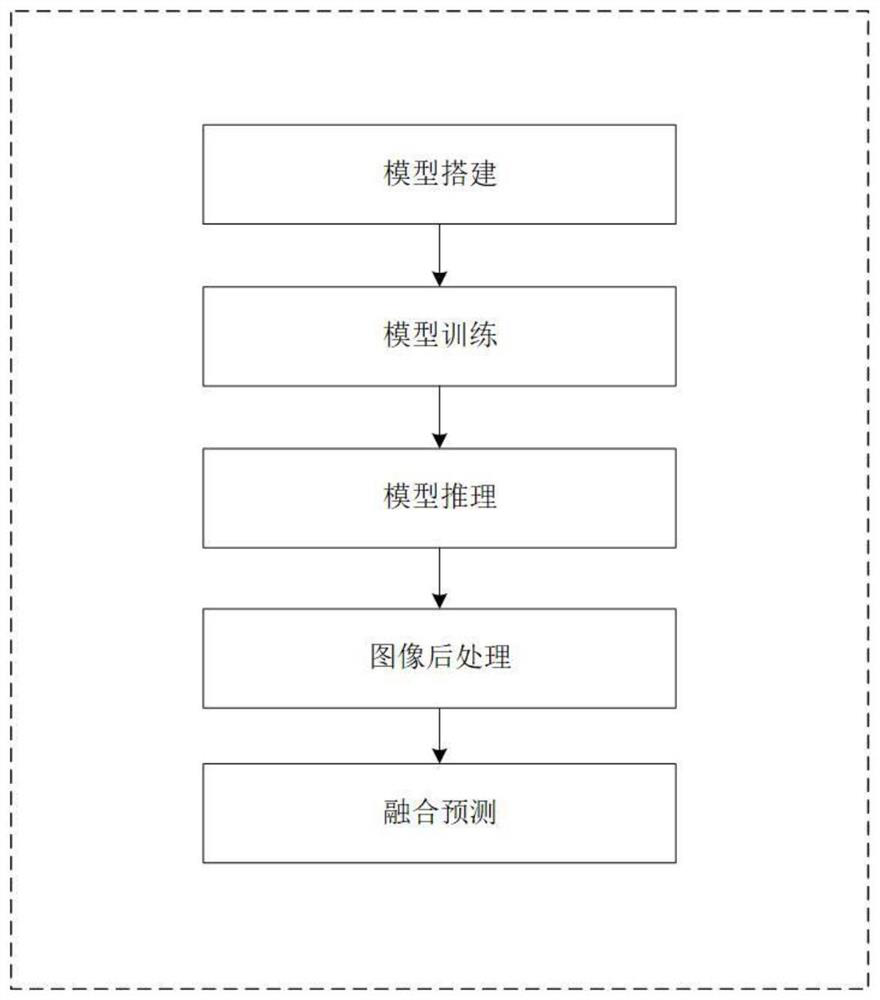

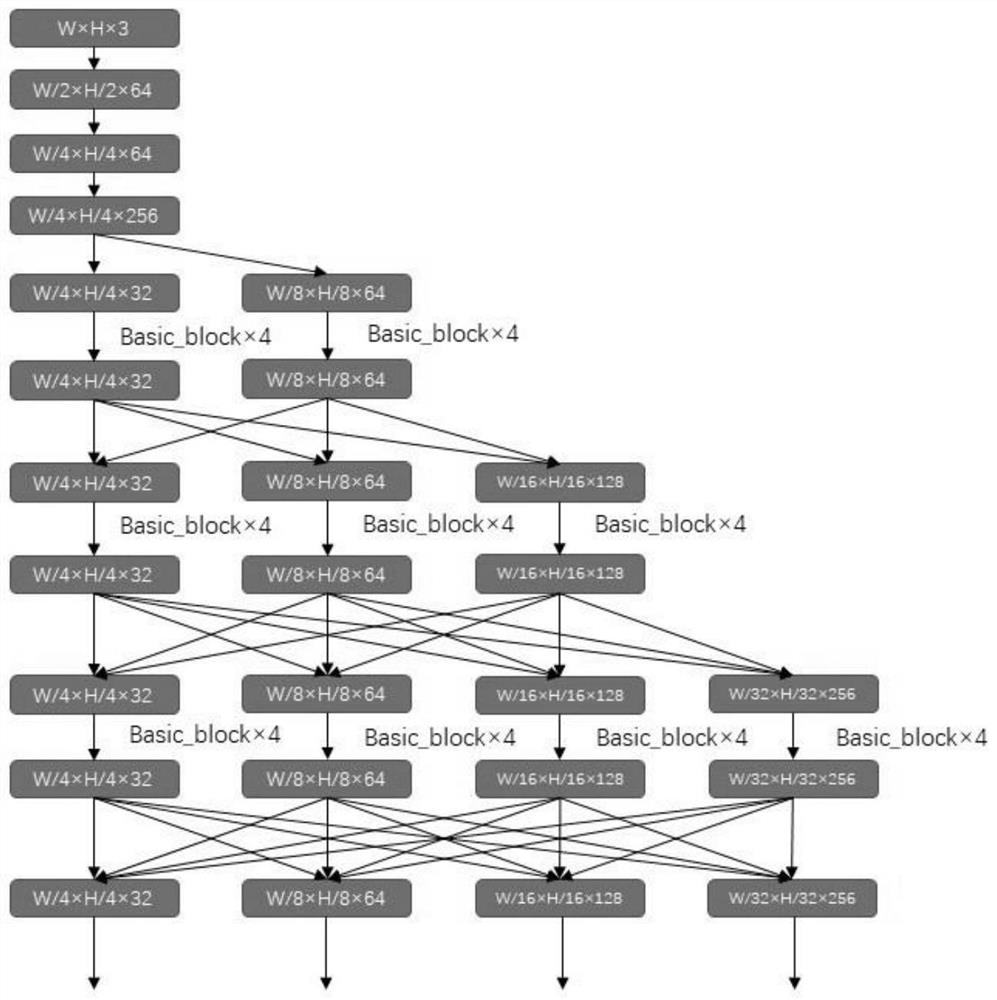

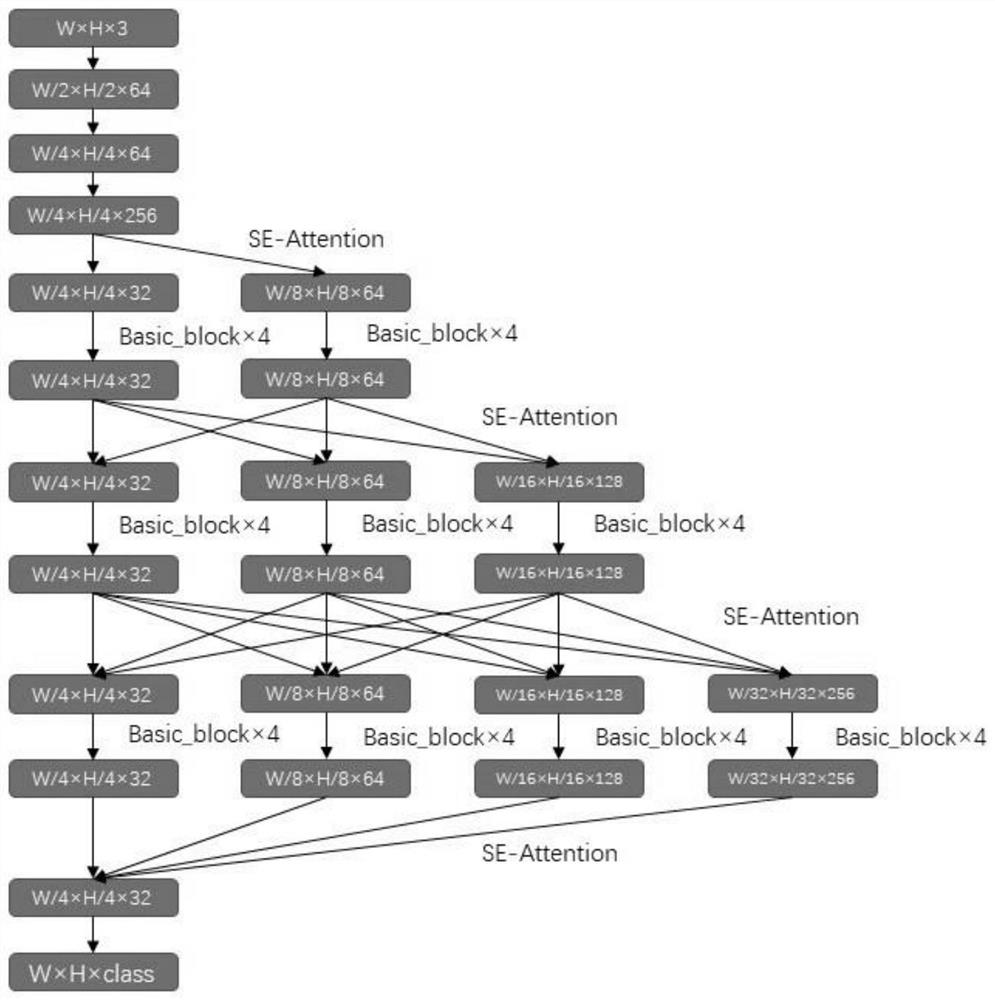

Road scene semantic segmentation method based on multi-model fusion

Pending | CN114693924A | Improve recognition accuracy | Improve connectivity | Scene recognition | Neural architectures | Pattern recognition | Imaging processing

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

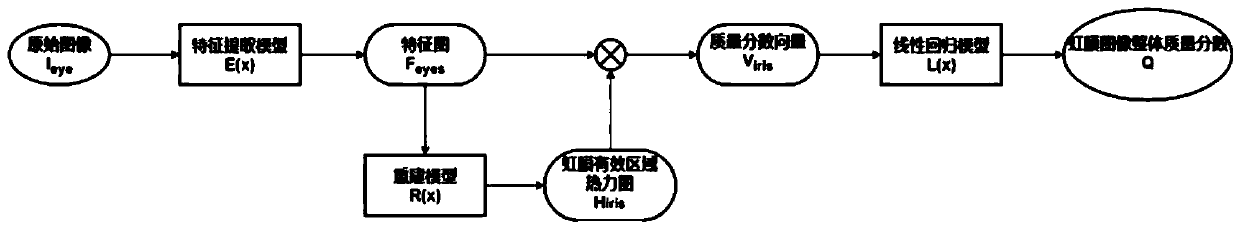

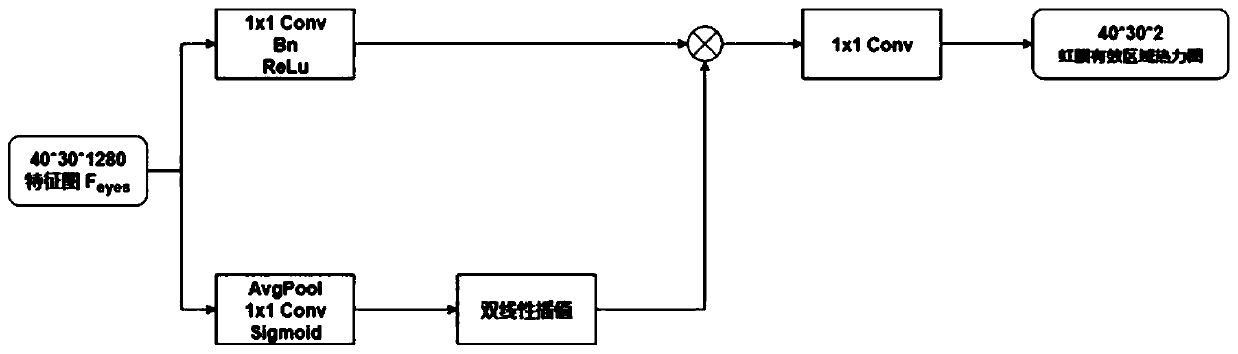

Novel efficient iris image quality evaluation method based on deep neural network

Active | CN111340758A | Improve accuracy | Short time | Image enhancement | Image analysis | Feature extraction | Iris image

The invention discloses a novel efficient iris image quality evaluation method based on a deep neural network. A feature extraction model extracts a feature map of the iris image from an input image; a reconstruction model then estimates a heat map of the iris effective area from that feature map; finally, a quality prediction model uses the iris effective area as a region of interest and calculates the overall quality score of the iris image from the feature map. With this method, no other processing such as preprocessing, segmentation or positioning needs to be carried out on the collected eye image; the deep neural network is used directly to extract the global features of the eye image, the heat map of the iris effective area is estimated automatically from the extracted features, the global features and the heat map are combined through a visual attention mechanism, and quality evaluation is carried out on the iris image. The iris image quality evaluation method provided by the invention is simple in process, high in calculation speed and high in robustness and adaptability.
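One common way to combine a feature map with an estimated effective-area map is attention-weighted pooling, sketched below. This is an assumption about how the combination could work, not the patent's stated architecture: the feature map is a list of channels, each a nested H x W list, and `quality_score` is a hypothetical linear head.

```python
def attention_pool(feature_map, heat_map):
    """Average each channel of the feature map, weighted by the effective-area map.

    feature_map: list of channels, each an H x W nested list.
    heat_map:    H x W nested list of non-negative weights.
    """
    total = sum(sum(row) for row in heat_map)
    if total == 0:
        return [0.0] * len(feature_map)
    pooled = []
    for channel in feature_map:
        weighted = sum(
            h * f
            for heat_row, feat_row in zip(heat_map, channel)
            for h, f in zip(heat_row, feat_row)
        )
        pooled.append(weighted / total)
    return pooled

def quality_score(pooled, head_weights, head_bias=0.0):
    """Hypothetical linear head mapping the pooled vector to a scalar quality score."""
    return sum(w * p for w, p in zip(head_weights, pooled)) + head_bias
```

Locations with zero weight (eyelids, eyelashes, reflections, in this reading) contribute nothing to the pooled features, so the score depends only on the usable iris area.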

Owner:天津中科智能识别有限公司

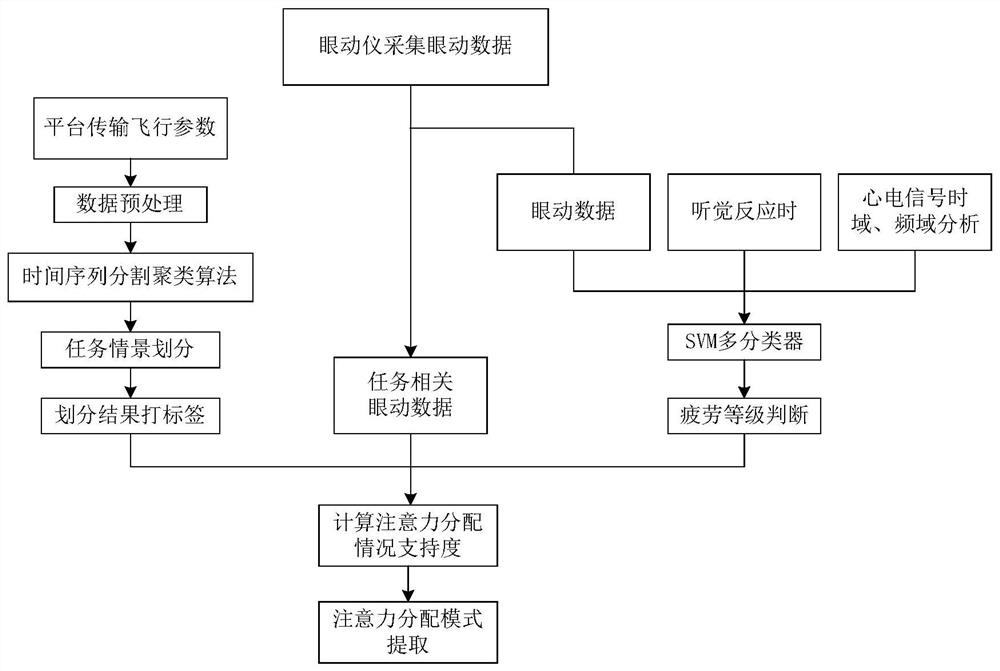

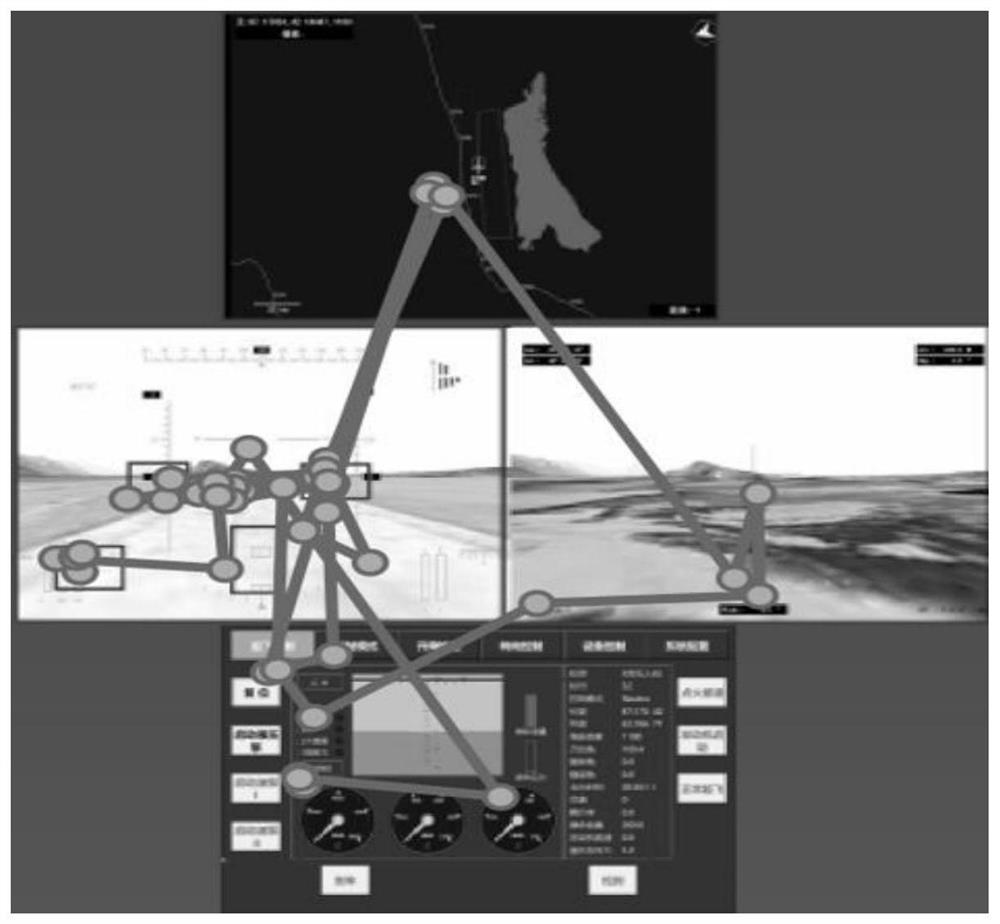

Task scene associated unmanned aerial vehicle pilot visual attention distribution mode extraction method

Active | CN111951637A | Improve accuracy | Characteristic reflection | Cosmonautic condition simulations | Character and pattern recognition | Fixation point | Uncrewed vehicle

The invention discloses a task-scenario-associated method for extracting the visual attention distribution mode of unmanned aerial vehicle pilots. The method comprises obtaining pilot attention distribution data through an eye tracker, dividing different task scenarios using flight parameter data, extracting pilot physiological signals to classify the pilot's fatigue level, and distinguishing pilot attention distribution under different task scenarios and different fatigue levels. An eye tracker collects eye movement data while a pilot executes a task on the simulation platform; segmentation clustering is performed on the high-dimensional flight parameter time series to divide the different task scenarios; pilot electrocardiogram data are collected for time-frequency domain analysis, and a fatigue state classifier is established in combination with eye movement data and auditory response; attention distribution characteristics are represented by the percentage of fixation points in each region of interest, the number of revisits and the importance degree, and the attention distribution with the highest support is extracted as the mode. The result can provide guidance for the control operations of unmanned aerial vehicle pilots and is also significant for optimizing the interface layout of an unmanned aerial vehicle control platform.
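Two of the attention distribution characteristics named above, the percentage of fixation points per region of interest and the number of revisits, can be computed from a labelled fixation sequence. The sketch below assumes each fixation has already been assigned to a region of interest; the region names used in the usage note are hypothetical, and a revisit is counted as any re-entry into a region after the gaze has left it.

```python
from collections import Counter

def fixation_percentages(aoi_sequence):
    """Percentage of fixation points that fall in each region of interest."""
    counts = Counter(aoi_sequence)
    total = len(aoi_sequence)
    return {aoi: 100.0 * n / total for aoi, n in counts.items()}

def revisit_counts(aoi_sequence):
    """Number of re-entries into each region (visits after the first one)."""
    visits = Counter()
    previous = None
    for aoi in aoi_sequence:
        if aoi != previous:  # a new visit starts whenever the region changes
            visits[aoi] += 1
        previous = aoi
    return {aoi: n - 1 for aoi, n in visits.items()}
```

For the sequence `["map", "map", "altimeter", "map"]`, the `map` region holds 75% of the fixations and is revisited once, while `altimeter` holds 25% and is never revisited.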

Owner:NORTHWESTERN POLYTECHNICAL UNIV

