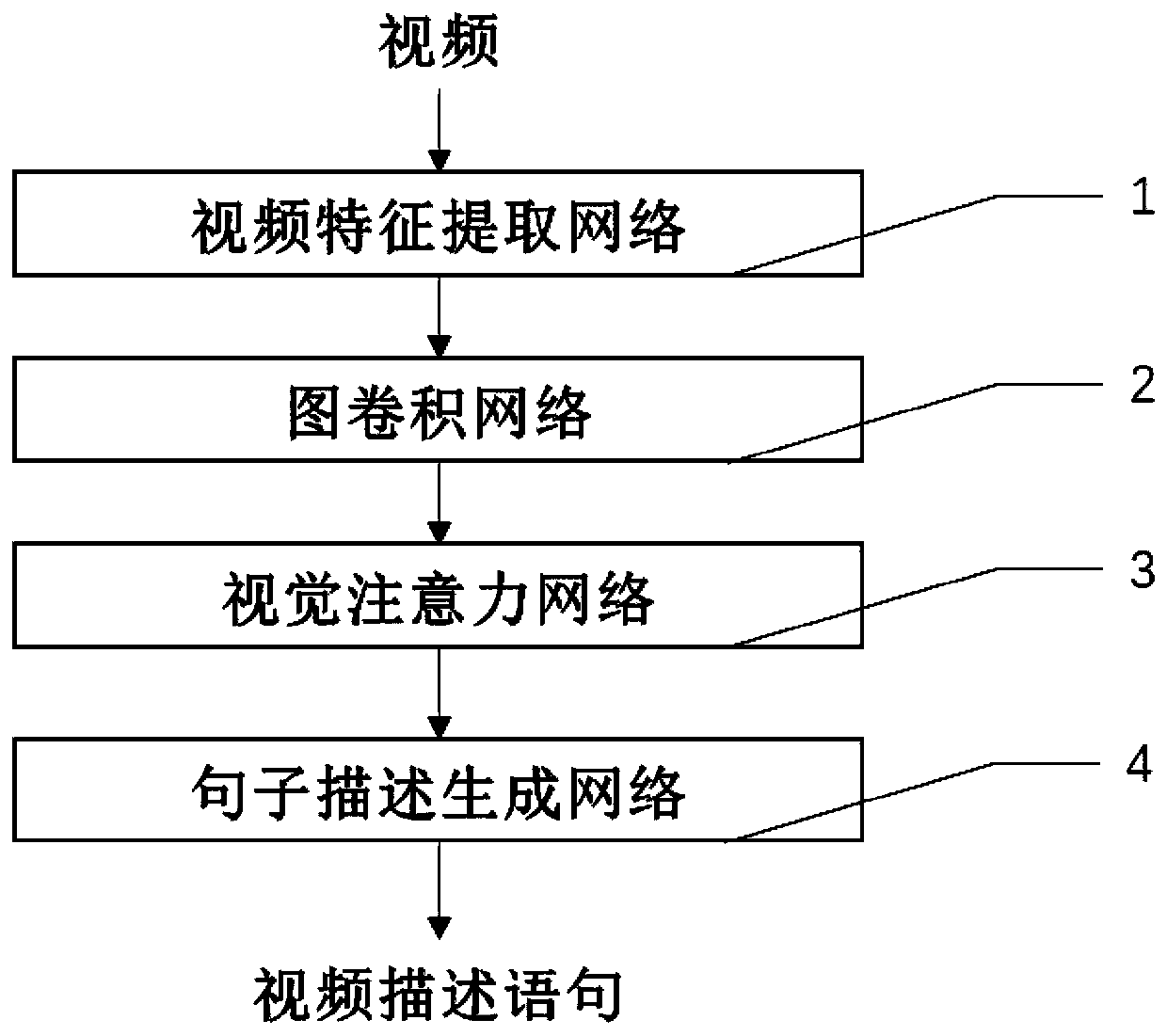

Video description generation system based on graph convolution network

A graph convolutional network and video description technology, applied to biological neural network models, instruments, character and pattern recognition, and related fields. It addresses problems such as the underutilization of temporal and object information in video, the incomplete mining of video features, and the loss of other word information.

Embodiment Construction

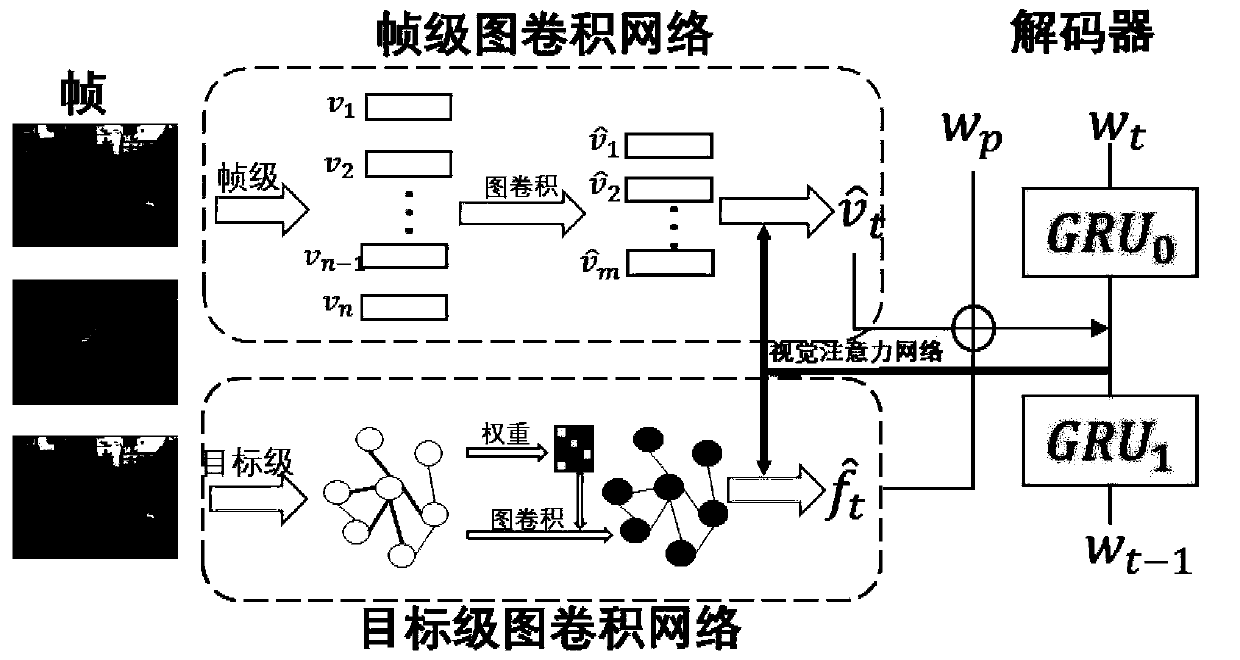

[0043] As the background section shows, existing video description generation methods do not make full use of the sequence information and the specific target object information inside a video. The present invention addresses these problems by using a graph convolutional network to reconstruct the visual information inside the video. The reconstruction takes into account both the sequence information of the video frames and the semantic correlation between target objects. A two-layer GRU serves as the decoder that generates the final description sentence. Generation proceeds progressively from coarse to fine granularity, which makes the generated description more accurate. The model proposed by the invention is applicable to all video description generation technologies based on the encoding-decoding mode, and can significantly improve the accuracy of generated s...
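To make the reconstruction step more concrete, the following is a minimal sketch of one graph convolution propagation step over per-frame features. It is an illustration, not the patented method: the propagation rule (symmetric normalization with self-loops, followed by ReLU) is the commonly used textbook form and is assumed here, and the helper names `temporal_chain`, `normalize_adjacency`, and `gcn_layer` are hypothetical. Linking each frame only to its immediate neighbours is likewise just one assumed way to encode frame order in a graph.

```python
import math


def temporal_chain(n):
    """Adjacency matrix linking each frame to its immediate neighbours.

    Assumption: this is one simple way to encode frame order as a graph.
    """
    return [[1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]


def normalize_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a square adjacency matrix."""
    n = len(adj)
    # Add self-loops so each node keeps its own features.
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Plain matrix product of two list-of-lists matrices."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def gcn_layer(adj, features, weight):
    """One propagation step: ReLU(A_norm @ H @ W)."""
    a_norm = normalize_adjacency(adj)
    out = matmul(matmul(a_norm, features), weight)
    return [[max(0.0, v) for v in row] for row in out]


if __name__ == "__main__":
    # Three frames, each with a 2-dimensional feature vector (toy values).
    frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for a learned weight matrix
    for row in gcn_layer(temporal_chain(3), frames, identity):
        print(row)
```

In this sketch each output row mixes a frame's features with those of its neighbours, which is the sense in which a graph convolution can carry sequence or object-correlation information into the encoded features. An adjacency matrix built from semantic similarity between detected objects could be passed to `gcn_layer` in the same way.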
