Method for retrieving specific action video fragments in a Japanese online video corpus

An action video technology applied in the field of video clip retrieval. It addresses problems such as the loss of action semantic information and the lack of action retrieval and feature extraction functions, and it enriches contextual information.

Embodiment Construction

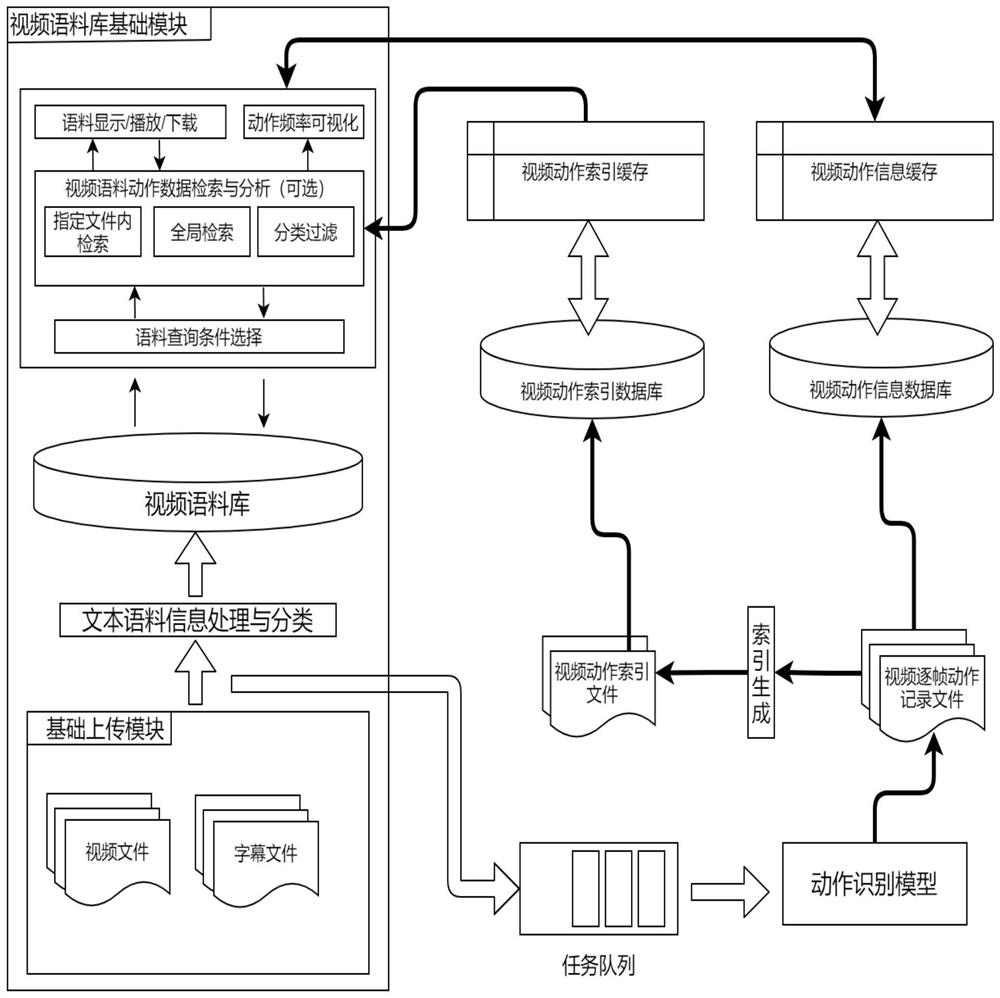

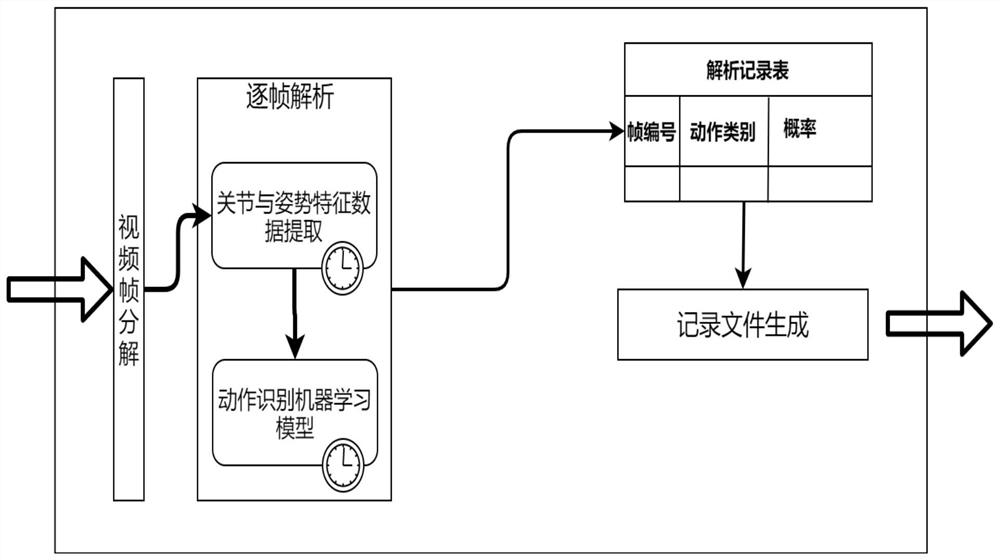

[0023] The specific implementation of the present invention is described in detail below with reference to the accompanying drawings and technical solutions.

[0024] The network system adopted in this embodiment consists of a computer, a video corpus, a corpus server, and an action analysis and data processing server; the two servers communicate over HTTP. To query the video corpus, the user interacts with the corpus server over the Internet, and operation modules such as uploading serve as the basic modules of the video corpus. On top of this, an action analysis and data processing server, independent of the video corpus server, is added. The server operating system is Windows 10 x64. The action analysis and data processing server is implemented in Python, and the video action segment retrieval function is implemented with Java EE technology. Based on JDK 1.8 and the Spring Boot open source framework, the retrieval terminal is wr...
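The two-server arrangement in paragraph [0024] can be illustrated with a minimal Python sketch, using only the standard library. The text states only that the two servers communicate over HTTP and that the analysis server is Python-based; the `/segments` endpoint, the JSON request and response shapes, the `SEGMENT_INDEX` data, and all function names below are assumptions made for illustration, not details from the patent.

```python
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import ProxyHandler, Request, build_opener

# Hypothetical in-memory index: video id -> list of (action label, start s, end s).
SEGMENT_INDEX = {
    "video-001": [("bow", 12.0, 14.5), ("wave", 30.0, 32.0), ("bow", 58.0, 60.5)],
}


def find_segments(video_id, action):
    """Return the stored segments of one video that carry the requested action label."""
    return [
        {"action": label, "start": start, "end": end}
        for label, start, end in SEGMENT_INDEX.get(video_id, [])
        if label == action
    ]


class AnalysisHandler(BaseHTTPRequestHandler):
    """Stand-in for the action analysis and data processing server."""

    def do_POST(self):
        if self.path != "/segments":  # hypothetical endpoint name
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        query = json.loads(self.rfile.read(length))
        body = json.dumps(find_segments(query["video_id"], query["action"])).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # suppress per-request logging in this example


def query_analysis_server(base_url, video_id, action):
    """Corpus-server side of the exchange: POST a JSON query, read a JSON reply."""
    payload = json.dumps({"video_id": video_id, "action": action}).encode("utf-8")
    request = Request(base_url + "/segments", data=payload,
                      headers={"Content-Type": "application/json"})
    opener = build_opener(ProxyHandler({}))  # ignore any system proxy for a local call
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())


def demo():
    """Start the stand-in server on a free local port and send one query to it."""
    server = HTTPServer(("127.0.0.1", 0), AnalysisHandler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = "http://127.0.0.1:%d" % server.server_address[1]
        return query_analysis_server(url, "video-001", "bow")
    finally:
        server.shutdown()
        server.server_close()
```

Calling `demo()` returns the two hypothetical "bow" segments of `video-001`. In the embodiment the client side of this exchange would sit in the Java EE retrieval function rather than in Python; it is written in Python here only to keep the sketch in one language.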
