CRNN-based picture table extraction method

A CRNN-based picture table extraction method, applied in the field of image table extraction, addresses the problems of unclear tables, time-consuming and labor-intensive processing, and increased difficulty of character segmentation. It achieves an improved user experience, high recognition accuracy and higher efficiency.



Embodiment 1

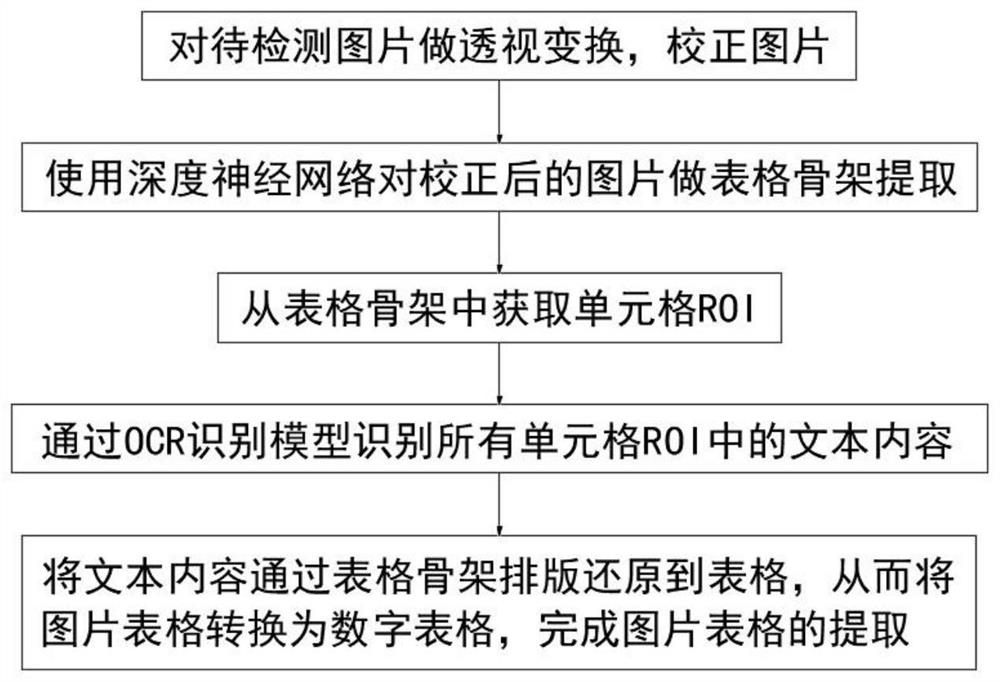

[0044] Referring to Figure 1, the present invention provides a technical solution: a CRNN-based picture table extraction method, comprising the following steps:

[0045] S1: apply a perspective transformation to the picture to correct it; this keeps straight lines from being deformed and makes the method more adaptable to different input images;
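For reference, the perspective transformation in S1 is the standard planar homography (the notation below is ours, not the patent's). A 3x3 matrix M maps a pixel (x, y) to (u, v) as follows. Fixing m_{33} = 1 leaves eight unknowns, so four point correspondences, such as the four table vertices found in S11, determine M; and because the map is projective, straight lines remain straight.

$$
\begin{pmatrix} x' \\ y' \\ w' \end{pmatrix}
= M \begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
M = \begin{pmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \\ m_{31} & m_{32} & m_{33} \end{pmatrix},
$$

$$
u = \frac{x'}{w'} = \frac{m_{11}x + m_{12}y + m_{13}}{m_{31}x + m_{32}y + m_{33}},
\qquad
v = \frac{y'}{w'} = \frac{m_{21}x + m_{22}y + m_{23}}{m_{31}x + m_{32}y + m_{33}}.
$$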

[0046] The specific steps of the picture correction are as follows:

[0047] S11: use cv2.findContours() to detect the contours of the table cells in the picture and take the largest table contour, i.e., its four vertex coordinates;

[0048] S12: from the vertices of the reference picture and the detected vertices of the picture to be corrected, obtain the transformation matrix M with cv2.getPerspectiveTransform() from the OpenCV API;

[0049] S13: compute the tilt-corrected image with cv2.warpPerspective() from the OpenCV API;

[0050] S2: apply a deep neural network to the corrected picture to extract the table skeleton, effectively reducing human-or...
