Interaction method and system combining hand detection and tracking with musical instrument detection

An interaction method and system in the field of combining hand detection and tracking with musical instrument detection. It addresses two problems: the playing area of a musical instrument cannot be identified, and the key-point parameters of the hand cannot be provided.


Examples

Embodiment 1

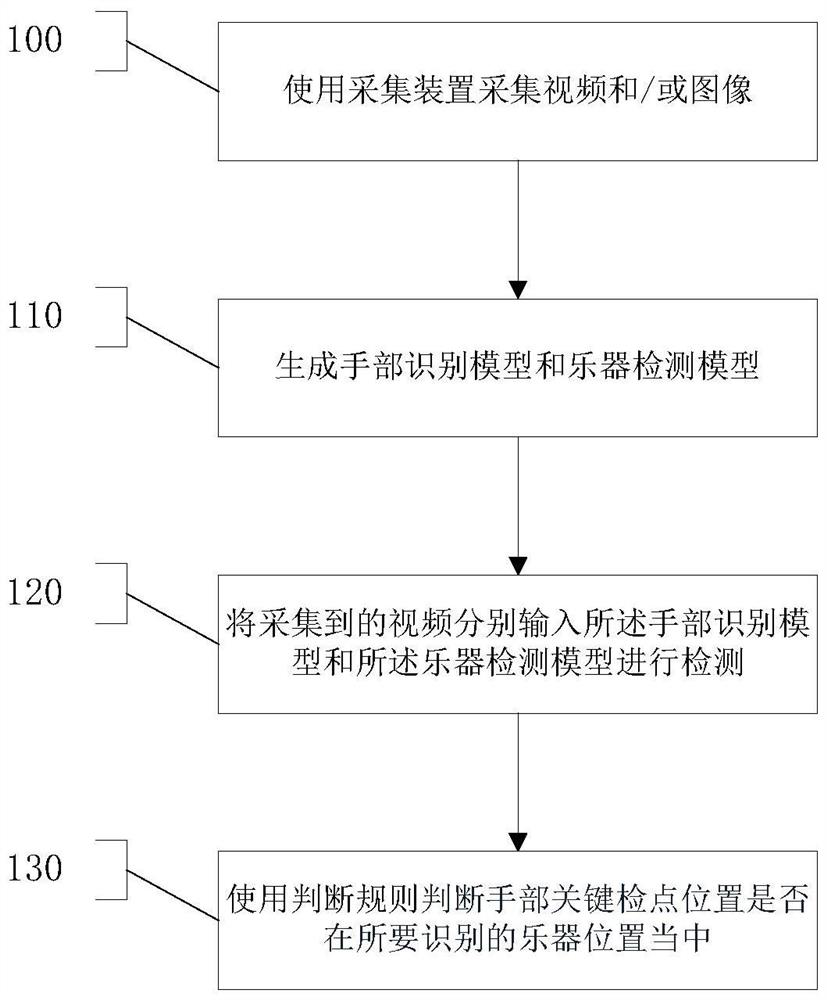

[0066] As shown in Figure 1, step 100 collects video and/or images using a collection device.

[0067] Step 110 generates a hand recognition model and a musical instrument detection model. Generating the hand recognition model comprises the following substeps:

[0068] Step 101: Configure the camera parameters, collect musical instrument data in batches, and label it. Each image is labeled with the X and Y values of the N key points of the hand according to the hand's position in the image, where N is the number of key points.

[0069] Step 102: Process the collected images to generate estimated X and Y values. Compress the batch-collected images, normalize the compressed images, extract features with the MobileNet deep convolutional neural network to generate feature maps, apply an average pooling layer to the feature maps, and use the average ...
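Steps 101 and 102 describe a key-point regression setup: each labeled image yields N (X, Y) pairs, and the network produces estimated X and Y values after MobileNet feature extraction and average pooling. The sketch below only illustrates the data flow and tensor shapes, under loudly stated assumptions: the MobileNet backbone is replaced by a hypothetical stand-in (`fake_backbone`) that returns a random feature map; "compress" is assumed to be a nearest-neighbour resize and "normalize" a scaling to [0, 1]; the 256 × 256 size and N = 21 are borrowed from Embodiment 3; and because the source text is truncated after "average pooling", the final linear layer mapping pooled features to 2N outputs (`regression_head`) is a guess, not the patent's method.

```python
import numpy as np

N_KEYPOINTS = 21   # N; Embodiment 3 labels 21 hand key points
INPUT_SIZE = 256   # compressed image size taken from Embodiment 3


def labels_to_target(keypoints):
    """Flatten N labeled (X, Y) pairs into a 2N regression target [x0, y0, x1, y1, ...]."""
    arr = np.asarray(keypoints, dtype=np.float32)
    if arr.shape != (N_KEYPOINTS, 2):
        raise ValueError(f"expected {N_KEYPOINTS} (x, y) pairs, got shape {arr.shape}")
    return arr.reshape(-1)


def compress(image, size=INPUT_SIZE):
    """Nearest-neighbour downscale of an H x W x C image to size x size (assumed 'compress')."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return image[rows][:, cols]


def normalize(image):
    """Scale 8-bit pixel values to [0, 1] (assumed normalization scheme)."""
    return image.astype(np.float32) / 255.0


def fake_backbone(x, channels=1024, grid=8, seed=0):
    """HYPOTHETICAL stand-in for MobileNet: ignores x, returns a random C x grid x grid feature map."""
    rng = np.random.default_rng(seed)
    return rng.standard_normal((channels, grid, grid)).astype(np.float32)


def global_average_pool(feature_map):
    """Average pooling over the whole spatial grid: (C, H, W) -> (C,)."""
    return feature_map.mean(axis=(1, 2))


def regression_head(pooled, weights, bias):
    """Assumed linear head mapping pooled features to 2N estimated X/Y values."""
    return weights @ pooled + bias


# Usage: one 480x480 RGB capture and its 21 labels (all zeros, for shape checking only;
# labels are assumed to be already expressed in the compressed image's coordinates).
frame = np.zeros((480, 480, 3), dtype=np.uint8)
target = labels_to_target(np.zeros((N_KEYPOINTS, 2)))
x = normalize(compress(frame))
feats = global_average_pool(fake_backbone(x))
rng = np.random.default_rng(1)
W = rng.standard_normal((2 * N_KEYPOINTS, feats.shape[0])).astype(np.float32) * 0.01
b = np.zeros(2 * N_KEYPOINTS, dtype=np.float32)
pred = regression_head(feats, W, b)
loss = float(np.mean((pred - target) ** 2))  # mean squared error between estimates and labels
print(x.shape, feats.shape, pred.shape)  # (256, 256, 3) (1024,) (42,)
```

The 1024-channel, 8 × 8 feature map is only illustrative; a real backbone's output shape depends on the chosen MobileNet variant and input size.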

Embodiment 2

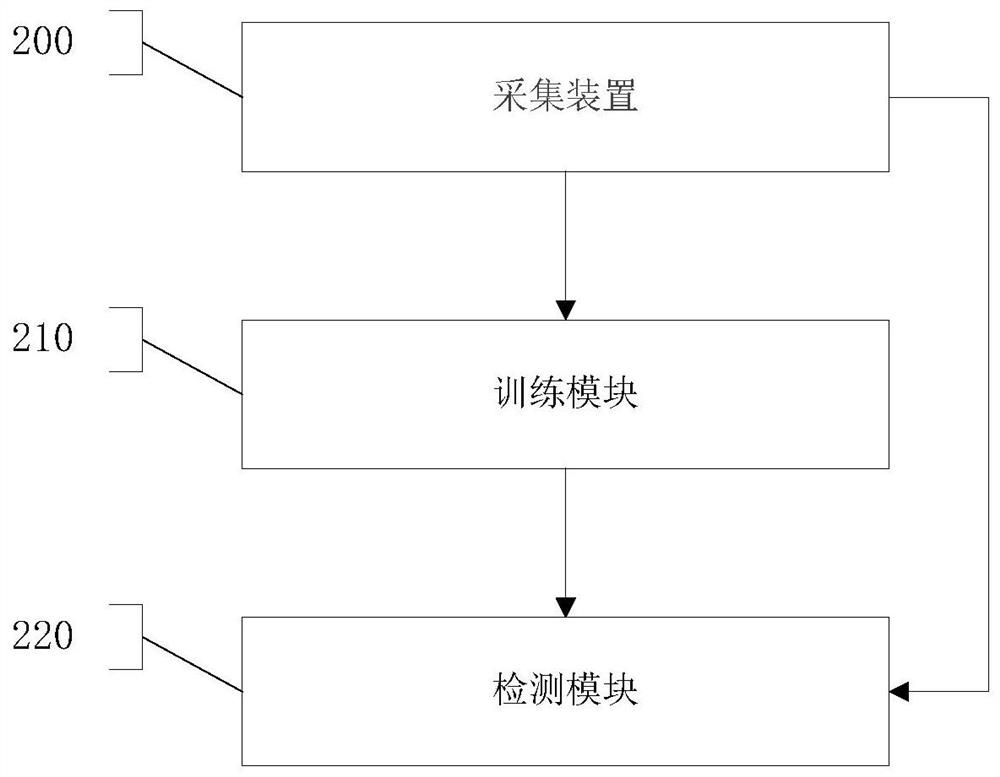

[0081] As shown in Figure 2, an interactive system combining hand detection and tracking with musical instrument detection includes a collection device 200, a training module 210 and a detection module 220.

[0082] Collection device 200: collects video and/or images.

[0083] Training module 210: generates a hand recognition model and a musical instrument detection model. Generating the hand recognition model comprises the following substeps:

[0084] Step 101: Configure the camera parameters, collect musical instrument data in batches, and label it. Each image is labeled with the X and Y values of the N key points of the hand according to the hand's position in the image, where N is the number of key points.

[0085] Step 102: Process the collected images to generate estimated X and Y values. Compress the batch-collected images, normalize the compressed images, use the MobileNet deep convolutional neural network ...
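The three components of Embodiment 2 can be pictured as a small pipeline: the collection device supplies frames, the training module turns data into two models, and the detection module applies those models to new frames. The sketch below is a hypothetical wiring under stated assumptions: the class names, the `(x_min, y_min, x_max, y_max)` region format for detected instruments, and the trainer callables are illustrative placeholders, and the detection module's behaviour (running both models per frame) is assumed because the source text does not describe it.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max): assumed region format

HandModel = Callable[[object], List[Point]]      # frame -> N hand key points
InstrumentModel = Callable[[object], List[Box]]  # frame -> detected instrument regions


@dataclass
class CollectionDevice:
    """Component 200: supplies video frames and/or still images."""
    frames: Iterable[object]

    def collect(self) -> List[object]:
        return list(self.frames)


@dataclass
class TrainingModule:
    """Component 210: builds the hand recognition and instrument detection models.
    The trainer callables are hypothetical placeholders for the actual training procedures."""
    train_hand: Callable[[List[object]], HandModel]
    train_instrument: Callable[[List[object]], InstrumentModel]

    def build(self, data: List[object]) -> Tuple[HandModel, InstrumentModel]:
        return self.train_hand(data), self.train_instrument(data)


@dataclass
class DetectionModule:
    """Component 220: runs both models on a frame (assumed behaviour)."""
    hand_model: HandModel
    instrument_model: InstrumentModel

    def detect(self, frame: object) -> Tuple[List[Point], List[Box]]:
        return self.hand_model(frame), self.instrument_model(frame)


# Usage with trivial stand-in models (illustration only, no real training happens here).
device = CollectionDevice(frames=["frame0", "frame1"])
data = device.collect()
trainer = TrainingModule(
    train_hand=lambda d: (lambda f: [(0.0, 0.0)] * 21),
    train_instrument=lambda d: (lambda f: [(10.0, 10.0, 100.0, 50.0)]),
)
hand_model, instrument_model = trainer.build(data)
detector = DetectionModule(hand_model, instrument_model)
results = [detector.detect(f) for f in data]
```

Keeping the models as plain callables keeps the training and detection stages decoupled, so either model could be swapped without touching the other components.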

Embodiment 3

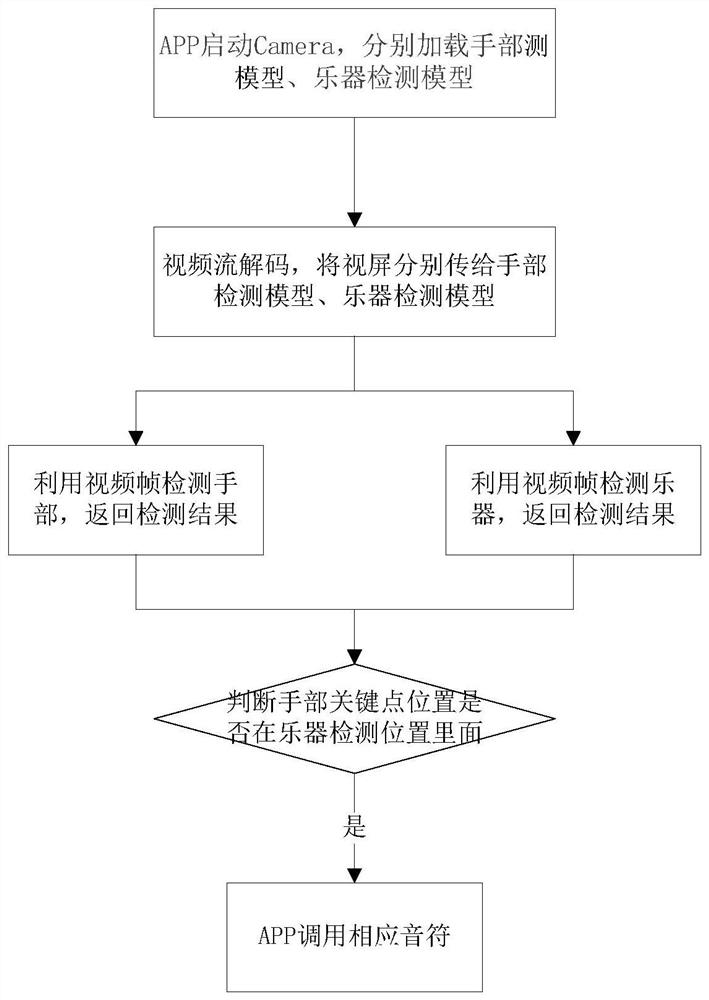

[0096] The present invention enables hands to interact with real objects in AR scenes and to produce interactive effects such as sounds and animations. The implementation is shown in Figure 3, and the technical scheme is as follows:

[0097] 1. Hand detection method (as shown in Figure 4)

[0098] 1. Hand detection and tracking;

[0099] 2. Hand and object detection and tracking.

[0100] 3. Configure the camera parameters so that captured photos are 480 × 480, collect musical instrument data in batches, and label it. Each image is labeled with the X and Y values of the 21 key points of the hand according to the hand's position in the image.

[0101] 4. Compress the batch-collected images to 256 × 256, normalize the compressed images, then use the MobileNet deep convolutional neural network to extract features and generate feature maps, and then use the average pooling layer ...
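Embodiment 3 captures 480 × 480 photos, labels 21 hand key points, and compresses the images to 256 × 256. If the labels are stored in pixel coordinates of the original capture (an assumption; the source does not say), they must be rescaled by 256/480 to stay aligned with the compressed image. Paragraph [0096] also describes the goal of triggering sounds or animations when the hand interacts with an instrument. The sketch below shows both ideas under stated assumptions: index 8 as the index-finger tip follows the common 21-point layout used by MediaPipe Hands and is not confirmed by the patent, and the rectangular playing areas, their names (`key_C4`, `key_D4`) and the `play:<area>` event strings are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]
Rect = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max): hypothetical playing-area format

CAPTURE_SIZE = 480  # captured photo size in Embodiment 3
INPUT_SIZE = 256    # compressed image size in Embodiment 3
INDEX_TIP = 8       # assumed index of the index-finger tip in a 21-point hand layout


def scale_keypoints(points: List[Point], src: int = CAPTURE_SIZE, dst: int = INPUT_SIZE) -> List[Point]:
    """Rescale pixel-space labels from a src x src capture to a dst x dst compressed image."""
    return [(x * dst / src, y * dst / src) for x, y in points]


def hit_area(point: Point, areas: Dict[str, Rect]) -> Optional[str]:
    """Return the name of the first playing area that contains the point, or None."""
    x, y = point
    for name, (x0, y0, x1, y1) in areas.items():
        if x0 <= x <= x1 and y0 <= y <= y1:
            return name
    return None


def interaction_events(keypoints: List[Point], areas: Dict[str, Rect]) -> List[str]:
    """Turn a fingertip hit into a hypothetical 'play:<area>' event (sound/animation trigger)."""
    area = hit_area(keypoints[INDEX_TIP], areas)
    return [f"play:{area}"] if area is not None else []


# Example: 21 key points labeled on a 480x480 capture, all at (240, 120) for simplicity.
labels_480 = [(240.0, 120.0)] * 21
labels_256 = scale_keypoints(labels_480)
print(labels_256[INDEX_TIP])  # (128.0, 64.0)

piano_keys = {"key_C4": (100.0, 40.0, 140.0, 90.0), "key_D4": (140.0, 40.0, 180.0, 90.0)}
print(interaction_events(labels_256, piano_keys))  # ['play:key_C4']
```

Multiplying before dividing (`x * dst / src`) keeps the rescaled coordinates exact whenever the product is divisible by the source size, as in this example.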
