Multi-camera vision SLAM method based on observability optimization

A multi-camera vision technology, applied in image analysis, instrumentation, computing, and related fields, that addresses the weak recovery of real-world scale. It improves feature-matching results, increases accuracy and reliability, and provides robust real-time positioning.

Examples

Embodiment 1

[0059] The accompanying drawings provided in this embodiment only illustrate the basic idea of the present invention schematically. They show only the components related to this embodiment and are not drawn according to the number, shape, and size of the components in an actual implementation. In an actual implementation, the shape and quantity of each component may be changed arbitrarily.

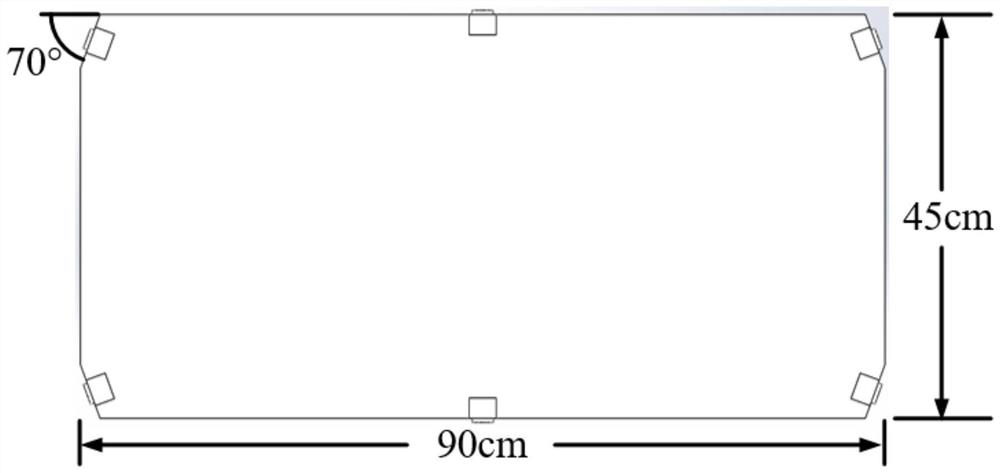

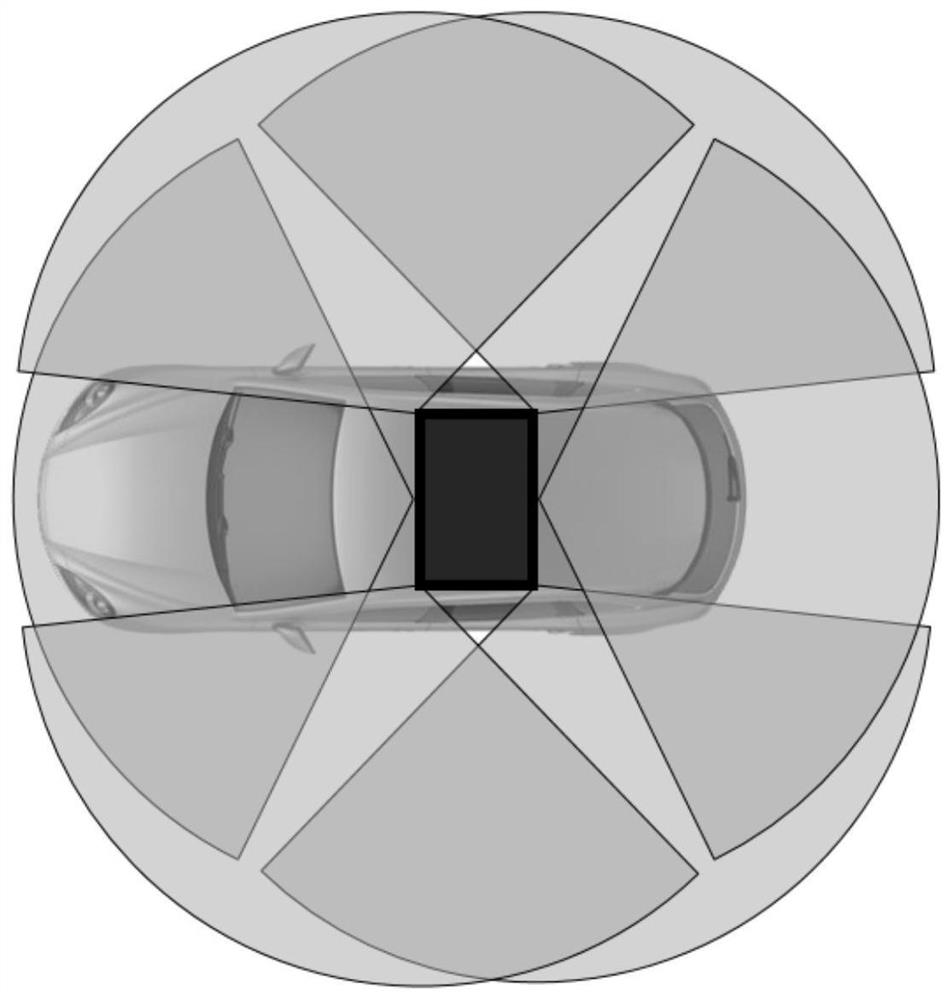

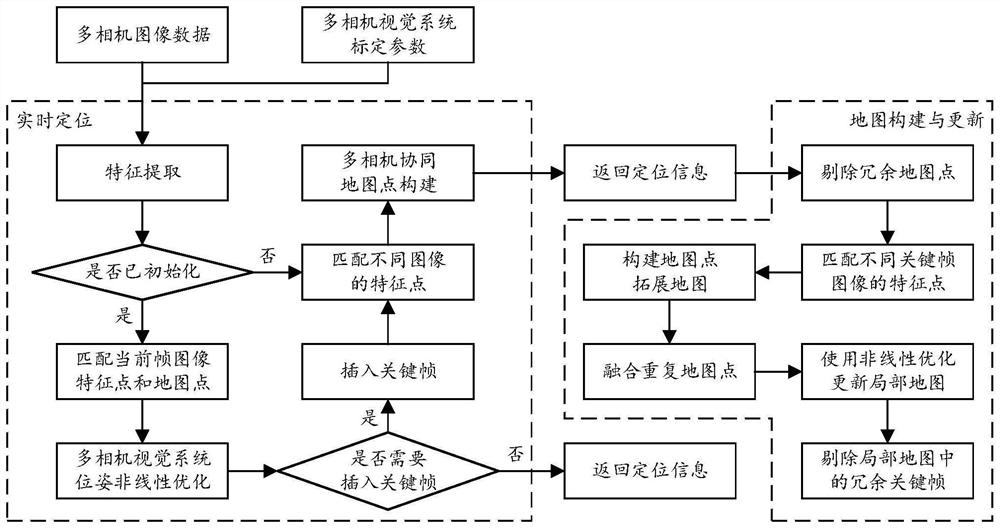

[0060] This embodiment provides a multi-camera visual SLAM method based on observability optimization. The hardware structure used by this method is shown in Figure 1, which depicts a multi-camera vision system composed of 6 cameras, with a baseline length of about 45 cm between adjacent cameras. Figure 2 is a schematic diagram of how the multi-camera vision system is installed and arranged on the carrier. The horizontal field of view of each camera is about 120°. According to the requirements of the multi-camera vision system in the present inve...
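To make the stated geometry concrete, here is a minimal Python sketch. It assumes, which the text does not state, that the six cameras sit at the vertices of a regular hexagon and face radially outward; the names `ring_layout`, `neighbor_overlap_deg`, and `coverage_count` are hypothetical. Under that assumption, a 45 cm adjacent baseline gives a 45 cm ring radius, neighboring 120° fields of view overlap by 60°, and every far-field bearing (away from the exact FOV edges) is seen by two cameras separated by a known metric baseline. This is the kind of overlap that allows points to be triangulated in metric units.

```python
import math

# ASSUMPTION: the source gives only the camera count, the ~45 cm adjacent
# baseline, and the ~120 deg horizontal FOV. The regular-hexagon, outward-facing
# layout below is a hypothetical illustration, not the patented arrangement.
NUM_CAMERAS = 6
BASELINE_M = 0.45   # adjacent-camera baseline from the text (approx.)
HFOV_DEG = 120.0    # per-camera horizontal FOV from the text (approx.)


def ring_layout(n, baseline):
    """Return (x, y, yaw_deg) for n outward-facing cameras on a regular n-gon
    whose side length (the adjacent baseline) equals `baseline`."""
    radius = baseline / (2.0 * math.sin(math.pi / n))  # circumradius of the n-gon
    poses = []
    for i in range(n):
        yaw = 360.0 * i / n
        a = math.radians(yaw)
        poses.append((radius * math.cos(a), radius * math.sin(a), yaw))
    return poses


def neighbor_overlap_deg(n, hfov):
    """Angular overlap of adjacent cameras' horizontal FOVs for distant points
    (the small parallax caused by the baseline is ignored)."""
    return max(0.0, hfov - 360.0 / n)


def coverage_count(n, hfov, bearing_deg):
    """Number of cameras whose horizontal FOV contains a far-field bearing."""
    count = 0
    for i in range(n):
        yaw = 360.0 * i / n
        # Signed angular difference wrapped to [-180, 180).
        diff = (bearing_deg - yaw + 180.0) % 360.0 - 180.0
        if abs(diff) <= hfov / 2.0:
            count += 1
    return count


if __name__ == "__main__":
    poses = ring_layout(NUM_CAMERAS, BASELINE_M)
    print(round(math.dist(poses[0][:2], poses[1][:2]), 3))  # 0.45
    print(neighbor_overlap_deg(NUM_CAMERAS, HFOV_DEG))        # 60.0
    print(coverage_count(NUM_CAMERAS, HFOV_DEG, 30.0))        # 2
```

A different mounting, such as the one in Figure 2, would change the poses but not the reasoning: the known metric distance between overlapping cameras is what fixes scale, in contrast to a single monocular camera.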
