High-performance computing resource scheduling fair sharing method

A fair sharing method for high-performance computing resource scheduling, applied in the field of high-performance computing resource scheduling, which addresses problems such as insufficient "fairness".



Embodiment Construction

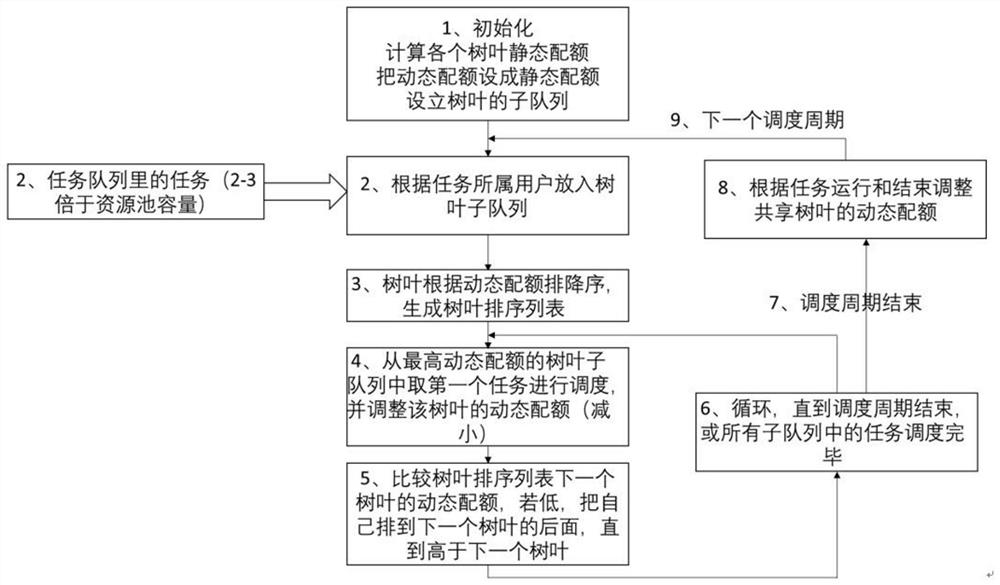

[0062] As shown in Figure 2, the high-performance computing resource scheduling fair sharing method provided by the present invention includes the following steps:

[0063] S1: Data structure initialization: convert the configured fair share structure into a tree data structure, calculate the static quota of each leaf, set each leaf's dynamic quota equal to its static quota, and set up a sub-queue for each leaf;

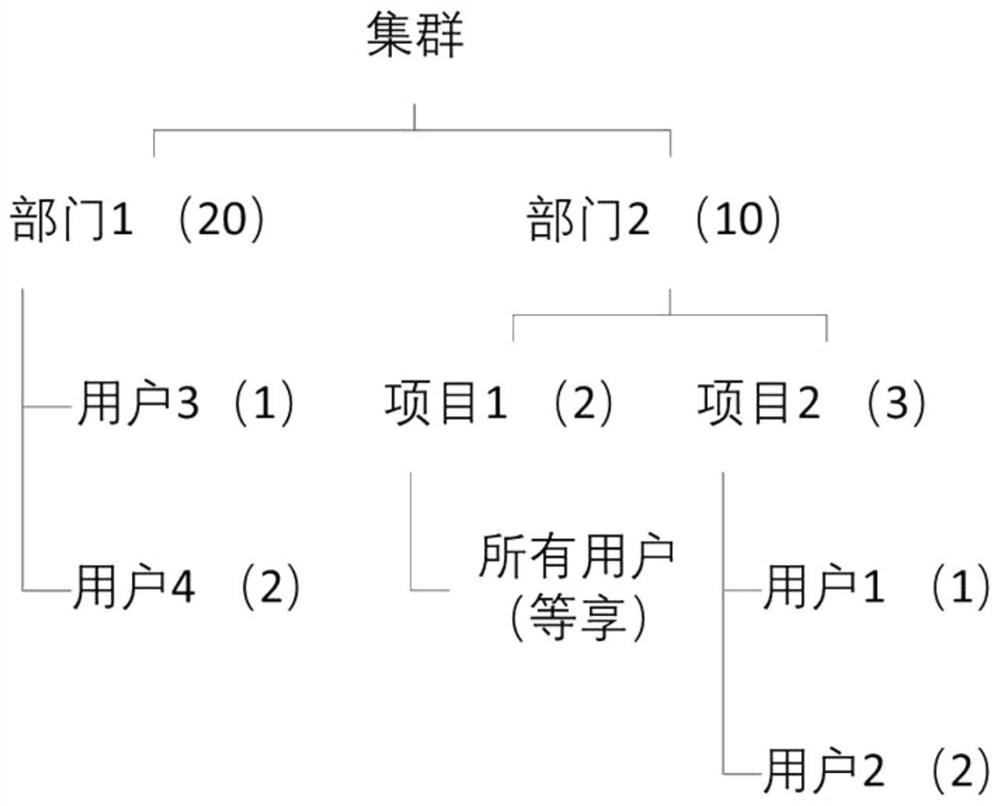

[0064] The fair share structure is shown in Figure 1. All the following descriptions take the structure in this figure as an example.

[0065] S11: Calculate the global sharing quota of each leaf of the fair sharing tree: define the cluster-level quota as 1, then calculate the sharing quota of each node from top to bottom. In the example of Figure 1, department 1 is 0.6667, department 2 is 0.3333, user 3 is 0.2222, user 4 is 0.4445, item 1 is 0.1333, item 2 is 0.2, user 1 is 0.0667, and user 2 is 0.0333. The quota of each unit in the last leaf pa...
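The initialization in S1 and the top-down calculation in S11 can be illustrated with a minimal Python sketch. It is not the patented implementation. The `Node` class, the `init_quotas` and `leaves` helpers, and the per-node `weight` values are all hypothetical, because the configured shares of Figure 1 are not reproduced in this text. The sketch assumes a child's quota is its parent's quota multiplied by the child's weight divided by the sum of its siblings' weights. The weights are chosen so that the department-level, user 3 and item-level quotas round to the figures quoted above. User 1 and user 2 are omitted because their position in the tree cannot be recovered from the text.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Node:
    """One node of a fair share tree (hypothetical structure)."""
    name: str
    weight: float                          # configured share relative to siblings (assumed)
    children: list = field(default_factory=list)
    static_quota: float = 0.0              # global quota, cluster level = 1
    dynamic_quota: float = 0.0             # initialized on leaves only
    sub_queue: deque = field(default_factory=deque)  # per-leaf job queue


def init_quotas(node, quota=1.0):
    """Assign static quotas top-down; on leaves, copy static to dynamic."""
    node.static_quota = quota
    if not node.children:
        node.dynamic_quota = quota         # S1: dynamic quota starts at the static quota
        node.sub_queue.clear()             # S1: each leaf gets an empty sub-queue
        return
    total = sum(child.weight for child in node.children)
    for child in node.children:
        # Assumed rule: split the parent's quota in proportion to sibling weights.
        init_quotas(child, quota * child.weight / total)


def leaves(node):
    """Yield every leaf below (or equal to) the given node."""
    if not node.children:
        yield node
    for child in node.children:
        yield from leaves(child)


# Hypothetical weights, not taken from Figure 1.
root = Node("cluster", 1, [
    Node("department 1", 2, [Node("user 3", 1), Node("user 4", 2)]),
    Node("department 2", 1, [Node("item 1", 2), Node("item 2", 3)]),
])
init_quotas(root)

for leaf in leaves(root):
    print(f"{leaf.name}: static={leaf.static_quota:.4f} dynamic={leaf.dynamic_quota:.4f}")
```

Because every level splits its parent's quota completely among its children, the leaf quotas in this sketch sum to the cluster-level quota of 1.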
