BERT model pre-training method, computer device and storage medium

A pre-training technology applied in the fields of computer components, computing and neural network learning methods. It addresses two problems: the direction and magnitude of changes in BERT model performance are unstable, and the structure and parameters of the BERT model are affected. Its effects include an enhanced ability to understand and recognize synonyms, maintained model performance, and shorter pre-training time.


Detailed Description of the Embodiments

[0047] To make the purpose, technical solution and advantages of the present application clearer, the embodiments of the application are described in detail below with reference to the accompanying drawings. It should be noted that, where no conflict arises, the embodiments of the present application and the features in those embodiments may be combined with each other arbitrarily.

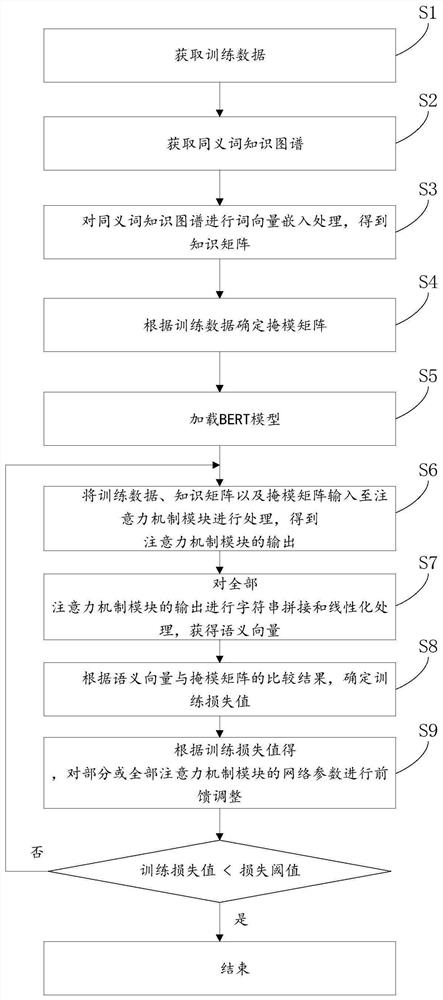

[0048] In this embodiment, referring to Figure 1, the pre-training method of the BERT model includes the following steps:

[0049] S1. Obtain training data;

[0050] S2. Obtain the knowledge map of synonyms;

[0051] S3. Perform word vector embedding processing on the synonym knowledge map to obtain a knowledge matrix;

[0052] S4. Determine the mask matrix according to the training data;

[0053] S5. Load the BERT model, wherein the BERT model includes multiple attention mechanism modules;

[0054] S6. For each attention mechanism module, input the training data, knowledge matrix and mask m...
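Step S6 breaks off before stating how the knowledge matrix and mask matrix are used inside each attention module, so the following Python sketch is only one plausible reading of S3, S4 and S6 under stated assumptions, not the applicant's method. It assumes that the knowledge matrix holds, for each token, the mean word vector of that token's synonyms in the synonym knowledge map; that the mask matrix is an additive attention mask blocking padding tokens; and that the knowledge matrix is added to the hidden states before a single-head scaled dot-product attention with identity Q/K/V projections. The function names `build_knowledge_matrix`, `build_mask_matrix` and `knowledge_attention`, the `[PAD]` token and the toy data are all hypothetical.

```python
import math
from typing import Dict, List

Matrix = List[List[float]]


def build_knowledge_matrix(tokens: List[str],
                           synonym_map: Dict[str, List[str]],
                           word_vectors: Dict[str, List[float]],
                           dim: int) -> Matrix:
    """Hypothetical S3: one row per token, holding the mean word vector of the
    token's synonyms in the synonym map (a zero row if it has none)."""
    rows = []
    for tok in tokens:
        syns = [s for s in synonym_map.get(tok, []) if s in word_vectors]
        if syns:
            rows.append([sum(word_vectors[s][d] for s in syns) / len(syns)
                         for d in range(dim)])
        else:
            rows.append([0.0] * dim)
    return rows


def build_mask_matrix(tokens: List[str], pad: str = "[PAD]") -> Matrix:
    """Hypothetical S4: additive attention mask; 0.0 lets query i attend to
    key j, -inf blocks keys that are padding tokens."""
    n = len(tokens)
    return [[float("-inf") if tokens[j] == pad else 0.0 for j in range(n)]
            for _ in range(n)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability; exp(-inf) evaluates to 0.0.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def knowledge_attention(hidden: Matrix, knowledge: Matrix, mask: Matrix) -> Matrix:
    """Hypothetical S6 for one attention module: single-head scaled dot-product
    attention with identity Q/K/V projections, where the knowledge matrix is
    added to the hidden states and the mask matrix is added to the scores."""
    dim = len(hidden[0])
    fused = [[h + k for h, k in zip(h_row, k_row)]
             for h_row, k_row in zip(hidden, knowledge)]
    scale = math.sqrt(dim)
    output = []
    for i, query in enumerate(fused):
        scores = [sum(q * k for q, k in zip(query, key)) / scale + mask[i][j]
                  for j, key in enumerate(fused)]
        weights = softmax(scores)
        output.append([sum(w * value[d] for w, value in zip(weights, fused))
                       for d in range(dim)])
    return output


# Toy usage with made-up data standing in for S1 (training data) and S2 (synonym map).
tokens = ["car", "fast", "[PAD]"]
synonym_map = {"car": ["automobile", "vehicle"], "fast": ["quick"]}
word_vectors = {"automobile": [1.0, 0.0], "vehicle": [0.0, 1.0], "quick": [0.5, 0.5]}
hidden = [[0.1, 0.2], [0.3, 0.1], [0.0, 0.0]]

knowledge = build_knowledge_matrix(tokens, synonym_map, word_vectors, dim=2)
mask = build_mask_matrix(tokens)
output = knowledge_attention(hidden, knowledge, mask)
print(knowledge)  # [[0.5, 0.5], [0.5, 0.5], [0.0, 0.0]]
```

Additive fusion is used in this sketch because it keeps the hidden-state shape unchanged, so the attention module's structure and parameter count stay the same. A real BERT layer would additionally apply learned Q/K/V projections, multiple heads, residual connections and layer normalization.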
