Behavior detection method

A behavior detection technology, applied in the fields of neural network learning methods, instruments, and biological neural network models, which addresses problems such as low interpretability and difficulty of detection.



Embodiment Construction

[0028] It will be readily appreciated that, based on the technical solution of the invention and without departing from its true spirit, those skilled in the art can devise a variety of structures and alternative implementations to arrive at other embodiments. Accordingly, the following detailed description and drawings merely illustrate the technical solution of the present invention and should not be regarded as the whole of the invention or as defining or limiting its technical solution.

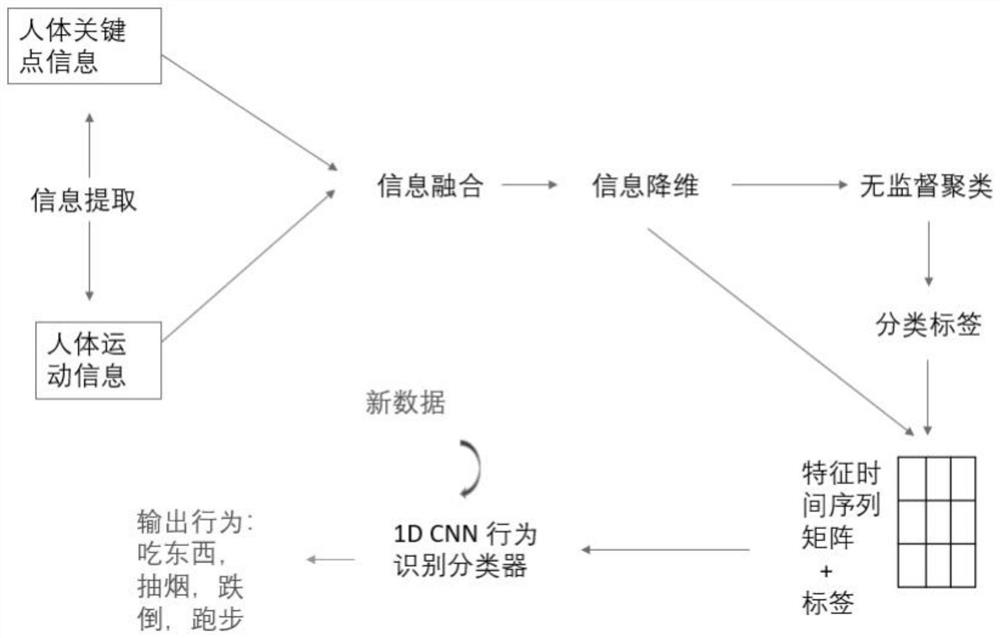

[0029] As shown in Figure 1, the present invention provides the following technical solution: a behavior detection method, comprising the following steps:

[0030] Step 1: extract the coordinate information of the human body feature points.

[0031] S1-1: preset a human posture acquisition device and establish a time series, so as to obtain a data set containing all human pose estimation information in temporal order;

[0032] S1-2: extract the keypoint information from the data set using the human pose estimation algorithms OpenPose and Dee..., respectively...
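Step 1 (S1-1 and S1-2) can be illustrated with a minimal Python sketch. It rests on several assumptions that the source does not state: the pose estimator is OpenPose run with its JSON output option, so each frame yields a `people` list whose entries carry a flat `pose_keypoints_2d` array of (x, y, confidence) triples; the BODY_25 model (25 keypoints) is used; each frame's output is paired with an integer frame index that defines the time order; and only the first detected person in a frame is kept. The function names `parse_frame` and `build_time_series` are hypothetical, and the truncated second algorithm ("Dee...") is not modeled.

```python
import json
from typing import List, Optional, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)

# Assumption: OpenPose BODY_25 model, i.e. 25 body keypoints per person.
NUM_KEYPOINTS = 25


def parse_frame(json_text: str, num_keypoints: int = NUM_KEYPOINTS) -> Optional[List[Keypoint]]:
    """S1-2 (sketch): return the keypoints of the first detected person in one
    OpenPose JSON frame, or None if nobody was detected in that frame."""
    data = json.loads(json_text)
    people = data.get("people", [])
    if not people:
        return None
    # Flat layout: x0, y0, c0, x1, y1, c1, ...
    flat = people[0]["pose_keypoints_2d"]
    if len(flat) != 3 * num_keypoints:
        raise ValueError(f"expected {3 * num_keypoints} values, got {len(flat)}")
    return [(flat[i], flat[i + 1], flat[i + 2]) for i in range(0, len(flat), 3)]


def build_time_series(
    frames: Sequence[Tuple[int, str]], num_keypoints: int = NUM_KEYPOINTS
) -> List[Optional[List[Keypoint]]]:
    """S1-1 (sketch): order (frame_index, json_text) pairs by frame index, then
    parse each frame, giving a time-ordered sequence of per-frame keypoints."""
    ordered = sorted(frames, key=lambda item: item[0])
    return [parse_frame(text, num_keypoints) for _, text in ordered]


if __name__ == "__main__":
    # Two synthetic frames supplied out of order: frame 0 has one person,
    # frame 1 has no detections.
    frame0 = json.dumps({"people": [{"pose_keypoints_2d": [1.0, 2.0, 0.9] * 25}]})
    frame1 = json.dumps({"people": []})
    series = build_time_series([(1, frame1), (0, frame0)])
    print(series[0][0], series[1])
```

Frames with no detected person are kept as `None` rather than dropped, so the sequence stays aligned with the original time axis; how the actual method handles missing detections or multiple people is not specified in the source.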

