End-to-end speech recognition method based on rotation position coding

A technology combining rotary position encoding with speech recognition, applied in the field of pattern recognition. It addresses the cumbersome implementation of matrix operations and offers a simple implementation, few parameters and good performance.
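This excerpt names rotary position encoding but does not give its formula. The following is a minimal sketch that assumes the method follows the standard rotary position embedding (RoPE) formulation from RoFormer with base 10000. In that formulation, each consecutive feature pair (x[2i], x[2i+1]) at position m is rotated by the angle m·θ_i, where θ_i = 10000^(-2i/d). The function names `rope` and `dot` and the base value are illustrative assumptions and are not taken from the patent.

```python
import math
from typing import List


def rope(x: List[float], pos: int, base: float = 10000.0) -> List[float]:
    """Rotate each pair (x[2i], x[2i+1]) by the angle pos * theta_i.

    Assumes the standard RoPE frequencies theta_i = base ** (-2i / d);
    the base of 10000 is an assumption, not a value from the patent.
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("feature dimension must be even")
    out = [0.0] * d
    for i in range(d // 2):
        theta = base ** (-2.0 * i / d)  # frequency of the i-th pair
        angle = pos * theta
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out[2 * i] = a * c - b * s      # first component of the 2-D rotation
        out[2 * i + 1] = a * s + b * c  # second component of the 2-D rotation
    return out


def dot(u: List[float], v: List[float]) -> float:
    """Plain dot product, standing in for an attention score q . k."""
    return sum(a * b for a, b in zip(u, v))


q = [0.1, 0.2, 0.3, 0.4]
k = [0.5, -0.1, 0.2, 0.7]
# Both pairs of positions have the same offset (3), so the scores match.
s1 = dot(rope(q, 5), rope(k, 2))
s2 = dot(rope(q, 13), rope(k, 10))
print(abs(s1 - s2) < 1e-9)  # True
```

Each pair is rotated by a position-dependent angle, so the dot product between a rotated query at position m and a rotated key at position n depends only on the offset n − m. As a result, relative position enters the attention score without any extra learned parameters. The sketch applies the rotation element-wise with cos/sin and does not multiply by an explicit block-diagonal rotation matrix.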

Specific Embodiment

[0068] 1. Data preparation:

[0069] The experiments use the Mandarin corpus AISHELL-1 and the English speech corpus LibriSpeech. The former contains 170 hours of labeled speech. The latter contains 970 hours of labeled speech plus an additional text-only corpus of 800M words for building language models.

[0070] 2. Data processing:

[0071] Extract 80-dimensional log Mel filter bank features with a frame length of 25 ms and a frame shift of 10 ms. Normalize the features per speaker so that each speaker's features have zero mean and unit variance. The AISHELL-1 dictionary contains 4231 labels, and the LibriSpeech dictionary contains 5000 labels generated by the byte pair encoding algorithm. Both vocabularies also include the padding symbol "PAD", the unknown symbol "UNK" and the end-of-sentence symbol "EOS".
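The preprocessing in [0071] can be sketched in plain Python as follows. This is a minimal illustration that rests on three assumptions. First, the sample rate is 16 kHz, so a 25 ms frame is 400 samples and a 10 ms shift is 160 samples. Second, per-speaker statistics are pooled over all of a speaker's utterances. Third, the special-symbol ids are PAD=0, UNK=1 and EOS=2. The function names (`frame_count`, `speaker_cmvn`, `build_vocab`, `encode`) and that id order are hypothetical. The Mel filter bank computation itself is omitted.

```python
import math
from collections import defaultdict
from typing import Dict, List

Frame = List[float]


def frame_count(num_samples: int, sample_rate: int = 16000,
                frame_ms: int = 25, shift_ms: int = 10) -> int:
    """Number of full frames; the 16 kHz sample rate is an assumption."""
    frame_len = sample_rate * frame_ms // 1000  # 400 samples at 16 kHz
    shift = sample_rate * shift_ms // 1000      # 160 samples at 16 kHz
    if num_samples < frame_len:
        return 0
    return 1 + (num_samples - frame_len) // shift


def speaker_cmvn(utts: Dict[str, List[Frame]], spk_of: Dict[str, str],
                 eps: float = 1e-8) -> Dict[str, List[Frame]]:
    """Normalize each speaker's features to zero mean and unit variance."""
    by_spk: Dict[str, List[Frame]] = defaultdict(list)
    for utt, frames in utts.items():
        by_spk[spk_of[utt]].extend(frames)  # pool all frames of a speaker
    stats = {}
    for spk, frames in by_spk.items():
        n, dim = len(frames), len(frames[0])
        mean = [sum(f[j] for f in frames) / n for j in range(dim)]
        var = [sum((f[j] - mean[j]) ** 2 for f in frames) / n for j in range(dim)]
        std = [math.sqrt(v) if v > eps else 1.0 for v in var]  # avoid /0
        stats[spk] = (mean, std)
    out = {}
    for utt, frames in utts.items():
        mean, std = stats[spk_of[utt]]
        out[utt] = [[(v - m) / s for v, m, s in zip(f, mean, std)] for f in frames]
    return out


SPECIALS = ["PAD", "UNK", "EOS"]  # hypothetical id order: PAD=0, UNK=1, EOS=2


def build_vocab(labels: List[str]) -> Dict[str, int]:
    """Map special symbols first, then each new label in order of appearance."""
    vocab = {sym: i for i, sym in enumerate(SPECIALS)}
    for lab in labels:
        if lab not in vocab:
            vocab[lab] = len(vocab)
    return vocab


def encode(tokens: List[str], vocab: Dict[str, int]) -> List[int]:
    """Map out-of-vocabulary tokens to UNK and append EOS."""
    unk = vocab["UNK"]
    return [vocab.get(t, unk) for t in tokens] + [vocab["EOS"]]
```

For example, one second of 16 kHz audio yields `frame_count(16000) == 98` frames. With `v = build_vocab(["ni", "hao"])`, `encode(["ni", "men"], v)` gives `[3, 1, 2]`: the unseen token maps to UNK and EOS is appended.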

[0072] 3. Build a network:

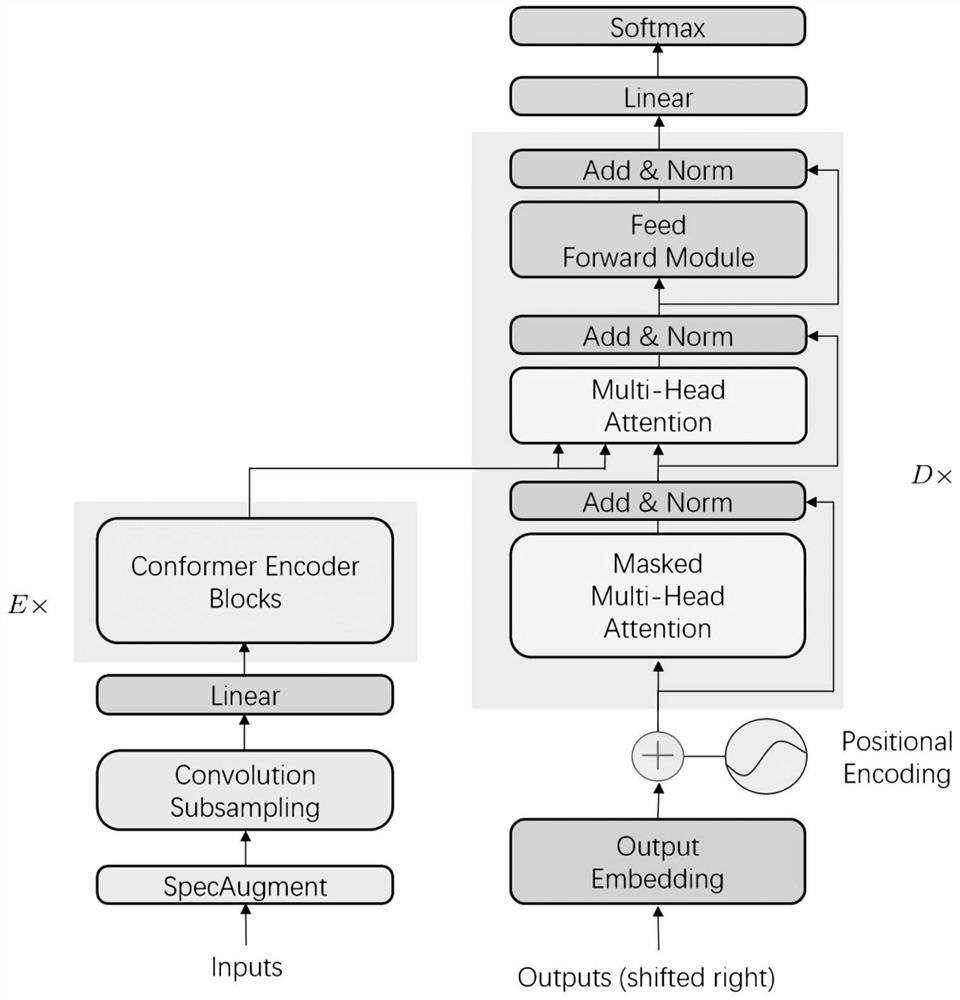

[0073] The model proposed by the present invention contains 12 enc...
