Individual emotion recognition method fusing expressions and postures

An emotion recognition technology that fuses expressions and postures, applied in the field of video analysis. It addresses the low accuracy of individual emotion recognition in public spaces, the difficulty of tuning parameters, and the need for manual feature selection. Its effects are reduced training time, strong adaptability, and accurate classification results.

Embodiment Construction

[0037] The present invention is described in further detail through the examples below. It must be pointed out that the following examples only further illustrate the present invention and cannot be interpreted as limiting its protection scope. Non-essential improvements and adjustments made to the present invention for specific implementation shall still fall within its protection scope.

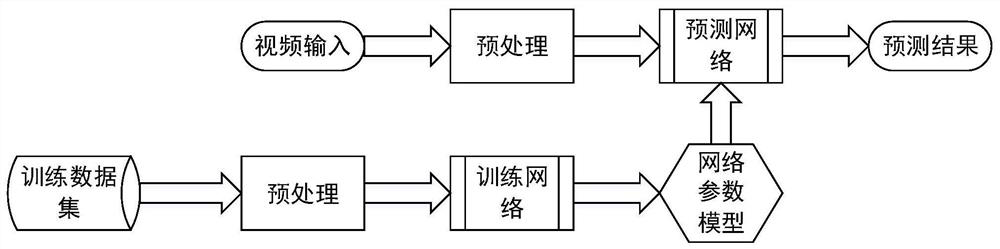

[0038] As shown in Figure 1, the individual emotion recognition method fusing expressions and postures specifically includes the following steps:

[0039] (1) Divide the video sequence data set into three individual emotion categories: negative, neutral, and positive. Split the categorized data set into a training set and a test set at a ratio of 5:5, and create the data labels.

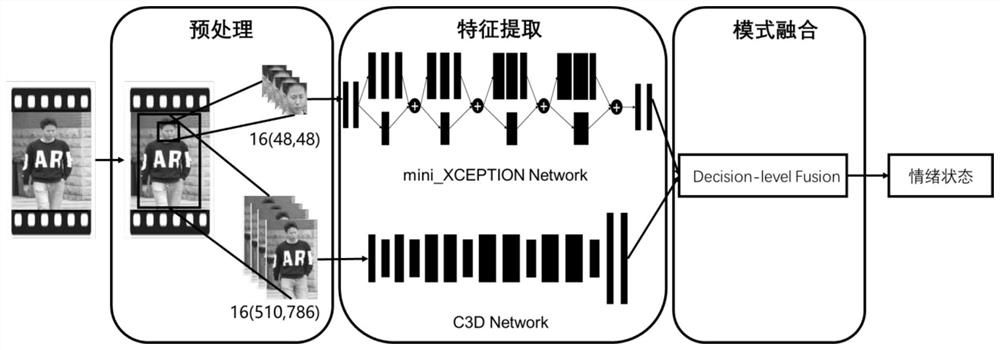

[0040] (2) Face detection is applied to the video sequences of each data set from step (1) to obtain face sequence...
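
The data preparation in step (1) can be illustrated with a minimal sketch. It assumes each video sequence is identified by a file name and carries one of the three labels. The function name `split_by_label`, the clip names, and the per-category (stratified) split are assumptions made for illustration; the source states only the three categories and the 5:5 ratio.

```python
import random
from collections import defaultdict

# The three emotion categories named in step (1).
LABELS = ("negative", "neutral", "positive")


def split_by_label(samples, train_fraction=0.5, seed=0):
    """Split (video_id, label) pairs into train and test sets.

    Hypothetical helper: the split is done per category so that both
    sets keep the same class balance. The source does not say whether
    the 5:5 split is stratified; that is an assumption here.
    """
    by_label = defaultdict(list)
    for video_id, label in samples:
        if label not in LABELS:
            raise ValueError(f"unknown label: {label!r}")
        by_label[label].append(video_id)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label in LABELS:
        ids = sorted(by_label[label])
        rng.shuffle(ids)
        cut = int(len(ids) * train_fraction)
        train += [(v, label) for v in ids[:cut]]
        test += [(v, label) for v in ids[cut:]]
    return train, test


# Made-up clip names, four per category.
samples = [(f"clip_{i:03d}.mp4", LABELS[i % 3]) for i in range(12)]
train, test = split_by_label(samples)
```

With `train_fraction=0.5` each category contributes half of its clips to each set, which matches the 5:5 ratio; the returned pairs double as the data labels.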
