Efficient robotic control based on input from remote client device

Technology relating to remote clients and robots, applied in the field of efficient robotic control based on input from remote client devices. It addresses constraints that prevent robots from operating more efficiently, with the effect of mitigating delays and saving network resources.



Embodiment Construction

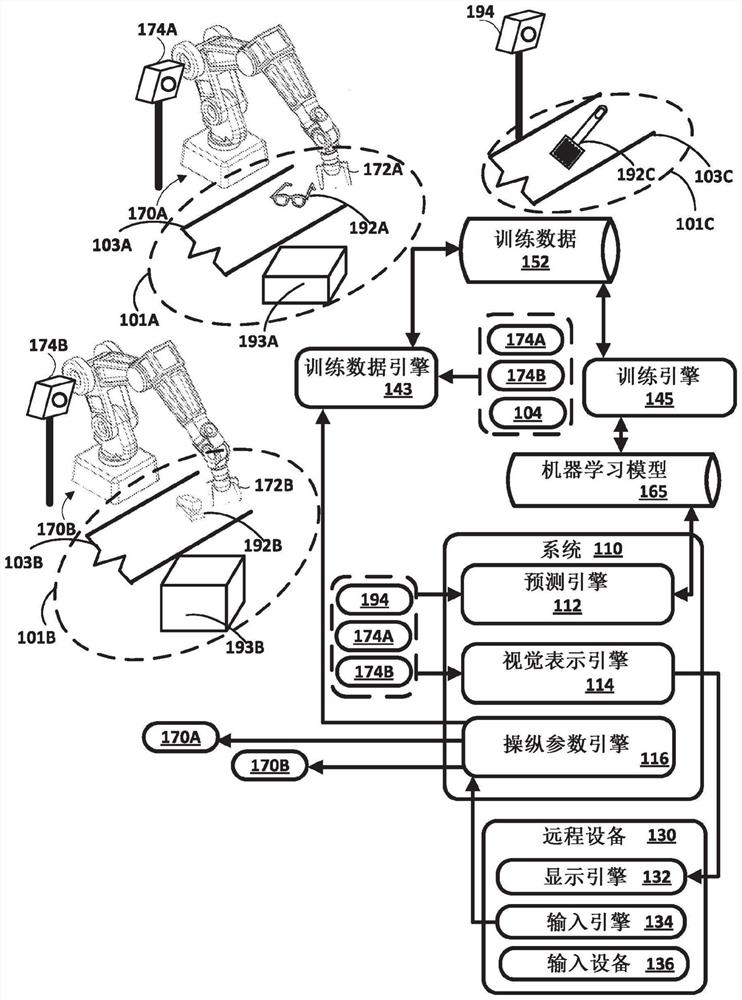

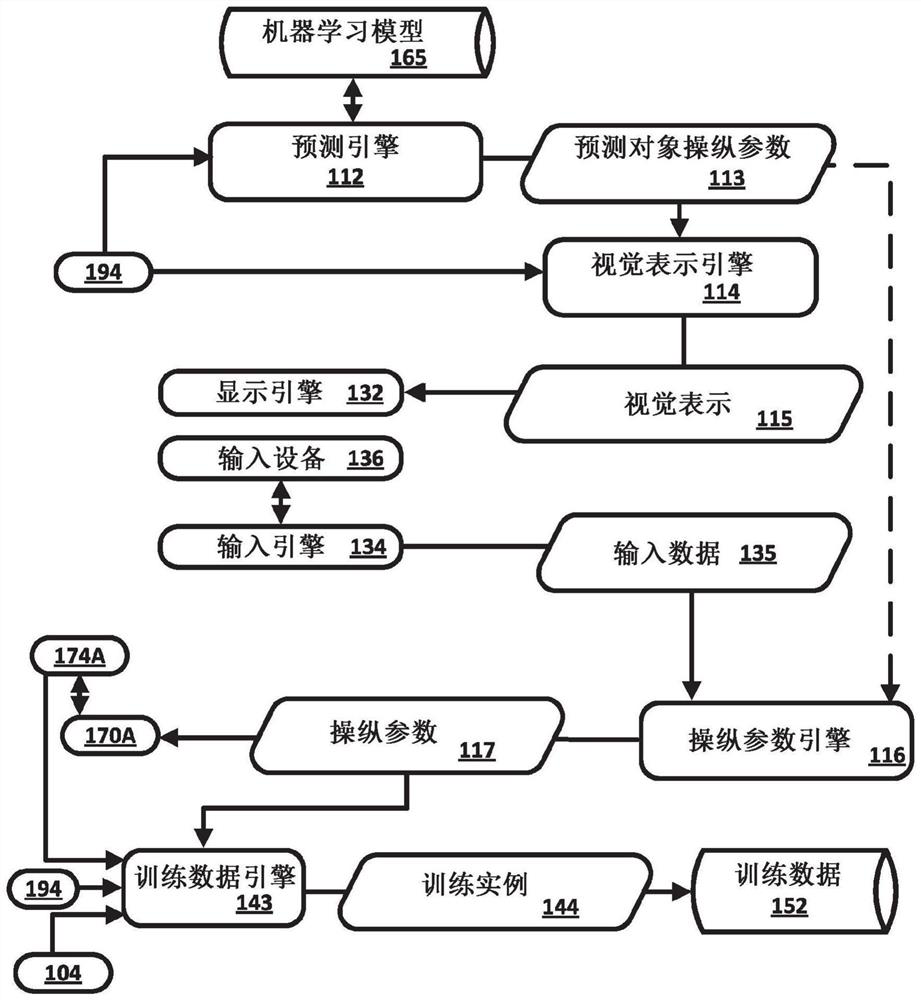

[0037] Figure 1A shows an example environment in which implementations described herein may be practiced. Figure 1A includes a first robot 170A and associated robot vision components 174A, a second robot 170B and associated robot vision components 174B, and additional vision components 194. Additional vision components 194 can be, for example, monocular vision cameras (e.g., generating 2D RGB images), stereo vision cameras (e.g., generating 2.5D RGB images), or laser scanners (e.g., generating 2.5D "point clouds"), and can be operably connected to one or more systems disclosed herein (e.g., system 110). Optionally, multiple additional vision components may be provided, and the vision data from each vision component utilized as described herein. Robot vision components 174A and 174B may be, for example, monocular vision cameras, stereo vision cameras, laser scanners, and/or other vision components, and vision data from them may be provided to and used by respective robots 170A and 17...
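The components named in paragraph [0037] can be pictured as a small data model. The sketch below is illustrative only: the class names, the `vision_sources` helper, and the specific camera type assigned to each component are assumptions made for the example, since the text lists the possible types without saying which one each component uses.

```python
from dataclasses import dataclass, field
from enum import Enum


class VisionKind(Enum):
    """Vision component types listed in paragraph [0037], with their output."""
    MONOCULAR = "2D RGB image"
    STEREO = "2.5D RGB image"
    LASER_SCANNER = "2.5D point cloud"


@dataclass(frozen=True)
class VisionComponent:
    ref: str          # reference numeral from the text, e.g. "194"
    kind: VisionKind  # assumed for illustration; the text allows any listed type


@dataclass
class Robot:
    ref: str                 # reference numeral, e.g. "170A"
    vision: VisionComponent  # the robot's associated vision component


@dataclass
class Environment:
    robots: list[Robot] = field(default_factory=list)
    additional_vision: list[VisionComponent] = field(default_factory=list)

    def vision_sources(self) -> list[str]:
        """Reference numerals of every vision component in the environment."""
        robot_refs = [robot.vision.ref for robot in self.robots]
        extra_refs = [component.ref for component in self.additional_vision]
        return robot_refs + extra_refs


def build_figure_1a() -> Environment:
    """Hypothetical instance of the Figure 1A environment."""
    # The kind given to each component below is an assumption, not from the text.
    return Environment(
        robots=[
            Robot("170A", VisionComponent("174A", VisionKind.MONOCULAR)),
            Robot("170B", VisionComponent("174B", VisionKind.STEREO)),
        ],
        additional_vision=[VisionComponent("194", VisionKind.LASER_SCANNER)],
    )
```

Calling `build_figure_1a().vision_sources()` lists the robot-mounted components first and the additional components after them, mirroring the order in which the paragraph introduces them.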

