Real-time special effect processing method in large-scale live video

A real-time special-effect processing technique for live video broadcasting. It addresses problems such as time-consuming face processing and missing coverage, and achieves a good viewing effect, smooth replacement, and a good user experience.

Embodiment Construction

[0015] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments. This embodiment is carried out on the basis of the technical solution of the present invention, and a detailed implementation and specific operating procedure are given, but the scope of protection of the present invention is not limited to the following embodiments.

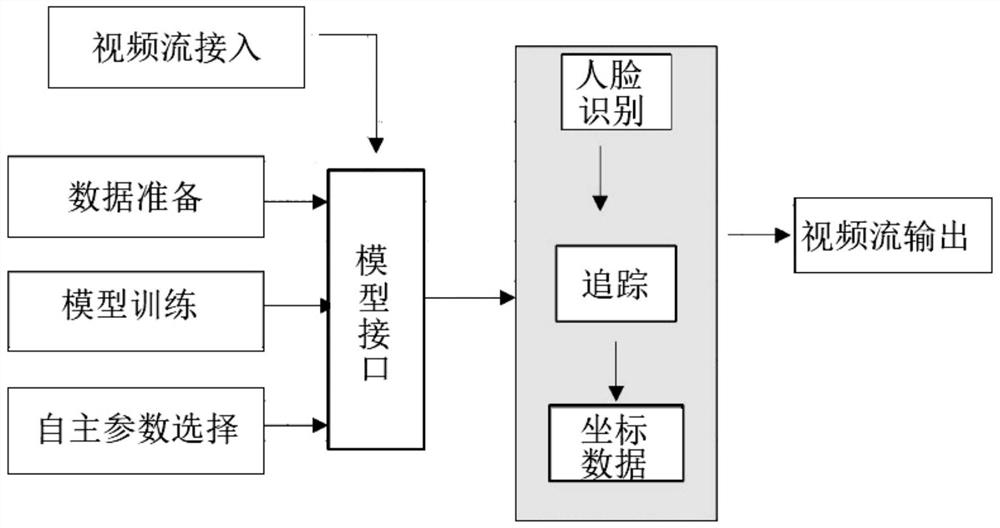

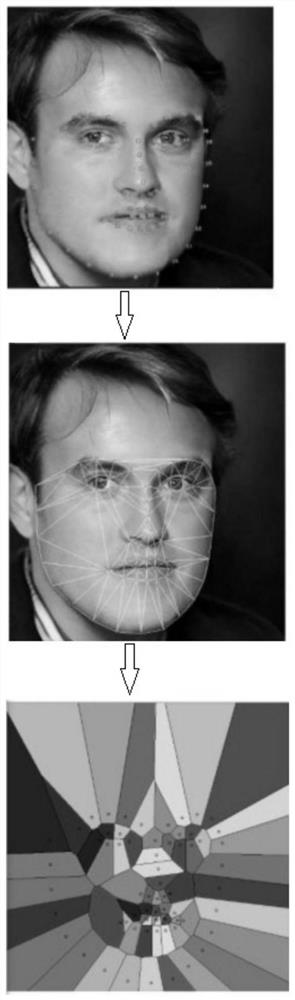

[0016] After the video stream is connected, a deep convolutional network is first used to obtain the face thumbnail. Unlike OpenCV and other models commonly used in the market, the lighter and faster dlib model is used for target recognition. Once a face is detected, a Kalman filter tracks it and outputs its coordinates. A blur model with adjustable effect then processes the face thumbnail according to those coordinates. A neural network with three convolution layers, pooling, and two convolution layers is established, in which one layer uses a (7*7) filter, with a step...
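The tracking and blurring steps described above can be illustrated with a minimal sketch. It is not the patented implementation: the face detector is left out (detections are assumed to arrive as center coordinates, or `None` when the detector misses a frame), the Kalman filter is a simple per-axis constant-velocity model, and a box blur stands in for the adjustable blur model. The names `AxisKalman`, `box_blur_region`, and `track_and_blur`, and the noise values `q` and `r`, are hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one coordinate (assumed model)."""
    pos: float
    vel: float = 0.0
    # Entries of the 2x2 state covariance P
    p00: float = 1.0
    p01: float = 0.0
    p10: float = 0.0
    p11: float = 1.0
    q: float = 1e-2  # process noise (illustrative value)
    r: float = 4.0   # measurement noise variance, pixels^2 (illustrative value)

    def predict(self, dt: float = 1.0) -> float:
        # x = F x with F = [[1, dt], [0, 1]]
        self.pos += dt * self.vel
        # P = F P F^T + q * I
        p00 = self.p00 + dt * (self.p01 + self.p10) + dt * dt * self.p11 + self.q
        p01 = self.p01 + dt * self.p11
        p10 = self.p10 + dt * self.p11
        p11 = self.p11 + self.q
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos

    def update(self, z: float) -> float:
        # Measurement observes position only: H = [1, 0]
        s = self.p00 + self.r
        k0, k1 = self.p00 / s, self.p10 / s
        y = z - self.pos
        self.pos += k0 * y
        self.vel += k1 * y
        # P = (I - K H) P, computed from the old entries
        p00 = (1 - k0) * self.p00
        p01 = (1 - k0) * self.p01
        p10 = self.p10 - k1 * self.p00
        p11 = self.p11 - k1 * self.p01
        self.p00, self.p01, self.p10, self.p11 = p00, p01, p10, p11
        return self.pos


def box_blur_region(img, x0, y0, x1, y1, radius):
    """Return a copy of a grayscale image (list of rows) with the
    rectangle [y0:y1, x0:x1] box-blurred; radius sets the blur strength."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(max(0, y0), min(h, y1)):
        for x in range(max(0, x0), min(w, x1)):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            vals = [img[j][i] for j in ys for i in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


def track_and_blur(frames, detections, half_size=1, radius=1):
    """Track the face center across frames and blur a box around it.
    Assumes the first frame has a detection; later frames may have None."""
    kx = AxisKalman(detections[0][0])
    ky = AxisKalman(detections[0][1])
    results = []
    for frame, det in zip(frames, detections):
        cx, cy = kx.predict(), ky.predict()
        if det is not None:  # correct the prediction when a face was detected
            cx, cy = kx.update(det[0]), ky.update(det[1])
        ix, iy = int(round(cx)), int(round(cy))
        blurred = box_blur_region(
            frame, ix - half_size, iy - half_size,
            ix + half_size + 1, iy + half_size + 1, radius)
        results.append(((cx, cy), blurred))
    return results
```

When the detector misses a frame, the sketch falls back on the filter's prediction, so the blurred box keeps following the face instead of disappearing. A production pipeline would more likely use an existing detector and an optimized blur on real image arrays.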
