Autonomous vision-based landing method for unmanned aerial vehicles based on a depth hybrid camera array

A hybrid camera array and depth camera technology, applied in the field of unmanned aerial vehicles, addresses three problems: the two-dimensional code image is too small to be matched reliably, poor attitude and position calibration degrades the accuracy of pose estimation, and existing methods cannot meet the requirements of high-precision UAV landing. The method improves visual positioning accuracy and thereby enables high-precision autonomous vision-based landing.


Examples

Embodiment 1

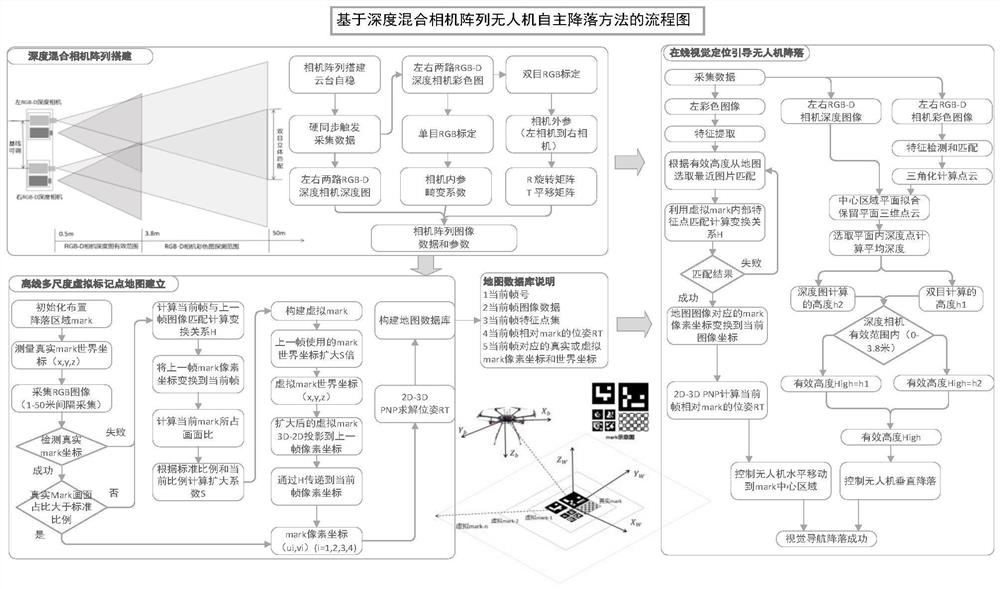

[0026] 1. Build a depth hybrid camera array

[0027] Build a depth hybrid camera array. In the present invention, two RGB-D depth cameras are combined into a camera array, and the left and right color cameras form a binocular (stereo) camera for stereo-matching distance measurement. Figure 1 is a schematic structural diagram of the entire depth hybrid camera array. The device provides two channels of active-light depth maps and two channels of color images (left and right). The selected depth cameras support hardware-triggered synchronous acquisition, which ensures that the captured images are synchronized. The whole device is mounted on a 3-axis self-stabilizing gimbal so that the cameras stay pointed vertically downward at the ground. The invention uses DJI Jingwei (Matrice) series UAVs as the flight platform, chosen for their high payload capacity and excellent flight performance. They adopt ...
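The stereo-matching distance measurement mentioned in this paragraph relies on the standard triangulation relation for a rectified stereo pair, Z = f * B / d. The following is a minimal sketch of that relation only, not the patent's implementation. The function names, the focal length, the baseline, and the disparity values are all hypothetical and chosen purely for illustration.

```python
def stereo_depth_m(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth Z (meters) of a point seen by a rectified stereo pair.

    Standard triangulation: Z = f * B / d, where f is the focal length in
    pixels, B the baseline between the two color cameras in meters, and d
    the disparity in pixels. All parameter values are assumptions supplied
    by the caller; none come from the patent text.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


def depth_error_m(depth_m: float, focal_px: float, baseline_m: float,
                  disparity_error_px: float) -> float:
    """First-order depth uncertainty caused by a disparity matching error.

    Differentiating Z = f * B / d gives |dZ| ~= Z**2 / (f * B) * |dd|,
    so the error grows with the square of the distance and shrinks as
    the baseline grows.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, 0.2 m baseline.
    z = stereo_depth_m(disparity_px=50.0, focal_px=1000.0, baseline_m=0.2)
    err = depth_error_m(z, focal_px=1000.0, baseline_m=0.2, disparity_error_px=0.5)
    print(f"depth: {z:.2f} m, approx. uncertainty: {err:.3f} m")
```

Because the uncertainty scales with the square of the distance, a stereo pair with a wide baseline complements the active-light depth maps at longer range, which is one plausible reason to combine both sources in a single array.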
