Lightweight 2D video-based facial expression driving method and system

This technology relates to facial expression driving methods, with applications in neural learning methods, character and pattern recognition, and image analysis. It addresses problems such as high production costs and expensive equipment, and achieves low resource usage, strong practicality, and simple data acquisition.


Embodiment 1

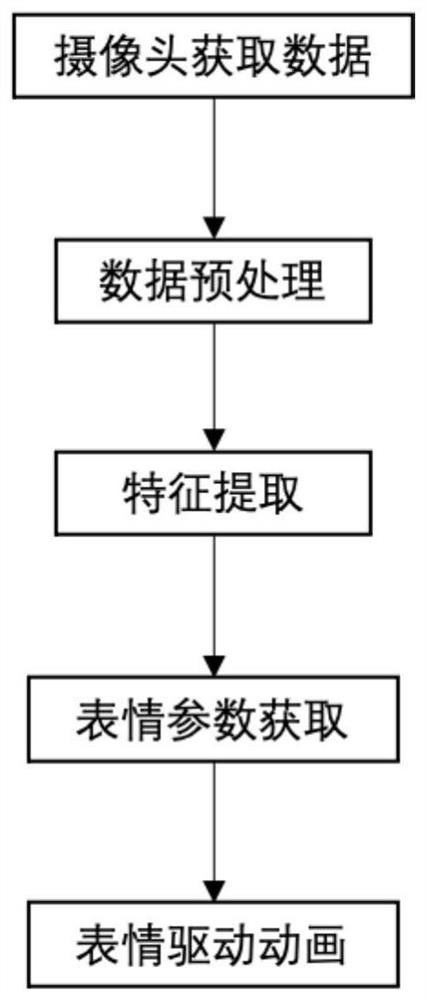

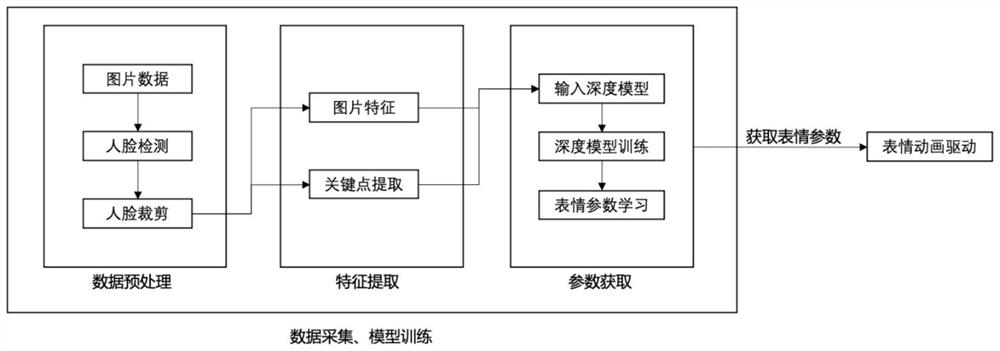

[0034] Referring to Figures 1-2, the present invention provides a lightweight facial expression driving method based on 2D video, comprising the following steps:

[0035] S1, data acquisition: acquire data through the camera;

[0036] S2, data preprocessing: preprocess the data acquired by the camera to obtain a cropped picture of the face region;

[0037] S3, feature extraction: obtain facial features and facial key point information from the face region picture cropped in S2;

[0038] S4, expression parameter acquisition: obtain facial expression parameters from the facial features and facial key point information, and drive the facial animation with those parameters.
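The data flow of steps S1 to S4 can be sketched as a small pipeline. This is only an illustrative skeleton under stated assumptions: the class name `ExpressionPipeline`, the stage names `preprocess`, `extract` and `regress`, and the choice to skip frames in which no face is found are all hypothetical and are not taken from the patent. Each stage is passed in as a callable, so no particular detector or network is implied.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, Iterator, List, Sequence, Tuple

Frame = Any  # one camera image; the concrete type depends on the capture library
Keypoints = Sequence[Tuple[float, float]]


@dataclass
class ExpressionPipeline:
    """Chains steps S2-S4; every stage is injected, so no model is assumed."""

    # S2: frame -> cropped face picture, or None when no face is found (assumption)
    preprocess: Callable[[Frame], Any]
    # S3: face picture -> (facial features, facial key points)
    extract: Callable[[Any], Tuple[Sequence[float], Keypoints]]
    # S4: (features, key points) -> expression parameters
    regress: Callable[[Sequence[float], Keypoints], List[float]]

    def run(self, frames: Iterable[Frame]) -> Iterator[List[float]]:
        """S1 supplies `frames`; yield one parameter vector per frame with a face."""
        for frame in frames:
            face = self.preprocess(frame)
            if face is None:  # assumed behaviour: skip frames without a face
                continue
            features, keypoints = self.extract(face)
            yield self.regress(features, keypoints)
```

In a real system the three callables would wrap the face detector, the feature network and the parameter regressor; the vectors yielded by `run` would then be handed to whatever animates the face.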

[0039] Further, the specific method of data preprocessing in S2 is: the picture or video data frame I_frame acquired by the camera is processed; the MTCNN algorithm is used to detect faces in the picture or video and returns relevant information such as the position of the face and ...
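Once a detector has returned a face position, cropping the face region is a matter of slicing the frame. The sketch below does not call MTCNN; it assumes the position arrives as an `(x, y, width, height)` pixel box and that a small margin around the box is desirable. Both the box layout and the `margin` parameter are assumptions made for illustration, as are the function names.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels; an assumed layout


def expand_and_clamp(box: Box, img_w: int, img_h: int, margin: float = 0.1) -> Box:
    """Grow the box by `margin` of its size on each side, clamped to the image."""
    x, y, w, h = box
    dx, dy = int(w * margin), int(h * margin)
    x0, y0 = max(0, x - dx), max(0, y - dy)
    x1, y1 = min(img_w, x + w + dx), min(img_h, y + h + dy)
    return x0, y0, x1 - x0, y1 - y0


def crop_face(image: Sequence[Sequence[int]], box: Box, margin: float = 0.1) -> List[List[int]]:
    """Cut the face region out of a row-major image given as a list of pixel rows."""
    img_h, img_w = len(image), len(image[0])
    x, y, w, h = expand_and_clamp(box, img_w, img_h, margin)
    return [list(row[x:x + w]) for row in image[y:y + h]]
```

Clamping keeps the crop valid when the face sits near the frame edge, at the cost of a crop that is no longer centred on the face.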

Embodiment 2

[0051] The present embodiment provides a lightweight facial expression driving system based on 2D video, including a camera, a data preprocessing module, a feature extraction module and an expression parameter acquisition module, wherein:

[0052] The camera is used to acquire picture or video data; an ordinary RGB camera is used.

[0053] The data preprocessing module preprocesses the data acquired by the camera to obtain a cropped picture of the face region;

[0054] The feature extraction module obtains facial features and facial key point information from the face region picture produced by the data preprocessing module (step S2 of Embodiment 1);

[0055] The expression parameter acquisition module obtains expression parameters from the facial features and facial key point information, and drives the facial animation with those parameters.
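The text does not say how the expression parameters are mapped onto the animated face. One common convention, assumed here purely for illustration, treats them as blendshape weights: each weight scales a per-vertex offset that is added to a neutral mesh. The function name `apply_expression` and the mesh layout (lists of `[x, y, z]` vertices) are hypothetical.

```python
from typing import List, Sequence

Vertex = List[float]  # [x, y, z]


def apply_expression(neutral: Sequence[Vertex],
                     deltas: Sequence[Sequence[Vertex]],
                     weights: Sequence[float]) -> List[Vertex]:
    """Return neutral + sum_i weights[i] * deltas[i], computed per vertex."""
    if len(deltas) != len(weights):
        raise ValueError("one weight is required per blendshape")
    posed = [list(v) for v in neutral]  # copy so the neutral mesh is untouched
    for weight, delta in zip(weights, deltas):
        for vertex, offset in zip(posed, delta):
            for axis in range(3):
                vertex[axis] += weight * offset[axis]
    return posed
```

Under this assumption, driving the animation amounts to calling `apply_expression` once per frame with the newest parameter vector.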

[0056] The present invention extracts facial features and key point information from pictures, learns expression-related parameters through a deep neural network, a...
