Nutrition management method and system based on deep learning food image recognition model

An image recognition and deep learning technology applied to the processing of meal food image data. It addresses problems such as poor operability, measurement errors, and the inability to reflect dietary intake over long periods, and it supports clinical cohort research.

Examples

Embodiment 5

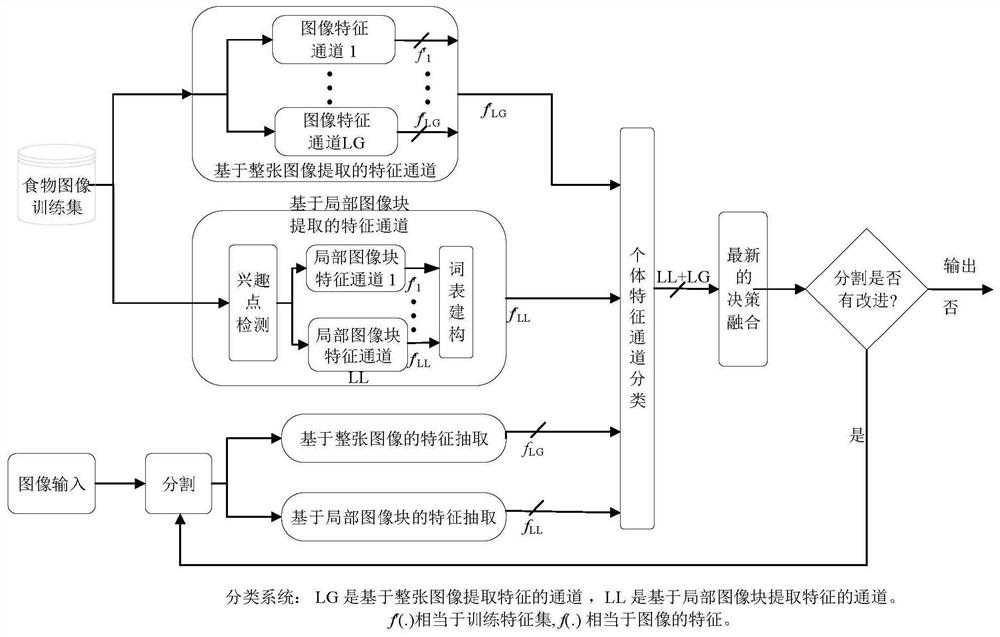

[0066] Embodiment 5: An important part of the present invention is the coding of the food image segmentation system, which this embodiment illustrates further.

[0067] Step 501: The segmentation method divides the image into masks by detecting regions of interest (ROIs). The food image is input as a matrix and binarized, and each pixel is represented either by 3 values (red, green, blue) or by 1 value (black or white).
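
Step 501 can be illustrated with a minimal pure-Python sketch: an RGB pixel matrix is reduced to one gray value per pixel, thresholded into a 0/1 mask, and the foreground's bounding box is taken as a simple region of interest. The luma weights, the threshold of 128, the assumption that food is darker than its background, and the helper names `binarize` and `roi_bbox` are all illustrative choices, not details given in the patent.

```python
from typing import List, Optional, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]   # image as a matrix of (red, green, blue) pixels
Mask = List[List[int]]    # binary mask: 1 = foreground, 0 = background


def to_gray(pixel: RGB) -> float:
    # Assumed ITU-R BT.601 luma weights; the patent does not specify a conversion.
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b


def binarize(image: Image, threshold: float = 128.0) -> Mask:
    # Assumption: food pixels are darker than a light plate or tablecloth.
    return [[1 if to_gray(p) < threshold else 0 for p in row] for row in image]


def roi_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    # Bounding box (row_min, col_min, row_max, col_max) of the foreground, or None.
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)
```

In a real system the threshold would more likely be chosen adaptively (for example with Otsu's method), and each connected foreground region would become its own mask.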

[0068] Step 502: Extract global and local features and extract the contours of the food image, detecting the contours without establishing any hierarchical relationship among them. The contour information is stored using a compressing approximation method; for example, an upright rectangular contour is stored with only 4 points.
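
The wording of Step 502 closely matches OpenCV's `cv2.findContours` used with `cv2.RETR_LIST` (all contours, no hierarchy) and `cv2.CHAIN_APPROX_SIMPLE` (horizontal, vertical and diagonal runs compressed to their end points). That the patent actually uses OpenCV is an assumption. To stay self-contained, the sketch below reimplements only the compression idea on an already-traced, ordered, closed contour; `rectangle_contour` and `compress_chain` are hypothetical helpers, and the start point and orientation may differ from OpenCV's output.

```python
from typing import List, Tuple

Point = Tuple[int, int]  # (x, y)


def rectangle_contour(x0: int, y0: int, x1: int, y1: int) -> List[Point]:
    # Every boundary pixel of an upright rectangle, traced clockwise from (x0, y0).
    pts = [(x, y0) for x in range(x0, x1 + 1)]             # top edge, left to right
    pts += [(x1, y) for y in range(y0 + 1, y1 + 1)]        # right edge, downwards
    pts += [(x, y1) for x in range(x1 - 1, x0 - 1, -1)]    # bottom edge, right to left
    pts += [(x0, y) for y in range(y1 - 1, y0, -1)]        # left edge, upwards
    return pts


def compress_chain(contour: List[Point]) -> List[Point]:
    # Keep a point only where the step direction changes, so straight
    # horizontal, vertical or diagonal runs collapse to their end points.
    n = len(contour)
    if n < 3:
        return list(contour)
    kept = []
    for i in range(n):
        prev, cur, nxt = contour[i - 1], contour[i], contour[(i + 1) % n]
        d_in = (cur[0] - prev[0], cur[1] - prev[1])
        d_out = (nxt[0] - cur[0], nxt[1] - cur[1])
        if d_in != d_out:
            kept.append(cur)
    return kept
```

For a 4 x 3 pixel rectangle, the 10 traced boundary points compress to its 4 corners, which is the "stored with 4 points" behavior the step describes.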

[0069] Step 503: Pass the output masks to the classifier and obtain its feedback. The classifier learns the food image category labels in the data set and feeds back a label for each mask, which yields the final mask. The returned image of the food image segmentation is the mask...
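
One way to picture the mask-to-classifier feedback loop is the sketch below: each candidate mask is applied to the image, the masked image is handed to a classifier, and only masks that receive a confident label are kept as final masks. The classifier interface, the `min_score` cut-off, and the color-based `toy_classifier` are hypothetical stand-ins for the trained food classifier, which the patent does not specify.

```python
from typing import Callable, List, Tuple

RGB = Tuple[int, int, int]
Image = List[List[RGB]]
Mask = List[List[int]]
# Hypothetical interface: (masked image, mask) -> (category label, confidence)
Classifier = Callable[[Image, Mask], Tuple[str, float]]


def apply_mask(image: Image, mask: Mask) -> Image:
    # Keep pixels inside the mask and black out everything else.
    return [[p if m else (0, 0, 0) for p, m in zip(irow, mrow)]
            for irow, mrow in zip(image, mask)]


def label_masks(image: Image, masks: List[Mask], classify: Classifier,
                min_score: float = 0.5) -> List[Tuple[str, Mask]]:
    # Send every candidate mask to the classifier; keep confidently labeled ones.
    final = []
    for mask in masks:
        label, score = classify(apply_mask(image, mask), mask)
        if score >= min_score:
            final.append((label, mask))
    return final


def toy_classifier(masked: Image, mask: Mask) -> Tuple[str, float]:
    # Placeholder for a trained model: labels a region by its mean red vs. green.
    pixels = [p for prow, mrow in zip(masked, mask) for p, m in zip(prow, mrow) if m]
    if not pixels:
        return "none", 0.0
    r = sum(p[0] for p in pixels) / len(pixels)
    g = sum(p[1] for p in pixels) / len(pixels)
    return ("red_food" if r > g else "green_food"), 0.9
```

In practice `toy_classifier` would be replaced by the network trained on the labeled food image data set, and its predictions could also be used to refine the masks themselves.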

Embodiment 6

[0070] Embodiment 6: This embodiment proposes a meal image segmentation model, built through the following steps:

[0071] 601. Build a convolutional neural network with a focusing (attention) mechanism for image segmentation. The mechanism lets the network concentrate on key regions and improves its ability to extract discriminative semantic features from the image.
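
A focusing mechanism of this kind can be sketched as spatial attention. Each pixel receives a weight in (0, 1), computed from its features, and every channel is rescaled by that weight, so strongly activated regions dominate the output. This is a deliberately simplified, parameter-free illustration, loosely in the spirit of CBAM-style spatial attention. It is an assumption, not the network described in the patent, which would learn the weighting with convolutional layers.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def spatial_attention(fmap: FeatureMap) -> Tuple[FeatureMap, List[List[float]]]:
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    # Per-pixel average over channels, squashed to (0, 1) as the attention weight.
    weights = [[sigmoid(sum(fmap[k][i][j] for k in range(c)) / c)
                for j in range(w)] for i in range(h)]
    # Rescale every channel by the same per-pixel weight.
    out = [[[fmap[k][i][j] * weights[i][j] for j in range(w)]
            for i in range(h)] for k in range(c)]
    return out, weights
```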

[0072] 602. The weighting mechanism is introduced into the field of image recognition, and a pixel-level weighting mechanism for food images based on DenseNet is proposed. In this DenseNet, each layer receives additional input from all preceding layers and passes its own feature maps to all subsequent layers. The food image DenseNet uses this cascaded (concatenation-based) connection pattern, in which each layer receives prior information from every earlier layer. This improves the network's ability to extract discriminative semantic features and thereby improves recognition accuracy.
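
The dense connectivity described in 602 can be shown without a deep learning framework. Each layer sees the concatenation of the block input and every earlier layer's output, and it appends `growth_rate` new channels, so a block of L layers on k0 input channels ends with k0 + L x growth_rate channels. The toy layer below is hypothetical; in an actual DenseNet it would be batch normalization, ReLU and convolution.

```python
from typing import Callable, List

FeatureMap = List[List[float]]  # list of channels, each a flattened pixel vector
Layer = Callable[[FeatureMap], FeatureMap]


def make_toy_layer(growth_rate: int, scale: float) -> Layer:
    # Hypothetical stand-in for BN-ReLU-Conv: averages all input channels
    # pixel-wise and emits `growth_rate` scaled copies as new channels.
    def layer(x: FeatureMap) -> FeatureMap:
        n = len(x[0])
        mean = [sum(ch[i] for ch in x) / len(x) for i in range(n)]
        return [[scale * (j + 1) * v for v in mean] for j in range(growth_rate)]
    return layer


def dense_block(x: FeatureMap, layers: List[Layer]) -> FeatureMap:
    features = list(x)
    for layer in layers:
        new = layer(features)       # input = all previous feature maps
        features = features + new   # concatenate along the channel axis
    return features
```

Because the block input is never overwritten, the earliest features remain available to every later layer, which is the "prior information from previous layers" the paragraph refers to.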

[0073] 603. Use the image segmentation mechanism to complete the deep learning and output the ...

Embodiment 7

[0074] Embodiment 7: This embodiment proposes a workflow for an image recognition system, comprising the following steps:

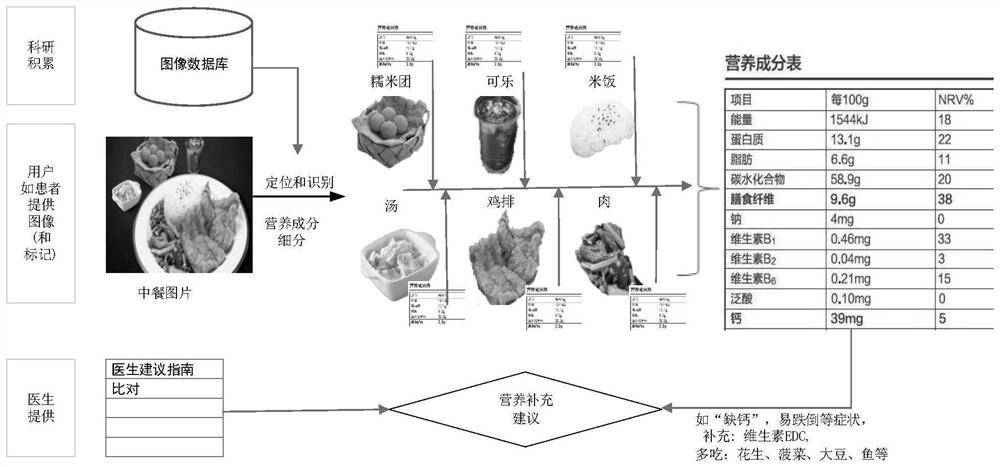

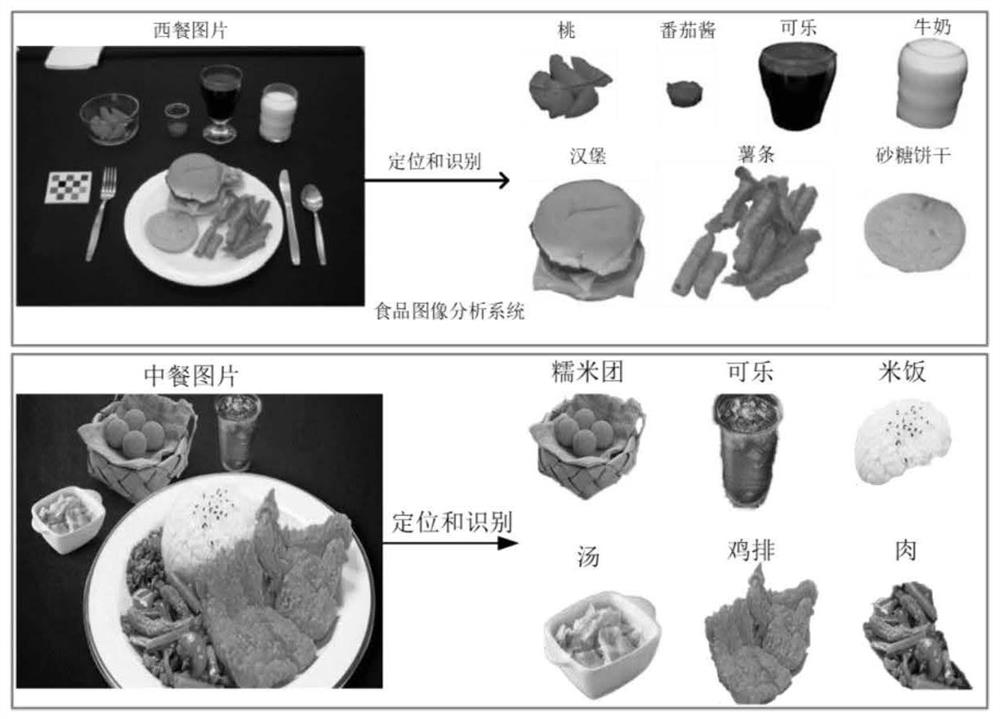

[0075] 701. Capture images of the food with a mobile phone camera or a similar device. Use the data model to obtain data such as food type, volume, weight, and processing (cooking) method, and feed the obtained data into the learning model to further optimize the algorithm.

[0076] 702. Using the server and other data sets, determine whether energy intake and the proportions of energy-yielding nutrients and other nutrients fall within appropriate ranges. Finally, feed the analysis results back to the user and give corresponding dietary suggestions.
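
The range check in 702 can be sketched as follows. Macronutrient grams are converted to energy with the general Atwater factors (4 kcal/g for protein and carbohydrate, 9 kcal/g for fat), each nutrient's share of total energy is compared with a target range, and out-of-range shares become simple suggestions. The `TARGET_RANGES` values are illustrative placeholders, not figures from the patent, and should be replaced by the applicable dietary reference intakes. The function names and message wording are likewise hypothetical.

```python
from typing import Dict, List, Tuple

# General Atwater factors, kcal per gram.
ATWATER = {"protein": 4.0, "fat": 9.0, "carbohydrate": 4.0}

# Illustrative target ranges as fractions of total energy (assumed values).
TARGET_RANGES = {"protein": (0.10, 0.15), "fat": (0.20, 0.30),
                 "carbohydrate": (0.50, 0.65)}

Report = Dict[str, Tuple[float, str]]


def assess_meal(grams: Dict[str, float],
                ranges: Dict[str, Tuple[float, float]] = TARGET_RANGES
                ) -> Tuple[float, Report]:
    energy = sum(ATWATER[n] * grams.get(n, 0.0) for n in ATWATER)
    report: Report = {}
    for n, (lo, hi) in ranges.items():
        share = ATWATER[n] * grams.get(n, 0.0) / energy if energy else 0.0
        status = "low" if share < lo else "high" if share > hi else "ok"
        report[n] = (share, status)
    return energy, report


def suggestions(report: Report) -> List[str]:
    # Turn out-of-range shares into short, user-facing hints.
    tips = []
    for n, (share, status) in report.items():
        if status == "high":
            tips.append(f"Consider less {n} ({share:.0%} of energy).")
        elif status == "low":
            tips.append(f"Consider more {n} ({share:.0%} of energy).")
    return tips
```

For a meal of 20 g protein, 12 g fat and 70 g carbohydrate, the total is 468 kcal and protein supplies about 17% of energy. That share lies above the placeholder 10 to 15% range, so the only suggestion concerns protein.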

[0077] 703. Image segmentation: the proportion of each food in the whole meal is calculated as the ratio of the pixels occupied by that food in the picture to the pixels occupied by all foods. By linking to a relevant database (such as the China Food Composition Table), the nutrients contained in each food ar...
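
The pixel-ratio calculation in 703 can be sketched on a segmentation label map in which 0 is background and each positive integer identifies one food. Each food's pixel count is divided by the total number of food pixels. The sketch then assumes a known total meal weight (`meal_weight_g`, a hypothetical input) and per-100 g composition values (placeholders, not entries from the China Food Composition Table) to estimate nutrients. Treating pixel share as mass share ignores depth and density differences and is a simplifying assumption.

```python
from collections import Counter
from typing import Dict, List

LabelMap = List[List[int]]            # 0 = background, >0 = food label
Composition = Dict[int, Dict[str, float]]  # label -> nutrient -> amount per 100 g


def food_proportions(label_map: LabelMap) -> Dict[int, float]:
    # Share of each food = its pixels / pixels occupied by all foods.
    counts = Counter(v for row in label_map for v in row if v != 0)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


def estimate_nutrients(proportions: Dict[int, float], meal_weight_g: float,
                       per_100g: Composition) -> Dict[int, Dict[str, float]]:
    # Assumes pixel share approximates mass share (ignores depth and density).
    result = {}
    for label, share in proportions.items():
        grams = share * meal_weight_g
        result[label] = {k: v * grams / 100.0 for k, v in per_100g[label].items()}
    return result
```

With the label map `[[0, 1, 1, 1], [2, 2, 0, 0], [1, 0, 0, 0]]`, food 1 covers 4 of the 6 food pixels and food 2 covers 2, so a 300 g meal would be split into roughly 200 g and 100 g.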
